Monitoring a Batch Job

In batch processing mode, data is processed periodically in batches according to a job-level scheduling plan. This mode suits scenarios with low real-time requirements. A batch job is a pipeline of one or more nodes that is scheduled as a whole. It cannot run indefinitely: it must finish within a finite period of time.

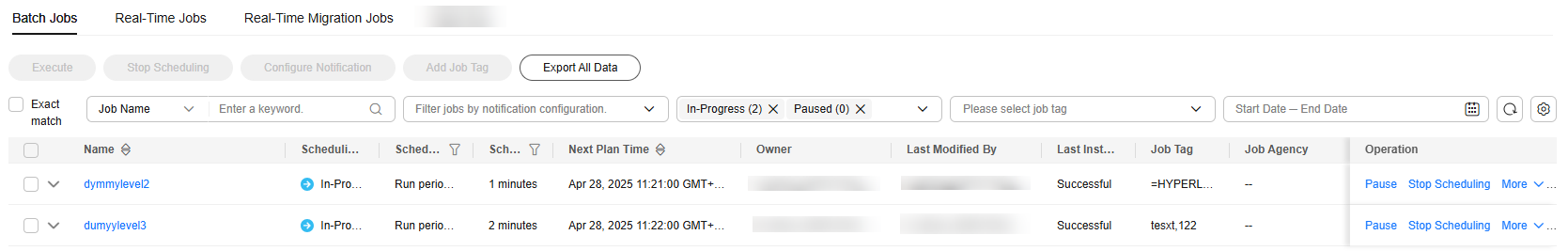

Choose Monitor Job and click the Batch Job Monitoring tab to view the scheduling status, scheduling period, start time, and SQL complexity of batch jobs. You can also perform the operations listed in Table 1.

|

Operation |

Description |

|---|---|

|

Filtering jobs by Job Name, Owner, CDM Job, Job ID, Scheduling Identity, or Node Type |

N/A |

|

Filtering jobs by whether notifications have been configured, scheduling status, job tag, or next plan time |

You can filter jobs for which no notification has been configured by notification type (such as exception or failure) so that you can set alarm notifications in batches. |

|

Performing operations on jobs in a batch |

Select multiple jobs and perform operations on them. |

|

Viewing job instance status |

Click In the Operation column of the last instance, you can view the run logs of the instance and rerun the instance.

NOTE:

|

|

Viewing node information of the job |

Click a job name. On the displayed page, click the job node and view its associated jobs/scripts and monitoring information. Click a job name. On the displayed page, view the job instance. For details, see Batch Job Monitoring: Job Instances. |

|

Job scheduling operations |

You can run, pause, recover, stop, and configure scheduling. For details, see Batch Job Monitoring: Scheduling a Job.

NOTE:

When stopping or pausing a job, you can select Apply immediately or Apply next time. Apply immediately: The current job instance will be stopped immediately, and no new job instance will be generated. Apply next time: The current job instance will continue to run, but no new job instance will be generated. |

|

Configuring notifications |

In the Operation column of a job, choose . In the displayed dialog box, configure notification parameters. Table 1 describes the notification parameters. |

|

Monitoring instances |

In the Operation column of a job, choose to view the running records of all instances of the job. |

|

Configuring scheduling information |

In the Operation column of a job, click More and select Scheduling Setup. On the displayed job development page, you can view and configure the job scheduling information.

NOTE:

You cannot configure scheduling information for a running job. |

|

PatchData |

In the Operation column of a job, choose . For details, see Batch Job Monitoring: PatchData. This function is available only for jobs that are scheduled periodically. |

|

Adding a job tag |

In the Operation column of a job, choose . For details, see Batch Job Monitoring: Adding a Job Tag. |

|

Viewing a job dependency graph |

In the Operation column of a job, click More and select View Job Dependency Graph. For details, see Batch Job Monitoring: Viewing a Job Dependency Graph. |

|

Exporting all data |

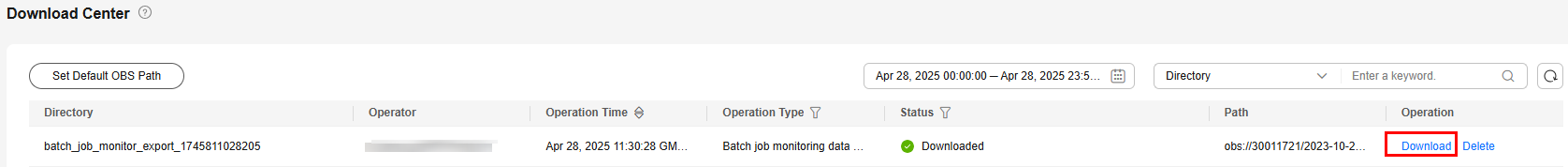

Click Export All Data. After the export is complete, you can go to the Download Center page and view and download the exported file.

Figure 2 Viewing export history

If the default storage path is not configured, you can set a storage path and select Set as default OBS path in the Export to OBS dialog box. A maximum of 30 MB data can be exported. If there are more than 30 MB data, the data will be automatically truncated. The exported job instances map job nodes. You cannot export data by selecting job names. Instead, you can select the data to be exported by setting filter criteria. |

Click a job name. On the displayed page, view the job parameters, properties, and instances.

Click a node of a job to view the node properties, script content, and node monitoring information.

In addition, you can view the current job version and scheduling status; schedule, stop, or pause the job; and configure PatchData, notifications, or the update frequency for the job.

Batch Job Monitoring: Job Instances

- Log in to the DataArts Studio console by following the instructions in Accessing the DataArts Studio Instance Console.

- On the DataArts Studio console, locate a workspace and click DataArts Factory.

- In the left navigation pane of DataArts Factory, choose .

- Click the Batch Job Monitoring tab.

- Click a job name. On the displayed page, click the Job Instances tab to view job instances. You can perform the following operations:

- Select Show Instances to Be Generated and set the time range to filter job instances that are expected to be generated in the future.

A maximum of 100 instances expected to be generated can be displayed.

- Freeze or unfreeze job instances that are expected to be generated in the future. You can click Freeze or Unfreeze above the job instance list, or click More in the Operation column and select Freeze or Unfreeze.

Freeze: You can freeze only job instances that have not been generated or are in the waiting state.

You cannot freeze job instances that are already frozen.

A frozen job instance is treated as failed, and its downstream jobs are suspended, executed, or canceled based on the failure policy configured for the job.

Frozen job instances that have not been generated can be viewed on the Batch Job Monitoring page or filtered by status on the Monitor Instance page.

Unfreeze: You can unfreeze only job instances that are frozen and have not been scheduled.

- Perform other operations on job instances, such as stopping, rerunning, retrying, or continuing instances, making instances succeed, viewing waiting instances, and viewing the job configuration. When viewing waiting job instances, you can click Remove Dependency in the Operation column to remove the dependency on an upstream instance.

- If jobs require manual confirmation before execution, they are in the waiting confirmation state on the Batch Jobs page. After you click Execute, they enter the waiting execution state.

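The freeze and unfreeze rules above reduce to simple state checks. The following Python sketch models them for illustration only: the `State` values, the `scheduled` flag, and the helper functions are hypothetical names, not a DataArts Studio API, and the console enforces these rules itself.

```python
from dataclasses import dataclass
from enum import Enum


class State(Enum):
    # Hypothetical instance states, used only for this illustration.
    NOT_GENERATED = "not generated"
    WAITING = "waiting"
    RUNNING = "running"
    SUCCEEDED = "succeeded"
    FAILED = "failed"
    FROZEN = "frozen"


@dataclass
class Instance:
    name: str
    state: State
    scheduled: bool = False  # True once the instance has been scheduled


def can_freeze(inst: Instance) -> bool:
    # Only instances that are not yet generated or are waiting can be frozen,
    # so an instance that is already frozen cannot be frozen again.
    return inst.state in (State.NOT_GENERATED, State.WAITING)


def can_unfreeze(inst: Instance) -> bool:
    # Only frozen instances that have not been scheduled can be unfrozen.
    return inst.state is State.FROZEN and not inst.scheduled


def counts_as_failed(inst: Instance) -> bool:
    # A frozen instance is treated as failed, so the job's failure policy
    # decides whether downstream jobs are suspended, executed, or canceled.
    return inst.state in (State.FAILED, State.FROZEN)


# Usage
pending = Instance("daily_load_20230101", State.WAITING)
print(can_freeze(pending))  # True
pending.state = State.FROZEN
print(can_freeze(pending), can_unfreeze(pending))  # False True
```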

Batch Job Monitoring: Scheduling a Job

After developing a job, you can manage its scheduling on the Monitor Job page: you can run it, pause it, restore it, or stop its scheduling.

- Log in to the DataArts Studio console by following the instructions in Accessing the DataArts Studio Instance Console.

- On the DataArts Studio console, locate a workspace and click DataArts Factory.

- In the left navigation pane of DataArts Factory, choose .

- Click the Batch Job Monitoring tab.

You can filter batch processing jobs by scheduling type or scheduling frequency.

- In the Operation column of the job, click Execute, Pause, Restore, or Stop Scheduling.

If a dependent job has been configured for a batch job, you can select either Start Current Job Only or Start Current and Depended Jobs when submitting the batch job. For details about how to configure dependent jobs, see Setting Up Scheduling for a Job Using the Batch Processing Mode.

If the job is on a baseline task link or other jobs depend on it, the system displays a confirmation dialog box when you pause or stop its scheduling. For a job on a baseline task link, the dialog box indicates that a baseline is associated.
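The pause and stop behavior described above, together with the Apply immediately and Apply next time options in Table 1, can be summarized as a small decision model. The Python sketch below is illustrative only: `Job`, `ApplyMode`, `needs_confirmation`, and `stop_or_pause` are hypothetical names, not a DataArts Studio API, and the console applies these rules for you.

```python
from dataclasses import dataclass
from enum import Enum


class ApplyMode(Enum):
    IMMEDIATELY = "Apply immediately"
    NEXT_TIME = "Apply next time"


@dataclass
class Job:
    name: str
    has_running_instance: bool
    on_baseline: bool = False    # job is on a baseline task link
    downstream_jobs: tuple = ()  # names of jobs that depend on this job


def needs_confirmation(job: Job) -> bool:
    # A dialog box is displayed when pausing or stopping a job that is on a
    # baseline task link or that other jobs depend on.
    return job.on_baseline or len(job.downstream_jobs) > 0


def stop_or_pause(job: Job, mode: ApplyMode) -> dict:
    # Neither mode generates new instances; they differ only in what happens
    # to the instance that is currently running.
    if not job.has_running_instance:
        current = "none"
    elif mode is ApplyMode.IMMEDIATELY:
        current = "stopped"
    else:
        current = "continues"
    return {
        "current_instance": current,
        "new_instances": False,
        "confirm_dialog": needs_confirmation(job),
    }


# Usage
job = Job("ods_orders", has_running_instance=True, downstream_jobs=("dws_orders",))
print(stop_or_pause(job, ApplyMode.NEXT_TIME))
# {'current_instance': 'continues', 'new_instances': False, 'confirm_dialog': True}
```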

Batch Job Monitoring: PatchData

PatchData runs a job's scheduling task for a past period of time to generate the series of instances that would have been generated in that period. This series of instances is called PatchData. PatchData can be used to fix historical job instances that contain data errors or to build job records for debugging programs.

Only the periodically scheduled jobs support PatchData. For details about the execution records of PatchData, see Monitoring PatchData.

Do not modify the job configuration while PatchData is in progress. Otherwise, the job instances generated during PatchData will be affected.

- Log in to the DataArts Studio console by following the instructions in Accessing the DataArts Studio Instance Console.

- On the DataArts Studio console, locate a workspace and click DataArts Factory.

- In the left navigation pane of DataArts Factory, choose .

- Click the Batch Job Monitoring tab.

- In the Operation column of the job, choose .

- Configure PatchData parameters based on Table 2.

Figure 5 PatchData parameters

Table 2 PatchData parameters

| Parameter | Description |
|---|---|
| PatchData Name | Name of the automatically generated PatchData task. The value can be modified. |
| Job Name | Name of the job that requires PatchData. |
| Scheduling Time Type | Type of the PatchData date range. The value can be Consecutive date range or Discrete date ranges. |
| Date | If Scheduling Time Type is set to Consecutive date range: Period of time for which PatchData is required. If the date is later than the current time, the current time is displayed by default. NOTE: PatchData can be configured for a job multiple times. However, avoid configuring PatchData for the same date more than once to prevent duplicated or out-of-order data. If you select Patch data in reverse order of date, dates are patched from the latest to the earliest, but the PatchData instances within each day still run in chronological order. NOTE: This option applies only when the data of each day is independent of the data of other days. The PatchData job ignores the dependencies between the job instances created before this date. If Scheduling Time Type is set to Discrete date ranges: Click Add Date Range to add multiple discrete date ranges for PatchData, and click Delete to delete a date range. You must set at least one date range. NOTE: DataArts Studio does not support concurrent running of PatchData instances and periodic job instances of underlying services (such as CDM and DLI). To prevent PatchData instances from affecting periodic job instances and to avoid exceptions, ensure that they do not run at the same time. |
| Run PatchData Tasks Periodically | Yes: PatchData tasks are executed based on the configured period. The first value is a number, and the second value is the period unit, for example, hours, days, weeks, or months. NOTE: If you set a period, PatchData tasks are scheduled based on that period, whether the job itself is scheduled every few minutes, hours, or days. For example, if you want to patch data from 00:00 on Jan 1, 2023 to 00:00 on Feb 1, 2023 for an hourly job that starts at 01:00 every day, and set the PatchData period to two days, PatchData tasks are scheduled at 00:00 on Jan 1, 2023, 00:00 on Jan 3, 2023, 00:00 on Jan 5, 2023, and so on. If the period is in months and the first scheduling date falls on the last day of a month, PatchData tasks are scheduled on the last day of each month. No: PatchData tasks are not executed periodically. Instead, the system executes them based on the existing rule. |
| Cycle | PatchData cycle. This parameter is required only when the job is scheduled by hour or minute and Scheduling Time Type is set to Discrete date ranges. You can click Viewing Scheduling Details to view the execution time of the task instances in the current time segment. |
| Parallel Instances | Number of instances executed at the same time. A maximum of five instances can be executed at the same time. If Patch Data by Day is set to Yes, this is the number of concurrent job instances on the same day. If Patch Data by Day is set to No, this is the number of concurrent job instances in the scheduling cycle. NOTE: Set this parameter based on site requirements. For example, CDM job instances cannot patch data concurrently, so for a CDM job this parameter can only be set to 1. |
| Upstream or Downstream Job | Select the upstream and downstream jobs that require PatchData. Downstream jobs are jobs that depend on the current job. The job dependency graph is displayed. For details about the operations on the job dependency graph, see Batch Job Monitoring: Viewing a Job Dependency Graph. NOTE: If Run PatchData Tasks Periodically is set to Yes, you can select only upstream or downstream jobs that have the same scheduling period as the current job. |
| Patch Data by Day | Yes: Data is patched by day. PatchData instances of a job on the same day can run concurrently, but instances on different days cannot. For example, instances scheduled at 05:00 and 06:00 on the same day can run concurrently, but an instance scheduled on the 1st of a month and one scheduled on the 2nd cannot. No: Data is not patched by day. |
| Stop Upon Failure | This parameter is mandatory if Patch Data by Day is set to Yes. Yes: If a daily PatchData task fails, subsequent PatchData tasks stop immediately. No: If a daily PatchData task fails, subsequent PatchData tasks continue. NOTE: If data is patched by day and a PatchData task fails on a day, no PatchData task is executed on the next day. This function is supported only by daily PatchData tasks, not by hourly PatchData tasks. |
| Priority | PatchData priority. You can set the priority of workspace-level PatchData jobs in Default Configuration. NOTE: The priority configured here takes precedence over the workspace-level PatchData priority. Currently, priorities can be set only for DLI SQL operators. |
| Ignore OBS Listening | Yes: OBS listening is ignored in PatchData scenarios. No: The system listens to the OBS path in PatchData scenarios. |
| Set Running Period | Whether a running period can be set for the PatchData task. |

- Click OK. The system starts to perform PatchData and the PatchData Monitoring page is displayed.
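To make the PatchData timing rules more concrete, the following Python sketch computes trigger times for Run PatchData Tasks Periodically (including the month-end rule) and groups instances the way Patch Data by Day, Parallel Instances, and Stop Upon Failure are described. It is a simulation under stated assumptions, not DataArts Studio code: all function names are hypothetical, the end time is treated as inclusive, a failed day still runs its remaining waves before stopping, and instances in a wave run one after another here rather than concurrently.

```python
import calendar
from datetime import datetime, timedelta

MAX_PARALLEL = 5  # documented upper limit of Parallel Instances


def _add_months(start: datetime, months: int, month_end: bool) -> datetime:
    # Shift start by whole months; clamp the day to the target month's length,
    # or pin it to the last day when the first run fell on a month end.
    total = start.month - 1 + months
    year, month = start.year + total // 12, total % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    day = last_day if month_end else min(start.day, last_day)
    return start.replace(year=year, month=month, day=day)


def periodic_patch_times(start: datetime, end: datetime, value: int, unit: str) -> list:
    """Trigger times from start to end (assumed inclusive), one every `value` `unit`."""
    if value < 1:
        raise ValueError("period value must be at least 1")
    times = []
    if unit == "months":
        month_end = start.day == calendar.monthrange(start.year, start.month)[1]
        k = 0
        while (t := _add_months(start, k * value, month_end)) <= end:
            times.append(t)
            k += 1
        return times
    steps = {"hours": timedelta(hours=value), "days": timedelta(days=value),
             "weeks": timedelta(weeks=value)}
    if unit not in steps:
        raise ValueError(f"unsupported unit: {unit}")
    t = start
    while t <= end:
        times.append(t)
        t += steps[unit]
    return times


def plan_by_day(times: list, parallel: int, reverse_dates: bool = False) -> list:
    """Group times by day; each day becomes waves of at most `parallel` instances."""
    if not 1 <= parallel <= MAX_PARALLEL:
        raise ValueError(f"parallel must be between 1 and {MAX_PARALLEL}")
    days = {}
    for t in sorted(times):  # instances within a day stay in chronological order
        days.setdefault(t.date(), []).append(t)
    return [(day, [days[day][i:i + parallel] for i in range(0, len(days[day]), parallel)])
            for day in sorted(days, reverse=reverse_dates)]


def run_plan(plan: list, run_instance, stop_on_failure: bool = True) -> list:
    """Run days one after another and return the days that were started."""
    started = []
    for day, waves in plan:
        started.append(day)
        results = [run_instance(t) for wave in waves for t in wave]
        if stop_on_failure and not all(results):
            break  # Stop Upon Failure = Yes: later days are not run
    return started


# Usage: the two-day period example from the Run PatchData Tasks Periodically note
times = periodic_patch_times(datetime(2023, 1, 1), datetime(2023, 2, 1), 2, "days")
print([t.strftime("%Y-%m-%d %H:%M") for t in times[:3]])
# ['2023-01-01 00:00', '2023-01-03 00:00', '2023-01-05 00:00']
```

The month computation always offsets from the first date rather than from the previous result, so a series that starts on January 30 returns to day 30 in March instead of drifting to day 28 after February.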

Batch Job Monitoring: Adding a Job Tag

Tags can be added to jobs to facilitate job instance filtering.

- Log in to the DataArts Studio console by following the instructions in Accessing the DataArts Studio Instance Console.

- On the DataArts Studio console, locate a workspace and click DataArts Factory.

- In the left navigation pane of DataArts Factory, choose .

- Click the Batch Job Monitoring tab.

- In the Operation column of a job, choose .

- In the Add Job Tag dialog box displayed, set the job tag parameters.

Figure 6 Parameters for adding a job tag

- Click OK.

Batch Job Monitoring: Viewing a Job Dependency Graph

In the job dependency graph, you can view the dependencies between jobs.

- Log in to the DataArts Studio console by following the instructions in Accessing the DataArts Studio Instance Console.

- On the DataArts Studio console, locate a workspace and click DataArts Factory.

- In the left navigation pane of DataArts Factory, choose .

- Click the Batch Job Monitoring tab.

- In the Operation column of a job, choose .

- On the displayed Job Dependency page, perform any of the following operations:

- In the upper right corner, select Display complete dependency graphs, Display the current job and its upstream and downstream jobs, or Display the current job and its directly connected jobs.

- Enter a node name in the search box in the upper right corner to search for the node. The matching node is highlighted.

- Click Download to download the job dependency file.

- Scroll the mouse wheel to zoom in or out on the dependency graph.

- Drag a blank area to move the view and see the complete dependency graph.

- When you hover the cursor over a job node, the node is marked green, its upstream jobs are marked blue, and its downstream jobs are marked orange.

Figure 7 Marking upstream and downstream job nodes of a node

- Right-click a job node to view the job, copy the job name, and collapse upstream or downstream jobs.

Figure 8 Job node operations
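The three display options and the hover colors can be read as graph traversals. The Python sketch below models them under assumptions that this page does not confirm: Display complete dependency graphs is taken to mean every job connected to the current job in either direction, Display the current job and its upstream and downstream jobs is taken to mean all transitive ancestors and descendants, and the hover colors are applied to directly connected jobs only. All function names and job names are hypothetical.

```python
from collections import deque


def adjacency(edges):
    """Build upstream and downstream maps from (upstream, downstream) job pairs."""
    up, down = {}, {}
    for u, d in edges:
        down.setdefault(u, set()).add(d)
        up.setdefault(d, set()).add(u)
    return up, down


def reachable(start, adj):
    """Breadth-first search from start over adj; start itself is excluded."""
    seen, queue = set(), deque([start])
    while queue:
        for nxt in adj.get(queue.popleft(), ()):
            if nxt != start and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def view(job, edges, mode):
    """Jobs shown for one of the three display options (assumed semantics)."""
    up, down = adjacency(edges)
    if mode == "direct":      # current job and its directly connected jobs
        related = up.get(job, set()) | down.get(job, set())
    elif mode == "lineage":   # current job and all its upstream and downstream jobs
        related = reachable(job, up) | reachable(job, down)
    elif mode == "complete":  # every job connected to the current job
        undirected = {n: up.get(n, set()) | down.get(n, set())
                      for n in set(up) | set(down)}
        related = reachable(job, undirected)
    else:
        raise ValueError(f"unknown mode: {mode}")
    return related | {job}


def hover_colors(job, edges):
    """Colors applied when hovering over job (direct neighbors only, assumed)."""
    up, down = adjacency(edges)
    colors = {n: "blue" for n in up.get(job, set())}
    colors.update({n: "orange" for n in down.get(job, set())})
    colors[job] = "green"
    return colors


# Usage
edges = [("ods_a", "dwd_a"), ("dwd_a", "dws_a"), ("ods_b", "dws_a"),
         ("dws_a", "ads_report"), ("x", "y")]
print(sorted(view("dwd_a", edges, "lineage")))
# ['ads_report', 'dwd_a', 'dws_a', 'ods_a']
```

Under these assumptions, `ods_b` appears in the complete graph of `dwd_a` but not in its lineage view, because it feeds `dws_a` without being an ancestor of `dwd_a`.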

You can also view the node monitoring information of a job on the job details page.

- Log in to the DataArts Studio console by following the instructions in Accessing the DataArts Studio Instance Console.

- On the DataArts Studio console, locate a workspace and click DataArts Factory.

- In the left navigation pane of DataArts Factory, choose .

- Click the Batch Job Monitoring tab.

- Click a job name and then a node to view monitoring information of the node.
