Configuring an Offline Processing Migration Job

When creating an offline processing migration job, you can select the source and destination data and configure parameters to periodically synchronize all or incremental data of a table, database/table partition, or entire database from the source to a destination table.

This section describes the common configurations of an offline processing migration job. The configuration varies depending on the data source. For details, see Configuring Source Job Parameters and Configuring Destination Job Parameters.

Notes and Constraints

Pay attention to the precision of the field types at the source and destination. If the maximum value of the field type at the destination is less than the maximum value at the source (or the minimum value of the field type at the destination is greater than the minimum value of the field type at the source, or the precision is lower than the precision at the source), the write may fail or the precision may be truncated.

Prerequisites

You have completed the self-check. For details, see Performing a Check Before Using an Offline Job.

Procedure

- Create an offline processing migration job by referring to Creating an Offline Processing Migration Job.

- Configure types.

Figure 1 Configuring types

- Set the source connection type and destination connection type. For details about supported data sources, see Overview.

- Set Migration Job Type.

- Migration Type: The default value is Offline and cannot be changed.

- Migration Scenario: Select one from Single table, Database/Table partition, and Entire DB Migration. For details about the supported data sources, see Overview.

- Configure Network Resource Configuration.

- Select a created source data connection (DataArts Migration was selected for the connection). If no connection is available, create one by referring to Creating a DataArts Studio Data Connection.

Check whether the source and the resource group can communicate with each other. If they cannot, modify the network settings as prompted.

- Select a resource group. For details about how to create a cluster, see Creating a CDM Cluster.

If multiple clusters are selected, the system randomly delivers tasks. Therefore, you are advised to select clusters of the same version. Otherwise, the job may fail due to inconsistent cluster versions.

- Select a created destination data connection (DataArts Migration was selected for the connection). If no connection is available, create one by referring to Creating a DataArts Studio Data Connection.

Check whether the data connection is available. If the data connection is unavailable, change another one as prompted.

- Select a created source data connection (DataArts Migration was selected for the connection). If no connection is available, create one by referring to Creating a DataArts Studio Data Connection.

- Configure source parameters.

The configuration varies depending on the data source and synchronization scenario. After selecting a source connection, configure job parameters by referring to Configuring Source Job Parameters.

Table 1 Required source job parameters Scenario

Required Source Parameters

Field Mapping

Single table

- Basic parameters

- Advanced attributes

Supported

Database/Table partition

- Database/Table mode, exact match or regular expression match

- Advanced attributes

Supported

Entire DB migration

- Database tables to be migrated

- Advanced attributes

Not supported

- Configure destination parameters.

The configuration varies depending on the data source and synchronization scenario. After selecting a destination connection, configure job parameters by referring to Configuring Destination Job Parameters.

Pay attention to the precision of the field types at the source and destination. If the maximum value of the field type at the destination is less than the maximum value at the source (or the minimum value of the field type at the destination is greater than the minimum value of the field type at the source, or the precision is lower than the precision at the source), the write may fail or the precision may be truncated.

Table 2 Required destination job parameters Scenario

Required Destination Parameters

Field Mapping

Single table

- Basic parameters

- Advanced attributes

Supported

Database/Table partition

- Basic parameters

- Advanced attributes

Supported

Entire DB migration

Database and table matching policy

Not supported

- Configuring field mapping.

After configuring source and destination parameters, you need to configure the mapping between source and destination columns. After the field mapping is configured, the job writes source fields to fields of the corresponding types at the destination based on the field mapping.

- Field mapping configuration: Set the field mapping mode and batch field mapping rule.

- Field Mapping Mode

- Same name: Fields with the same name are mapped. Fields with the same column name are automatically mapped.

- Same row: Fields with different names but in the same row of the source and destination table are mapped. Source and destination fields in the same row are automatically mapped.

- Batch Field Mapping: This parameter is not displayed when Use SQL Statement in the source configuration is set to Yes.

Enter field mappings, with one field mapping in each row. Place fields from the source table to the left of the equal sign (=) and fields from the destination table to the right of the equal sign (=), for example, reader_column=writer_column.

Click View and Edit to set the batch field mapping.

- Field Mapping Mode

- Field mapping: batch conversion, field adding, and row moving

- Sensitive information detection: Check whether the source data contains sensitive information. If there is sensitive information, data cannot be migrated, and you need to modify the information as prompted.

- Set Converter: Convert source fields in batches.

Select the target fields and click Set Converter. In the displayed dialog box, create a converter as prompted.

- Delete Field: This parameter is unavailable when Use SQL Statement in the source configuration is set to Yes. Select the target fields and click Delete Field.

You can view the deleted fields in Removed Fields in the Add Field dialog box.

- Add Field: This parameter is unavailable when Use SQL Statement in the source configuration is set to Yes. You can add new fields or removed fields to the source and destination configurations.

The following types of fields are supported:

- Functions, such as now() and curdate() for MySQL, and now() and transaction_timestamp() for PostgreSQL

- Functions with keywords, for example, to_char(current_date,'yyyy-MM-dd') for PostgreSQL

- Fixed values, such as 123 and '123' (both indicate string 123)

- Variable values, for example, ${workDate} (workDate must be defined in the job variable.)

- Fixed variables for JDBC, such as DB_NAME_SRC (source database name), TABLE_NAME_SRC (source table name), and DATASOURCE_NAME_SRC (data source name)

- as statements are supported, such as '123' as test and now() as curTime.

- Move rows: This function is unavailable when Use SQL Statement in the source configuration is set to Yes. Drag the row of a field and move the row up or down.

- View converters: (Optional) CDM can convert fields. Click

and then click Create Converter. For details about how to use converters, see Configuring Field Converters.

and then click Create Converter. For details about how to use converters, see Configuring Field Converters. - Search for and match destination fields: Click

in the Operation column. In the displayed dialog box, search for a field by keyword or click a field directly.

in the Operation column. In the displayed dialog box, search for a field by keyword or click a field directly. - Delete fields: You can delete the default fields of the table. To delete a field, click

in the Operation column. Removed fields can be found in Removed Fields in the Add Field dialog box.

in the Operation column. Removed fields can be found in Removed Fields in the Add Field dialog box. - Example Field Mapping: This parameter is not displayed when Use SQL Statement in the source configuration is set to Yes. You can view the example mapping of source and destination fields.

- If files are migrated between FTP, SFTP, OBS, and HDFS, and the migration source's File Format is set to Binary, files will be directly transferred, free from field mapping.

- Field mapping configuration is not required for entire DB migration.

- During the migration, the field types at the source and destination may not match. As a result, dirty data is generated, and data cannot be written to the destination. For details about the number of dirty data records allowed during the migration, see the next step.

- If a field at the source is not mapped to a field at the destination, the field at the source will not be synchronized to the destination.

- In other scenarios, CDM automatically maps fields of the source table and the destination table. You need to check whether the mapping and time format are correct. For example, check whether the source field type can be converted into the destination field type.

- If the field mapping is incorrect, you can drag fields to adjust the mapping. (This function is supported when Use SQL Statement in the source configuration is set to No.)

- If you cannot obtain all columns by obtaining sample values on the field mapping page, you can click

to add a custom field or click

to add a custom field or click  in the Operation column to create a field converter to ensure that all required data can be imported to the destination.

in the Operation column to create a field converter to ensure that all required data can be imported to the destination. - In the Field Mapping area, you can click

to add custom constants, variables, and expressions.

to add custom constants, variables, and expressions. - The column name is available only the Extract first row as columns parameter is set to Yes during the migration of a CSV file from OBS.

- When SQLServer is the destination, fields of the timestamp type cannot be written. You must change their type (for example, to datetime) so that they can be written.

- If the data is imported to DWS, you need to select the distribution columns in the destination fields. You are advised to select the distribution columns according to the following principles:

- Use the primary key as the distribution column.

- If multiple data segments are combined as primary keys, specify all primary keys as the distribution column.

- In the scenario where no primary key is available, if no distribution column is selected, DWS uses the first column as the distribution column by default. As a result, data skew risks exist.

- Field mapping configuration: Set the field mapping mode and batch field mapping rule.

- Configuring task properties.

You can configure the parameters listed in Table 3 for data synchronization.

Table 3 Task parameters Parameter

Description

Example Value

Expected Max. Concurrent Threads

The maximum number of concurrent threads to read data from the source or write data to the destination. Due to the sharding policy, the actual number of concurrent threads may be smaller than the value of this parameter.

The maximum number of concurrent extractors for a cluster varies depending on the CDM cluster flavor. You are advised to set the maximum number of concurrent extractors to twice the number of vCPUs of the CDM cluster.

For example, the maximum number of concurrent extractors for a cluster with 8 vCPUs and 16 GB memory is 16.

3

Number of split retries

Number of retries when a split fails to be executed. Value 0 indicates that no retry will be performed.

NOTE:This parameter takes effect only when the destination is Hudi or DWS and the import mode is UPSERT.

0

Write Dirty Data

Whether to record dirty data. By default, dirty data is not recorded. If there is a large amount of dirty data, the synchronization speed of the task is affected.

- No: Dirty data is not recorded. This is the default value.

Dirty data is not allowed. If dirty data is generated during the synchronization, the task fails and exits.

- Yes: Dirty data is allowed, that is, dirty data does not affect task execution.

When dirty data is allowed and its threshold is set:

- If the generated dirty data is within the threshold, the synchronization task ignores the dirty data (that is, the dirty data is not written to the destination) and is executed normally.

- If the generated dirty data exceeds the threshold, the synchronization task fails and exits.

NOTE:Criteria for determining dirty data: Dirty data is meaningless to services, is in an invalid format, or is generated when the synchronization task encounters an error. If an exception occurs when a piece of data is written to the destination, this piece of data is dirty data. Therefore, data that fails to be written is classified as dirty data.

For example, if data of the VARCHAR type at the source is written to a destination column of the INT type, dirty data cannot be written to the migration destination due to improper conversion. When configuring a synchronization task, you can configure whether to write dirty data during the synchronization and configure the number of dirty data records (maximum number of error records allowed in a single partition) to ensure task running. That is, when the number of dirty data records exceeds the threshold, the task fails and exits.

No

Write Dirty Data Link

This parameter is only displayed when Write Dirty Data is set to Yes.

Only links to OBS support dirty data writes.

obslink

OBS Bucket

This parameter is only displayed when Write Dirty Data Link is a link to OBS.

Name of the OBS bucket to which the dirty data will be written.

dirtydata

Dirty Data Directory

This parameter is only displayed when Write Dirty Data is set to Yes.

Directory for storing dirty data on OBS. Dirty data is saved only when this parameter is configured.

You can go to this directory to query data that fails to be processed or is filtered out during job execution, and check the source data that does not meet conversion or cleaning rules.

/user/dirtydir

Max. Error Records in a Single Shard

This parameter is only displayed when Write Dirty Data is set to Yes.

When the number of error records of a single partition exceeds the upper limit, the job will automatically terminate and the imported data cannot be rolled back.

You are advised to use a temporary table as the destination table. After the data is imported, rename the table or combine it into the final data table.

0

Throttling

Whether to enable throttling for the synchronization. This rate indicates the CDM transmission rate, not the NIC traffic.

- Yes: By limiting the synchronization rate, you can prevent the source database from being overloaded due to a high extraction speed. The minimum rate allowed is 1 MB/s.

- No: The task provides the highest transmission performance with the existing hardware based on the configured maximum number of concurrent tasks.

NOTE:- It can control the rate for a job migrating data to DLI, OBS, Apache HDFS, or relational databases.

- To configure throttling for multiple jobs, multiply the rate by the number of concurrent jobs.

Yes

byteRate(MB/s)

Maximum rate for a job. To configure throttling for multiple jobs, multiply the rate by the number of concurrent jobs. The rate is an integer greater than 1. The unit is MB/s.

10

Max. Rows Migrated Concurrently per Second

Maximum number of rows that can be migrated concurrently per second. The unit is record/s.

100000

ChannelCapacity(Mb)

Amount of data that the intermediate queue can cache. The value ranges from 1 to 500.

If the amount of data of a row exceeds the value of this parameter, the migration may fail.

If the value of this parameter is too large, the cluster may not run properly.

Set an appropriate value for this parameter and use the default value unless otherwise specified.

64

Detect Sensitive Information in Jobs in Real Time

Whether to detect sensitive information in jobs in real time

No

- No: Dirty data is not recorded. This is the default value.

- Save the job.

After configuring the job, click Save in the upper left corner to save the job configuration.

If real-time sensitive information detection is enabled for a job, the system automatically checks whether the source data contains sensitive information. If there is sensitive information, data cannot be migrated. In this case, you must make modifications as prompted.

After the job is saved, a version is automatically generated and displayed in Versions. The version can be rolled back. If you save a job multiple times within a minute, only one version is recorded. If the intermediate data is important, you can click Save new version to save and add a version.

- Test the job.

After configuring the job, click Test in the upper left corner to test the job. If the test fails, view the logs of the job node and locate and rectify the fault.

- A test execution is similar to a single execution and migrates data.

- You can view the test run logs of the job by clicking View Log.

- If you test the job before submitting a version, the version of the generated job instance is 0 on the Job Monitoring page.

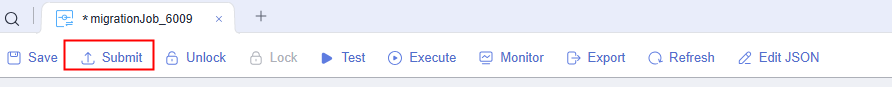

- Submit a job version.

If you want the job to be scheduled periodically, you need to release the job to the production environment. For how to release a job, see Releasing a Job Task.

Figure 2 Submitting the job

- Schedule the job.

Set the scheduling mode for the job. For details about how to schedule the job, see Setting Up Scheduling for a Job.

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot