Creating a Data Integration Job

This section describes how to create a DataArts Studio data integration job.

In this example, you need to create the following three types of integration jobs:

- Migrating Data from OBS to MySQL: To facilitate demonstration, you need to import the sample data in CSV format from OBS to the MySQL database.

- Migrating Data from MySQL to OBS: In the formal service process, the original sample data in MySQL needs to be imported to OBS and normalized as the vertex data sets and edge data sets.

- Migrating Data from MySQL to MRS Hive: In the formal service process, the original sample data in MySQL needs to be imported to MRS Hive and converted to standard vertex and edge data sets.

Creating a Cluster

DataArts Migration (CDM) clusters can migrate data to the cloud and integrate data into the data lake. They provide wizard-based configuration and management and can integrate data from a single table or an entire database incrementally or periodically. The DataArts Studio basic package contains a CDM cluster. If the cluster cannot meet your requirements, you can buy a CDM incremental package.

For details about how to buy a CDM incremental package, see Buying a DataArts Migration Incremental Package.

Creating Data Integration Connections

- Log in to the CDM console and choose Cluster Management in the left navigation pane.

Another method: Log in to the DataArts Studio console by following the instructions in Accessing the DataArts Studio Instance Console. On the DataArts Studio console, locate a workspace and click DataArts Migration to access the CDM console.

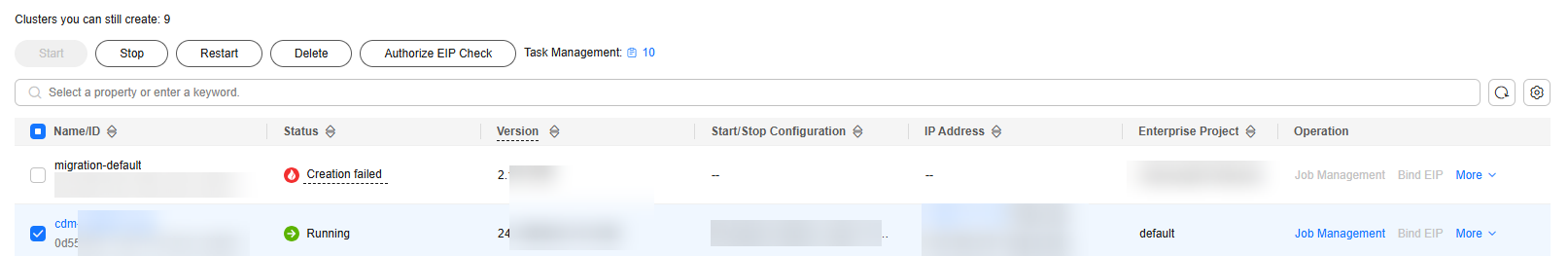

Figure 1 Cluster list

The Source column is displayed only when you access the DataArts Migration page from the DataArts Studio console.

- In the left navigation pane, choose Cluster Management. In the cluster list, locate the required cluster and click Job Management.

Figure 2 Cluster management

- On the Job Management page, click Links.

Figure 3 Links

- Create the OBS connection, Cloud Database MySQL connection, and MRS Hive connection required for an integration task.

Click Create Link. On the page displayed, select Object Storage Service (OBS) and click Next. Then, set the link parameters and click Save.

Figure 4 Creating an OBS link

Table 1 Parameter description

Parameter

Description

Example Value

Name

Link name, which should be defined based on the data source type, so it is easier to remember what the link is for

obs_link

OBS Endpoint

An endpoint is the request address for calling an API. Endpoints vary depending on services and regions.

To obtain the endpoint of an OBS bucket, go to the OBS console and click the bucket name to go to its details page.

NOTE:

- If the CDM cluster and OBS bucket are not in the same region, the CDM cluster cannot access the OBS bucket.

- Do not change the password or user when the job is running. If you do so, the password will not take effect immediately and the job will fail.

obs.myregion.mycloud.com

Port

Data transmission port. The HTTPS port number is 443 and the HTTP port number is 80.

443

OBS Bucket Type

Select a value from the drop-down list, generally, Object Storage.

Object Storage

AK

AK and SK are used to log in to the OBS server.

You need to create an access key for the current account and obtain an AK/SK pair.

To obtain an access key, perform the following steps:

- Log in to the management console, move the cursor to the username in the upper right corner, and select My Credentials from the drop-down list.

- On the My Credentials page, choose Access Keys, and click Create Access Key. See Figure 5.

- Click OK and save the access key file as prompted. The access key file will be saved to your browser's configured download location. Open the credentials.csv file to view Access Key Id and Secret Access Key.

NOTE:

- Only two access keys can be added for each user.

- To ensure access key security, the access key is automatically downloaded only when it is generated for the first time and cannot be obtained from the management console later. Keep the downloaded file in a safe place.

-

SK

-

Link Attributes

(Optional) Displayed when you click Show Advanced Attributes.

You can click Add to add custom attributes for the link.

Only the file.gen.identifier.only.when.data.migrate attribute can be configured.

This attribute controls the generation of a success identifier file. If it is set to true, the system checks whether data has been generated and generates an identifier file only if there is data. If it is not set to true, the system does not check whether data has been generated.

-
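The AK/SK values entered in the link parameters come from the downloaded credentials.csv file. The following is a minimal sketch of reading them programmatically instead of copying them by hand. It assumes the file has a header row with the columns Access Key Id and Secret Access Key (the names mentioned above); the function name read_access_key is hypothetical and is not part of any CDM or OBS tool.

```python
import csv
from pathlib import Path


def read_access_key(csv_path):
    """Return (ak, sk) from the first data row of a credentials.csv file.

    Assumption: the file has a header row that contains the columns
    "Access Key Id" and "Secret Access Key".
    """
    # utf-8-sig tolerates a byte order mark at the start of the file.
    with Path(csv_path).open(newline="", encoding="utf-8-sig") as f:
        row = next(csv.DictReader(f), None)
    if row is None:
        raise ValueError("credentials file contains no data row")
    return row["Access Key Id"].strip(), row["Secret Access Key"].strip()
```

Avoid printing or logging the returned values, because the SK cannot be retrieved again from the management console.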

On the Links tab page, click Create Link again. On the page displayed, select the RDS for MySQL connector and click Next. Then, set the connection parameters and click Save.

Table 2 MySQL link parameters

| Parameter | Description | Example Value |
| --- | --- | --- |
| Name | Unique link name | mysqllink |
| Database Server | IP address or domain name of the MySQL database server | - |
| Port | MySQL database port | 3306 |
| Database Name | Name of the MySQL database | sqoop |
| Username | User who has the read, write, and delete permissions on the MySQL database | admin |
| Password | Password of the user | - |
| Use Local API | Whether to use the local API of the database for acceleration. (The system attempts to enable the local_infile system variable of the MySQL database.) | Yes |
| Use Agent | The agent function will be unavailable soon and does not need to be configured. | - |
| local_infile Character Set | When using local_infile to import data to MySQL, you can configure the encoding format. | utf8 |
| Driver Version | Before connecting CDM to a relational database, you need to upload the JDK 8 .jar driver of the relational database. Download the MySQL driver 5.1.48 from https://downloads.mysql.com/archives/c-j/, obtain mysql-connector-java-5.1.48.jar, and upload it. | - |

On the Links tab page, click Create Link again. On the page displayed, select MRS Hive and click Next. Then, set the link parameters and click Save.

Figure 6 Creating an MRS Hive link

Table 3 MRS Hive link parameters

Parameter

Description

Example Value

Name

Link name, which should be defined based on the data source type, so it is easier to remember what the link is for

hivelink

Manager IP

Enter or select the Manager IP address.

You can click Select to select a created MRS cluster. CDM automatically fills in the authentication information.

If the Hadoop type is MRS, enter the IP address of MRS Manager.

If the Hadoop type is FusionInsight HD, enter the IP address of FusionInsight HD Manager.

Enter the IP address based on the scenario and sequence.

- If you enter one IP address, enter the management-plane floating IP address of the MRS cluster.

- If you enter two IP addresses, enter the IP addresses of the active and standby nodes on the service plane of the MRS cluster. Use semicolons (;) to separate the IP addresses.

- If you enter three IP addresses, enter the IP address of the active node on the service plane of the MRS cluster, IP address of the standby node on the service plane of the MRS cluster, and the floating IP address of the management plane of the MRS cluster. Use semicolons (;) to separate the IP addresses.

NOTE:

MRS clusters whose Kerberos encryption type is aes256-sha2,aes128-sha2 are not supported. Only MRS clusters whose Kerberos encryption type is aes256-sha1,aes128-sha1 are supported.

- 127.0.0.1

- 127.0.0.1;127.0.0.2;127.0.0.3

Authentication Method

Authentication method used for accessing MRS

- SIMPLE: Select this for non-security mode.

- KERBEROS: Select this for security mode.

SIMPLE

Kerberos Authentication Type

This parameter is displayed when Authentication Method is set to KERBEROS.

You can select the BASIC or KEYTAB authentication type.

- BASIC: The username and password are used for authentication.

When you select this option:

- The link can be used as the source link. Data can be read through JDBC but not HDFS at the source, and concurrent reading is not supported.

- The link cannot be used as the destination link.

- HIVE_3_X is supported.

- KEYTAB: The keytab file is used for authentication.

KEYTAB

HIVE Version

Set this to the Hive version on the server.

HIVE_3_X

Username

If Authentication Method is set to KERBEROS, you must provide the username and password used for logging in to MRS Manager. If you need to create a snapshot when exporting a directory from HDFS, the user configured here must have the administrator permission on HDFS.

To create a data connection for an MRS security cluster, do not use user admin. The admin user is the default management page user and cannot be used as the authentication user of the security cluster. You can create an MRS user and set Username and Password to the username and password of the created MRS user when creating an MRS data connection.

NOTE:

- If the CDM cluster version is 2.9.0 or later and the MRS cluster version is 3.1.0 or later, the created user must have the permissions of the Manager_viewer role to create links on CDM. To perform operations on databases, tables, and columns of an MRS component, you also need to add the database, table, and column permissions of the MRS component to the user by following the instructions in the MRS documentation.

- If the CDM cluster version is earlier than 2.9.0 or the MRS cluster version is earlier than 3.1.0, the created user must have the permissions of Manager_administrator or System_administrator to create links on CDM.

- A user with only the Manager_tenant or Manager_auditor permission cannot create connections.

cdm

Password

Password used for logging in to MRS Manager

-

Enable ldap

This parameter is available when Proxy connection is selected for Connection Type.

If LDAP authentication is enabled for an external LDAP server connected to MRS Hive, the LDAP username and password are required for authenticating the connection to MRS Hive. In this case, this option must be enabled. Otherwise, the connection will fail.

No

ldapUsername

This parameter is mandatory when Enable ldap is enabled.

Enter the username configured when LDAP authentication was enabled for MRS Hive.

-

ldapPassword

This parameter is mandatory when Enable ldap is enabled.

Enter the password configured when LDAP authentication was enabled for MRS Hive.

-

OBS storage support

The server must support OBS storage. When creating a Hive table, you can store the table in OBS.

No

AK

This parameter is mandatory when OBS storage support is enabled. The account corresponding to the AK/SK pair must have the OBS Buckets Viewer permission. Otherwise, OBS cannot be accessed and the "403 AccessDenied" error is reported.

You need to create an access key for the current account and obtain an AK/SK pair.

- Log in to the management console, move the cursor to the username in the upper right corner, and select My Credentials from the drop-down list.

- On the My Credentials page, choose Access Keys, and click Create Access Key. See Figure 7.

- Click OK and save the access key file as prompted. The access key file will be saved to your browser's configured download location. Open the credentials.csv file to view Access Key Id and Secret Access Key.

NOTE:

- Only two access keys can be added for each user.

- To ensure access key security, the access key is automatically downloaded only when it is generated for the first time and cannot be obtained from the management console later. Keep the downloaded file in a safe place.

-

SK

-

Project ID

Project ID

-

Run Mode

This parameter is used only when the Hive version is HIVE_3_X. Possible values are:

- EMBEDDED: The link instance runs with CDM. This mode delivers better performance.

- STANDALONE: The link instance runs in an independent process. If CDM needs to connect to multiple Hadoop data sources (MRS, Hadoop, or CloudTable) with both Kerberos and Simple authentication modes, select STANDALONE.

NOTE:

The STANDALONE mode is used to solve the version conflict problem. If the connector versions of the source and destination ends of the same link are different, a JAR file conflict occurs. In this case, you need to place the source or destination end in the STANDALONE process to prevent the migration failure caused by the conflict.

EMBEDDED

Check Hive JDBC Connectivity

Whether to check the Hive JDBC connectivity

No

Use Cluster Config

You can use the cluster configuration to simplify parameter settings for the Hadoop connection.

No

Cluster Config Name

This parameter is valid only when Use Cluster Config is set to Yes. Select a cluster configuration that has been created.

For details about how to configure a cluster, see Managing Cluster Configurations.

hive_01
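The Manager IP rules in Table 3 (one, two, or three addresses separated by semicolons) can be checked before you paste the value into the link form. The following is a minimal sketch that uses only the Python standard library; the function name parse_manager_ips is hypothetical, and the check covers only the syntax of the value, not whether the addresses belong to the correct management or service plane.

```python
import ipaddress


def parse_manager_ips(value):
    """Split a Manager IP value on semicolons and validate each address.

    Accepts one, two, or three addresses, matching the cases in Table 3.
    """
    parts = [p.strip() for p in value.split(";")]
    if not 1 <= len(parts) <= 3:
        raise ValueError("expected one, two, or three IP addresses")
    # ipaddress.ip_address raises ValueError for malformed addresses.
    return [str(ipaddress.ip_address(p)) for p in parts]


# Example values taken from Table 3.
print(parse_manager_ips("127.0.0.1"))
print(parse_manager_ips("127.0.0.1;127.0.0.2;127.0.0.3"))
```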

Creating a Job for Migrating Data from OBS to MySQL

To facilitate demonstration, you need to import the sample data in CSV format from OBS to the MySQL database.

- On the DataArts Migration console, click Cluster Management in the left navigation pane, locate the required cluster in the cluster list, and click Job Management.

- On the Job Management page, click Table/File Migration and click Create Job.

Figure 8 Table/File Migration

- Perform the following steps to migrate the four original data tables in Preparing Data Sources from OBS to the MySQL database:

- Configure the vertex_user_obs2rds job.

Set Source Directory/File to the vertex_user.csv file uploaded to OBS in Preparing Data Sources. Because the table contains Chinese characters, you need to set the encoding type to GBK. Set the destination Table Name to the vertex_user table created in Making Preparations. Click Next.

Figure 9 vertex_user_obs2rds job configuration

- In Map Field, check whether the field mapping sequence is correct. If the field mapping sequence is correct, click Next.

Figure 10 Field mapping of the vertex_user_obs2rds job

- The task configuration does not need to be modified. Save the configuration and run the task.

Figure 11 Configuring the task

- Wait until the job is complete. If the job is executed successfully, the vertex_user table has been migrated to the MySQL database.

Figure 12 vertex_user_obs2rds job completed

- Create the vertex_movie_obs2rds, edge_friends_obs2rds, and edge_rate_obs2rds jobs by following Step 2 to Step 4 so that all four original tables are migrated from OBS to MySQL.
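The encoding type set in the job must match the encoding of the source file. The following minimal sketch shows why: text that contains Chinese characters and is encoded as GBK does not decode as UTF-8. The sample row is hypothetical and is not taken from vertex_user.csv; the helper decode_csv_bytes is only for illustration.

```python
def decode_csv_bytes(raw, encoding="gbk"):
    """Decode raw CSV bytes; raises UnicodeDecodeError if the encoding is wrong."""
    return raw.decode(encoding)


# Hypothetical row with Chinese characters, encoded as GBK.
sample = "1,张三,男".encode("gbk")

# Decoding with the matching encoding returns the original text.
print(decode_csv_bytes(sample, "gbk"))

# Decoding the same bytes as UTF-8 fails.
try:
    decode_csv_bytes(sample, "utf-8")
except UnicodeDecodeError:
    print("the bytes are not valid UTF-8")
```

If you are unsure which encoding a source file uses, running a check like this on a local copy before configuring the job can help avoid garbled text in the destination table.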

Creating a Job for Migrating Data from MySQL to OBS

In the formal service process, the original sample data in MySQL needs to be imported to OBS and converted to standard vertex data sets and edge data sets.

- On the DataArts Migration console, click Cluster Management in the left navigation pane, locate the required cluster in the cluster list, and click Job Management.

- On the Job Management page, click Table/File Migration and click Create Job.

Figure 13 Table/File Migration

- Perform the following steps to migrate the four original data tables from MySQL to OBS buckets in sequence:

- Configure the vertex_user_rds2obs job.

Set the source table name to the name of the vertex_user table migrated to MySQL in Creating a Job for Migrating Data from OBS to MySQL. Set the destination write directory to a directory other than the directory where the raw data is stored, to avoid file overwriting. Set file format to CSV. Because the table contains Chinese characters, you need to set encoding type to GBK.

Note: You need to configure custom file name in the advanced attributes of the destination end. The value is ${tableName}. If this parameter is not set, the names of CSV files migrated to OBS will contain extra fields such as timestamps. As a result, the names of files obtained each time a migration job is executed are different, and the graph data cannot be automatically imported to GES after a migration.

Other advanced attributes do not need to be set. Click Next.

Figure 14 Basic configuration of the vertex_user_rds2obs job

Figure 15 Advanced configuration of the vertex_user_rds2obs job

- In field mapping, the label field needs to be added as the label of the graph file based on the GES graph data requirements.

- vertex_user: Set label to user and move this field to the second column.

- vertex_movie: Set label to movie and move this field to the second column.

- edge_friends: Set label to friends and move this field to the third column.

- edge_rate: Set label to rate and move this field to the third column.

Standardize the raw data structure based on the GES graph import requirements, that is, add labels to the second columns of vertex tables vertex_user and vertex_movie, and add labels to the third columns of edge tables edge_rate and edge_friends.

The vertex and edge data sets must comply with the data format requirements of GES graphs. The requirements are summarized as follows. For details, see Graph Data Formats.

- The vertex data set contains the data of each vertex. Each row is the data of a vertex. The format is as follows. id is the unique identifier of vertex data.

id,label,property 1,property 2,property 3,...

- The edge data set contains the data of each edge. Each row is the data of an edge. Graph specifications in GES are defined based on the edge quantity, for example, one million edges. The format is as follows. id 1 and id 2 are the IDs of the two endpoints of an edge.

id 1, id 2, label, property 1, property 2,...

Figure 16 Adding field mapping in the vertex_user_rds2obs job

- Adjust the field sequence. For the vertex data set, move label to the second column. For the edge data set, move label to the third column. The result after the adjustment is shown in Figure 18. Then, click Next.

Figure 17 Adjusting the field sequence of the vertex_user_rds2obs job

- The task configuration does not need to be modified. Save the configuration and run the task.

Figure 19 Configuring the task

- Wait until the job is complete. If the job is executed successfully, the vertex_user.csv file has been written to the OBS bucket.

Figure 20 vertex_user_rds2obs job completed

- Create the vertex_movie_rds2obs, edge_friends_rds2obs, and edge_rate_rds2obs jobs by following Step 2 to Step 4 so that all four original tables are written from MySQL to OBS buckets.
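The effect of the field mapping in these jobs (a constant label in the second column of vertex rows and in the third column of edge rows) can be sketched outside CDM as follows. This is only an illustration of the target layout, not how CDM performs the mapping; the sample rows and the helper names add_label and to_csv are hypothetical.

```python
import csv
import io


def add_label(rows, label, position):
    """Insert a constant label at a zero-based column position in each row."""
    return [row[:position] + [label] + row[position:] for row in rows]


def to_csv(rows):
    """Serialize rows as CSV text with one line per row."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerows(rows)
    return buf.getvalue()


# Hypothetical sample rows; the real tables come from Preparing Data Sources.
vertex_rows = [["u1", "Alice", "F"]]
edge_rows = [["u1", "u2"]]

# Vertex rows: id,label,property 1,property 2,...
print(to_csv(add_label(vertex_rows, "user", 1)))
# Edge rows: id 1,id 2,label,property 1,...
print(to_csv(add_label(edge_rows, "friends", 2)))
```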

Creating a Job for Migrating Data from MySQL to MRS Hive

In the formal service process, the original sample data in MySQL needs to be imported to MRS Hive and converted to standard vertex data sets and edge data sets.

- On the DataArts Migration console, click Cluster Management in the left navigation pane, locate the required cluster in the cluster list, and click Job Management.

- On the Job Management page, click Table/File Migration and click Create Job.

Figure 21 Table/File Migration

- Perform the following steps to migrate the four original data tables from MySQL to MRS Hive in sequence:

- Configure the vertex_user_rds2hive job.

Set source table name to the name of the vertex_user table migrated to the MySQL database in Creating a Job for Migrating Data from OBS to MySQL. Set the destination table name to the name of the vertex_user table created in Step 2. Set other parameters as shown in the following figure. The advanced attributes are not required. Then, click Next.

Figure 22 Basic configuration of the vertex_user_rds2hive job

- In field mapping, the label field needs to be added as the label of the graph file based on the GES graph data requirements.

- vertex_user: Set label to user and move this field to the second column.

- vertex_movie: Set label to movie and move this field to the second column.

- edge_friends: Set label to friends and move this field to the third column.

- edge_rate: Set label to rate and move this field to the third column.

Standardize the raw data structure based on the GES graph import requirements, that is, add labels to the second columns of vertex tables vertex_user and vertex_movie, and add labels to the third columns of edge tables edge_rate and edge_friends.

The vertex and edge data sets must comply with the data format requirements of GES graphs. The requirements are summarized as follows. For details, see Graph Data Formats.

- The vertex data set contains the data of each vertex. Each row is the data of a vertex. The format is as follows. id is the unique identifier of vertex data.

id,label,property 1,property 2,property 3,...

- The edge data set contains the data of each edge. Each row is the data of an edge. Graph specifications in GES are defined based on the edge quantity, for example, one million edges. The format is as follows. id 1 and id 2 are the IDs of the two endpoints of an edge.

id 1, id 2, label, property 1, property 2,...

Figure 23 Adding field mapping in the vertex_user_rds2hive job

- Adjust the field sequence. For the vertex file, move label to the second column. For the edge file, move label to the third column. The result after the adjustment is shown in Figure 25. Then, click Next.

Figure 24 Adjusting the field sequence of the vertex_user_rds2hive job

- The task configuration does not need to be modified. Save the configuration and run the task.

Figure 26 Configuring the task

- Wait until the job is complete. If the job is executed successfully, the vertex_user table has been migrated to MRS Hive.

Figure 27 vertex_user_rds2hive job completed

- Create the vertex_movie_rds2hive, edge_friends_rds2hive, and edge_rate_rds2hive jobs by following Step 2 to Step 4 so that all four original tables are migrated from MySQL to MRS Hive.
