Using Edge Cloud Resources in a Remote CCE Turbo Cluster

Cloud resources are categorized into two main types based on deployment location, service scope, and functions:

- Central cloud resources: deployed in large data centers to provide global, highly elastic, and full-featured cloud computing services, such as ECS and OBS.

- Edge cloud resources: distributed across physical or virtual devices located closer to data sources or end users to provide low-latency and localized computing capabilities. CloudPond is an example of such a service.

CCE Turbo clusters enable seamless collaboration between central and edge cloud environments by supporting distributed clouds (HomeZones/CloudPond). This approach allows services to benefit from both low latency at the edge and abundant computing resources in the central cloud. Once this function is enabled, you can manage resources across both data centers and edge sites in a single cluster and flexibly select deployment locations based on application requirements, as shown in Figure 1. This section uses CloudPond as an example to describe how to enable support for distributed clouds (HomeZones/CloudPond) and how to run workloads in the cluster on edge cloud resources.

Concepts

- Availability zone (AZ): An AZ contains one or more physical data centers. Each AZ has independent cooling, fire suppression, moisture-proof, and electricity facilities. An AZ's computing, network, storage, and other resources are logically divided into multiple clusters. AZs within a region are interconnected using high-speed optical fibers to allow you to build cross-AZ high-availability systems. AZs are categorized into:

- Central AZs: deployed in Huawei Cloud data centers and shared by public cloud users.

- Edge AZs: located in on-premises data centers, dedicated to CloudPond users.

- Partition: A partition is a logical unit for managing cloud computing resources. It is used to organize resources within a region or across AZs for collaborative scheduling and efficient management of both central cloud and edge computing resources. A partition can be understood from the following aspects:

- Computing: A partition consists of resources from one or more AZs, which are isolated yet geographically close (typically with latency under 2 ms). Deploying workloads across different AZs in the same partition enhances high availability.

- Partition type: Partitions are categorized into one central cloud partition and one or more edge partitions.
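The partition concept can be illustrated with a small data model. The following Python sketch is a hypothetical illustration, not a CCE API: it represents a partition as a named group of AZs and spreads a workload's replicas round-robin across the AZs of one partition, which is the high-availability pattern described above. The partition and AZ names (`edge-partition-1`, `edge-az-a`, `edge-az-b`) are placeholders.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Partition:
    """Hypothetical model of a partition: a named group of nearby AZs."""

    name: str
    kind: str  # "central" or "edge"
    azs: List[str] = field(default_factory=list)


def spread_replicas(partition: Partition, replicas: int) -> Dict[str, int]:
    """Assign replicas round-robin across the AZs of a single partition.

    Staying inside one partition keeps inter-AZ latency low, while
    spreading across its AZs avoids depending on a single AZ.
    """
    if not partition.azs:
        raise ValueError(f"partition {partition.name!r} has no AZs")
    counts = {az: 0 for az in partition.azs}
    for i in range(replicas):
        counts[partition.azs[i % len(partition.azs)]] += 1
    return counts


# Placeholder names used only for illustration.
edge = Partition(name="edge-partition-1", kind="edge", azs=["edge-az-a", "edge-az-b"])
print(spread_replicas(edge, 3))  # {'edge-az-a': 2, 'edge-az-b': 1}
```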

Network Model of Distributed CCE Turbo Clusters

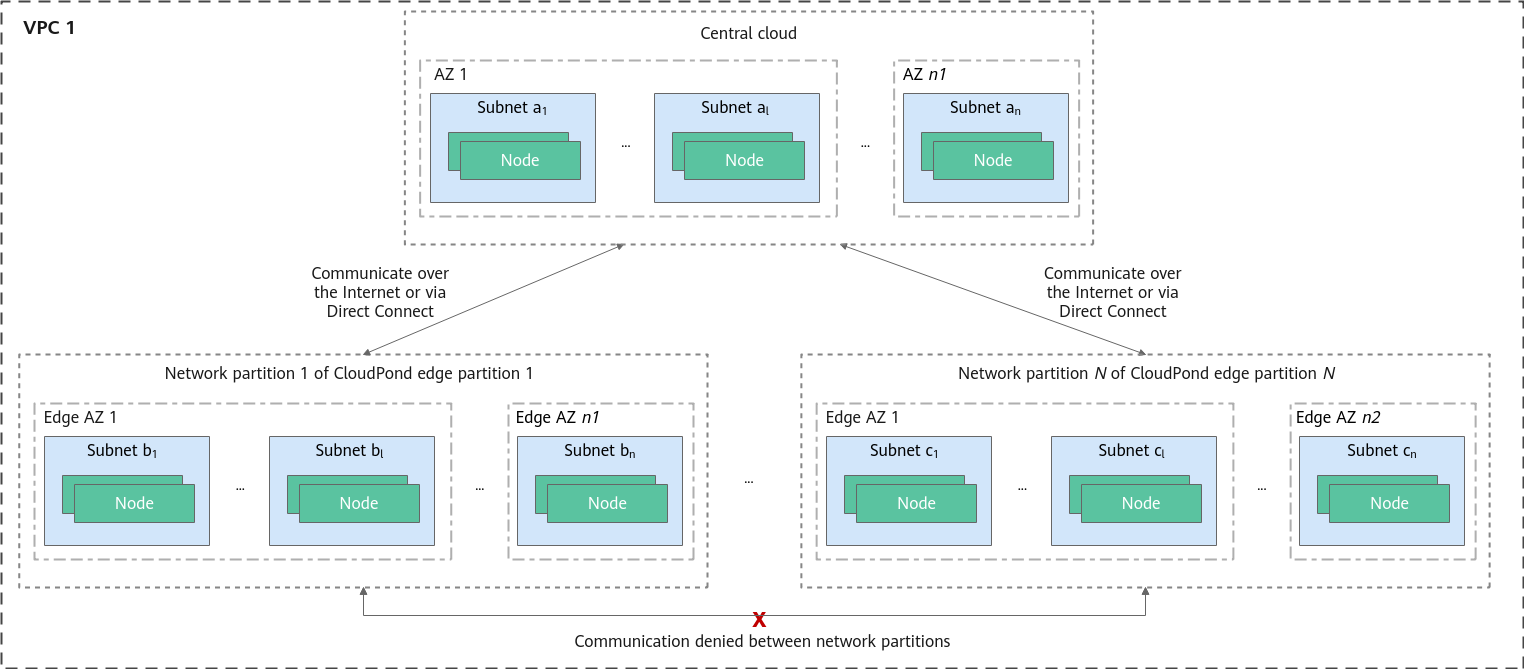

The following uses CloudPond as an example to describe the network framework of a distributed CCE Turbo cluster.

In a distributed cluster, the central cloud and edge nodes are deployed within the same VPC. However, the networking design differs from traditional public cloud setups. The key aspects of this architecture are as follows:

- Each edge partition corresponds to a network partition (PublicBorderGroup) and contains one or more AZs that are geographically close to each other. Each network partition communicates with the central cloud over the Internet or a private line. Different network partitions are isolated from each other and cannot communicate directly.

- The central cloud and edge partitions have distinct subnets that cannot be used interchangeably.

Each edge partition is mapped to its corresponding network partition by location. When initializing an edge partition, you need to associate it with a specific network partition and create subnets in that network partition for nodes and containers.
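The reachability rules above can be written down as a small predicate. This Python sketch is a hypothetical model, not a CCE or VPC API: partitions are identified by name, the placeholder string `central` stands for the central cloud, and any other name is an edge (network) partition. It assumes that resources within the same partition can reach each other, since they share the cluster's VPC.

```python
CENTRAL = "central"  # Placeholder name for the central cloud partition.


def can_communicate_directly(src: str, dst: str) -> bool:
    """Model the connectivity rules of a distributed CCE Turbo cluster.

    - Resources in the same partition can reach each other (assumption).
    - Each edge (network) partition reaches the central cloud over the
      Internet or a private line, and vice versa.
    - Two different edge partitions are isolated and cannot communicate directly.
    """
    if src == dst:
        return True
    return CENTRAL in (src, dst)


print(can_communicate_directly("edge-az-a", CENTRAL))      # True
print(can_communicate_directly("edge-az-a", "edge-az-b"))  # False
```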

Process

| Procedure | Description | Billing |
|---|---|---|
| Enable the distributed cloud | When creating a CCE Turbo cluster, enable Distributed Cloud (HomeZones/CloudPond) in Advanced Settings. After this function is enabled, CCE creates a remote Turbo cluster that integrates with CloudPond edge computing resources for unified management and scheduling of central cloud and edge cloud resources. | Enabling the distributed cloud does not incur any additional cost. You are billed only for cluster management. For details, see Price Calculator. |
| Initialize an edge partition | Create an edge subnet in the VPC that hosts the cluster, and associate it with the corresponding edge partition so that nodes and containers can use the subnet. | N/A |
| Create an edge node | Create an edge node in the cluster to connect CloudPond edge resources to the remote Turbo cluster for centralized management. | N/A |
| Deploy a workload on the edge node | Deploy a workload that requires low latency on the edge node to minimize data transmission delay, speed up responses, and enhance real-time processing. | N/A |

Prerequisites

Before enabling the distributed cloud (HomeZones/CloudPond), ensure that you have registered for and deployed the CloudPond service.

Notes and Constraints

- Constraints on edge cloud resources: When using distributed cloud resources, ensure that the network between the edge cloud and central cloud is connected and has sufficient bandwidth. If the edge region network experiences a failure, applications running on both the central management plane and edge data plane may be disrupted. For details about the bandwidth requirements and impact of a network fault, see Network Bandwidth Requirements and Impact of Network Interruptions in Edge Regions.

- Constraints on clusters

| Cluster Resource | Constraint |
|---|---|
| Node | Only common x86 VMs can be used as edge nodes. Node migration is currently unavailable. |
| Node pool | Random scheduling of a node pool applies only to partitions. |
| Storage | Only EVS disks can be created in edge regions. |
| Service and ingress | Only dedicated load balancers are supported. |
| Add-on | The following add-ons are supported by remote clusters and are preferentially deployed on central cloud nodes: Cloud Native Cluster Monitoring. Monitoring Center requires Cloud Native Cluster Monitoring 3.12.0 or later to be installed in the cluster. Logging requires Cloud Native Log Collection 1.7.2 or later. Alarm Center requires Cloud Native Cluster Monitoring 3.12.0 or later. |
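Because these minimum versions are easy to misjudge when compared as strings, the following Python sketch checks an add-on inventory against them. How you obtain the installed versions (for example, from the add-on list on the console) is outside the sketch; the `installed` dictionary is hypothetical, and versions are assumed to be plain dotted numbers without suffixes such as `-beta`.

```python
from typing import Dict, List, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a dotted numeric version such as '3.12.0' into (3, 12, 0)."""
    return tuple(int(part) for part in version.split("."))


# Feature -> (required add-on, minimum version), copied from the constraints above.
REQUIREMENTS: Dict[str, Tuple[str, str]] = {
    "Monitoring Center": ("Cloud Native Cluster Monitoring", "3.12.0"),
    "Logging": ("Cloud Native Log Collection", "1.7.2"),
    "Alarm Center": ("Cloud Native Cluster Monitoring", "3.12.0"),
}


def unavailable_features(installed: Dict[str, str]) -> List[str]:
    """Return the features whose required add-on is missing or older than the minimum."""
    blocked = []
    for feature, (addon, minimum) in REQUIREMENTS.items():
        version = installed.get(addon)
        if version is None or parse_version(version) < parse_version(minimum):
            blocked.append(feature)
    return blocked


# Hypothetical inventory of installed add-on versions.
installed = {"Cloud Native Cluster Monitoring": "3.9.1", "Cloud Native Log Collection": "1.7.2"}
print(unavailable_features(installed))  # ['Monitoring Center', 'Alarm Center']
```

Comparing tuples of integers avoids the string-comparison pitfall in which "3.9.1" sorts after "3.12.0".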

Step 1: Enable the Distributed Cloud

Enable the distributed cloud (HomeZones/CloudPond) during cluster creation. After this function is enabled, CCE will create a remote Turbo cluster, which can interconnect with CloudPond.

- Log in to the CCE console.

- On the Clusters page, click Buy Cluster.

- In the Advanced Settings area, enable Distributed Cloud (HomeZones/CloudPond). For details about other parameters, see Buying a CCE Standard/Turbo Cluster.

Figure 3 Enabling the distributed cloud (HomeZones/CloudPond)

- Click Next: Confirm Settings. The cluster resource list is displayed. Confirm the information and click Submit.

It takes about 5 to 10 minutes to create a cluster. You can click Back to Cluster List to perform other operations on the cluster or click Go to Cluster Events to view the cluster details.

Step 2: Initialize an Edge Partition

After a remote CCE Turbo cluster is created, CCE automatically detects CloudPond service information and creates an edge partition. However, at this stage, the edge partition remains uninitialized, meaning that the node subnet and container subnet have not yet been bound. Create edge subnets in the VPC hosting the cluster and initialize the edge partition to provide the necessary network resources for deploying cluster services. For details about network connectivity, see Configuring and Testing Network Connectivity Between an Edge Site and the Cloud.

- Create an edge subnet for initializing the edge partition by taking the following steps:

- Log in to the Network Console. In the navigation pane, choose Virtual Private Cloud > Subnets. In the right pane, click Create Subnet in the upper right corner.

- On the Create Subnet page, configure parameters as required. This example describes only some parameters. For details about other parameters, see Creating a Subnet for an Existing VPC.

Figure 4 Creating a subnet

Table 1 Subnet settings

| Parameter | Description |
|---|---|
| Region | Select the region where the cluster is located. |
| VPC | Select the VPC where the cluster is located. |
| AZ Type | Select Edge to use CloudPond resources. |
| Edge | Select the edge AZ to be used. |

- Click Create Now in the lower right corner.

- Associate the node subnet and container subnet with the edge partition to initialize the partition by taking the following steps:

- Go back to the CCE console and click the cluster name to access the cluster console. In the navigation pane, choose Overview.

- In the navigation pane, choose Cluster > Partitions. In the right pane, choose Unavailable Partitions > Local DC and click the name of the target partition.

- In the Initialize dialog box, select the created edge subnet for both Node Subnet and Pod Subnet and click OK. If there are multiple edge subnets, select an appropriate one.

Figure 5 Initializing an edge partition

- Wait until the initialization is complete.
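Because central and edge subnets cannot be used interchangeably, the subnets selected in the Initialize dialog box must be edge subnets created in the partition's edge AZ. The following Python sketch expresses that check. The `Subnet` type, the subnet names, and the AZ names are hypothetical and do not correspond to a CCE or VPC API.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Subnet:
    """Hypothetical view of a VPC subnet and the AZ selected when it was created."""

    name: str
    az: str


def check_partition_subnets(partition_azs: Set[str], node_subnet: Subnet,
                            pod_subnet: Subnet) -> List[str]:
    """List the reasons why the chosen subnets cannot initialize this edge partition."""
    problems = []
    for role, subnet in (("Node", node_subnet), ("Pod", pod_subnet)):
        if subnet.az not in partition_azs:
            problems.append(f"{role} subnet {subnet.name} is in {subnet.az}, not in this edge partition")
    return problems


# Placeholder subnet and AZ names.
edge_subnet = Subnet(name="subnet-edge", az="edge-az-a")
central_subnet = Subnet(name="subnet-central", az="central-az-1")

print(check_partition_subnets({"edge-az-a"}, edge_subnet, edge_subnet))  # []
print(check_partition_subnets({"edge-az-a"}, edge_subnet, central_subnet))
```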

Step 3: Create an Edge Node

Create an edge node in the cluster to seamlessly connect CloudPond edge resources to the remote Turbo cluster for centralized management.

The edge nodes created in the cluster will have the following taint and Kubernetes labels added by default:

- Taint: distribution.io/category=IES:NoSchedule

- Kubernetes labels:

- distribution.io/category=IES

- distribution.io/partition=<AZ name>, where <AZ name> specifies the name of an edge AZ.

- distribution.io/publicbordergroup=<AZ name>, where <AZ name> specifies the name of an edge AZ.

- Go back to the CCE console and click the cluster name to access the cluster console. In the navigation pane, choose Overview.

- In the navigation pane, choose Cluster > Nodes. In the right pane, click the Nodes tab and click Create Node in the upper right corner.

- In the Node Configuration area, set Billing Mode to Pay-per-use, set AZ to the corresponding edge AZ, and configure other parameters by referring to Creating a Node.

- Click Next: Confirm in the lower right corner. Confirm the configuration and click Submit in the lower right corner. Click Back to Node List. The node is created successfully if it changes to the Running state.

It takes about 6 to 10 minutes to create a node.

Figure 6 Node created
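Because every edge node carries these labels, edge nodes can be told apart from central cloud nodes by label alone. The following Python sketch filters a node inventory by the `distribution.io/category` and `distribution.io/partition` labels and builds the equivalent label selector string. The inventory, the central node name, and the AZ name `edge-az-a` are hypothetical; in practice, the labels would come from the cluster, for example through `kubectl get nodes --show-labels`.

```python
from typing import Dict, List, Optional

CATEGORY_KEY = "distribution.io/category"
PARTITION_KEY = "distribution.io/partition"


def edge_nodes(nodes: Dict[str, Dict[str, str]], partition: Optional[str] = None) -> List[str]:
    """Return the names of nodes labeled as edge (IES) nodes, optionally in one edge AZ."""
    selected = []
    for name, labels in nodes.items():
        if labels.get(CATEGORY_KEY) != "IES":
            continue  # Central cloud node, or a node not created as an edge node.
        if partition is not None and labels.get(PARTITION_KEY) != partition:
            continue
        selected.append(name)
    return sorted(selected)


def label_selector(partition: Optional[str] = None) -> str:
    """Build the matching selector string, for example for `kubectl get nodes -l <selector>`."""
    terms = [f"{CATEGORY_KEY}=IES"]
    if partition is not None:
        terms.append(f"{PARTITION_KEY}={partition}")
    return ",".join(terms)


# Hypothetical node inventory: node name -> Kubernetes labels.
nodes = {
    "10.12.155.29": {CATEGORY_KEY: "IES", PARTITION_KEY: "edge-az-a"},
    "192.168.0.10": {},
}
print(edge_nodes(nodes))            # ['10.12.155.29']
print(label_selector("edge-az-a"))  # distribution.io/category=IES,distribution.io/partition=edge-az-a
```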

Step 4: Deploy a Workload on the Edge Node

After the edge node is created, CCE automatically adds the taint distribution.io/category=IES:NoSchedule to it. This taint prevents workloads with no matching toleration from being scheduled to that node. To schedule a workload to the edge node, the following conditions must be met:

- Toleration: The workload has the matching toleration configured. The scheduling also depends on other factors like the available resources and scheduling rules of the node.

- (Optional) Node affinity: The workload has the required node affinity configured.
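The two conditions above can be modeled in a few lines. The following Python sketch is a simplified model of upstream Kubernetes behavior, not the scheduler itself: a NoSchedule or NoExecute taint blocks a pod unless one of its tolerations matches, and a required node affinity term is reduced to `In` expressions whose keys must all match. The `Taint`, `Toleration`, and `can_schedule` names are hypothetical, and resource fit and other scheduling factors are ignored.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule", or "NoExecute"


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"  # "Equal" or "Exists"
    value: str = ""
    effect: str = ""  # An empty effect matches every effect.


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Simplified version of the upstream Kubernetes toleration-matching rule."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True  # Any value of the key is accepted.
    return tol.operator == "Equal" and tol.value == taint.value


def can_schedule(taints: List[Taint], tolerations: List[Toleration],
                 node_labels: Dict[str, str], required: Dict[str, List[str]]) -> bool:
    """Check taints and one required node affinity term (operator In only)."""
    for taint in taints:
        if taint.effect == "PreferNoSchedule":
            continue  # Soft taint: it does not block scheduling.
        if not any(tolerates(tol, taint) for tol in tolerations):
            return False
    # All matchExpressions in one nodeSelectorTerm must be satisfied.
    return all(node_labels.get(key) in values for key, values in required.items())


edge_taints = [Taint("distribution.io/category", "IES", "NoSchedule")]
edge_labels = {"distribution.io/category": "IES"}
ies_toleration = Toleration("distribution.io/category", "Equal", "IES", "NoSchedule")
required = {"distribution.io/category": ["IES"]}

print(can_schedule(edge_taints, [], edge_labels, {}))                      # False: taint not tolerated
print(can_schedule(edge_taints, [ies_toleration], edge_labels, required))  # True
print(can_schedule([], [ies_toleration], {}, {}))                          # True: central node still allowed
print(can_schedule([], [ies_toleration], {}, required))                    # False: affinity excludes central node
```

The last two calls show why node affinity is useful even though it is optional: a toleration only permits placement on the tainted edge node, while the required affinity rule keeps the pod off central cloud nodes.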

Only some parameters are described. For details about other parameters, see Creating a Workload.

- In the navigation pane, choose Workloads. In the upper right corner of the displayed page, click Create Workload.

- In the Advanced Settings area, click Toleration and add the distribution.io/category toleration policy for the workload. For details about the parameters, see Configuring Tolerance Policies.

Figure 7 Adding a toleration

Table 2 Toleration settings

| Parameter | Description |
|---|---|
| Taint key | Enter distribution.io/category. |
| Operator | Select Equal. This operator requires an exact match of the taint key and value. If the taint value is left blank, all taints with the specified taint key are matched. |
| Taint value | Enter IES. |
| Taint Policy | Select NoSchedule. This ensures that the taint with the NoSchedule effect is matched. |

- (Take this step only when you need to schedule the workload to a specified edge node.) In the Advanced Settings area, click Scheduling. Set Node Affinity to Customize affinity. In the Required area, add the scheduling policy shown in the figure below. With this scheduling policy added, the workload can be scheduled to a specific edge node. You can add more policies to schedule workloads more precisely. For details, see Configuring Node Affinity Scheduling (nodeAffinity).

Figure 8 Adding a scheduling policy

- Configure other parameters and click Create Workload in the lower right corner. If the workload status changes to Running, the workload has been created.

- Click the workload name to access the workload details page. Click the Pods tab to view the node where each workload pod runs and check whether the pod has been scheduled to the edge node. The following figure shows that the pod has been scheduled to node 10.12.155.29, which is the edge node created in Step 3: Create an Edge Node.

Figure 9 Verifying the scheduling

- Alternatively, create the workload using kubectl. First, use kubectl to access the cluster. For details, see Accessing a Cluster Using kubectl.

- Create a description file named nginx-deployment.yaml. nginx-deployment.yaml is an example file name, and you can rename it as needed.

```
vi nginx-deployment.yaml
```

Below is the file content. For details about the toleration and node affinity parameters, see Configuring Tolerance Policies and Configuring Node Affinity Scheduling (nodeAffinity).

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  strategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - image: nginx  # If you use an image from an open-source image registry, enter the image name. If you use an image in My Images, obtain the image address from SWR.
          imagePullPolicy: Always
          name: nginx
      imagePullSecrets:
        - name: default-secret
      tolerations:  # Add the required tolerations.
        - key: node.kubernetes.io/not-ready
          operator: Exists
          effect: NoExecute
          tolerationSeconds: 300
        - key: node.kubernetes.io/unreachable
          operator: Exists
          effect: NoExecute
          tolerationSeconds: 300
        - key: distribution.io/category  # Taint key. Set it to distribution.io/category.
          operator: Equal                # Operator. Set it to Equal for an exact match of the taint key and value.
          value: IES                     # Taint value. Set it to IES.
          effect: NoSchedule             # Taint policy. Set it to NoSchedule so that the taint with the NoSchedule effect is matched.
      affinity:  # Configure a scheduling policy.
        nodeAffinity:  # Node affinity scheduling
          requiredDuringSchedulingIgnoredDuringExecution:  # Scheduling policy that must be met
            nodeSelectorTerms:  # Select a node that meets the requirements according to the node label.
              - matchExpressions:  # Node label matching rule
                  - key: distribution.io/category  # The key of the node label is distribution.io/category.
                    operator: In                   # The rule is met if the label value is in the values list.
                    values:                        # The value of the node label is IES.
                      - IES
```

- Create the workload.

```
kubectl create -f nginx-deployment.yaml
```

If information similar to the following is displayed, the workload is being created:

```
deployment.apps/nginx created
```

- Check whether the workload pod has been created and the node where the pod runs.

```
kubectl get pod -o wide
```

The command output shows that the pod has been created and is in the Running state, and it has been scheduled to the 10.12.155.29 node, which is the edge node created in Step 3: Create an Edge Node.

```
NAME                     READY   STATUS    RESTARTS   AGE   IP            NODE
nginx-856bb778d4-vt8r5   1/1     Running   0          13m   10.12.0.249   10.12.155.29
```
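The same check can be scripted. Rather than splitting the table output on whitespace (the RESTARTS column may contain spaces in recent kubectl versions, for example `1 (5m ago)`), this Python sketch reads `kubectl get pods -o json` and maps each pod's `metadata.name` to `spec.nodeName`. The pod name prefix and the expected node 10.12.155.29 come from this example; substitute your own values. The `fetch_pods_json` helper is a hypothetical convenience wrapper and needs cluster access, so the usage below runs against a trimmed sample instead.

```python
import json
import subprocess
from typing import Dict


def fetch_pods_json(namespace: str = "default") -> str:
    """Run kubectl and return the pod list as JSON (requires cluster access)."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def pod_nodes(pods_json: str, name_prefix: str = "") -> Dict[str, str]:
    """Map each pod name (optionally filtered by prefix) to its node; unscheduled pods map to ''."""
    data = json.loads(pods_json)
    return {
        item["metadata"]["name"]: item["spec"].get("nodeName", "")
        for item in data.get("items", [])
        if item["metadata"]["name"].startswith(name_prefix)
    }


# Trimmed sample shaped like `kubectl get pods -o json` output for the pod shown above.
sample = json.dumps({
    "items": [
        {"metadata": {"name": "nginx-856bb778d4-vt8r5"}, "spec": {"nodeName": "10.12.155.29"}},
    ]
})
placement = pod_nodes(sample, name_prefix="nginx-")
print(placement)  # {'nginx-856bb778d4-vt8r5': '10.12.155.29'}
assert set(placement.values()) <= {"10.12.155.29"}, "some nginx pods are not on the edge node"
```

Against a live cluster, `pod_nodes(fetch_pods_json(), "nginx-")` returns the same kind of mapping.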

Network Bandwidth Requirements and Impact of Network Interruptions in Edge Regions

- During node creation, the required network bandwidth is directly proportional to the number of nodes: 10 Mbit/s is required for every 100 nodes.

- During node operation, the required bandwidth depends on factors such as the scale of service containers, download density, and the volume of service O&M data (mainly logs). We recommend a bandwidth of at least 10 Mbit/s, which you can adjust as needed.
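The creation-time guideline scales linearly, so the numbers are easy to estimate. The following Python sketch applies the 10 Mbit/s-per-100-nodes ratio and the 10 Mbit/s runtime recommendation from this section. Taking the larger of the two as the provisioning target is an assumption made for illustration; the runtime figure still needs to be adjusted for your container scale, download density, and log volume.

```python
MBITS_PER_100_NODES = 10.0  # During node creation: 10 Mbit/s for every 100 nodes.
MIN_RUNTIME_MBITS = 10.0    # Recommended minimum while nodes are running.


def creation_bandwidth_mbits(node_count: int) -> float:
    """Bandwidth (Mbit/s) needed while creating `node_count` edge nodes, scaled linearly."""
    if node_count < 0:
        raise ValueError("node_count must be non-negative")
    return node_count * MBITS_PER_100_NODES / 100


def suggested_bandwidth_mbits(node_count: int) -> float:
    """Cover both the node-creation peak and the runtime recommendation (assumption)."""
    return max(creation_bandwidth_mbits(node_count), MIN_RUNTIME_MBITS)


print(creation_bandwidth_mbits(250))  # 25.0
print(suggested_bandwidth_mbits(50))  # 10.0
```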

If a network fault occurs in an edge region, applications deployed on the management plane in the central region and the data plane in the edge region may be affected.

| Impact | Interrupted Management Plane | Interrupted Edge Data Plane (VPC) |
|---|---|---|
| Cloud service management, including creation, deletion, and configuration modification | Cloud service management is unavailable. | Cloud service management requests in the affected region are interrupted and cannot be sent to the edge data plane. |
| Cloud service instances | Cloud service instances are not affected. | |
| Cloud service O&M | Cloud service O&M is unavailable. | Cloud service O&M in the affected region is interrupted. |

Helpful Links

- For details about CloudPond, see What Is CloudPond?

- For details about service communication links, see Networking Requirements.
