Preparations

To ensure a smooth migration, you need to make the following preparations.

Preparing a Huawei Account

Before using MgC, prepare a HUAWEI ID or an IAM user that can access MgC and obtain an AK/SK pair for the account or IAM user. For details, see Preparations.

When you create a target connection, if you want to collect and view resource usage information, assign the read-only permission (ReadOnly) for MRS and DLI to the IAM user.

Obtaining an AK/SK Pair for Your Alibaba Cloud Account

Obtain an AK/SK pair for your Alibaba Cloud account. For more information, see Viewing the AccessKey Pairs of a RAM User.

Ensure that the AK/SK pair has the following permissions:

- AliyunReadOnlyAccess: read-only permission for OSS

- AliyunMaxComputeReadOnlyAccess: read-only permission for MaxCompute

For details about how to obtain these permissions, see Granting Permissions to RAM Users.

(Optional) If there are partitioned tables to be migrated, the Information Schema permission is required for the source account. To learn how to obtain the permission, see Authorization for RAM Users.

Creating a Big Data Migration Project

On the MgC console, create a migration project. For details, see Managing Migration Projects.

Configuring an Agency

To ensure that DLI functions can be used properly, you need to configure an agency with DLI and OBS permissions.

- Sign in to the Huawei Cloud console.

- Click the username in the upper right corner and choose Identity and Access Management from the drop-down list.

- In the navigation pane, choose Agencies.

- Click Create Agency.

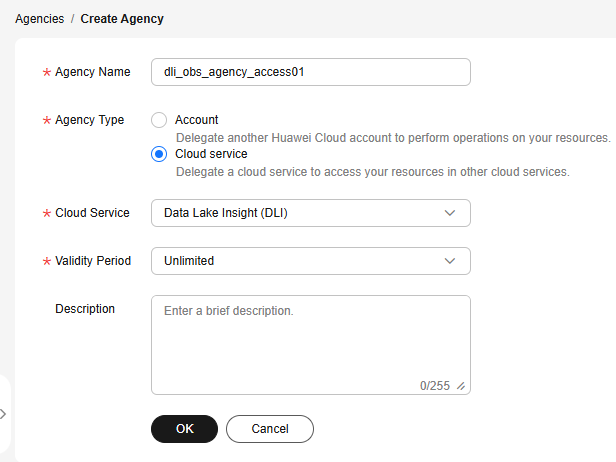

- On the Create Agency page, set the following parameters:

- Agency Name: Set a name, for example, dli_obs_agency_access.

- Agency Type: Select Cloud service.

- Cloud Service: Select Data Lake Insight (DLI) from the drop-down list.

- Validity Period: Set a period as needed.

- Description: This parameter is optional.

- After configuring the basic information, click Create. A dialog box is displayed, indicating that the agency is created successfully.

- Click Authorize Now.

- Click Create Policy in the upper right corner. Create two policies (one for OBS and one for DLI) by following the next two steps. If existing policies already contain the required permissions, you can use them instead.

- Configure policy information.

- Policy Name: Set a name, for example, dli-obs-agency.

- Policy View: Select JSON.

- Copy and paste the following content to the Policy Content box.

Replace <bucket-name> with the name of the bucket where the JAR packages are stored.

    {
        "Version": "1.1",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "obs:bucket:GetBucketPolicy",
                    "obs:bucket:GetLifecycleConfiguration",
                    "obs:bucket:GetBucketLocation",
                    "obs:bucket:ListBucketMultipartUploads",
                    "obs:bucket:GetBucketLogging",
                    "obs:object:GetObjectVersion",
                    "obs:bucket:GetBucketStorage",
                    "obs:bucket:GetBucketVersioning",
                    "obs:object:GetObject",
                    "obs:object:GetObjectVersionAcl",
                    "obs:object:DeleteObject",
                    "obs:object:ListMultipartUploadParts",
                    "obs:bucket:HeadBucket",
                    "obs:bucket:GetBucketAcl",
                    "obs:bucket:GetBucketStoragePolicy",
                    "obs:object:AbortMultipartUpload",
                    "obs:object:DeleteObjectVersion",
                    "obs:object:GetObjectAcl",
                    "obs:bucket:ListBucketVersions",
                    "obs:bucket:ListBucket",
                    "obs:object:PutObject"
                ],
                "Resource": [
                    "OBS:*:*:bucket:<bucket-name>",
                    "OBS:*:*:object:*"
                ]
            },
            {
                "Effect": "Allow",
                "Action": [
                    "obs:bucket:ListAllMyBuckets"
                ]
            }
        ]
    }

- Configure policy information.

- Policy Name: Set a name, for example, dli-agency.

- Policy View: Select JSON.

- Copy and paste the following content to the Policy Content box.

    {
        "Version": "1.1",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "dli:table:showPartitions",
                    "dli:table:alterTableAddPartition",
                    "dli:table:alterTableAddColumns",
                    "dli:table:alterTableRenamePartition",
                    "dli:table:delete",
                    "dli:column:select",
                    "dli:database:dropFunction",
                    "dli:table:insertOverwriteTable",
                    "dli:table:describeTable",
                    "dli:database:explain",
                    "dli:table:insertIntoTable",
                    "dli:database:createDatabase",
                    "dli:table:alterView",
                    "dli:table:showCreateTable",
                    "dli:table:alterTableRename",
                    "dli:table:compaction",
                    "dli:database:displayAllDatabases",
                    "dli:database:dropDatabase",
                    "dli:table:truncateTable",
                    "dli:table:select",
                    "dli:table:alterTableDropColumns",
                    "dli:table:alterTableSetProperties",
                    "dli:database:displayAllTables",
                    "dli:database:createFunction",
                    "dli:table:alterTableChangeColumn",
                    "dli:database:describeFunction",
                    "dli:table:showSegments",
                    "dli:database:createView",
                    "dli:database:createTable",
                    "dli:table:showTableProperties",
                    "dli:database:showFunctions",
                    "dli:database:displayDatabase",
                    "dli:table:alterTableRecoverPartition",
                    "dli:table:dropTable",
                    "dli:table:update",
                    "dli:table:alterTableDropPartition"
                ]
            }
        ]
    }

- Click Next.

- Select the created custom policies with OBS and DLI permissions and click Next. Set Scope to All resources.

- Click OK. It takes 15 to 30 minutes for the authorization to take effect.

- Update the agency permissions by referring to Updating DLI Agency Permissions.
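The OBS policy in the steps above contains a <bucket-name> placeholder that must be replaced before the policy is pasted into the console. The following is a minimal Python sketch of that substitution, not an official tool. The function name render_policy and the bucket name my-jar-bucket are hypothetical, and the template repeats only a few of the actions from the full policy to keep the example short.

```python
import json

# Abbreviated template: only a few of the actions from the full OBS policy
# are repeated here. Use the complete action list from the policy above.
POLICY_TEMPLATE = {
    "Version": "1.1",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "obs:object:GetObject",
                "obs:object:PutObject",
                "obs:bucket:ListBucket",
            ],
            "Resource": [
                "OBS:*:*:bucket:<bucket-name>",
                "OBS:*:*:object:*",
            ],
        },
        {"Effect": "Allow", "Action": ["obs:bucket:ListAllMyBuckets"]},
    ],
}


def render_policy(bucket_name: str) -> str:
    """Return the policy as JSON text with <bucket-name> replaced."""
    if not bucket_name or "<" in bucket_name or ">" in bucket_name:
        raise ValueError("bucket_name must be the real name of the bucket")
    text = json.dumps(POLICY_TEMPLATE, indent=4)
    rendered = text.replace("<bucket-name>", bucket_name)
    json.loads(rendered)  # raises ValueError if the result is not valid JSON
    return rendered


if __name__ == "__main__":
    # "my-jar-bucket" is a made-up bucket name used for illustration.
    print(render_policy("my-jar-bucket"))
```

Parsing the rendered text before pasting it catches stray comments or leftover placeholders, which the Policy Content box would otherwise reject.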

Creating a VPC

Before purchasing an ECS, you need to create a VPC and subnet to deploy it. For details, see Creating a VPC and Subnet.

You need to deploy an ECS in this VPC and buy a DLI elastic resource pool in a different VPC. By default, a DLI resource pool runs in the CIDR block 172.16.0.0/18.
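When you plan the VPC for the ECS, it can help to compare its CIDR block with the default DLI block. The sketch below uses Python's standard ipaddress module. It assumes that you want the two blocks not to overlap, which this page does not state explicitly, and the function name overlaps_dli_default is hypothetical.

```python
import ipaddress

# Default CIDR block of a DLI resource pool, as stated above.
DLI_DEFAULT_CIDR = ipaddress.ip_network("172.16.0.0/18")


def overlaps_dli_default(vpc_cidr: str) -> bool:
    """Return True if the given VPC CIDR block overlaps the DLI default block."""
    return ipaddress.ip_network(vpc_cidr).overlaps(DLI_DEFAULT_CIDR)


if __name__ == "__main__":
    # Example CIDR blocks chosen for illustration only.
    for cidr in ("192.168.0.0/16", "172.16.0.0/16"):
        print(cidr, "overlaps" if overlaps_dli_default(cidr) else "does not overlap")
```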

Purchasing an ECS

- Purchase a Linux ECS. The ECS and the DLI resources you prepare later must be in the same region. For details, see Purchasing an ECS. Select the created VPC and subnet for the ECS. The ECS must:

- Be able to access the Internet and the domain names of MgC, IoTDA, and other cloud services. For details about the domain names to be accessed, see Domain Names.

- Allow outbound traffic on port 8883 if it is in a security group.

- Run CentOS 8.X.

- Have at least 8 vCPUs and 16 GB of memory.

- Configure an EIP for the ECS, so that the ECS can access the Internet. For details, see Assigning an EIP and Binding an EIP to an ECS.

- Billing Mode: Select Pay-per-use.

- Bandwidth: 5 Mbit/s is recommended.

Installing the MgC Agent (formerly Edge) and Connecting It to MgC

- On the purchased ECS, install the MgC Agent, which will be used for data verification. For details, see Installing the MgC Agent on Linux.

- Sign in to the MgC Agent console. In the address box of a browser, enter the NIC IP address of the Linux ECS and port 27080, for example, https://x.x.x.x:27080. Enter the AK/SK pair of your Huawei Cloud account to log in to the MgC Agent console.

- Connect the MgC Agent to MgC.

- When connecting the MgC Agent to MgC, save the entered AK/SK pair of your Huawei Cloud account as the target credentials, which will be used for migration later.

- The MgC Agent does not support automatic restart. Do not restart the MgC Agent during task execution, or tasks will fail.

Adding Credentials

On the MgC Agent console, add the AK/SK pairs of your Alibaba Cloud account and Huawei Cloud account. For details, see Adding Resource Credentials.

Creating an OBS Bucket and Uploading JAR Packages

Create a bucket on Huawei Cloud OBS and upload the Java files (in JAR packages) that data migration depends on to the bucket. For details about how to create an OBS bucket, see Creating a Bucket. For details about how to upload files, see Uploading an Object.

The JAR packages that data migration depends on are migration-dli-spark-1.0.0.jar, fastjson-1.2.54.jar, and datasource.jar. The following describes what each package is used for and how to obtain it.

- migration-dli-spark-1.0.0.jar

- This package is used to create Spark sessions and submit SQL statements.

- You can obtain the package from the /opt/cloud/MgC-Agent/tools/plugins/collectors/bigdata-migration/dliSpark directory on the ECS where the MgC Agent is installed.

- fastjson-1.2.54.jar

- This package provides fast JSON conversion.

- You can obtain the package from the /opt/cloud/MgC-Agent/tools/plugins/collectors/bigdata-migration/deltaSpark directory on the ECS where the MgC Agent is installed.

- datasource.jar

- This package contains the configuration and connection logic of data sources and allows the MgC Agent to connect to different databases or data storage systems.

- You need to obtain and compile files in datasource.jar as required.
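Before uploading, you may want to confirm that all three packages have been collected in one place. The following Python sketch assumes you have copied the packages into a local staging directory. That directory and the function names are hypothetical and not part of MgC.

```python
from pathlib import Path
from typing import Iterable

# The three packages named in this section.
REQUIRED_JARS = (
    "migration-dli-spark-1.0.0.jar",
    "fastjson-1.2.54.jar",
    "datasource.jar",
)


def missing_jars(present_names: Iterable[str]) -> list[str]:
    """Return the required JAR names that are not in present_names."""
    present = set(present_names)
    return [name for name in REQUIRED_JARS if name not in present]


def jar_names_in(staging_dir: str) -> list[str]:
    """List the file names of all .jar files directly inside staging_dir."""
    return [p.name for p in Path(staging_dir).glob("*.jar")]


if __name__ == "__main__":
    # "." is a placeholder; point this at your own staging directory.
    print("Missing:", missing_jars(jar_names_in(".")))
```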

Purchasing an Elastic Resource Pool and Adding a Queue on DLI

- Sign in to the DLI console. In the navigation pane on the left, choose Resources > Resource Pool.

- Click Buy Resource Pool.

An elastic resource pool provides compute resources (CPU and memory) required for running DLI jobs. For details about how to buy a resource pool, see Buying an Elastic Resource Pool.

- Create queues within the elastic resource pool.

You need to add a general-purpose queue and an SQL queue to the pool for running jobs. For more information, see Creating Queues in an Elastic Resource Pool.

Creating an Enhanced Datasource Connection on DLI

- Sign in to the DLI console. In the navigation pane on the left, choose Resources > Resource Pool.

- Click the expand icon next to the name of the elastic resource pool. In the expanded information, obtain the CIDR block of the elastic resource pool.

- For the security group of the ECS with the MgC Agent installed, add an inbound rule to allow access from the CIDR block of the elastic resource pool.

- Sign in to the ECS console.

- In the ECS list, click the name of the Linux ECS with the MgC Agent installed.

- Click the Security Groups tab and click Manage Rule.

- Under Inbound Rules, click Add Rule.

- Configure parameters for the inbound rule.

- Priority: Set it to 1.

- Action: Select Allow.

- Type: Select IPv4.

- Protocol & Port: Select Protocols/All.

- Source: Select IP Address and enter the CIDR block of the elastic resource pool.

- Click OK.

- Switch to the DLI console. In the navigation pane on the left, click Datasource Connections.

- Under Enhanced, click Create.

- Configure enhanced datasource connection information based on Table 1.

Table 1 Parameters for creating an enhanced connection

| Parameter | Configuration |
| --- | --- |
| Connection Name | User-defined |
| Resource Pool | Select the purchased elastic resource pool. |
| VPC | Select the created VPC. |
| Subnet | Select the created subnet. |
| Route Table | Retain the preset value. |
| Host Information | Add the information about the source MaxCompute hosts in the following formats: EndpointIP Endpoint (with a space in between) and TunnelEndpointIP TunnelEndpoint (with a space in between). |

Separate the two pieces of information by pressing Enter. For example:

118.178.xxx.xx service.cn-hangzhou.maxcompute.aliyun.com.vipgds.alibabadns.com

47.97.xxx.xx dt.cn-hangzhou.maxcompute.aliyun.com

To obtain the values of EndpointIP and TunnelEndpointIP, use any server with a public IP address to ping the endpoint and tunnel endpoint of the region where the source MaxCompute cluster is located. The corresponding IP addresses will display in the command output.

For details about MaxCompute endpoints and Tunnel endpoints, see Endpoints in different regions.

- Click OK. After the creation process is finished, the Connection Status of the newly created connection will be Active, indicating that the connection has been successfully created.
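As an alternative to reading the IP addresses from ping output, the Host Information lines can be assembled with a short script. The Python sketch below is an illustration under assumptions. The function names are hypothetical, the IP addresses in the usage example are reserved documentation addresses rather than real MaxCompute addresses, and the address a resolver returns may differ by location, so run the lookup from a server that resolves the endpoints the same way your migration path does.

```python
import ipaddress
import socket


def resolve_ipv4(hostname: str) -> str:
    """Resolve a host name to one IPv4 address (requires network access)."""
    return socket.gethostbyname(hostname)


def format_host_information(entries: list[tuple[str, str]]) -> str:
    """Build the Host Information text from (ip, hostname) pairs.

    Each pair becomes one line in the form "IP hostname", separated by a
    space, and the lines are separated by newlines.
    """
    lines = []
    for ip, hostname in entries:
        ipaddress.ip_address(ip)  # raises ValueError for a malformed address
        lines.append(f"{ip} {hostname.strip()}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Endpoint names taken from the example above; the lookup needs network access.
    hosts = [
        "service.cn-hangzhou.maxcompute.aliyun.com.vipgds.alibabadns.com",
        "dt.cn-hangzhou.maxcompute.aliyun.com",
    ]
    print(format_host_information([(resolve_ipv4(h), h) for h in hosts]))
```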

Adding and Configuring Routes

- Add routes.

Add two routes for the DLI enhanced datasource connection. For details, see Adding a Route. In the routes, the IP address must be the same as the host IP addresses configured during datasource connection creation.

- Configure routes.

- Sign in to the VPC console.

- In the navigation pane on the left, choose Virtual Private Cloud > Route Tables.

- In the route table list, click the route table used for creating a datasource connection (that is, the route table of the VPC where the ECS resides).

- Click Add Route.

- Set the parameters by following the instructions. You need to click the add icon to add two routes.

- Destination Type: Select IP address.

- Destination: Enter the host IP address configured during datasource connection creation.

- Next Hop Type: Select Server.

- Next Hop: Select the purchased ECS.
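Because the route destinations must match the host IP addresses entered in Host Information, a quick cross-check can catch typos. The following Python sketch compares the two lists. The function names and the sample addresses (reserved documentation addresses) are hypothetical.

```python
def host_ips(host_information: str) -> list[str]:
    """Extract the IP address (first field) from each Host Information line."""
    ips = []
    for line in host_information.splitlines():
        if line.strip():
            ips.append(line.split()[0])
    return ips


def missing_routes(host_information: str, route_destinations: list[str]) -> list[str]:
    """Return host IP addresses that have no route with a matching destination."""
    configured = set(route_destinations)
    return [ip for ip in host_ips(host_information) if ip not in configured]


if __name__ == "__main__":
    # Sample values for illustration only.
    info = "203.0.113.10 endpoint.example.com\n198.51.100.7 tunnel.example.com"
    print(missing_routes(info, ["203.0.113.10"]))
```

An empty result means every host IP address has a matching route destination.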

Configuring SNAT Rules

Configure SNAT rules for the ECS by following the instructions below. Restarting the ECS will clear the rules, so you will need to reconfigure them after a restart.

- Log in to the purchased ECS.

- Run the following commands in sequence:

sysctl net.ipv4.ip_forward=1

This command is used to enable IP forwarding in Linux.

iptables -t nat -A POSTROUTING -o eth0 -s {CIDR block where the DLI elastic resource pool resides} -j SNAT --to {Private IP address of the ECS}

This command is used to set an iptables rule for network address translation.
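Since the rules must be re-entered after every restart, it can be convenient to generate the two commands from your own values. The Python sketch below only builds the command strings and validates the addresses. It does not run anything. The function name snat_commands and the sample values are hypothetical, and eth0 is assumed as the interface name because it appears in the command above.

```python
import ipaddress


def snat_commands(pool_cidr: str, ecs_private_ip: str, interface: str = "eth0") -> list[str]:
    """Build the two commands from this section for the given values.

    pool_cidr: CIDR block where the DLI elastic resource pool resides.
    ecs_private_ip: private IP address of the ECS.
    """
    network = ipaddress.ip_network(pool_cidr)        # raises ValueError if invalid
    address = ipaddress.ip_address(ecs_private_ip)   # raises ValueError if invalid
    return [
        "sysctl net.ipv4.ip_forward=1",
        f"iptables -t nat -A POSTROUTING -o {interface} -s {network} -j SNAT --to {address}",
    ]


if __name__ == "__main__":
    # Sample values for illustration; replace them with your own.
    for command in snat_commands("172.16.0.0/18", "192.168.0.10"):
        print(command)
```

Review the printed commands and run them on the ECS as a user with sufficient privileges.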

Testing the Connectivity Between the DLI Queue and the Data Source

- Sign in to the DLI console. In the navigation pane on the left, choose Resources > Queue Management.

- Locate the elastic resource pool to which the DLI queue was added and choose More > Test Address Connectivity in the Operation column.

- Enter the endpoint, endpoint IP address, Tunnel endpoint, and Tunnel endpoint IP address of MaxCompute to perform four connectivity tests. You are advised to use port 443.

- Click Test.

If the test address is reachable, a message is displayed indicating that the address is reachable.

If the test address is unreachable, a message is displayed indicating that the address is unreachable. Check the network configurations, including the VPC peering connection and the datasource connection, make sure they are active, and try again.
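If the console test fails, one way to narrow down the cause is to check from the ECS itself whether the MaxCompute endpoints accept TCP connections on port 443, since traffic from the DLI queue is routed through the ECS. The Python sketch below is a separate check and not the DLI console test. The function name can_connect is hypothetical.

```python
import socket


def can_connect(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Endpoint names taken from the Host Information example on this page.
    for endpoint in (
        "service.cn-hangzhou.maxcompute.aliyun.com.vipgds.alibabadns.com",
        "dt.cn-hangzhou.maxcompute.aliyun.com",
    ):
        print(endpoint, "reachable" if can_connect(endpoint) else "unreachable")
```

If the ECS can reach the endpoints but the DLI test still fails, the routes, the SNAT rules, or the datasource connection are the more likely places to look.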

Adding to the DLI Spark 3.3 Whitelist and the JAR Program Whitelist for Metadata Access

Contact DLI technical support to be added to the whitelist for the DLI Spark 3.3 feature and to the whitelist that allows JAR programs to access DLI metadata.

(Optional) Enabling DLI Spark Lifecycle Whitelist

If the metadata to be migrated has a lifecycle (that is, the DDL contains the LIFECYCLE field), contact DLI technical support to enable the Spark lifecycle feature whitelist.

(Optional) Enabling the CIDR Block 100 Whitelist

If you use Direct Connect to migrate data, you need to ask VPC support to whitelist the 100.100.x.x CIDR block.

Submit a service ticket to the VPC service and provide the following information:

- Huawei Cloud account and project ID of the region where your DLI resources reside. For details about how to obtain them, see API Credentials.

- DLI tenant name and tenant project ID: Contact DLI technical support to obtain them.
