Buying a Kafka Instance

Kafka instances are tenant-exclusive and physically isolated in deployment. You can customize the computing capabilities and storage space of a Kafka instance as required.

Video Tutorial

This video shows how to customize a Kafka instance.

Preparing Instance Dependencies

Before creating a Kafka instance, prepare the resources listed in Table 1.

Table 1 Kafka instance dependencies

| Resource | Requirement | Operations |
|---|---|---|
| VPC and subnet | You need to configure a VPC and subnet for the Kafka instance. You can use an existing VPC and subnet of the current account, use shared ones, or create new ones. VPC owners can share the subnets in a VPC with one or more accounts through Resource Access Manager (RAM), which makes it easy to configure, operate, and manage resources across multiple accounts at low cost. For more information about VPC and subnet sharing, see VPC Sharing. Note the following when creating a VPC and a subnet: | For details on how to create a VPC and a subnet, see Creating a VPC and Subnet. To create and use a new subnet in an existing VPC, see Creating a Subnet for an Existing VPC. |
| Security group | Different Kafka instances can use the same or different security groups. The security group must be in the same region as the Kafka instance. Before accessing a Kafka instance, configure security groups based on the access mode. For details, see Table 2. | For details on how to create a security group, see Creating a Security Group. For details on how to add rules to a security group, see Adding a Security Group Rule. |
| EIP | To access a Kafka instance on a client over a public network, create EIPs in advance. Note the following when creating EIPs: | For details about how to create an EIP, see Assigning an EIP. |

Notes and Constraints

- SASL_SSL cannot be manually configured for instances with IPv6 enabled.

- Ciphertext access and Smart Connect are unavailable for single-node instances.

Buying a Kafka instance

DMS for Kafka provides multiple options for you to purchase Kafka instances.

| How to Purchase | Scenario |
|---|---|
| Quickly configuring a cluster Kafka instance | For a quick purchase, DMS for Kafka offers preconfigured instance specifications. |
| Customizing a single-node/cluster Kafka instance | For a standard purchase, you can customize a single-node or cluster Kafka instance as required. |

Quick Config of a Cluster Kafka Instance

- Go to the Buy Instance page.

- Set basic instance configurations on the Quick Config page.

Table 3 Basic instance configuration parameters

Parameter

Description

Billing Mode

- Yearly/Monthly is a prepaid mode. You pay in advance and are billed for your subscription period.

- Pay-per-use is a postpaid mode. You pay after using the service and are billed for your usage duration. Fees are calculated per second and settled hourly.

Region

DMS for Kafka instances in different regions cannot communicate with each other over an intranet. Select the nearest region for low latency and fast access.

AZ

An AZ is a physical location with independent power supply and networks. AZs are physically isolated but interconnected through an internal network.

Select one AZ or at least three AZs. The AZ setting is fixed once the instance is created.

- Select the bundle.

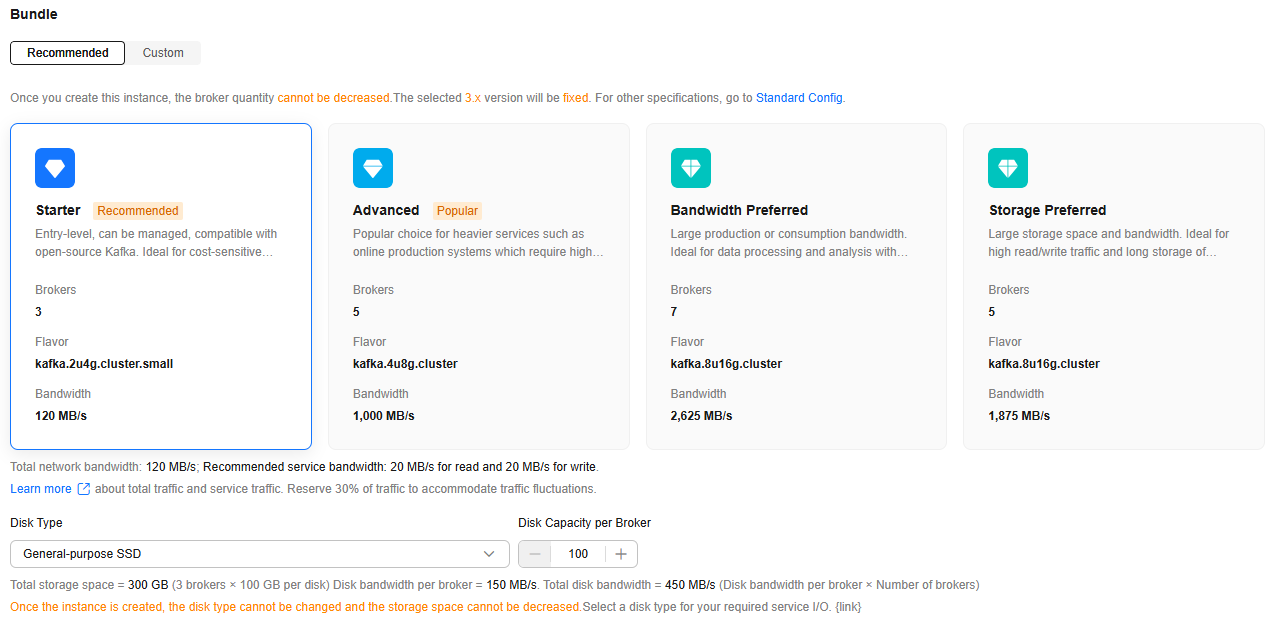

- Recommended: Select a preset DMS for Kafka bundle as required. Specify the disk type and capacity as required. The disk type cannot be changed once the Kafka instance is created.

The storage space is consumed by message replicas, logs, and metadata. Size the storage based on the expected volume of service messages, the number of replicas, and the reserved disk space. Each Kafka broker reserves 33 GB of disk space for storing logs and metadata.

Disks are formatted when an instance is created. As a result, the actual available disk space is 93% to 95% of the total disk space.

Supported disk types are high I/O, ultra-high I/O, Extreme SSD, and General Purpose SSD. For more information, see Disk Types and Performance.

Figure 1 Recommended

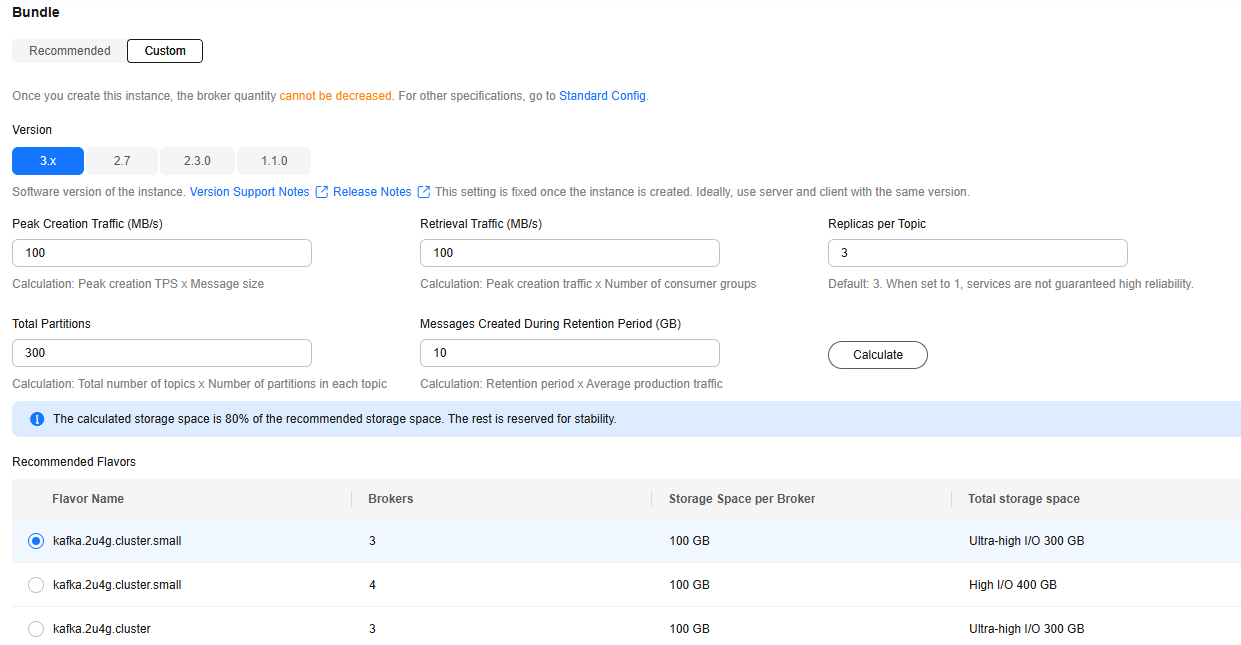

- Custom: The system calculates Brokers and Storage Space per Broker, and provides Recommended Specifications based on your selected version and specified parameters: Peak Creation Traffic, Retrieval Traffic, Replicas per Topic, Total Partitions, and Messages Created During Retention Period.

Figure 2 Specification calculation
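The storage guidance for the Recommended bundle can be turned into a rough per-broker estimate. The following is a minimal sketch, not the console's Custom calculator: it assumes retained message data is spread evenly across brokers, that every replica is stored in full, that the 33 GB reserve comes out of usable space, and it uses the 93% lower bound for usable space after formatting. The function name is hypothetical.

```python
import math

RESERVED_GB_PER_BROKER = 33  # reserved per broker for logs and metadata
MIN_USABLE_RATIO = 0.93      # formatting leaves 93%-95% usable; take the lower bound


def estimate_disk_per_broker_gb(retained_data_gb: float, replicas: int, brokers: int) -> int:
    """Rough disk capacity (whole GB) to provision per broker.

    Assumptions (not from the service): retained_data_gb is the message volume kept
    during the retention period before replication, spread evenly across brokers.
    """
    if brokers <= 0 or replicas <= 0:
        raise ValueError("brokers and replicas must be positive")
    if replicas > brokers:
        raise ValueError("each replica of a partition needs its own broker")
    replicated_per_broker = retained_data_gb * replicas / brokers
    usable_needed = replicated_per_broker + RESERVED_GB_PER_BROKER
    # Scale up so that the usable portion left after formatting covers the need.
    return math.ceil(usable_needed / MIN_USABLE_RATIO)


# 600 GB retained, 3 replicas, 3 brokers: 600 GB of replicas + 33 GB reserve per broker
print(estimate_disk_per_broker_gb(600, 3, 3))  # → 681
```

Leaving extra headroom on top of this estimate is sensible, because the capacity threshold policy is triggered once disk usage reaches 95%.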


- Set the network information.

Table 4 Instance network parameters

Parameter

Description

VPC

Select a created or shared VPC.

A VPC provides an isolated virtual network for your Kafka instances. You can configure and manage the network as required. To create a VPC, click Create VPC on the right. The Create VPC dialog box is displayed. For details, see Creating a VPC.

After the Kafka instance is created, its VPC cannot be changed.

Subnet

Select a created or shared subnet. To create a subnet, click Create Subnet on the right. The Create Subnet dialog box is displayed. For details, see Creating a Subnet for an Existing VPC.

After the Kafka instance is created, its subnet cannot be changed.

The Kafka instance supports IPv6 only if IPv6 is enabled for the subnet.

IPv6

This parameter is displayed after IPv6 is enabled for the subnet. The instance with IPv6 enabled can be accessed on a client using IPv6 addresses.

SASL_SSL cannot be manually configured for instances with IPv6 enabled.

The IPv6 setting is fixed once the instance is created.

IPv6 is available in the CN East2, CN South-Guangzhou, and CN East-Shanghai1 regions.

Private IP Addresses

Select Auto or Manual.

- Auto: The system automatically assigns an IP address from the subnet.

- Manual: Select IP addresses from the drop-down list. If the number of selected IP addresses is less than the number of brokers, the remaining IP addresses will be automatically assigned.

This parameter is not displayed when IPv6 is enabled.

Security Group

Select a created security group.

A security group is a set of rules for accessing a Kafka instance. You can click Create Security Group on the right. The Create Security Group dialog box is displayed. Set security group parameters by referring to Creating a Security Group.

Before accessing a Kafka instance on the client, configure security group rules based on the access mode. For details about security group rules, see Table 2.

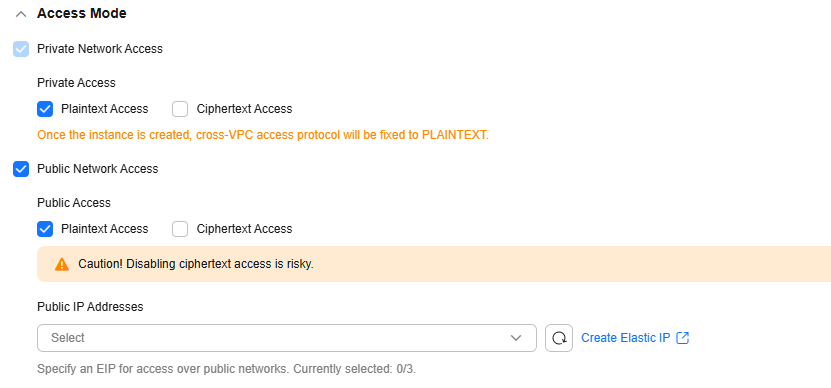

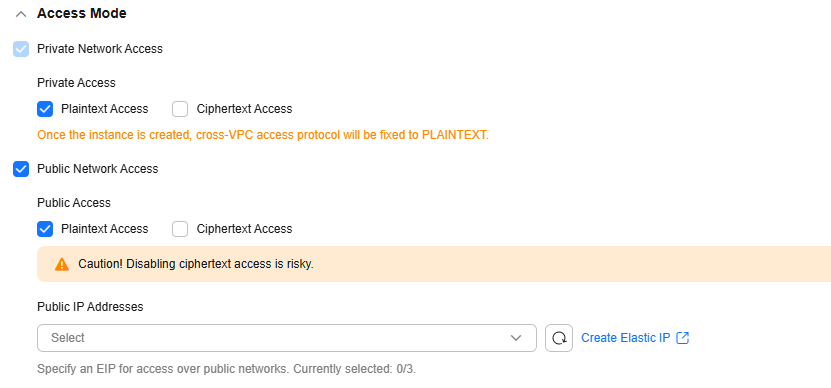
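The Manual option for Private IP Addresses fills any shortfall automatically. The following is a minimal sketch of that rule, assuming the automatic step simply takes the lowest free host addresses in the subnet; the real allocation order is not described on this page, and the function name and example addresses are hypothetical.

```python
import ipaddress
from typing import Iterable, List


def assign_private_ips(brokers: int, manual_ips: Iterable[str], subnet_cidr: str,
                       in_use: Iterable[str] = ()) -> List[str]:
    """Return one private IP per broker: manual picks first, then automatic fill."""
    network = ipaddress.ip_network(subnet_cidr)
    chosen = [ipaddress.ip_address(ip) for ip in manual_ips]
    if len(chosen) > brokers:
        raise ValueError("more manual IP addresses than brokers")
    if len(set(chosen)) != len(chosen):
        raise ValueError("duplicate manual IP addresses")
    for ip in chosen:
        if ip not in network:
            raise ValueError(f"{ip} is outside subnet {network}")

    # Assumed automatic step: lowest free host addresses, skipping those in use.
    taken = set(chosen) | {ipaddress.ip_address(ip) for ip in in_use}
    for host in network.hosts():
        if len(chosen) == brokers:
            break
        if host not in taken:
            chosen.append(host)
            taken.add(host)

    if len(chosen) < brokers:
        raise ValueError("not enough free addresses in the subnet")
    return [str(ip) for ip in chosen]


# Three brokers, one manual pick; .1 is already in use (for example, by a gateway).
print(assign_private_ips(3, ["192.168.0.10"], "192.168.0.0/24", in_use=["192.168.0.1"]))
# → ['192.168.0.10', '192.168.0.2', '192.168.0.3']
```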

- Configure the instance access mode.

Figure 3 Instance access mode

Table 5 Instance access mode parameters

Parameter

Sub-Parameter

Description

Private Network Access

Access Method

There are two methods:

- Plaintext access: Clients connect to the Kafka instance without SASL authentication.

- Ciphertext access: Clients connect to the Kafka instance with SASL authentication.

To enable Ciphertext Access, specify the security protocol, SSL username, password, and SASL/PLAIN setting.

Once enabled, private network access cannot be disabled. Enable plaintext or ciphertext access, or both.

The access method determines the cross-VPC access protocol as follows:

- When Plaintext Access is enabled and Ciphertext Access is disabled, PLAINTEXT is used for Cross-VPC Access Protocol.

- When Ciphertext Access is enabled and Security Protocol is SASL_SSL, SASL_SSL is used for Cross-VPC Access Protocol.

- When Ciphertext Access is enabled and Security Protocol is SASL_PLAINTEXT, SASL_PLAINTEXT is used for Cross-VPC Access Protocol.

Fixed once the instance is created.

Public Network Access

Access Method

There are two methods:

- Plaintext access: Clients connect to the Kafka instance without SASL authentication.

- Ciphertext access: Clients connect to the Kafka instance with SASL authentication.

To enable Ciphertext Access, specify the security protocol, SSL username, password, and SASL/PLAIN setting.

After public access is enabled, enable plaintext or ciphertext access, or both.

Public IP Addresses

Select the number of public IP addresses as required.

If EIPs are insufficient, click Create Elastic IP to create EIPs. Then, return to the Kafka instance purchase page and click the refresh icon next to Public IP Address to refresh the public IP address list.

Kafka instances support only IPv4 EIPs.
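The three rules that map the private network access method to the Cross-VPC Access Protocol can be written as a small function. This is a minimal sketch with a hypothetical function name. When plaintext and ciphertext access are both enabled, it follows the ciphertext rules, since those rules do not depend on plaintext access; that is a reading of the text above, not a confirmed service behavior.

```python
from typing import Optional

SASL_PROTOCOLS = ("SASL_SSL", "SASL_PLAINTEXT")


def cross_vpc_protocol(plaintext: bool, ciphertext: bool,
                       security_protocol: Optional[str] = None) -> str:
    """Derive the Cross-VPC Access Protocol from the private network access settings."""
    if ciphertext:
        # With ciphertext access enabled, the selected security protocol is used.
        if security_protocol not in SASL_PROTOCOLS:
            raise ValueError("ciphertext access needs SASL_SSL or SASL_PLAINTEXT")
        return security_protocol
    if plaintext:
        return "PLAINTEXT"
    raise ValueError("enable plaintext access, ciphertext access, or both")


print(cross_vpc_protocol(plaintext=True, ciphertext=False))   # → PLAINTEXT
print(cross_vpc_protocol(plaintext=False, ciphertext=True,
                         security_protocol="SASL_PLAINTEXT"))  # → SASL_PLAINTEXT
```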

The Kafka security protocol, SSL username, password, and SASL/PLAIN mechanism are described as follows.

Table 6 Ciphertext access parameters

Parameter

Value

Description

Security Protocol

SASL_SSL

SASL is used for authentication. Data is encrypted with SSL certificates for high-security transmission.

SASL_PLAINTEXT

SASL is used for authentication. Data is transmitted in plaintext for high performance.

SCRAM-SHA-512 authentication is recommended for plaintext transmission.

SSL Username

-

Username for a client to connect to a Kafka instance.

A username should contain 4 to 64 characters, start with a letter, and contain only letters, digits, hyphens (-), and underscores (_).

The username cannot be changed once ciphertext access is enabled.

Password

-

Password for a client to connect to a Kafka instance.

A password must meet the following requirements:

- Contains 8 to 32 characters.

- Contains at least three types of the following characters: uppercase letters, lowercase letters, digits, and special characters `~!@#$%^&*()-_=+\|[{}];:'",<.>? and spaces, and cannot start with a hyphen (-).

- Cannot be the username spelled forward or backward.

SASL Mechanism

-

- If PLAIN is disabled, the SCRAM-SHA-512 mechanism is used for username and password authentication.

- If PLAIN is enabled, both the SCRAM-SHA-512 and PLAIN mechanisms are supported. You can select either of them as required.

The SASL/PLAIN setting cannot be changed once ciphertext access is enabled.

What are SCRAM-SHA-512 and PLAIN mechanisms?

- SCRAM-SHA-512: uses a hash algorithm to generate credentials from usernames and passwords for identity verification. SCRAM-SHA-512 is more secure than PLAIN.

- PLAIN: a simple username and password verification mechanism.
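The username and password rules in the table above can be checked locally before submitting the form. This is a minimal sketch with hypothetical function names; the console performs its own validation. One assumption is labeled in the code: characters outside the listed letter, digit, and special-character sets are treated as invalid.

```python
import re

USERNAME_RE = re.compile(r"[A-Za-z][A-Za-z0-9_-]{3,63}")  # 4-64 chars, starts with a letter
SPECIAL_CHARS = set("`~!@#$%^&*()-_=+\\|[{}];:'\",<.>? ")  # listed specials plus space


def valid_username(username: str) -> bool:
    return USERNAME_RE.fullmatch(username) is not None


def char_type(c: str) -> str:
    if "A" <= c <= "Z":
        return "upper"
    if "a" <= c <= "z":
        return "lower"
    if "0" <= c <= "9":
        return "digit"
    if c in SPECIAL_CHARS:
        return "special"
    return "other"


def valid_password(password: str, username: str) -> bool:
    if not 8 <= len(password) <= 32:
        return False
    if password.startswith("-"):
        return False
    if password in (username, username[::-1]):  # username forward or backward
        return False
    types = {char_type(c) for c in password}
    if "other" in types:  # assumption: unlisted characters are rejected
        return False
    return len(types) >= 3


print(valid_username("kafka_user-01"))                  # → True
print(valid_password("Kafka@2024", "kafka_user-01"))    # → True
print(valid_password("kafka1234", "kafka_user-01"))     # → False (only two character types)
```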

- Configure advanced settings.

Table 7 Advanced configuration parameters

Parameter

Description

Instance Name

You can customize a name that complies with the rules: 4–64 characters; starts with a letter; can contain only letters, digits, hyphens (-), and underscores (_).

Enterprise Project

This parameter is for enterprise users.

Enterprise projects facilitate project-level management and grouping of cloud resources and users. The default project is default.

Capacity Threshold Policy

Specify how messages are processed when the disk usage threshold (95%) is reached.

- Automatically delete: Messages can still be produced and consumed, but the earliest 10% of messages are deleted to free up disk space. This policy suits scenarios where no service interruption can be tolerated. Data may be lost.

- Stop production: New messages cannot be produced, but existing messages can still be consumed. This policy is suitable for scenarios where no data loss can be tolerated.

Smart Connect

Configure Smart Connect.

Smart Connect is used for data synchronization between heterogeneous systems. You can configure Smart Connect tasks to synchronize data between Kafka and another cloud service or between two Kafka instances.

Enabling Smart Connect creates two brokers.

Automatic Topic Creation

Enable automatic Kafka topic creation if needed.

If this option is enabled, a topic will be automatically created when a message is produced in or consumed from a topic that does not exist. The default topic parameters are listed in Table 8.

- For cluster instances, if you change the log.retention.hours (retention period), default.replication.factor (replica quantity), or num.partitions (partition quantity) parameter, the new value applies to topics that are automatically created afterward. For example, if num.partitions is changed to 5, automatically created topics have the parameters listed in the Modified To (Cluster) column of Table 8.

- Unavailable for single-node instances.

Tags

Tags are used to identify cloud resources. When you have multiple cloud resources of the same type, you can use tags to classify them based on usage, owner, or environment.

If your organization has configured tag policies for DMS for Kafka, add tags to Kafka instances based on the policies. If a tag does not comply with the policies, Kafka instance creation may fail. Contact your organization administrator to learn more about tag policies.

- If you have predefined tags, select a predefined pair of tag key and value. You can click Create predefined tags to go to the Tag Management Service (TMS) console and view or create tags.

- You can also create new tags by specifying Tag key and Tag value.

Up to 20 tags can be added to each Kafka instance. For details about the requirements on tags, see Configuring Kafka Instance Tags.

Description

Enter a description of the instance (0–1024 characters).
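The limits in the advanced settings (instance name format, description length, and at most 20 tags) can be pre-checked as shown below. This is a minimal sketch with a hypothetical data class and function name; tag key and value format rules are described in Configuring Kafka Instance Tags and are not modeled here.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List

NAME_RE = re.compile(r"[A-Za-z][A-Za-z0-9_-]{3,63}")  # 4-64 chars, starts with a letter
MAX_DESCRIPTION_CHARS = 1024
MAX_TAGS = 20


@dataclass
class AdvancedSettings:
    name: str
    description: str = ""
    tags: Dict[str, str] = field(default_factory=dict)  # tag key -> tag value


def check_advanced_settings(s: AdvancedSettings) -> List[str]:
    """Return a list of rule violations; an empty list means no known problem."""
    problems = []
    if NAME_RE.fullmatch(s.name) is None:
        problems.append("name: 4-64 characters, start with a letter, only letters, digits, - and _")
    if len(s.description) > MAX_DESCRIPTION_CHARS:
        problems.append("description: at most 1024 characters")
    if len(s.tags) > MAX_TAGS:
        problems.append("tags: at most 20 per instance")
    return problems


print(check_advanced_settings(AdvancedSettings(name="kafka-prod-01", tags={"env": "prod"})))
# → []
```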

Table 8 Topic parameters

| Parameter | Default Value (Single-node) | Default Value (Cluster) | Modified To (Cluster) |
|---|---|---|---|
| Partitions | 1 | 3 | 5 |
| Replicas | 1 | 3 | 3 |
| Aging Time (h) | 72 | 72 | 72 |
| Synchronous Replication | Disabled | Disabled | Disabled |
| Synchronous Flushing | Disabled | Disabled | Disabled |
| Message Timestamp | CreateTime | CreateTime | CreateTime |
| Max. Message Size (bytes) | 10,485,760 | 10,485,760 | 10,485,760 |
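How the cluster defaults in Table 8 combine with modified instance parameters for automatically created topics can be sketched as follows. This is a minimal model with hypothetical names, covering only the three parameters the text says carry over (num.partitions, default.replication.factor, log.retention.hours). Automatic topic creation is unavailable for single-node instances, so only the cluster column is modeled.

```python
from dataclasses import dataclass, replace
from typing import Dict, Optional


@dataclass(frozen=True)
class TopicSettings:
    partitions: int
    replicas: int
    aging_time_hours: int
    synchronous_replication: bool = False
    synchronous_flushing: bool = False
    message_timestamp: str = "CreateTime"
    max_message_bytes: int = 10_485_760


# Default Value (Cluster) column of Table 8
CLUSTER_DEFAULTS = TopicSettings(partitions=3, replicas=3, aging_time_hours=72)

# Instance parameters that flow into automatically created topics
PARAM_TO_FIELD = {
    "num.partitions": "partitions",
    "default.replication.factor": "replicas",
    "log.retention.hours": "aging_time_hours",
}


def auto_created_topic(modified: Optional[Dict[str, int]] = None) -> TopicSettings:
    """Settings of a topic auto-created on a cluster instance after parameter changes."""
    overrides = {}
    for param, value in (modified or {}).items():
        if param not in PARAM_TO_FIELD:
            raise KeyError(f"{param} is not modeled in this sketch")
        overrides[PARAM_TO_FIELD[param]] = int(value)
    return replace(CLUSTER_DEFAULTS, **overrides)


topic = auto_created_topic({"num.partitions": 5})
print(topic.partitions, topic.replicas, topic.aging_time_hours)  # → 5 3 72
```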

- Specify the required duration.

This parameter is displayed only if the billing mode is yearly/monthly. If Auto-renew is selected, the instance will be renewed automatically.

- Monthly subscriptions auto-renew for 1 month each time.

- Yearly subscriptions auto-renew for 1 year each time.

- Click Confirm.

- Confirm the instance information, and read and agree to the Huawei Cloud Customer Agreement. If you have selected the yearly/monthly billing mode, click Pay Now and make the payment as prompted. If you have selected the pay-per-use mode, click Submit.

- Return to the instance list and check whether the Kafka instance has been created.

It takes 3 to 15 minutes to create an instance. During this period, the instance status is Creating.

- If the instance is created successfully, its status changes to Running.

- If the instance is in the Failed state, delete it by referring to Deleting Kafka Instances and try creating another one. If the instance creation fails again, contact customer service.

Instances that fail to be created do not occupy other resources.

Standard Config of a Single-node/Cluster Kafka Instance

- Go to the Buy Instance page.

- Set basic instance configurations on the Standard Config page.

Table 9 Basic instance configuration parameters

Parameter

Description

Billing Mode

- Yearly/Monthly is a prepaid mode. You pay in advance and are billed for your subscription period.

- Pay-per-use is a postpaid mode. You pay after using the service and are billed for your usage duration. Fees are calculated per second and settled hourly.

Region

DMS for Kafka instances in different regions cannot communicate with each other over an intranet. Select the nearest region for low latency and fast access.

AZ

An AZ is a physical location with independent power supply and networks. AZs are physically isolated but interconnected through an internal network.

Select one AZ or at least three AZs. The AZ setting is fixed once the instance is created.

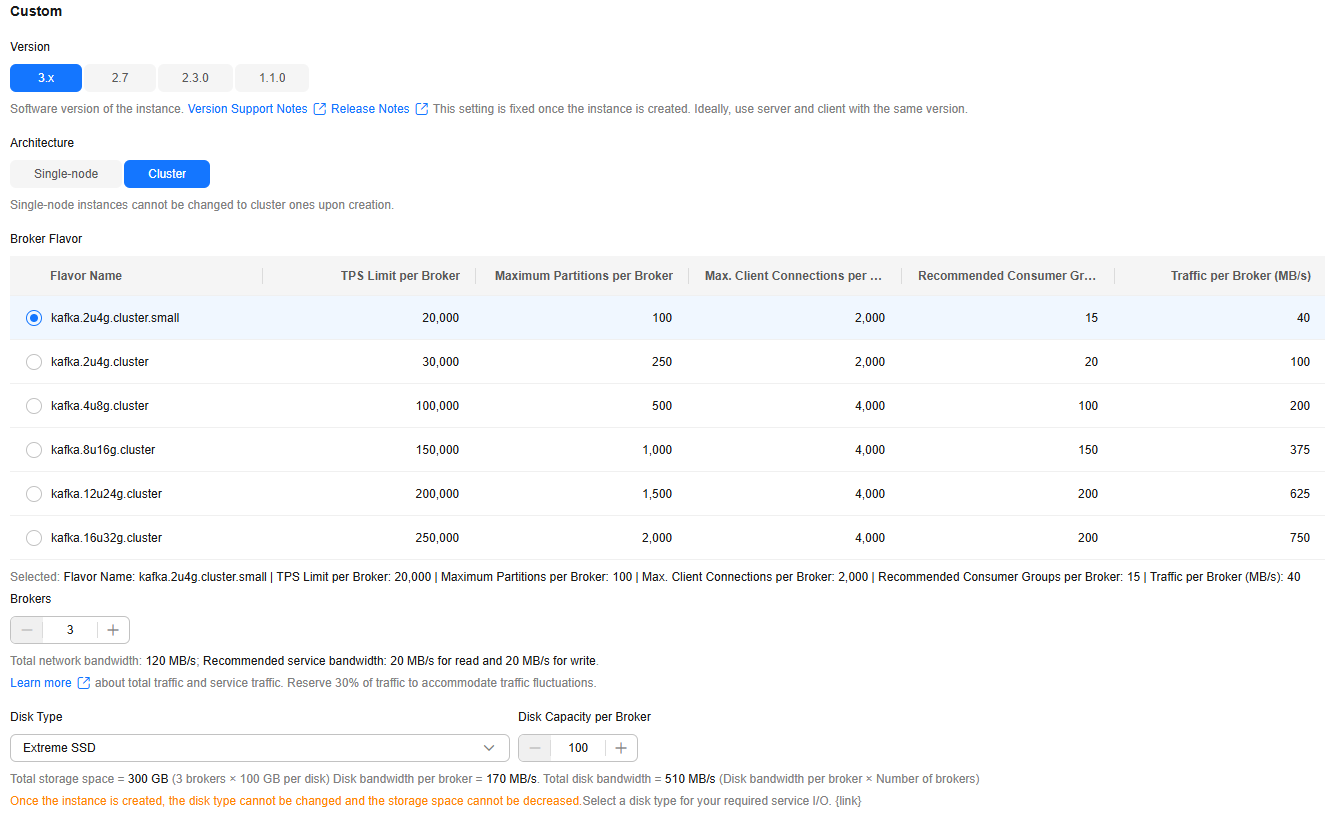

- Configure the following instance specifications:

Table 10 Instance specifications parameters

Parameter

Description

Version

Kafka version, which can be 1.1.0, 2.7, or 3.x.

The version is fixed once the instance is created.

Architecture

Select Single-node or Cluster as required.

Single-node instances are available only in v2.7. See Comparing Single-node and Cluster Kafka Instances.

Broker Flavor

Select a broker flavor as required.

Maximum number of partitions of an instance = Maximum number of partitions per broker × Number of brokers. If creating a topic would make the total number of partitions across all topics exceed this limit, topic creation fails.

Brokers

Specify the broker quantity.

Disk Type

Select the disk type for Kafka data storage. The disk type cannot be changed once the Kafka instance is created.

Disk Capacity per Broker

Specify the disk size for Kafka data storage.

The storage space is consumed by message replicas, logs, and metadata. Size the storage based on the expected volume of service messages, the number of replicas, and the reserved disk space. Each Kafka broker reserves 33 GB of disk space for storing logs and metadata.

Disks are formatted when an instance is created. As a result, the actual available disk space is 93% to 95% of the total disk space.

Supported disk types are high I/O, ultra-high I/O, Extreme SSD, and General Purpose SSD. For more information, see Disk Types and Performance.

Figure 4 Instance flavor
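The partition limit under Broker Flavor is a product, and a new topic is rejected if it would push the total past that product. This is a minimal sketch with hypothetical function names; the per-broker limit depends on the flavor you select, so the value 250 below is only an illustrative assumption.

```python
from typing import Sequence


def max_instance_partitions(max_partitions_per_broker: int, brokers: int) -> int:
    # Maximum partitions of an instance = maximum partitions per broker x number of brokers
    return max_partitions_per_broker * brokers


def topic_creation_allowed(existing_partitions: Sequence[int], new_topic_partitions: int,
                           max_partitions_per_broker: int, brokers: int) -> bool:
    """True if adding the new topic keeps the total partition count within the limit."""
    total = sum(existing_partitions) + new_topic_partitions
    return total <= max_instance_partitions(max_partitions_per_broker, brokers)


# Hypothetical flavor allowing 250 partitions per broker, with 3 brokers
print(max_instance_partitions(250, 3))                 # → 750
print(topic_creation_allowed([300, 400], 50, 250, 3))  # → True (exactly 750)
print(topic_creation_allowed([300, 400], 51, 250, 3))  # → False (751 exceeds 750)
```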

- Set the network information.

Table 11 Instance network parameters

Parameter

Description

VPC

Select a created or shared VPC.

A VPC provides an isolated virtual network for your Kafka instances. You can configure and manage the network as required. To create a VPC, click Create VPC on the right. The Create VPC dialog box is displayed. For details, see Creating a VPC.

After the Kafka instance is created, its VPC cannot be changed.

Subnet

Select a created or shared subnet. To create a subnet, click Create Subnet on the right. The Create Subnet dialog box is displayed. For details, see Creating a Subnet for an Existing VPC.

After the Kafka instance is created, its subnet cannot be changed.

The Kafka instance supports IPv6 only if IPv6 is enabled for the subnet.

IPv6

This parameter is displayed after IPv6 is enabled for the subnet. The instance with IPv6 enabled can be accessed on a client using IPv6 addresses.

SASL_SSL cannot be manually configured for instances with IPv6 enabled.

The IPv6 setting is fixed once the instance is created.

IPv6 is available in the CN East2, CN South-Guangzhou, and CN East-Shanghai1 regions.

Private IP Addresses

Select Auto or Manual.

- Auto: The system automatically assigns an IP address from the subnet.

- Manual: Select IP addresses from the drop-down list. If the number of selected IP addresses is less than the number of brokers, the remaining IP addresses will be automatically assigned.

This parameter is not displayed when IPv6 is enabled.

Security Group

Select a created security group.

A security group is a set of rules for accessing a Kafka instance. You can click Create Security Group on the right. The Create Security Group dialog box is displayed. Set security group parameters by referring to Creating a Security Group.

Before accessing a Kafka instance on the client, configure security group rules based on the access mode. For details about security group rules, see Table 2.

- Configure the instance access mode.

Figure 5 Instance access mode

Table 12 Instance access mode parameters

Parameter

Sub-Parameter

Description

Private Network Access

Access Method

There are two methods:

- Plaintext access: Clients connect to the Kafka instance without SASL authentication.

- Ciphertext access: Clients connect to the Kafka instance with SASL authentication.

To enable Ciphertext Access, specify the security protocol, SSL username, password, and SASL/PLAIN setting.

Once enabled, private network access cannot be disabled. Enable plaintext or ciphertext access, or both.

Ciphertext access is unavailable for single-node instances.

The access method determines the cross-VPC access protocol as follows:

- When Plaintext Access is enabled and Ciphertext Access is disabled, PLAINTEXT is used for Cross-VPC Access Protocol.

- When Ciphertext Access is enabled and Security Protocol is SASL_SSL, SASL_SSL is used for Cross-VPC Access Protocol.

- When Ciphertext Access is enabled and Security Protocol is SASL_PLAINTEXT, SASL_PLAINTEXT is used for Cross-VPC Access Protocol.

Fixed once the instance is created.

Public Network Access

Access Method

There are two methods:

- Plaintext access: Clients connect to the Kafka instance without SASL authentication.

- Ciphertext access: Clients connect to the Kafka instance with SASL authentication.

To enable Ciphertext Access, specify the security protocol, SSL username, password, and SASL/PLAIN setting.

After public access is enabled, enable plaintext or ciphertext access, or both.

Ciphertext access is unavailable for single-node instances.

Public IP Addresses

Select the number of public IP addresses as required.

If EIPs are insufficient, click Create Elastic IP to create EIPs. Then, return to the Kafka instance purchase page and click the refresh icon next to Public IP Address to refresh the public IP address list.

Kafka instances support only IPv4 EIPs.
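Several constraints on this page interact: the AZ count, the single-node restrictions, the IPv6 and SASL_SSL restriction, and the requirement to enable at least one private network access method. The following is a minimal pre-check sketch that collects them in one place. The data class, its field names, and the version strings are hypothetical, and the list covers only rules stated on this page, not every rule the console enforces.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class InstancePlan:
    version: str                     # e.g. "1.1.0", "2.7", or "3.x"
    architecture: str                # "single-node" or "cluster"
    az_count: int
    ipv6: bool = False
    private_plaintext: bool = True
    private_ciphertext: bool = False
    public_ciphertext: bool = False
    security_protocol: Optional[str] = None  # "SASL_SSL" or "SASL_PLAINTEXT"
    smart_connect: bool = False
    auto_topic_creation: bool = False


def plan_violations(p: InstancePlan) -> List[str]:
    """Collect rules from this page that the plan breaks (empty list = no known conflict)."""
    problems = []
    single = p.architecture == "single-node"
    ciphertext = p.private_ciphertext or p.public_ciphertext
    if not (p.az_count == 1 or p.az_count >= 3):
        problems.append("select one AZ or at least three AZs")
    if single and p.version != "2.7":
        problems.append("single-node instances are available only in v2.7")
    if not (p.private_plaintext or p.private_ciphertext):
        problems.append("private network access needs plaintext access, ciphertext access, or both")
    if single and ciphertext:
        problems.append("ciphertext access is unavailable for single-node instances")
    if single and p.smart_connect:
        problems.append("Smart Connect is unavailable for single-node instances")
    if single and p.auto_topic_creation:
        problems.append("automatic topic creation is unavailable for single-node instances")
    if p.ipv6 and ciphertext and p.security_protocol == "SASL_SSL":
        problems.append("SASL_SSL cannot be manually configured when IPv6 is enabled")
    return problems


print(plan_violations(InstancePlan(version="2.7", architecture="single-node",
                                   az_count=1, smart_connect=True)))
# → ['Smart Connect is unavailable for single-node instances']
```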

The Kafka security protocol, SSL username, password, and SASL/PLAIN mechanism are described as follows.

Table 13 Ciphertext access parameters

Parameter

Value

Description

Security Protocol

SASL_SSL

SASL is used for authentication. Data is encrypted with SSL certificates for high-security transmission.

SASL_PLAINTEXT

SASL is used for authentication. Data is transmitted in plaintext for high performance.

SCRAM-SHA-512 authentication is recommended for plaintext transmission.

SSL Username

-

Username for a client to connect to a Kafka instance.

A username should contain 4 to 64 characters, start with a letter, and contain only letters, digits, hyphens (-), and underscores (_).

The username cannot be changed once ciphertext access is enabled.

Password

-

Password for a client to connect to a Kafka instance.

A password must meet the following requirements:

- Contains 8 to 32 characters.

- Contains at least three types of the following characters: uppercase letters, lowercase letters, digits, and special characters `~!@#$%^&*()-_=+\|[{}];:'",<.>? and spaces, and cannot start with a hyphen (-).

- Cannot be the username spelled forward or backward.

SASL Mechanism

-

- If PLAIN is disabled, the SCRAM-SHA-512 mechanism is used for username and password authentication.

- If PLAIN is enabled, both the SCRAM-SHA-512 and PLAIN mechanisms are supported. You can select either of them as required.

The SASL/PLAIN setting cannot be changed once ciphertext access is enabled.

What are SCRAM-SHA-512 and PLAIN mechanisms?

- SCRAM-SHA-512: uses a hash algorithm to generate credentials from usernames and passwords for identity verification. SCRAM-SHA-512 is more secure than PLAIN.

- PLAIN: a simple username and password verification mechanism.
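On the client side, the security protocol, SSL username, password, and SASL mechanism from the table above become client properties. The following is a minimal sketch that builds a property dictionary using librdkafka configuration names (bootstrap.servers, security.protocol, sasl.mechanism, sasl.username, sasl.password, ssl.ca.location), as accepted by the confluent-kafka Python client. The function name, broker addresses, port, and CA certificate path are hypothetical; use the connection addresses and certificate provided for your own instance.

```python
from typing import Dict, Optional


def sasl_client_config(bootstrap_servers: str, security_protocol: str,
                       username: str, password: str,
                       mechanism: str = "SCRAM-SHA-512", plain_enabled: bool = False,
                       ca_location: Optional[str] = None) -> Dict[str, str]:
    """Build librdkafka-style client properties for ciphertext access."""
    if security_protocol not in ("SASL_SSL", "SASL_PLAINTEXT"):
        raise ValueError("security protocol must be SASL_SSL or SASL_PLAINTEXT")
    if mechanism not in ("SCRAM-SHA-512", "PLAIN"):
        raise ValueError("mechanism must be SCRAM-SHA-512 or PLAIN")
    if mechanism == "PLAIN" and not plain_enabled:
        # Without SASL/PLAIN enabled on the instance, only SCRAM-SHA-512 is used.
        raise ValueError("PLAIN requires SASL/PLAIN to be enabled on the instance")
    config = {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": security_protocol,
        "sasl.mechanism": mechanism,
        "sasl.username": username,
        "sasl.password": password,
    }
    if security_protocol == "SASL_SSL" and ca_location:
        # CA certificate used to verify the brokers' SSL certificates (path is an assumption).
        config["ssl.ca.location"] = ca_location
    return config


conf = sasl_client_config("192.0.2.10:9093,192.0.2.11:9093", "SASL_SSL",
                          "kafka_user-01", "Kafka@2024", ca_location="/opt/kafka/ca.pem")
print(conf["sasl.mechanism"])  # → SCRAM-SHA-512
```

With the confluent-kafka package installed, such a dictionary can be passed to `confluent_kafka.Producer(conf)`. In practice, read the password from a secret store or environment variable rather than hard-coding it.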

- Configure advanced settings.

Table 14 Advanced configuration parameters

Parameter

Description

Instance Name

You can customize a name that complies with the rules: 4–64 characters; starts with a letter; can contain only letters, digits, hyphens (-), and underscores (_).

Enterprise Project

This parameter is for enterprise users.

Enterprise projects facilitate project-level management and grouping of cloud resources and users. The default project is default.

Capacity Threshold Policy

Specify how messages are processed when the disk usage threshold (95%) is reached.

- Automatically delete: Messages can still be produced and consumed, but the earliest 10% of messages are deleted to free up disk space. This policy suits scenarios where no service interruption can be tolerated. Data may be lost.

- Stop production: New messages cannot be produced, but existing messages can still be consumed. This policy is suitable for scenarios where no data loss can be tolerated.

Smart Connect

Configure Smart Connect.

Smart Connect is used for data synchronization between heterogeneous systems. You can configure Smart Connect tasks to synchronize data between Kafka and another cloud service or between two Kafka instances.

Enabling Smart Connect creates two brokers.

Single-node instances do not have this parameter.

Automatic Topic Creation

Enable automatic Kafka topic creation if needed.

If this option is enabled, a topic will be automatically created when a message is produced in or consumed from a topic that does not exist. The default topic parameters are listed in Table 15.

- For cluster instances, if you change the log.retention.hours (retention period), default.replication.factor (replica quantity), or num.partitions (partition quantity) parameter, the new value applies to topics that are automatically created afterward. For example, if num.partitions is changed to 5, automatically created topics have the parameters listed in the Modified To (Cluster) column of Table 15.

- Unavailable for single-node instances.

Tags

Tags are used to identify cloud resources. When you have multiple cloud resources of the same type, you can use tags to classify them based on usage, owner, or environment.

If your organization has configured tag policies for DMS for Kafka, add tags to Kafka instances based on the policies. If a tag does not comply with the policies, Kafka instance creation may fail. Contact your organization administrator to learn more about tag policies.

- If you have predefined tags, select a predefined pair of tag key and value. You can click View predefined tags to go to the Tag Management Service (TMS) console and view or create tags.

- You can also create new tags by specifying Tag key and Tag value.

Up to 20 tags can be added to each Kafka instance. For details about the requirements on tags, see Configuring Kafka Instance Tags.

Description

Enter a description of the instance (0–1024 characters).

Table 15 Topic parameters

| Parameter | Default Value (Single-node) | Default Value (Cluster) | Modified To (Cluster) |
|---|---|---|---|
| Partitions | 1 | 3 | 5 |
| Replicas | 1 | 3 | 3 |
| Aging Time (h) | 72 | 72 | 72 |
| Synchronous Replication | Disabled | Disabled | Disabled |
| Synchronous Flushing | Disabled | Disabled | Disabled |
| Message Timestamp | CreateTime | CreateTime | CreateTime |
| Max. Message Size (bytes) | 10,485,760 | 10,485,760 | 10,485,760 |
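The two capacity threshold policies behave differently once disk usage reaches 95%. The following toy model of a single disk illustrates the difference. It is a sketch under stated assumptions, not the service's implementation: "the earliest 10% of messages" is interpreted by message count, and capacity, sizes, and class names are hypothetical.

```python
from collections import deque

THRESHOLD_PERCENT = 95  # disk usage threshold from this page
DELETE_PERCENT = 10     # share of earliest messages removed by Automatically delete (by count, assumed)


class DiskModel:
    """Toy model of one disk under a capacity threshold policy."""

    def __init__(self, capacity: int, policy: str):
        if policy not in ("auto_delete", "stop_production"):
            raise ValueError("unknown policy")
        self.capacity = capacity
        self.policy = policy
        self.messages = deque()  # message sizes, oldest first
        self.used = 0

    def at_threshold(self) -> bool:
        # Integer comparison avoids floating-point rounding at exactly 95%.
        return self.used * 100 >= THRESHOLD_PERCENT * self.capacity

    def produce(self, size: int) -> bool:
        """Return True if the message is accepted."""
        if self.at_threshold():
            if self.policy == "stop_production":
                return False  # existing messages stay consumable; new ones are rejected
            self._delete_earliest()
        self.messages.append(size)
        self.used += size
        return True

    def _delete_earliest(self) -> None:
        count = max(1, len(self.messages) * DELETE_PERCENT // 100)
        for _ in range(min(count, len(self.messages))):
            self.used -= self.messages.popleft()


for policy in ("stop_production", "auto_delete"):
    disk = DiskModel(capacity=100, policy=policy)
    accepted = [disk.produce(1) for _ in range(96)]
    print(policy, accepted[-1], disk.used)
```

With 96 one-unit messages on a 100-unit disk, the 96th message arrives at 95% usage: Stop production rejects it (usage stays at 95), while Automatically delete drops the 9 earliest messages and accepts it (usage becomes 87).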

- Specify the required duration.

This parameter is displayed only if the billing mode is yearly/monthly. If Auto-renew is selected, the instance will be renewed automatically.

- Monthly subscriptions auto-renew for 1 month each time.

- Yearly subscriptions auto-renew for 1 year each time.

- In Summary on the right, view the selected instance configuration.

- Click Confirm.

- Confirm the instance information, and read and agree to the Huawei Cloud Customer Agreement. If you have selected the yearly/monthly billing mode, click Pay Now and make the payment as prompted. If you have selected the pay-per-use mode, click Submit.

- Return to the instance list and check whether the Kafka instance has been created.

It takes 3 to 15 minutes to create an instance. During this period, the instance status is Creating.

- If the instance is created successfully, its status changes to Running.

- If the instance is in the Failed state, delete it by referring to Deleting Kafka Instances and try creating another one. If the instance creation fails again, contact customer service.

Instances that fail to be created do not occupy other resources.

Purchasing a Kafka Instance with Same Configurations

To purchase another Kafka instance with the same configuration as the current one, reuse the current configuration through the Buy Another function.

- Go to the Kafka console.

- In the upper left corner, select a region.

DMS for Kafka instances in different regions cannot communicate with each other over an intranet. Select the nearest region for low latency and fast access.

- Select a target Kafka instance and choose More > Buy Another in the Operation column.

- Adjust the automatically replicated parameter settings as required. For details, see Standard Config of a Single-node/Cluster Kafka Instance.

For security purposes, parameter settings involved in the following scenarios will not be replicated and a re-configuration is required:

- SSL username and password of a Kafka instance with ciphertext access enabled.

- Public IP addresses of a Kafka instance with public access enabled.

- Manually assigned IP addresses of a Kafka instance. Select Manual for Private IP Addresses again and specify private IP addresses.

- Name of a target Kafka instance.

- In Summary on the right, view the selected instance configuration.

- Click Confirm.

- Confirm the instance information, and read and agree to the Huawei Cloud Customer Agreement. If you have selected the yearly/monthly billing mode, click Pay Now and make the payment as prompted. If you have selected the pay-per-use mode, click Submit.

- Return to the instance list and check whether the Kafka instance has been created.

It takes 3 to 15 minutes to create an instance. During this period, the instance status is Creating.

- If the instance is created successfully, its status changes to Running.

- If the instance is in the Failed state, delete it by referring to Deleting Kafka Instances and try creating another one. If the instance creation fails again, contact customer service.

Instances that fail to be created do not occupy other resources.
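The Buy Another behavior described above amounts to copying the source configuration, dropping the settings that are not replicated, and listing what must be re-entered. The following is a minimal sketch of that logic. The dictionary keys and the function name are hypothetical and do not correspond to a real API field schema.

```python
import copy
from typing import Any, Dict, List, Tuple

# Settings this page says Buy Another does not copy (field names are hypothetical).
NOT_REPLICATED = ("name", "ssl_username", "ssl_password", "public_ips", "manual_private_ips")


def buy_another(source: Dict[str, Any]) -> Tuple[Dict[str, Any], List[str]]:
    """Return a copy of the source configuration and the fields to re-enter."""
    template = copy.deepcopy(source)
    for key in NOT_REPLICATED:
        template.pop(key, None)

    to_reconfigure = ["name"]
    if source.get("ciphertext_access"):
        to_reconfigure += ["ssl_username", "ssl_password"]
    if source.get("public_access"):
        to_reconfigure.append("public_ips")
    if source.get("private_ip_mode") == "manual":
        # Select Manual for Private IP Addresses again and specify the addresses.
        to_reconfigure.append("manual_private_ips")
    return template, to_reconfigure


source = {
    "name": "kafka-prod-01", "version": "3.x", "brokers": 3,
    "ciphertext_access": True, "ssl_username": "kafka_user-01", "ssl_password": "Kafka@2024",
    "public_access": False, "private_ip_mode": "manual",
    "manual_private_ips": ["192.168.0.10", "192.168.0.11", "192.168.0.12"],
}
template, todo = buy_another(source)
print(sorted(template))
print(todo)
```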

Related Document

To purchase a Kafka instance by calling an API, see Creating a Kafka Instance.
