AI Data Acceleration Engine Add-on

Running AI and big data tasks on Kubernetes raises several challenges: high latency, inefficient bandwidth usage, insufficient data management, fragmented storage interfaces, and, because storage and compute are decoupled, a lack of intelligent data awareness and scheduling. To address these issues, CCE standard and Turbo clusters provide the AI Data Acceleration Engine add-on, which is built on Fluid. The add-on provides dataset abstraction, data orchestration, and application orchestration. With transparent data management and optimized scheduling, AI and big data applications can use stored data efficiently without being modified. For more information about Fluid, see Fluid Overview.

Prerequisites

- A CCE standard or Turbo cluster of v1.28 or later is available. For details about how to buy a cluster, see Buying a CCE Standard/Turbo Cluster.

- You have enabled OBS and DCS.

Notes and Constraints

- This add-on uses templates and images from the open-source community. Since it is based on community versions, there may be defects. CCE periodically synchronizes with updated community versions to address known issues. Check whether your service requirements can be met.

- This add-on supports data caching only through the JuiceFS storage engine. JuiceFS is a cloud native distributed file system that lets object storage (such as OBS) be used like a high-performance local disk. It provides multi-level cache acceleration and integrates seamlessly into CCE, making it well suited to massive-data scenarios such as AI training and big data analytics.

- This add-on is still being rolled out. To check the regions where it is available, see the console.

- This add-on is in the open beta test (OBT) phase. You can try the latest add-on features, but the stability of this add-on version has not been fully verified, and the CCE SLA does not apply to this version.

Billing

- Installing the add-on does not cost anything.

- In this example, you will need to purchase a DCS Redis instance and an OBS bucket, which you will be billed for. For details, see DCS (for Redis) Price Calculator and OBS Price Calculator.

Installing the Add-on

- Log in to the CCE console and click the cluster name to access the cluster console.

- In the navigation pane, choose Add-ons. Locate AI Data Acceleration Engine on the right and click Install.

- In the lower right corner of the Install Add-on page, click Install. If the status of the AI Data Acceleration Engine add-on changes to Running, the add-on has been installed.

Components

| Component | Description | Resource Type |
|---|---|---|
| application-controller | Schedules and runs application pods that use datasets. It retrieves cache information from the runtime and schedules the pods that use datasets to nodes with cached data. | Deployment |
| csi-nodeplugin-fluid | A storage component of the add-on, which runs in containers. It is decoupled from service containers and provides observability for storage resources. | DaemonSet |
| dataset-controller | Manages the lifecycle of datasets, including binding cache engines. | Deployment |
| fluid-crd-upgrader | A CRD upgrade component of the add-on. It updates CRDs in add-on upgrade scenarios based on the add-on versions. | Job |
| fluid-webhook | Replaces the PVC with a FUSE sidecar and ensures the FUSE pod starts first in environments where CSI plugins cannot be used. | Deployment |
| juicefsruntime-controller | Manages and schedules JuiceFS runtime-related resources like computing tasks and configurations. For example, it can dynamically scale cache nodes based on system loads. | Deployment |

How to Use the Add-on

JuiceFS has an architecture where service data and metadata are stored separately. In this example, the data used by the service pod is stored in OBS, and the metadata generated by JuiceFS is stored in DCS for Redis. This example shows how to use JuiceFS to abstract data stored in OBS into a dataset and provide the dataset for workloads through a JuiceFSRuntime.

| Procedure | Description |
|---|---|
| Create an OBS bucket | The OBS bucket functions as a data source. It stores service data to be used by pods. |
| Create a DCS Redis instance | The DCS Redis instance stores the metadata generated by JuiceFS. |
| Create a secret | The secret serves as the identity credential that JuiceFS uses to access the OBS bucket and DCS Redis instance when reading and storing data. |
| Create a dataset | The dataset defines and manages data sources and access policies, ensuring workloads in the cluster use stored data appropriately and efficiently. After the dataset is created, the system creates a worker pod to initialize OBS data and convert the data into the JuiceFS directory format. |
| Create a JuiceFSRuntime | The JuiceFSRuntime integrates JuiceFS storage volumes into the cluster in an efficient, scalable manner; provides data caching, access acceleration, and automatic management; and ensures that applications can access and use data stored in the dataset efficiently. CCE only supports CSI mode, meaning the dataset is cached in a FUSE pod, which is then mounted to a service pod as a PVC. Typically, after the JuiceFSRuntime is created, the FUSE pod, PV, and PVC are generated automatically. The FUSE pod starts lazily: it is launched together with the service pod. |
| Create a service pod and use an automatically created PVC and PV | A FUSE pod is created alongside the service pod. The service pod accesses the data in the FUSE pod through a PVC. |

- Create an OBS bucket to store service data.

- Log in to the OBS console. In the navigation pane, choose Object Storage.

- In the upper right corner of the page, click Create Bucket and configure related parameters as required. The following is an example.

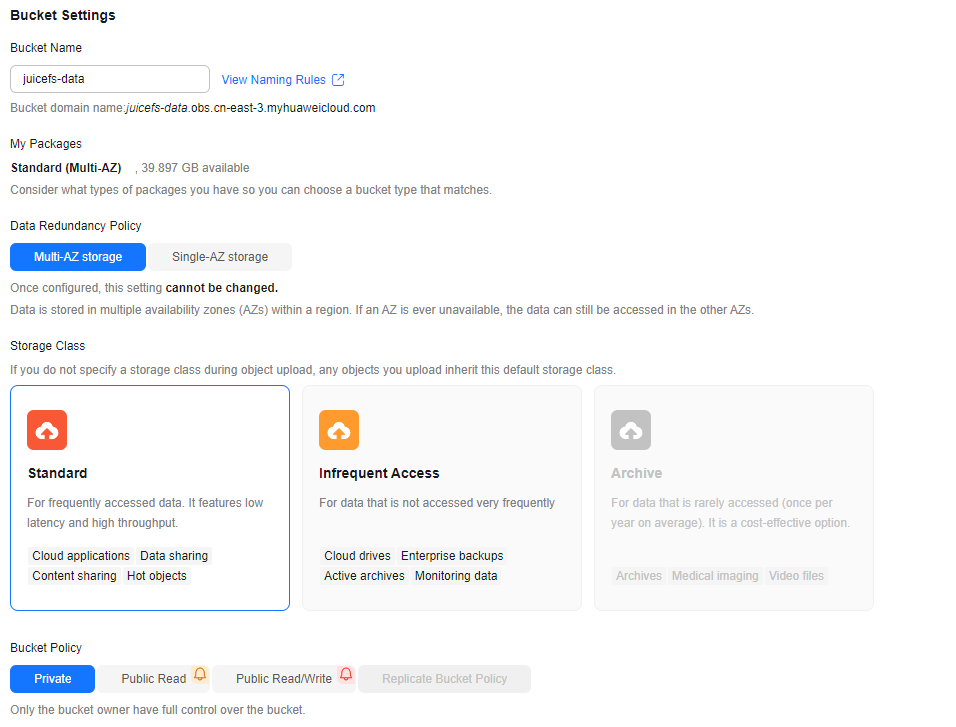

Figure 1 Creating a bucket

Table 3 Parameters

| Parameter | Example Value | Description |
|---|---|---|
| Bucket Name | juicefs-data | The name of a bucket, which must be unique across all users. After a bucket is created, its name cannot be changed.<br>Naming rules:<br>• Each OBS bucket name must be 3 to 63 characters long. Only lowercase letters, digits, hyphens (-), and periods (.) are allowed.<br>• An OBS bucket name cannot start or end with a period (.) or hyphen (-), and cannot contain two consecutive periods (..) or contain a period (.) and a hyphen (-) adjacent to each other.<br>• An OBS bucket name cannot be an IP address. |
| Data Redundancy Policy | Multi-AZ storage | The data redundancy policy of a bucket. The policy cannot be modified after the bucket is created.<br>• Multi-AZ storage: Data is stored in multiple AZs to achieve higher reliability.<br>• Single-AZ storage: Data is stored in a single AZ, which costs less.<br>For details about the performance comparison between multi-AZ and single-AZ storage, see Comparison of Storage Classes. |
| Storage Class | Standard | The storage class of a bucket. Different storage classes meet different needs for performance and costs.<br>• Standard: For storing a large number of hot files or small files that are frequently accessed (multiple times per month on average) and require fast access.<br>• Infrequent Access: For storing data that is less frequently accessed (less than 12 times per year on average), but when needed, the access has to be fast.<br>• Archive: For archiving data that is rarely accessed (once a year on average) and does not require fast access.<br>• Deep Archive: For storing data that is very rarely accessed and does not require fast access.<br>For details, see Storage Class. |
| Bucket Policy | Private | The read and write permissions of a bucket.<br>• Private: Only users granted permissions by the bucket ACL can access the bucket.<br>• Public Read: Anyone can read objects in the bucket.<br>• Public Read/Write: Anyone can read, write, or delete objects in the bucket.<br>• Replicate Bucket Policy: The policy of the selected source bucket will be applied to the bucket you are creating. This option is only available if you have selected a bucket as the replication source. |

- After configuring the parameters, click Create Now in the lower right corner.

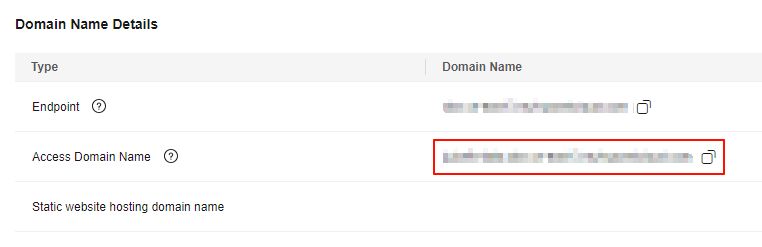

- After the bucket is created, click the bucket name in the bucket list. On the page displayed, choose Overview in the navigation pane. In the Domain Name Details area, you can see the access domain name of the bucket. The access domain name will be used to configure the secret that allows access from JuiceFS.

Figure 2 Checking the access domain name of a bucket
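The naming rules in Table 3 can be checked locally before you create the bucket. The following is a minimal sketch in POSIX shell, not an official OBS tool: the function name is_valid_bucket_name is hypothetical, and the IP address rule is approximated by testing for four dot-separated groups of digits.

```shell
#!/bin/sh
# Sketch only: checks a candidate name against the OBS naming rules in Table 3.
# is_valid_bucket_name is a hypothetical helper, not part of any OBS or CCE tool.
LC_ALL=C; export LC_ALL   # make [a-z] match ASCII lowercase letters only

is_valid_bucket_name() {
    name=$1
    len=${#name}
    # Rule: 3 to 63 characters long.
    if [ "$len" -lt 3 ] || [ "$len" -gt 63 ]; then
        return 1
    fi
    case $name in
        *[!a-z0-9.-]*)  return 1 ;;   # only lowercase letters, digits, hyphens, periods
        .*|-*|*.|*-)    return 1 ;;   # cannot start or end with a period or hyphen
        *..*|*.-*|*-.*) return 1 ;;   # no "..", ".-", or "-."
    esac
    # Rule: cannot be an IP address (approximated as four dot-separated digit groups).
    if printf '%s\n' "$name" | grep -Eq '^[0-9]{1,3}(\.[0-9]{1,3}){3}$'; then
        return 1
    fi
    return 0
}

for candidate in juicefs-data My-Bucket 192.168.0.1; do
    if is_valid_bucket_name "$candidate"; then
        echo "$candidate: valid"
    else
        echo "$candidate: invalid"
    fi
done
```

With the three sample names, only juicefs-data (the example value used in this section) passes. The console remains the authority on whether a name is accepted, for example because names must also be unique across all users.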

- Create a DCS Redis instance to store metadata generated by JuiceFS, such as file or directory attributes and data block mapping.

- On the Buy DCS Instance page, click the Quick Config tab and configure parameters as required. The following is an example. (This example describes only mandatory parameters. For details about more parameters, see Buying a DCS Redis Instance.)

Figure 3 Creating a DCS Redis instance

Table 4 Parameters

| Parameter | Example Value | Description |
|---|---|---|
| Specifications Settings | Basic - 16GB | Select an edition with the specifications you require. Each edition includes the product type, memory specifications, version, and instance type. For details about the differences between these specifications, see Buying a DCS Redis Instance. |
| VPC | vpc-default | Select the same VPC as the CCE cluster. |
| Subnet | subnet-observe | Select an existing subnet. |

- After the configuration is complete, click Next in the lower right corner. After confirming the specifications, click Submit in the lower right corner. If the status of the DCS Redis instance changes to Running, the instance has been created.

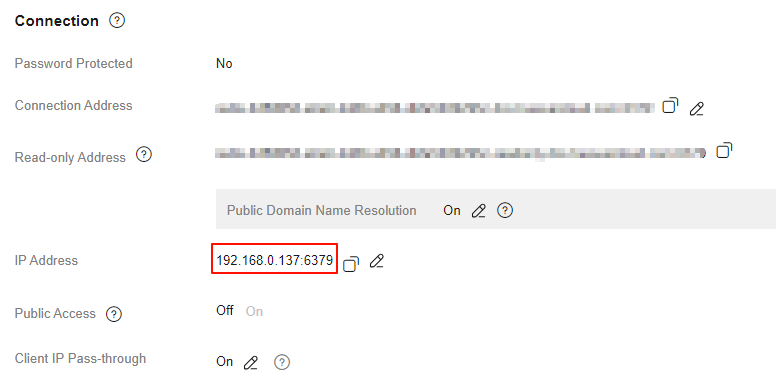

- Click the name of the created DCS Redis instance. In the Connection area on the right of the Basic Information page, check the IP address of the instance. This IP address will be used to configure the secret that allows access from JuiceFS.

Figure 4 Checking the IP address of a DCS Redis instance


- Install kubectl on an existing ECS and access a cluster using kubectl. For details, see Accessing a Cluster Using kubectl.

- Create a secret for JuiceFS to access the OBS bucket and DCS Redis instance.

- Create a YAML file for a secret.

    vim juicefs-secret.yaml

The file content is as follows:

    apiVersion: v1
    kind: Secret
    metadata:
      name: juicefs-secret-test3
    type: Opaque
    stringData:
      name: juicefs-obs-test3
      metaurl: "redis://192.168.0.137:6379/0"
      storage: obs
      bucket: "https://<OBS bucket access address>"
      access-key: <Access key ID>
      secret-key: <Secret access key>
      envs: "{TZ: Asia/Shanghai}"    # Set the time zone of the mount pod. The default time zone is UTC.

Table 5 Parameters

| Parameter | Example Value | Description |
|---|---|---|
| metaurl | "redis://192.168.0.137:6379/0" | Specifies the metadata storage location. To obtain the IP address of the DCS Redis instance, see the step for checking the IP address of a DCS Redis instance (Figure 4). |
| storage | obs | Specifies the storage type used by a file system. The value is fixed at obs in Huawei Cloud. |
| bucket | "https://<OBS bucket access address>" | Specifies the storage access address of an OBS bucket. To obtain it, see the step for checking the access domain name of a bucket (Figure 2). |
| access-key | <Access key ID> | Specifies the access key ID of an IAM user. It is used as the identity authentication information for accessing the storage. For details, see My Credentials. |
| secret-key | <Secret access key> | Specifies the secret access key of an IAM user. It is used as the identity authentication information for accessing the storage. For details, see My Credentials. |

- Create the secret.

    kubectl create -f juicefs-secret.yaml

If information similar to the following is displayed, the resource has been created:

    secret/juicefs-secret-test3 created
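Instead of typing credentials into a file by hand, the secret manifest above can be rendered from arguments and reviewed before it is applied. This is a minimal sketch under stated assumptions: render_secret is a hypothetical helper, the host name and key values passed to it are placeholders, and the Redis port and database number are simply copied from the example.

```shell
#!/bin/sh
# Sketch only: render_secret is a hypothetical helper, not part of the add-on.
# $1: IP address of the DCS Redis instance   $2: OBS bucket access address
# $3: access key ID                          $4: secret access key
render_secret() {
    cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: juicefs-secret-test3
type: Opaque
stringData:
  name: juicefs-obs-test3
  metaurl: "redis://$1:6379/0"
  storage: obs
  bucket: "https://$2"
  access-key: "$3"
  secret-key: "$4"
  envs: "{TZ: Asia/Shanghai}"
EOF
}

# Print the manifest for review. The arguments here are placeholders.
render_secret 192.168.0.137 bucket.example.invalid AK_PLACEHOLDER SK_PLACEHOLDER
```

To create the secret directly from the rendered output, pipe it to kubectl create -f - (the trailing hyphen makes kubectl read the manifest from standard input).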


- Create a dataset for defining the data source.

- Create a YAML file for a dataset.

    vim dataset.yaml

The file content is shown below. This example describes only some parameters.

    apiVersion: data.fluid.io/v1alpha1
    kind: Dataset
    metadata:
      name: jfsdemo
    spec:
      # Only one mount can be defined per dataset. If multiple mounts are required,
      # separate datasets must be created.
      mounts:
        - name: juicefs-obs-test3
          mountPoint: "juicefs:///demo"
          options:
            bucket: "https://<OBS access domain name>"
            storage: "obs"    # The value is fixed at obs.
          encryptOptions:     # Configure a storage access credential for a dataset.
            - name: metaurl
              valueFrom:
                secretKeyRef:
                  name: juicefs-secret-test3
                  key: metaurl
            - name: access-key
              valueFrom:
                secretKeyRef:
                  name: juicefs-secret-test3
                  key: access-key
            - name: secret-key
              valueFrom:
                secretKeyRef:
                  name: juicefs-secret-test3
                  key: secret-key

Table 6 Parameters

| Parameter | Example Value | Description |
|---|---|---|
| mounts.name | juicefs-obs-test3 | The name of an object used to store a dataset in an OBS bucket. In this example, the value is the same as the name field in the secret. |
| mountPoint | "juicefs:///demo" | A subdirectory in JuiceFS where data will be stored. The value must start with juicefs://, for example, juicefs:///demo. In this example, /demo refers to a subdirectory within JuiceFS, and juicefs:/// represents the root directory of JuiceFS. |
| encryptOptions | N/A | JuiceFS authentication information. Each entry must reference the corresponding key in the secret created previously. |

- Create the dataset.

    kubectl create -f dataset.yaml

If information similar to the following is displayed, the resource has been created:

    dataset.data.fluid.io/jfsdemo created

- Check whether the worker pod has been created. The worker pod is automatically created by the AI Data Acceleration Engine add-on after the dataset is created to initialize OBS data and convert the data into the JuiceFS directory format.

    kubectl get pod

If information similar to the following is displayed, the worker pod has been created:

    NAME               READY   STATUS    RESTARTS   AGE
    jfsdemo-worker-0   1/1     Running   0          15h
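The mountPoint rule in Table 6 (the value must start with juicefs://, and the remainder is the subdirectory inside JuiceFS) can be illustrated with a few lines of shell. This is a minimal sketch; jfs_subdir is a hypothetical helper and is not part of Fluid or JuiceFS.

```shell
#!/bin/sh
# Sketch only: jfs_subdir is a hypothetical helper that mirrors the mountPoint rule.
# It prints the JuiceFS subdirectory for a valid mountPoint and fails otherwise.
jfs_subdir() {
    case $1 in
        juicefs://*)
            # Strip the juicefs:// prefix; what remains is the path inside JuiceFS.
            printf '%s\n' "${1#juicefs://}"
            ;;
        *)
            echo "mountPoint must start with juicefs://: $1" >&2
            return 1
            ;;
    esac
}

jfs_subdir "juicefs:///demo"   # prints /demo
jfs_subdir "juicefs:///"       # prints / (the JuiceFS root directory)
```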


- Create a JuiceFSRuntime to ensure that applications can efficiently access and use data stored in the dataset. A JuiceFSRuntime can only be bound to one dataset, and both must share the same name for proper functionality. If multiple underlying data sources are needed, separate JuiceFSRuntimes and corresponding datasets must be created.

- Create a YAML file for a JuiceFSRuntime.

    vim runtime.yaml

The file content is shown below. This example describes only some parameters.

    apiVersion: data.fluid.io/v1alpha1
    kind: JuiceFSRuntime
    metadata:
      name: jfsdemo
    spec:
      replicas: 1
      tieredstore:
        levels:
          - mediumtype: SSD
            path: /cache
            quota: 40960
            low: "0.1"

Table 7 Parameters

| Parameter | Example Value | Description |
|---|---|---|
| mediumtype | SSD | Specifies the type of the storage device used by the cache directory. The value can be MEM, SSD, or HDD.<br>This field is only a flag and does not necessarily indicate that the underlying storage is memory, SSD, or HDD. The actual storage medium depends on the path setting.<br>NOTE: If the value is MEM, the AI Data Acceleration Engine add-on automatically configures the memory requests and limits of containers based on the quota setting to ensure that the containers have proper memory requests. |
| path | /cache | The path for storing the local cache.<br>If multiple disks are used for caching, specify multiple directories separated by colons (:). To use a memory device, set this parameter to /dev/shm. |
| quota | 40960 | The maximum cache capacity per worker pod (cache cluster member), in MiB. The total available space in a cache cluster is obtained by multiplying this value by the number of replicas. |
| low | "0.1" | The minimum fraction of space that must remain free on a cache disk. The default value is 0.2. The example value 0.1 means that up to 90% of the space on a cache disk can be used. |

- Create the JuiceFSRuntime.

    kubectl create -f runtime.yaml

If information similar to the following is displayed, the resource has been created:

    juicefsruntime.data.fluid.io/jfsdemo created
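As described in Table 7, quota applies to each worker pod and low is the share of a cache disk that must stay free. The arithmetic can be sketched as follows. The helper names total_cache_mib and usable_percent are hypothetical, and the replica count of 3 is only an illustrative value that does not appear in the example manifest.

```shell
#!/bin/sh
# Sketch only: hypothetical helpers that reproduce the arithmetic described in Table 7.

# total_cache_mib QUOTA_MIB REPLICAS: total cache space of the cache cluster, in MiB.
total_cache_mib() {
    echo $(( $1 * $2 ))
}

# usable_percent LOW: maximum percentage of a cache disk that can be used.
usable_percent() {
    awk -v low="$1" 'BEGIN { printf "%d\n", (1 - low) * 100 + 0.5 }'
}

total_cache_mib 40960 1   # values from the example: prints 40960 (40 GiB)
total_cache_mib 40960 3   # hypothetical 3 replicas: prints 122880 (120 GiB)
usable_percent 0.1        # example value of low: prints 90
usable_percent 0.2        # default value of low: prints 80
```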


- Check whether the created dataset and JuiceFSRuntime are functioning properly.

- Check the status of the JuiceFSRuntime.

    kubectl get juicefs

If information similar to the following is displayed and WORKER PHASE of the JuiceFSRuntime is Ready, jfsdemo is functioning properly:

    NAME      WORKER PHASE   FUSE PHASE   AGE
    jfsdemo   Ready          Ready        25h

NOTE: The FUSE pod of JuiceFS is in lazy startup mode and is only created when needed.

- Check the status of the dataset.

    kubectl get dataset

If information similar to the following is displayed and the dataset is in the Bound state, the dataset is functioning properly:

    NAME      UFS TOTAL SIZE   CACHED   CACHE CAPACITY   CACHED PERCENTAGE   PHASE   AGE
    jfsdemo   0.00B            0.00B    40.00KiB         0.0%                Bound   25h


- After the dataset and JuiceFSRuntime are ready, view the related information of the PV and PVC that are automatically created by the AI Data Acceleration Engine add-on for the dataset.

- Check the PVC information.

    kubectl get pvc

Information similar to the following is displayed:

    NAME      STATUS   VOLUME            CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
    jfsdemo   Bound    default-jfsdemo   100Pi      RWX            fluid          <unset>                 25h

- Check the PV information.

    kubectl get pv

Information similar to the following is displayed:

    NAME              CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM             STORAGECLASS   VOLUMEATTRIBUTESCLASS   REASON   AGE
    default-jfsdemo   100Pi      RWX            Retain           Bound    default/jfsdemo   fluid          <unset>                          25h

The preceding command output indicates that the PVC and PV have been automatically bound, meaning the storage is ready for direct mounting to the pod.


- Create a service pod and associate it with the preceding PVC and PV.

- Create a YAML file for a workload.

    vim pod.yaml

The file content is as follows:

    apiVersion: v1
    kind: Pod
    metadata:
      name: demo-app
    spec:
      containers:
        - name: demo
          image: nginx
          volumeMounts:
            - mountPath: /data
              name: demo    # The name of the volume to be mounted
      volumes:
        - name: demo
          persistentVolumeClaim:
            claimName: jfsdemo    # The PVC that has been automatically created

- Create the pod.

    kubectl create -f pod.yaml

If information similar to the following is displayed, the resource has been created:

    pod/demo-app created


- Check the statuses of the service pod and FUSE pod.

    kubectl get pod

If information similar to the following is displayed, the service pod and FUSE pod are started and the PVC is mounted properly to the pod.

    NAME                 READY   STATUS    RESTARTS   AGE
    demo-app             1/1     Running   0          3s
    jfsdemo-fuse-twwdh   1/1     Running   0          3s
    jfsdemo-worker-0     1/1     Running   0          17h
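The status check above can also be scripted. The following is a minimal sketch, assuming the default tabular output of kubectl get pod with STATUS in the third column; all_running is a hypothetical helper, and the sample input is the output shown in this section.

```shell
#!/bin/sh
# Sketch only: all_running is a hypothetical helper. It reads "kubectl get pod" style
# output on stdin and succeeds only if at least one pod is listed and the STATUS
# column (third field) of every pod is Running.
all_running() {
    awk 'NR > 1 && $3 != "Running" { bad = 1 }
         END { if (bad || NR < 2) exit 1 }'
}

# Demonstration with the sample output from this section.
all_running <<'EOF' && echo "all pods are running"
NAME                 READY   STATUS    RESTARTS   AGE
demo-app             1/1     Running   0          3s
jfsdemo-fuse-twwdh   1/1     Running   0          3s
jfsdemo-worker-0     1/1     Running   0          17h
EOF
```

On a machine with cluster access, the same helper could be fed live output with kubectl get pod | all_running.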

Helpful Links

To learn the basics of Fluid, see Fluid Overview.

Release History

| Add-on Version | Supported Cluster Version | New Feature | Community Version |
|---|---|---|---|
| 1.0.5 | v1.28, v1.29, v1.30, v1.31 | CCE standard and Turbo clusters support the AI Data Acceleration Engine add-on. | |
