Fluid Overview

Fluid is a cloud native distributed dataset orchestration and acceleration engine designed for big data and AI applications. Through transparent data management and optimized scheduling, it enables AI and big data workloads to efficiently use stored data without requiring modifications to existing applications. Fluid supports automatic data scheduling, cache acceleration, and auto scaling to enhance data access efficiency and promote efficient storage and computing collaboration in large-scale distributed environments.

Basic Concepts

- Dataset: a type of resource in Fluid that allows you to define resource metadata and attributes, including location, format, version, and data access permissions. You can integrate datasets with various storage engines like Alluxio and JuiceFS for unified data management and efficient access.

- Dataset operations: Fluid supports key data operations on datasets, such as preloading, migration, and cache cleaning. These operations are managed through Fluid CRDs. They optimize data access performance and maintain the data lifecycle.

- Runtime: a custom Fluid resource that defines the runtime environment for applications. It specifies compute resource configurations, including memory, CPUs, GPU types, and storage, needed by data processing tasks. Computing tasks can be efficiently managed and scheduled through runtime CRDs.

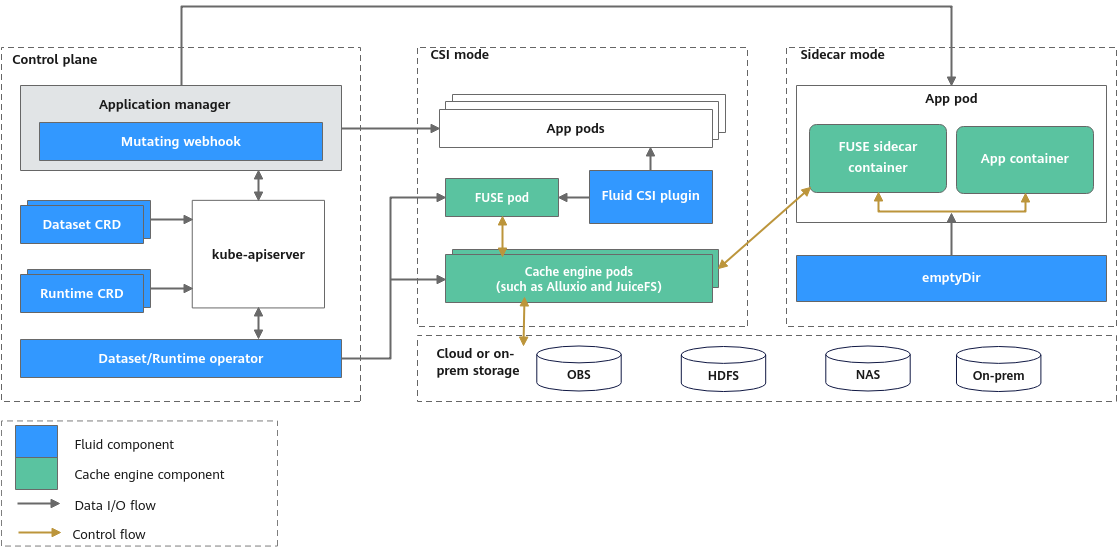

- CSI mode: a Kubernetes storage management approach based on the Container Storage Interface (CSI), which standardizes storage interfaces. It enables Kubernetes to integrate and manage various storage systems, including block storage, file storage, and object storage, through a single interface. This mode mounts storage into application pods through separate FUSE pods, simplifying the integration between applications and storage. This makes it particularly effective for handling persistent storage.

- Sidecar mode: a Kubernetes storage management approach that enhances the functionality of a main container by deploying a sidecar container (such as a FUSE sidecar container) within the same pod. The sidecar container provides features like file system mounting and cache acceleration to the main container. This mode is flexible and does not depend on a CSI plugin, making it well suited to file system mounting and temporary storage. However, because each pod runs its own sidecar container, it may introduce additional resource overhead.
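As a minimal sketch of how the Dataset, Runtime, and dataset operation concepts map to Kubernetes resources, the following Python helpers build the three manifests as plain dictionaries. The API group `data.fluid.io/v1alpha1`, the `AlluxioRuntime` and `DataLoad` kinds, and the field names are assumed from the open source Fluid project and may differ in your Fluid version. The dataset name and the mount point `s3://example-bucket/train` are hypothetical.

```python
import json

# Assumption: group/version and field names follow the open source Fluid
# v1alpha1 CRDs. Verify them against the Fluid version you run.
API_VERSION = "data.fluid.io/v1alpha1"


def dataset_manifest(name: str, mount_point: str) -> dict:
    """Dataset: declares where the data lives in the underlying storage."""
    return {
        "apiVersion": API_VERSION,
        "kind": "Dataset",
        "metadata": {"name": name},
        "spec": {"mounts": [{"mountPoint": mount_point, "name": name}]},
    }


def alluxio_runtime_manifest(name: str, replicas: int, quota: str) -> dict:
    """Runtime: describes the cache engine for the Dataset with the same name."""
    return {
        "apiVersion": API_VERSION,
        "kind": "AlluxioRuntime",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "tieredstore": {
                # One in-memory cache tier per cache worker.
                "levels": [
                    {"mediumtype": "MEM", "path": "/dev/shm", "quota": quota}
                ]
            },
        },
    }


def dataload_manifest(name: str, dataset: str, namespace: str = "default") -> dict:
    """Dataset operation: preload the whole dataset into the cache."""
    return {
        "apiVersion": API_VERSION,
        "kind": "DataLoad",
        "metadata": {"name": name},
        "spec": {
            "dataset": {"name": dataset, "namespace": namespace},
            "target": [{"path": "/"}],
        },
    }


if __name__ == "__main__":
    # Hypothetical names and mount point, for illustration only.
    manifests = [
        dataset_manifest("demo", "s3://example-bucket/train"),
        alluxio_runtime_manifest("demo", replicas=2, quota="2Gi"),
        dataload_manifest("demo-warmup", dataset="demo"),
    ]
    for manifest in manifests:
        print(json.dumps(manifest, indent=2))
```

Because JSON is a subset of YAML, the printed output can be saved to a file and passed to `kubectl apply -f`. A real object storage mount usually also needs endpoint and credential options, which are omitted here.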

Existing Challenges in Kubernetes Clusters

Running AI and big data workloads in Kubernetes clusters presents several challenges.

- Data access bottlenecks: Kubernetes adopts a decoupled compute and storage architecture to separate resources. However, remote data access introduces significant performance losses. In AI training scenarios, where massive amounts of data are read frequently, network latency and bandwidth limitations become critical bottlenecks that restrict computing efficiency.

- Weakness in data management: Kubernetes uses CSI to abstract storage resources but lacks advanced data management capabilities. In machine learning scenarios, key requirements like dataset feature definition, version control, permissions management, preprocessing, and I/O acceleration are not natively supported. This forces data scientists to develop additional tool chains for effective data handling.

- Multi-form CSI compatibility: Kubernetes deployment models, including native, edge, and serverless, support different CSI plugins, resulting in inconsistencies. For example, third-party CSI plugins are often incompatible with serverless environments, limiting available storage functions.

Functions

Fluid provides a suite of features to address high data access latency and complex multi-source data management in cloud native environments.

- Dataset abstraction: Fluid leverages native Kubernetes APIs to encapsulate underlying heterogeneous storage systems (such as HDFS, object storage, and distributed file systems) into a unified logical dataset. This abstraction hides the differences between storage systems and enables transparent access. Essentially, it acts as a universal data adapter, allowing applications to access various heterogeneous storage systems through unified APIs without concerns about storage location, data transmission path, or acceleration mechanisms.

- Dataset orchestration: Fluid dynamically schedules cache engines (such as Alluxio and JuiceFS) to cache data on compute nodes in advance, minimizing data transmission distance and improving access efficiency.

- Application orchestration: Integrated with the Kubernetes scheduler, Fluid ensures computing tasks are preferentially assigned to nodes where data has already been cached. This reduces network transmission overhead and enhances computing efficiency.

- Automatic data O&M: Fluid supports key data operations like prefetching, migration, and backup through CRDs. These operations can be triggered once, on a schedule, or by events, so that they integrate seamlessly with automated O&M systems.

- Data scalability and scheduling: By combining data caching with auto scaling and affinity scheduling, Fluid significantly improves data access performance.

- CSI compatibility: Fluid supports multiple Kubernetes environments, including native, edge, serverless clusters, and multi-cluster deployments. Different storage clients can operate within various environments.
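The application orchestration idea above (prefer nodes that already hold cached data) can be illustrated with a toy ranking function. This is a simplified sketch, not Fluid's scheduler: the `Node` type, its fields, and the tie-breaking rule are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Node:
    name: str
    cached_bytes: int     # bytes of the dataset already cached on this node
    free_cpu_millis: int  # spare CPU, used here only as a tie-breaker


def rank_nodes(nodes: List[Node], dataset_bytes: int) -> List[Node]:
    """Order candidate nodes so that nodes with more cached data come first."""
    if dataset_bytes <= 0:
        raise ValueError("dataset_bytes must be positive")

    def score(node: Node):
        # Fraction of the dataset that can be read locally, capped at 1.0.
        cached_fraction = min(node.cached_bytes / dataset_bytes, 1.0)
        return (cached_fraction, node.free_cpu_millis)

    return sorted(nodes, key=score, reverse=True)


if __name__ == "__main__":
    candidates = [
        Node("node-a", cached_bytes=0, free_cpu_millis=8000),
        Node("node-b", cached_bytes=50, free_cpu_millis=1000),
        Node("node-c", cached_bytes=100, free_cpu_millis=500),
    ]
    print([n.name for n in rank_nodes(candidates, dataset_bytes=100)])
```

In this sketch, data locality always outweighs spare CPU. A production scheduler would combine locality with other constraints, such as resource requests and taints.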

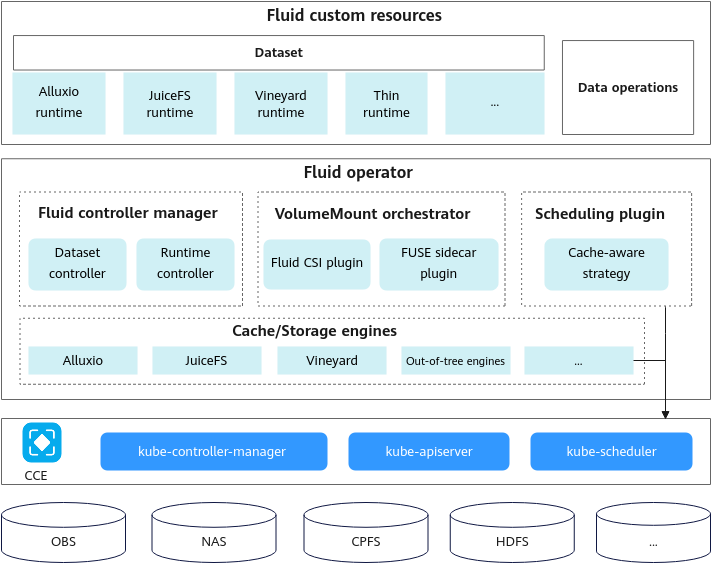

System Architecture

- Architecture Design

Fluid is built on the Kubernetes ecosystem and consists of several key components.

Figure 2 Fluid components

Table 1 Components

| Component | Description |
| --- | --- |
| Dataset CRD | Defines the source, access mode, and cache policy of a dataset. With dataset CRDs, you can create, update, delete, and monitor datasets in Kubernetes for efficient data management. |
| Runtime CRD | Manages the lifecycle of the underlying cache engine, including creation, scaling, and preloading, and supports multiple distributed cache systems. |
| Dataset controller | Manages the lifecycle of datasets, including binding cache engines. |
| Runtime controller | Manages and schedules runtime-related resources like computing tasks and configurations, for example, dynamic scaling of cache nodes based on system load. |
| Application manager | Schedules and runs application pods that use datasets. It consists of a scheduler, which obtains cache information through the runtime and preferentially schedules pods that use datasets to nodes with cached data, and a webhook, which, when CSI plugins are unavailable, replaces the PVC with a FUSE sidecar and ensures that the FUSE container is started first. |
| Runtime plugin | Provides extended functionality for specific runtime environments. It can work alongside runtime CRDs for resource management, scheduling optimization, and execution environment customization. The runtime plugin enables Kubernetes to customize the execution mode of computing tasks based on different application needs, optimizing resource utilization and execution efficiency. |
| CSI plugin | Runs in containerized mode, decoupled from service containers for independent storage management. It also enhances the observability of storage resources, allowing real-time monitoring of storage performance and health in Kubernetes clusters. |
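From the application side, a pod typically consumes a dataset through a PVC. The sketch below builds such a pod manifest in Python. It assumes, as in open source Fluid, that a PVC with the same name as the dataset exists once the dataset is bound, and that the label `serverless.fluid.io/inject` asks the webhook to inject a FUSE sidecar. Treat both as assumptions to verify for your environment. The pod name, image, and mount path are hypothetical.

```python
def training_pod_manifest(
    pod_name: str,
    dataset_name: str,
    image: str,
    use_sidecar: bool = False,
    mount_path: str = "/data",
) -> dict:
    """Build a pod manifest that mounts a dataset through its PVC."""
    metadata = {"name": pod_name}
    if use_sidecar:
        # Assumption: label recognized by the Fluid webhook for sidecar mode.
        metadata["labels"] = {"serverless.fluid.io/inject": "true"}

    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": metadata,
        "spec": {
            "containers": [
                {
                    "name": "trainer",
                    "image": image,
                    "volumeMounts": [
                        {"name": "dataset", "mountPath": mount_path}
                    ],
                }
            ],
            "volumes": [
                {
                    "name": "dataset",
                    # Assumption: the PVC carries the same name as the dataset.
                    "persistentVolumeClaim": {"claimName": dataset_name},
                }
            ],
        },
    }


if __name__ == "__main__":
    import json

    pod = training_pod_manifest(
        "train-job", "demo", "example.com/train:latest", use_sidecar=True
    )
    print(json.dumps(pod, indent=2))
```

The application container only sees a directory at `mount_path`. Whether the data arrives through a CSI-mounted FUSE pod or an injected sidecar is decided outside the application, which is why existing code needs no changes.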

- Data and Computing Collaboration

- Cache preloading: Declarative APIs are used to load remote data into the cache engine in advance to prevent first-access delays.

- Auto scaling: The number of cache nodes is dynamically adjusted based on data access pressure. For example, during peak training hours, Fluid automatically adds cache nodes to ensure smooth performance.

- Support for multiple protocols: Fluid is compatible with interfaces like POSIX and HDFS and adapts to multiple computing frameworks such as Spark and TensorFlow.
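To make the auto scaling behavior concrete, the sketch below applies the replica formula used by the Kubernetes Horizontal Pod Autoscaler, `ceil(current_replicas * current_metric / target_metric)`, to a cache usage metric. Using cache usage percentage as the metric and the default replica bounds are assumptions for illustration. The source does not specify which metric or algorithm Fluid uses.

```python
import math


def desired_cache_replicas(
    current_replicas: int,
    current_usage_pct: float,
    target_usage_pct: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Return the cache worker count that brings usage back to the target."""
    if current_replicas < 1 or target_usage_pct <= 0:
        raise ValueError("need at least one replica and a positive target")

    # HPA-style proportional scaling, rounded up so the target is not exceeded.
    desired = math.ceil(current_replicas * current_usage_pct / target_usage_pct)

    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Peak hours: 2 workers at 90% cache usage against a 60% target.
    print(desired_cache_replicas(2, 90, 60))
    # Quiet hours: 4 workers at 30% cache usage against a 60% target.
    print(desired_cache_replicas(4, 30, 60))
```

The real HPA also applies a tolerance band and stabilization windows to avoid flapping. Both are omitted here for brevity.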

Application Scenarios

Fluid is a Kubernetes-native distributed dataset orchestrator and cache accelerator. It is well suited to data-intensive applications, particularly big data and AI applications. Its core application scenarios include:

- Machine learning and AI training

- Accelerating data reads: Fluid uses an in-memory cache to eliminate bottlenecks in reading massive numbers of small files. It can, for example, reduce data access latency by more than 50% during image training.

- Managing data versions: Fluid uses dataset abstraction to support parallel training on multiple dataset versions and quick switching between them.

- Big data analytics

- Optimizing cross-storage joint queries: Fluid caches HDFS and object storage data together and lets Spark tasks transparently access multi-source data.

- Enhancing elastic resource utilization: Fluid dynamically adjusts cache resources based on an analysis of task loads. For example, it can save costs by scaling in resources at night.

- Hybrid cloud and edge computing

- Optimizing data migration: In cross-cluster scenarios, Fluid minimizes data synchronization time using cache preloading.

- Accelerating edge nodes: Fluid caches hot data onto edge nodes for low-latency, real-time computing.
