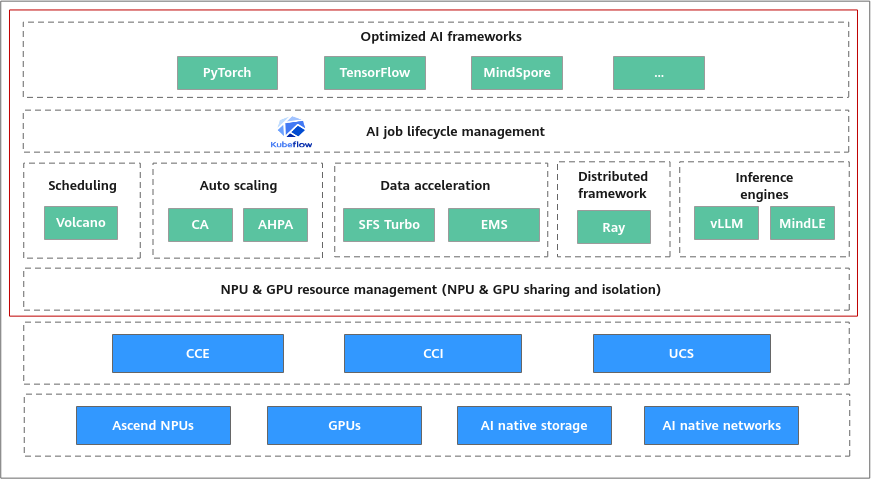

Cloud Native AI Suite Overview

The cloud native AI suites are built on Huawei Cloud Container Engine (CCE) and offer a ready-to-use solution for AI training and inference that covers resource management, workload scheduling, task management, data acceleration, and service deployment. Together they provide full-stack support and optimization for AI workloads.

AI Resource Management

The CCE AI Suite (NVIDIA GPU) and CCE AI Suite (Ascend NPU) add-ons enable efficient access to and scheduling of heterogeneous resources such as GPUs and NPUs, along with RDMA over Converged Ethernet (RoCE) networking. These add-ons provide hardware acceleration for compute-intensive workloads such as AI and high-performance computing (HPC).

| Add-on | Description |
|---|---|
| CCE AI Suite (NVIDIA GPU) | This add-on manages and schedules NVIDIA GPU hardware resources. It integrates multiple modules so that containerized applications can use GPU devices securely and efficiently, and it abstracts physical GPUs into compute units that CCE can schedule, particularly for high-performance tasks such as AI training and graphics rendering. It also supports GPU virtualization, which allocates GPU resources dynamically and improves utilization. |
| CCE AI Suite (Ascend NPU) | This add-on manages and schedules Ascend AI processors. Integrated with the CloudMatrix ecosystem, it provides core functions such as NPU device reporting and topology awareness, delivering out-of-the-box acceleration for AI training and inference. NPU virtualization further improves resource efficiency. |
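On Kubernetes, a GPU is typically consumed as an extended resource that a container requests in its `resources.limits`. The sketch below builds such a Pod manifest as a Python dict. It assumes the standard NVIDIA device plugin resource name `nvidia.com/gpu`; the resource names used for GPU virtualization by the CCE add-on are not covered here, and the image name is a hypothetical placeholder.

```python
import json

# Assumption: the standard NVIDIA device plugin resource name. Resource names
# for GPU virtualization in the CCE add-on are not shown here.
GPU_RESOURCE = "nvidia.com/gpu"


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a Pod manifest that requests whole GPUs as an extended resource."""
    # Extended resources are requested in whole units, so reject 0, negatives,
    # fractions, and booleans (bool is a subclass of int in Python).
    if not isinstance(gpus, int) or isinstance(gpus, bool) or gpus < 1:
        raise ValueError("gpus must be a positive integer")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "main",
                    "image": image,
                    # Only the limit is set; Kubernetes defaults the request
                    # to the same value when it is omitted.
                    "resources": {"limits": {GPU_RESOURCE: str(gpus)}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # "example.com/train:latest" is a hypothetical image name.
    manifest = gpu_pod_manifest("gpu-demo", "example.com/train:latest", 2)
    print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output could be saved to a file and applied directly.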

AI Workload Scheduling

The Volcano Scheduler add-on optimizes AI workloads through job scheduling, job management, and queue management. It supports advanced scheduling capabilities such as priority-based scheduling, topology-aware scheduling, and queue-based resource management. These features reduce resource fragmentation on nodes and maximize cluster utilization.
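To make priority ordering, gang (all-or-nothing) scheduling, and fragmentation reduction concrete, here is a toy scheduler. It is a simplified illustration, not Volcano's algorithm: Volcano implements such policies as scheduler plugins (for example `gang`, `priority`, and `binpack`), while the `Job` class and `gang_schedule` function below are hypothetical and count only GPUs per node.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    priority: int       # higher value is scheduled first
    replicas: int       # number of pods the job wants
    gpus_per_pod: int
    min_available: int  # gang size: pods that must start together


def gang_schedule(jobs, node_free_gpus):
    """Toy scheduler: priority order, all-or-nothing gangs, best-fit packing.

    Returns (placements, remaining_free_gpus).
    """
    free = dict(node_free_gpus)
    placements = {}
    for job in sorted(jobs, key=lambda j: -j.priority):
        trial = dict(free)  # tentative state, discarded if the gang cannot start
        assigned = []
        for _ in range(job.replicas):
            fits = [n for n, g in trial.items() if g >= job.gpus_per_pod]
            if not fits:
                break
            # Best fit: use the fullest node that still fits, which keeps
            # larger blocks of free GPUs available for bigger pods.
            node = min(fits, key=lambda n: (trial[n], n))
            trial[node] -= job.gpus_per_pod
            assigned.append(node)
        if len(assigned) >= job.min_available:
            free = trial  # commit the whole gang at once
            placements[job.name] = assigned
    return placements, free


if __name__ == "__main__":
    jobs = [
        Job("train", priority=10, replicas=2, gpus_per_pod=4, min_available=2),
        Job("big", priority=5, replicas=2, gpus_per_pod=8, min_available=2),
        Job("small", priority=1, replicas=1, gpus_per_pod=2, min_available=1),
    ]
    placements, free = gang_schedule(jobs, {"node-a": 8, "node-b": 4})
    print(placements)  # {'train': ['node-b', 'node-a'], 'small': ['node-a']}
    print(free)        # {'node-a': 2, 'node-b': 0}
```

The `big` job is never partially started: because its gang cannot be placed in full, no GPUs are held for it, and the lower-priority `small` job can still run.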

| Add-on | Description |
|---|---|
| Volcano Scheduler | Volcano is a CNCF incubating project that provides batch scheduling for Kubernetes, specializing in intelligent scheduling and resource optimization for AI training and big data processing. It offers advanced capabilities such as queue management, priority-based task management, and auto scaling, improving cluster resource utilization and task execution efficiency. Unlike the community edition, the Volcano Scheduler add-on is tightly integrated with CCE and the CloudMatrix ecosystem, which makes virtual GPU and NPU scheduling more efficient and optimizes CloudMatrix network topology awareness. |
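A workload opts into Volcano by setting `schedulerName: volcano`. The sketch below builds a community Volcano `Job` (`batch.volcano.sh/v1alpha1`) whose `minAvailable` equals the worker count, so it is gang-scheduled, and places it in a queue. It assumes the `default` queue of a standard Volcano installation; the `high-priority` PriorityClass and the image name are hypothetical, and CCE-specific extensions are not shown.

```python
import json


def volcano_job(name, image, workers, gpus_per_worker, queue="default",
                priority_class=None):
    """Build a community Volcano Job manifest (batch.volcano.sh/v1alpha1)."""
    spec = {
        "schedulerName": "volcano",
        # Gang scheduling: no pod starts until all workers can be placed.
        "minAvailable": workers,
        "queue": queue,
        "tasks": [
            {
                "name": "worker",
                "replicas": workers,
                "template": {
                    "spec": {
                        "restartPolicy": "Never",
                        "containers": [
                            {
                                "name": "worker",
                                "image": image,
                                "resources": {
                                    "limits": {"nvidia.com/gpu": str(gpus_per_worker)}
                                },
                            }
                        ],
                    }
                },
            }
        ],
    }
    if priority_class is not None:
        # Must name a PriorityClass that already exists in the cluster.
        spec["priorityClassName"] = priority_class
    return {
        "apiVersion": "batch.volcano.sh/v1alpha1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": spec,
    }


if __name__ == "__main__":
    job = volcano_job("dist-train", "example.com/train:latest", workers=4,
                      gpus_per_worker=1, priority_class="high-priority")
    print(json.dumps(job, indent=2))
```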

AI Task Management

CCE integrates the cloud native AI engines Kubeflow and Kuberay to deliver robust AI development support. Together they manage the entire AI task lifecycle, improving resource utilization and task execution efficiency.

| Add-on | Description |
|---|---|
| Kubeflow | This add-on is a Kubernetes-based machine learning workflow orchestration system that provides a scalable, component-based framework for building AI pipelines. It supports containerized deployment of popular frameworks such as TensorFlow and PyTorch and connects steps such as data preprocessing, model training, and hyperparameter tuning. Its AI R&D pipelines are highly reliable and can be scaled in or out automatically, helping enterprises build end-to-end machine learning solutions quickly. |
| Kuberay | This add-on is a Kubernetes controller for the Ray distributed computing framework that simplifies the deployment of distributed AI tasks. Key features include automatic Ray cluster creation, node resource isolation, auto scaling, and fault self-healing. Fully integrated with CCE clusters, it lets you quickly set up highly available (HA) Ray clusters for large-scale model training and real-time inference, improving resource utilization and development efficiency. |
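Both engines are driven by custom resources. The sketch below builds two manifests as Python dicts: an upstream Kubeflow Training Operator `PyTorchJob` (`kubeflow.org/v1`) with one master and N workers, and an upstream KubeRay `RayCluster` (`ray.io/v1`) with the in-tree autoscaler enabled and worker bounds. It assumes the upstream API versions; the versions shipped by the CCE add-ons may differ, and the image names are hypothetical.

```python
import json


def _pod_template(container_name, image, gpus=0):
    container = {"name": container_name, "image": image}
    if gpus:
        container["resources"] = {"limits": {"nvidia.com/gpu": str(gpus)}}
    return {"spec": {"containers": [container]}}


def pytorch_job(name, image, workers):
    """Upstream Kubeflow Training Operator PyTorchJob (kubeflow.org/v1)."""
    def replica_spec(count):
        # The operator expects the training container to be named "pytorch".
        return {"replicas": count, "restartPolicy": "OnFailure",
                "template": _pod_template("pytorch", image, gpus=1)}

    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "PyTorchJob",
        "metadata": {"name": name},
        "spec": {"pytorchReplicaSpecs": {"Master": replica_spec(1),
                                         "Worker": replica_spec(workers)}},
    }


def ray_cluster(name, image, min_workers, max_workers):
    """Upstream KubeRay RayCluster (ray.io/v1) with autoscaling bounds."""
    if not 0 <= min_workers <= max_workers:
        raise ValueError("require 0 <= min_workers <= max_workers")
    return {
        "apiVersion": "ray.io/v1",
        "kind": "RayCluster",
        "metadata": {"name": name},
        "spec": {
            "enableInTreeAutoscaling": True,
            "headGroupSpec": {"rayStartParams": {},
                              "template": _pod_template("ray-head", image)},
            "workerGroupSpecs": [{
                "groupName": "workers",
                "replicas": min_workers,
                "minReplicas": min_workers,
                "maxReplicas": max_workers,
                "rayStartParams": {},
                "template": _pod_template("ray-worker", image, gpus=1),
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(pytorch_job("ddp-train", "example.com/pt:latest", 3), indent=2))
    print(json.dumps(ray_cluster("ray-demo", "example.com/ray:latest", 1, 4), indent=2))
```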

AI Data Acceleration

The AI Data Acceleration Engine add-on provides dataset abstraction, data orchestration, and application orchestration. Through transparent data management and optimized scheduling, it lets AI and big data applications use stored data efficiently without any changes to the applications themselves.

| Add-on | Description |
|---|---|
| AI Data Acceleration Engine | This add-on is a data acceleration engine that uses declarative APIs to manage data caching automatically. Its core capabilities include: |
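The idea behind transparent caching is that applications keep reading data the same way while a cache layer absorbs repeated reads from remote storage. The toy read-through LRU cache below illustrates that idea only; it is not the add-on's implementation, and `ReadThroughCache` and `slow_remote_read` are hypothetical names.

```python
from collections import OrderedDict


class ReadThroughCache:
    """Toy read-through LRU cache placed in front of slow storage.

    Callers read by key exactly as they would from the backing store.
    """

    def __init__(self, backend_read, capacity):
        if capacity < 1:
            raise ValueError("capacity must be >= 1")
        self._read = backend_read      # function: key -> bytes (remote storage)
        self._capacity = capacity
        self._entries = OrderedDict()  # least recently used entries first
        self.hits = 0
        self.misses = 0

    def read(self, key):
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        data = self._read(key)
        self._entries[key] = data
        if len(self._entries) > self._capacity:
            self._entries.popitem(last=False)  # evict least recently used
        return data


if __name__ == "__main__":
    remote_reads = []

    def slow_remote_read(key):
        remote_reads.append(key)  # stand-in for an object storage GET
        return f"contents of {key}".encode()

    cache = ReadThroughCache(slow_remote_read, capacity=2)
    for epoch in range(3):  # each training epoch rereads the same shards
        for shard in ["shard-0", "shard-1"]:
            cache.read(shard)
    print(cache.hits, cache.misses, len(remote_reads))  # 4 2 2
```

Multi-epoch training is a good fit for this pattern: after the first epoch, every shard read is served from the cache instead of remote storage.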

AI Service Deployment

The AI service deployment framework consists of the AI Inference Framework, AI Inference Gateway, and kagent add-ons. AI Inference Framework lets you start inference instances through APIs and provides elastic load balancing and auto scaling so that they run efficiently in a cloud native environment. Inference instances deployed by AI Inference Framework can serve requests through AI Inference Gateway, which handles high-concurrency traffic and keeps services stable and efficient in real-world use. In addition, kagent integrates with AI Inference Framework to provide an out-of-the-box agent development experience.
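As an illustration of how auto scaling for inference instances can work, the sketch below applies the Kubernetes Horizontal Pod Autoscaler rule `desired = ceil(current * observed / target)`, with the HPA's default 10% tolerance band and min/max clamping. This document does not state that AI Inference Framework uses this exact rule, so treat it as an assumption. The metric (average in-flight requests per replica) and all names are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """HPA-style replica count from the ratio of observed to target metric.

    current_metric and target_metric are per-replica averages, for example
    in-flight inference requests per replica.
    """
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # within the tolerance band: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(2, 30, 10))    # 6: load is 3x the target
    print(desired_replicas(4, 10.5, 10))  # 4: within the 10% tolerance
    print(desired_replicas(4, 2, 10))     # 1: scale in, clamped at min
    print(desired_replicas(3, 100, 10))   # 10: clamped at max
```

The tolerance band prevents replicas from flapping when load hovers near the target, which matters for inference services whose instances can be slow to start.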

| Add-on | Description |
|---|---|
| AI Inference Framework | This cloud native add-on manages the AI model lifecycle. It lets you customize AI model registration, deployment, and scheduling through declarative APIs so that inference tasks run efficiently. |
| kagent | This add-on is an open-source programming framework that brings AI agents into cloud native environments. Designed for DevOps and platform engineers, kagent lets AI agents operate seamlessly within CCE clusters. |
| AI Inference Gateway | This add-on, built by the Kubernetes community on the Gateway API, manages inference traffic. It can distribute traffic dynamically and implement grayscale (canary) releases based on AI service characteristics such as model name, inference priority, and model version. |
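A grayscale release based on model name and version can be illustrated as a weighted split per model, combined with priority-based load shedding. The toy router below is not AI Inference Gateway's API. The routing table, model and backend names, priority labels, and threshold are all hypothetical. Hashing the request ID makes each request's version choice deterministic, and hashing a user or session ID instead would keep a client pinned to one version.

```python
import hashlib

# Hypothetical routing table: model name -> [(backend, weight), ...].
# Here 10% of "llama-chat" traffic goes to the canary version v2.
ROUTES = {
    "llama-chat": [("llama-chat-v1", 90), ("llama-chat-v2", 10)],
    "embedder": [("embedder-v1", 100)],
}


def pick_backend(model, request_id, routes=ROUTES):
    """Choose a model version by weight, deterministically per request ID."""
    targets = routes.get(model)
    if not targets:
        raise KeyError(f"no route for model {model!r}")
    total = sum(weight for _, weight in targets)
    # Map the request ID to a point in [0, total) via a stable hash.
    digest = hashlib.sha256(request_id.encode()).digest()
    point = int.from_bytes(digest[:8], "big") % total
    for backend, weight in targets:
        if point < weight:
            return backend
        point -= weight
    raise AssertionError("unreachable: weights cover [0, total)")


def admit(priority, queue_depth, shed_threshold):
    """Under load, shed non-critical requests first."""
    return priority == "critical" or queue_depth < shed_threshold


if __name__ == "__main__":
    picks = [pick_backend("llama-chat", f"req-{i}") for i in range(1000)]
    print(picks.count("llama-chat-v2"), "of 1000 requests went to the canary")
    print(admit("best-effort", queue_depth=50, shed_threshold=32))  # False
```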
