Auto Scaling Overview

As applications increasingly run on Kubernetes, the ability to rapidly scale out during peak times and scale in during off-peak hours becomes crucial for efficiently managing resources and reducing costs.

Auto scaling is widely used in CCE. Typical use cases are as follows:

- Online service scaling: Pods and nodes are automatically scaled out during peak hours (for example, holidays and promotions) to handle increased user requests, and scaled in during off-peak hours to cut costs.

- Large-scale computing: The number of pods and nodes is dynamically adjusted to match the demands of computing tasks, which speeds up their execution.

- Deep learning GPU training and inference: GPU resources are dynamically allocated to optimize the efficiency of training and inference tasks. GPU nodes are automatically added or removed as needed to enhance resource utilization.

- Scheduled or periodic resource adjustment: Pods and nodes are automatically scaled at specific times to accommodate scheduled tasks. Resources are dynamically adjusted based on task requirements to ensure smooth execution.

Auto Scaling in CCE

CCE supports auto scaling for workloads and nodes.

- Workload scaling involves adjusting the number or specifications of pods at the scheduling layer to adapt to changes in workload demands. For example, the number of pods can be automatically increased during peak hours to handle more user requests and then scaled down during off-peak hours to reduce costs.

- Node scaling involves dynamically adding or removing compute resources (such as ECSs) at the resource layer based on the scheduling status of pods. This ensures that the cluster has enough resources under high load and minimizes waste when demand is low.

Workload scaling and node scaling can work separately or together. For details, see Using HPA and CA for Auto Scaling of Workloads and Nodes.
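Node scaling reacts to pods that cannot be scheduled because no node has room for them. The sketch below is a deliberately simplified, hypothetical model of that idea: given the CPU and memory requests of pending pods, it estimates how many new nodes of a single flavor would be needed, using first-fit-decreasing bin packing. Real node autoscalers such as the Kubernetes Cluster Autoscaler simulate scheduling against node pool templates and apply many more constraints. The `Pod` class, the `nodes_needed` function, and the node sizes are illustrative assumptions, not CCE or Kubernetes APIs.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    """Resource requests of a pending (unschedulable) pod. Hypothetical model."""
    cpu_millicores: int
    memory_mib: int


def nodes_needed(pending, node_cpu_millicores, node_memory_mib):
    """Estimate how many new nodes of one hypothetical flavor would hold all
    pending pods, using first-fit-decreasing bin packing on CPU and memory."""
    # Remaining [cpu, memory] capacity on each new node opened so far.
    free = []
    # Place the largest CPU requests first, which usually packs more tightly.
    for pod in sorted(pending, key=lambda p: p.cpu_millicores, reverse=True):
        if pod.cpu_millicores > node_cpu_millicores or pod.memory_mib > node_memory_mib:
            raise ValueError("pod does not fit on an empty node of this flavor")
        for slot in free:
            if slot[0] >= pod.cpu_millicores and slot[1] >= pod.memory_mib:
                # Fits on a node already being added: consume its capacity.
                slot[0] -= pod.cpu_millicores
                slot[1] -= pod.memory_mib
                break
        else:
            # No new node has room left, so open another one for this pod.
            free.append([node_cpu_millicores - pod.cpu_millicores,
                         node_memory_mib - pod.memory_mib])
    return len(free)


if __name__ == "__main__":
    # Six pods requesting 1.5 vCPUs and 2 GiB each on hypothetical 4 vCPU / 8 GiB nodes:
    # CPU limits each node to two pods, so three nodes are needed.
    pending = [Pod(1500, 2048) for _ in range(6)]
    print(nodes_needed(pending, 4000, 8192))  # 3
```

Bin packing is used here only because it is the simplest way to turn "pods that do not fit" into a node count; it ignores affinity, taints, and per-node overhead that a production autoscaler must consider.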

Components

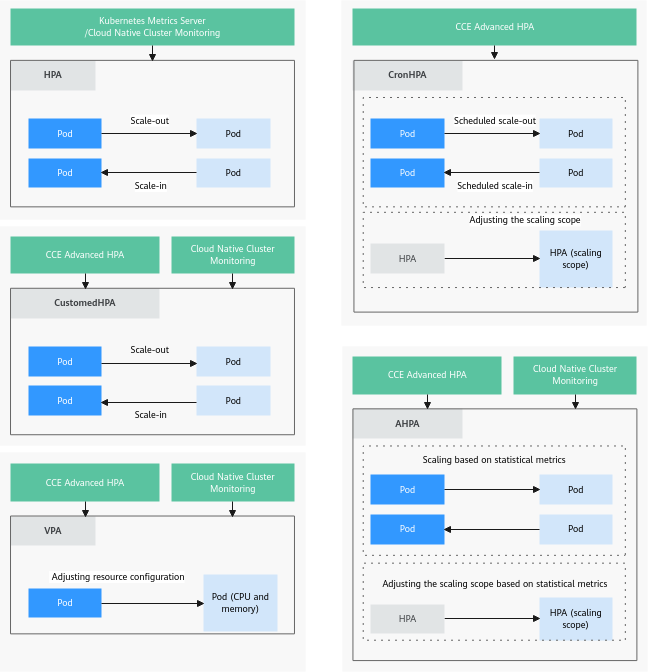

Workload Scaling Types

| Type | Description |
|---|---|
| HPA | Uses HorizontalPodAutoscaler, the built-in Kubernetes component for Horizontal Pod Autoscaling (HPA). CCE adds application-level cooldown time windows and scaling thresholds to Kubernetes HPA. |
| CustomedHPA | An enhanced auto scaling feature that scales Deployments based on metrics (CPU and memory usage) or periodically (at a specific time every day, week, month, or year). |
| CronHPA | Scales workloads in or out at fixed times. It can work with HPA policies to periodically adjust the HPA scaling range, enabling workload scaling in complex scenarios. |
| VPA | Vertical Pod Autoscaler in Kubernetes, which adjusts the resource specifications of pods rather than their number. |
| AHPA | Advanced Horizontal Pod Autoscaler, which scales in advance based on historical data. |
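The upstream Kubernetes documentation describes the core HPA calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when that ratio is within a tolerance (0.1 by default) of 1.0. The Python sketch below implements that formula and then clamps the result to a minimum and maximum replica count, which is the range that CronHPA policies can adjust periodically. The function name and parameters are hypothetical, and the sketch does not model CCE's cooldown windows or scaling thresholds, nor the metric averaging and pod-readiness handling the real controller performs.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas, max_replicas, tolerance=0.1):
    """Simplified HPA replica calculation (hypothetical helper).

    desired = ceil(current * currentMetric / targetMetric), skipped when the
    ratio is within `tolerance` of 1.0, then clamped to [min, max].
    """
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the scaling range. A CronHPA-style policy would change
    # min_replicas/max_replicas at scheduled times; here they are plain inputs.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods at 90% average CPU against a 60% target -> ceil(4 * 1.5) = 6.
    print(desired_replicas(4, 90, 60, min_replicas=2, max_replicas=10))  # 6
    # 63% vs. 60% is within the 10% tolerance, so nothing changes.
    print(desired_replicas(4, 63, 60, min_replicas=2, max_replicas=10))  # 4
```

Clamping last means a scheduled change to the minimum or maximum takes effect even when the metric-driven result would fall outside the new range.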

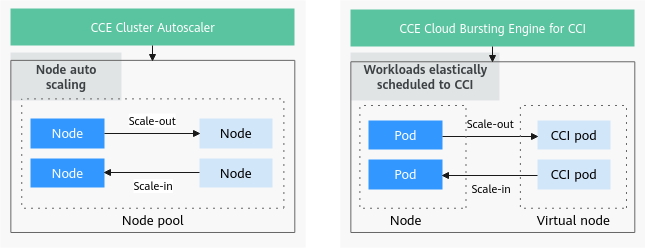

Node Scaling Types

| Description | Application Scenario |
|---|---|
| An open-source Kubernetes component for horizontal node scaling, with CCE optimizations for scheduling, auto scaling, and cost. | Online services, deep learning, and large-scale computing with limited resource budgets |
| A component that extends Kubernetes APIs to serverless container platforms (such as CCI), so you no longer need to manage node resources. | Online traffic surges, CI/CD, big data, and more |
