Integrating AWS ALB Logs into SecMaster

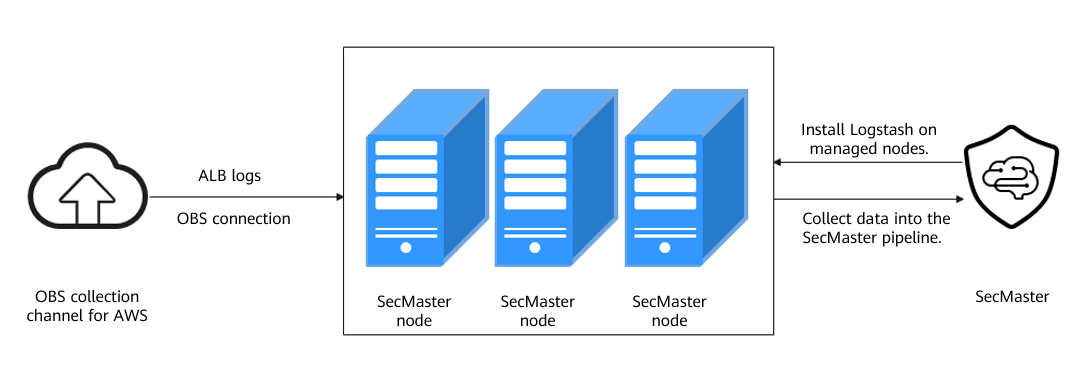

This section describes how to integrate Amazon Web Services (AWS) Application Load Balancer (ALB) logs into SecMaster.

SecMaster can ingest logs stored in AWS object storage. It uses the AWS ALB log parser to parse the logs and then transfers them into a SecMaster pipeline. After log integration is enabled, you can query the logs in SecMaster.

| Step | Description |
|---|---|
| (Optional) Step 1: Buy an ECS | Buy an ECS for installing the log collector. |
| (Optional) Step 2: Buy a Data Disk | Buy a data disk so that the log collector has enough space to run. The data disk and the ECS must be in the same AZ, and the data disk capacity must be at least 100 GB. |
| (Optional) Step 3: Attach a Data Disk | Attach the data disk to the ECS used for the log collector so that the log collector has enough space to run. |
| Step 4: Create a Non-Administrator IAM Account | Create a non-administrator IAM user and password for logging in to SecMaster from the log collector. |
| Step 5: Configure Network Connection | Before collecting data, establish the network connection between the tenant VPC and SecMaster. |
| Step 6: Install the Component Controller (isap-agent) | Manage the log collector node (ECS) on SecMaster. |
| Step 7: Install the Log Collection Component (Logstash) | Configure the log collection process. |
| Step 8: Create a Log Storage Pipeline | Create a log storage location (pipeline) in SecMaster for log storage and analysis. |
| Step 9: Configure a Connector | Configure the log source and destination parameters. |
| Step 10: Add a Parser | Create a parser from the AWS ALB log template. |
| Step 11: Configure a Log Collection Channel | Connect all functional components so that SecMaster and the log collector work properly. |
| Step 12: Perform Security Query and Analysis | After logs are integrated into the SecMaster pipeline, query the logs on SecMaster. |

Notes and Constraints

- The component controller (isap-agent) of the log collector can only run on a Linux x86 or Arm ECS.

- Only a non-administrator IAM user can log in to the console and check information for installing the component controller.

- A collection channel that reads OBS files can be deployed on only one node, because file read records cannot be synchronized across nodes. If there is a large amount of data, configure multiple collection channels, each with its own object prefix, and deploy them on different nodes.
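The last constraint can be planned ahead of time. The following is a minimal sketch, not a SecMaster tool, of how a set of object prefixes could be divided among collector nodes so that each prefix is read by exactly one collection channel. The account ID, region names, and node names are hypothetical, and the per-region subdirectories are an assumption about how the logs are laid out under the configured prefix.

```python
from collections import defaultdict


def assign_prefixes(prefixes, nodes):
    """Assign each object prefix to exactly one node, round-robin."""
    if not nodes:
        raise ValueError("at least one collector node is required")
    plan = defaultdict(list)
    # Sort and de-duplicate so the plan is stable between runs.
    for index, prefix in enumerate(sorted(set(prefixes))):
        plan[nodes[index % len(nodes)]].append(prefix)
    return dict(plan)


# Hypothetical account ID, regions, and node names, for illustration only.
base = "AWSLogs/123456789012/elasticloadbalancing/"
prefixes = [base + region + "/" for region in ("us-east-1", "us-east-2", "us-west-2")]
plan = assign_prefixes(prefixes, ["collector-node-1", "collector-node-2"])

for node, assigned in plan.items():
    print(node, assigned)
```

Each entry in the resulting plan corresponds to one collection channel (one source connection with that prefix) deployed on the named node.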

Prerequisites

- You have enabled the paid SecMaster and created a workspace.

For details, see Buying SecMaster and Creating a Workspace.

Integrating AWS ALB Logs into SecMaster

- Prepare an ECS and install the log collector on the ECS for log collection. Make sure the system disk capacity for the ECS is not less than 50 GB.

If you already have an ECS that meets the requirements, skip this step.

If you need to buy an ECS, see Buying an ECS.

- Buy data disks to ensure that the log collector has sufficient running space.

The ECS on which you plan to install the log collector requires an idle data disk with a capacity of at least 100 GB. This data disk is used for collection management and must be in the same AZ as the ECS.

If you have purchased an ECS and configured data disks by referring to Buying an ECS, skip this step. Otherwise, refer to Buying a Data Disk to buy a data disk.

- Attach the data disk to the ECS that meets the requirements.

This ensures that the log collector has sufficient running space. For details, see Attaching a Data Disk.

Skip this step in any of the following scenarios:

- Scenario 1: You have purchased an ECS and a data disk that meet the requirements by referring to (Optional) Step 1: Buy an ECS and the disk has been attached to the ECS.

- Scenario 2: You already have an ECS that meets the requirements (not purchased by referring to (Optional) Step 1: Buy an ECS), and a data disk that meets the requirements and is purchased based on (Optional) Step 2: Buy a Data Disk. The data disk has been attached to the ECS during the purchase.
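The host requirements from the first three steps (system disk of at least 50 GB, data disk of at least 100 GB, data disk in the same AZ as the ECS) can be checked before you continue. The sketch below is an illustrative helper only; the function name and the AZ strings are hypothetical, and you supply the values yourself from the ECS and disk details.

```python
MIN_SYSTEM_DISK_GB = 50   # system disk minimum stated for the ECS
MIN_DATA_DISK_GB = 100    # data disk minimum stated for the log collector


def check_collector_host(system_disk_gb, data_disk_gb, ecs_az, data_disk_az):
    """Return a list of unmet requirements; an empty list means all are met."""
    problems = []
    if system_disk_gb < MIN_SYSTEM_DISK_GB:
        problems.append(f"system disk is {system_disk_gb} GB, need at least {MIN_SYSTEM_DISK_GB} GB")
    if data_disk_gb < MIN_DATA_DISK_GB:
        problems.append(f"data disk is {data_disk_gb} GB, need at least {MIN_DATA_DISK_GB} GB")
    if ecs_az != data_disk_az:
        problems.append(f"data disk AZ {data_disk_az} differs from ECS AZ {ecs_az}")
    return problems


# Hypothetical values for illustration.
print(check_collector_host(50, 100, "az1", "az1"))
print(check_collector_host(40, 80, "az1", "az2"))
```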

- Create a non-administrator IAM account.

Tenant log collection uses IAM authentication, so you need to create an IAM user (a machine-to-machine account) with the minimum permissions required to access SecMaster APIs. MFA must be disabled for this IAM user. The tenant-side log collector uses this account to log in to and access SecMaster. For details, see Creating a Non-Administrator IAM Account.

- Before collecting data, you need to establish the network connection between the tenant VPC and SecMaster. For details, see Step 5: Configure Network Connection.

- Install the component controller (isap-agent) and add the log collector nodes (ECSs) to SecMaster for management. For details, see Installing the Component Controller (isap-agent).

- Install the log collection component (Logstash) and configure the log collection process. For details, see Installing the Log Collection Component (Logstash).

- Create a log storage location (pipeline) in SecMaster for log storage and analysis.

(Optional) Add a data space for importing data. If a third-party data storage space is available, you do not need to add a data space.

(Optional) Add a storage pipeline in the new data space for importing data. If a storage pipeline is available, you do not need to add a storage pipeline.

- Go to the target workspace management page and open the Security Analysis tab from the navigation pane on the left.

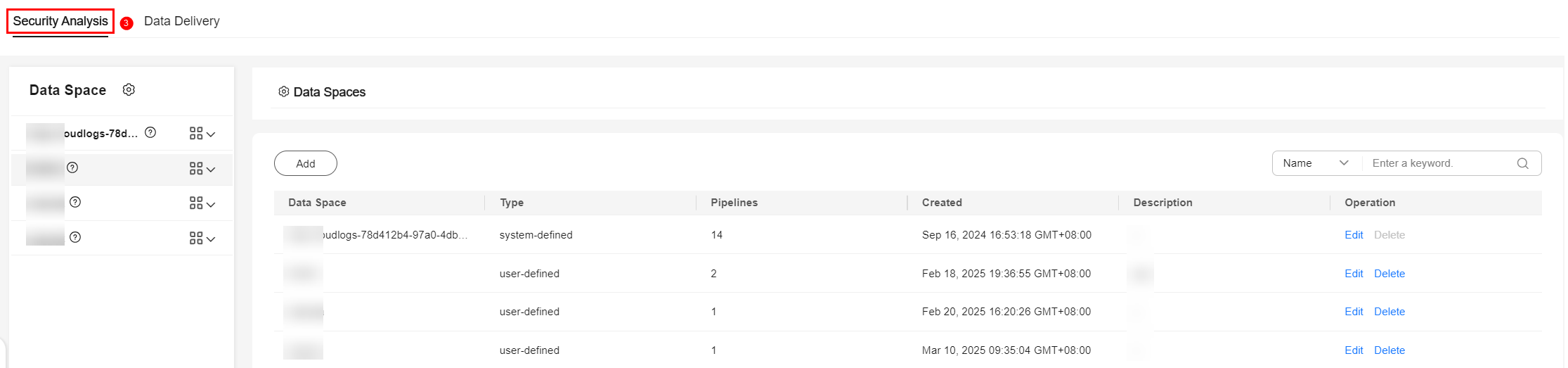

Figure 2 Accessing the Security Analysis tab

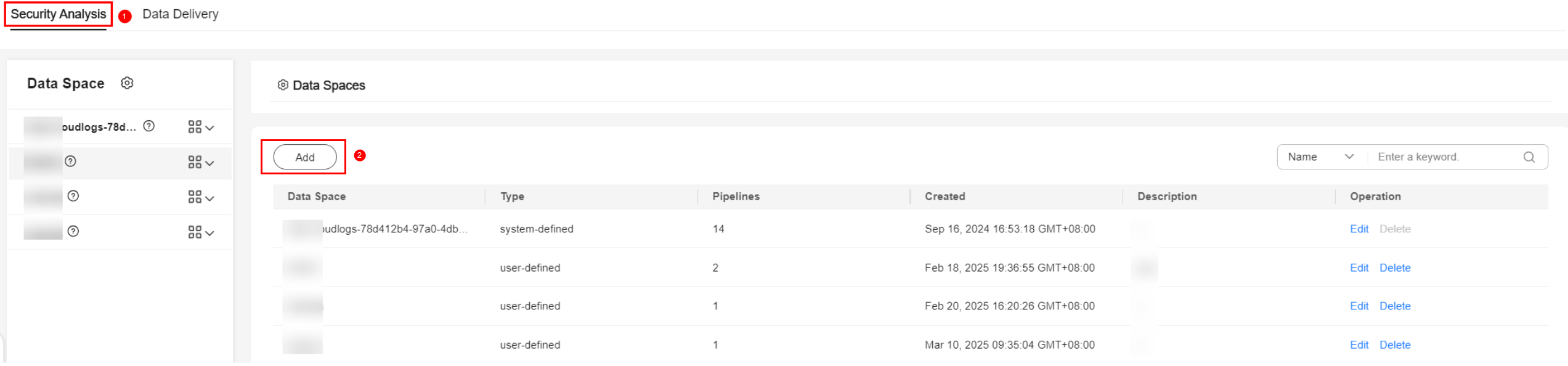

- Add a data space.

- In the upper left corner of the data space list, click Add. The Add Data Space panel is displayed on the right.

Figure 3 Add Data Space

- On the Add Data Space panel, set the parameters for the new data space.

Table 1 Add Data Space

| Parameter | Description |
|---|---|
| Data Space | Enter a data space name. The name can contain 5 to 63 characters. Only letters, numbers, and hyphens (-) are allowed. The name cannot start or end with a hyphen (-) or contain consecutive hyphens (-). The name cannot be the same as any other data space name on Huawei Cloud. Example: DataTransfer |
| Description | (Optional) Remarks on the data space. |

- Click OK.


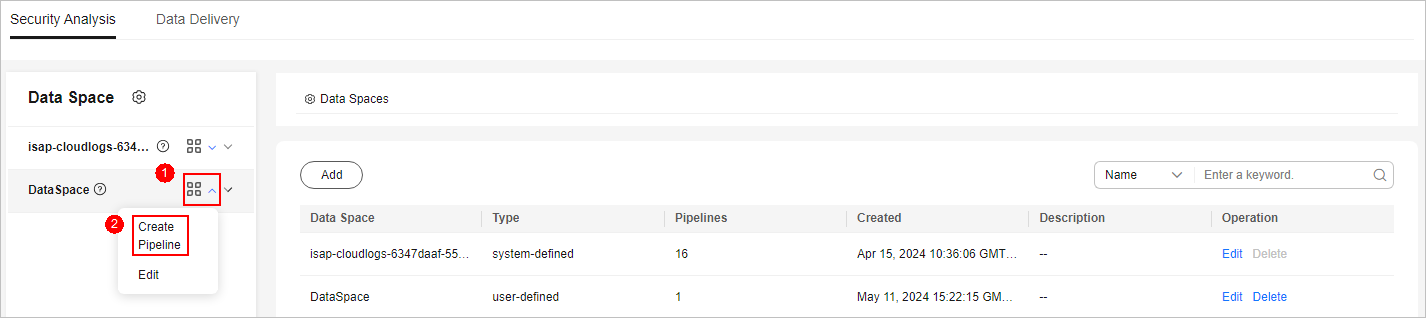

- In the data space navigation tree on the left, locate the data space you added, click the icon next to the data space name, and select Create Pipeline from the drop-down list.

Figure 4 Creating a pipeline

- On the Create Pipeline page, configure pipeline parameters. For details about the parameters, see Table 2.

Table 2 Creating a pipeline

| Parameter | Description |
|---|---|
| Data Space | Data space to which the pipeline belongs, which is generated by the system by default. |
| Pipeline Name | Name of the pipeline. The name can contain 5 to 63 characters. Only letters, numbers, and hyphens (-) are allowed. The name cannot start or end with a hyphen (-) or contain consecutive hyphens (-). The name must be unique in the data space. Pipeline name aws_alb_log is recommended. |
| Shards | The number of shards of the pipeline. The value ranges from 1 to 64. Retain the default value. An index can store a large amount of data that exceeds the hardware limits of a single node. To solve this problem, Elasticsearch subdivides an index into multiple pieces called shards. When creating an index, you can specify the number of shards as required. Each shard can be hosted on any node in the cluster, and each shard is an independent and fully functional index. |
| Lifecycle | Lifecycle of data in the pipeline. Value range: 7 to 180. Retain the default value. |
| Description | (Optional) Remarks on the pipeline. |

- Click OK. After the pipeline is created, you can click the data space name to check the created pipeline.

You are advised to use different pipelines to store data from different sources so that you can query and analyze the data.
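The data space naming rules in Table 1 can be checked locally before you submit the form. The following is a minimal sketch of those rules as written; the function name is hypothetical, and the uniqueness requirement cannot be verified offline, so it is not covered.

```python
import re

# 5 to 63 characters; letters, digits, and hyphens only;
# no leading or trailing hyphen; no consecutive hyphens.
_NAME_PATTERN = re.compile(r"(?!-)(?!.*--)[A-Za-z0-9-]{5,63}(?<!-)")


def is_valid_data_space_name(name):
    """Return True if the name satisfies the format rules listed in Table 1."""
    return _NAME_PATTERN.fullmatch(name) is not None


for candidate in ("DataTransfer", "data", "-leading", "double--hyphen"):
    print(candidate, is_valid_data_space_name(candidate))
```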


- Configure the connector. You need to configure log source and log destination parameters.

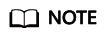

- Go to the workspace management page and open the Connections page from the navigation pane on the left.

Figure 5 Accessing the Connections page

- Add a source for the data connection.

- On the Connections tab, click Add.

- Configure the data connection source details.

This section uses OBS as an example. For more connection types, see Connector Rules.

Table 3 Log source settings

| Parameter | Description |
|---|---|
| Connection Method | Select Source. |
| Connection Type | Select OBS. |
| Title | Name of the data connection source. In this example, set it to in-s3-aws-alb. |
| Description | Enter a brief description of the data source. In this example, "AWS ALB Log" is used. |
| Region | Region where the AWS bucket is located, for example, us-east-2. |
| Bucket | Name of the bucket that stores the logs. |
| endpoint | Endpoint of the bucket, for example, https://s3.us-east-2.amazonaws.com. The format is fixed. Replace the region with the actual one. |
| AK | Access key (AK) used to access the bucket. |
| SK | Secret key (SK) used to access the bucket. |
| Prefix | Log object directory, for example, AWSLogs/aws-account-id/elasticloadbalancing/. |
| Temporary_directory | Directory used as the runtime cache, for example, /tmp. |
| Packet label | Source tag, for example, aws-alb. |
| Memory path | Path used to record read progress. It prevents a full traversal of the objects after a restart. |

- After the setting is complete, click Confirm in the lower right corner of the page.

- Add a destination for the data connection.

- On the Connections tab, click Add.

- Configure the data connection destination details.

Table 4 Log transfer destination

| Parameter | Description |
|---|---|
| Connection Method | Select Destination. |
| Connection Type | Select SecMaster. |
| Title | Enter a custom name for the data connection destination. In this example, set it to out-pipe-aws-alb-log. |
| Description | Enter a custom description of the log data destination. In this example, "AWS ALB Log" is used. |
| Type | User-defined log destination type. Select Tenant. |
| Pipe | Select the pipeline created in Step 8. In this example, select aws_alb_log. |
| Domain_name | Enter the domain account information of the IAM user used to log in to the console. |
| User_name | Enter the user name of the IAM user used to log in to the console. |
| User_password | Enter the password of that IAM user. |
| Advanced Settings | No configuration is required. |

- After the setting is complete, click Confirm in the lower right corner of the page.
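Because the endpoint and prefix in the log source settings follow fixed formats, they can be derived from the region and the AWS account ID instead of being typed by hand. The sketch below only builds the strings shown in the examples above. The helper names and the account ID are hypothetical, and the dictionary is just a convenient way to review the values before entering them on the page.

```python
def s3_endpoint(region):
    """Build the endpoint in the fixed format https://s3.<region>.amazonaws.com."""
    if not region or "/" in region or " " in region:
        raise ValueError(f"unexpected region value: {region!r}")
    return f"https://s3.{region}.amazonaws.com"


def alb_log_prefix(aws_account_id):
    """Build the log object directory for the given AWS account ID."""
    return f"AWSLogs/{aws_account_id}/elasticloadbalancing/"


# Hypothetical region and account ID for illustration; replace with your own.
source_settings = {
    "Title": "in-s3-aws-alb",
    "Region": "us-east-2",
    "endpoint": s3_endpoint("us-east-2"),
    "Prefix": alb_log_prefix("123456789012"),
    "Temporary_directory": "/tmp",
    "Packet label": "aws-alb",
}

for name, value in source_settings.items():
    print(f"{name}: {value}")
```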


- Add a parser. Create a parser from the AWS ALB log template.

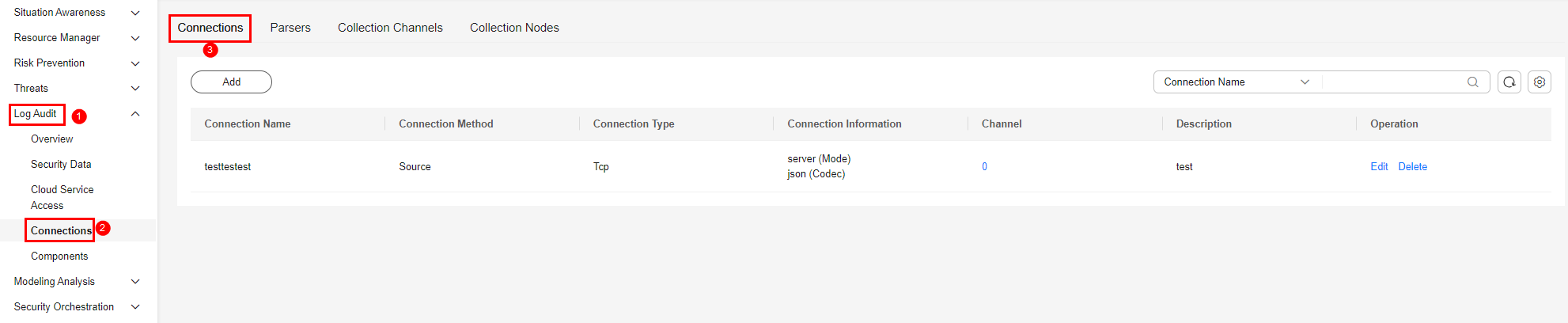

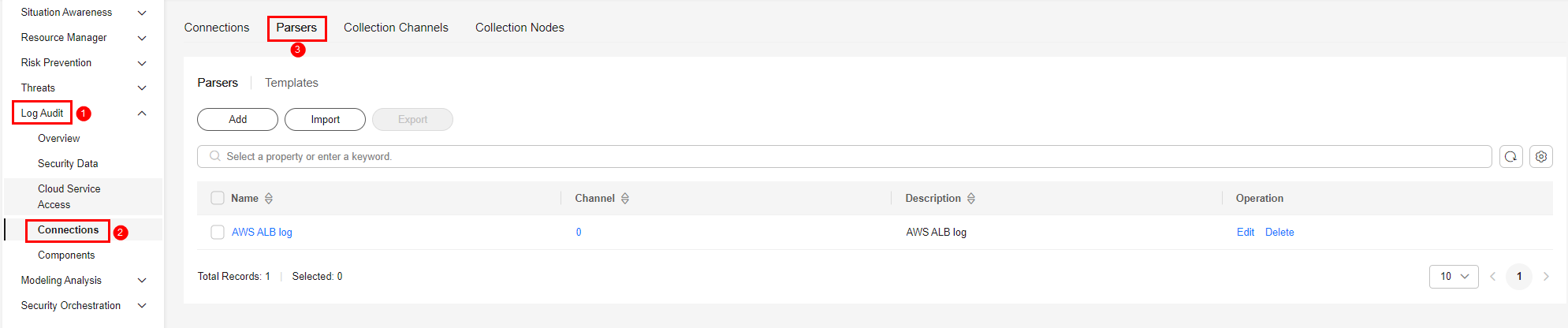

- Go to the target workspace management page and use the navigation pane on the left to open the page that contains the Parsers tab. On the displayed page, click the Parsers tab.

Figure 6 Accessing the Parsers tab

- On the Parsers tab, click the Templates tab. In the Operation column of the parser template named AWS ALB log, click Create by Template. The Create Parser page is displayed.

- On the Create Parser page, configure parser parameters.

- Name: Retain the default value, which is AWS ALB log.

- Description: Retain the default value. The default value is AWS ALB log.

- Parsing rule: Retain the default value.

- Click OK. Return to the Parsers tab to check the created parser.

Figure 7 Checking the created parser
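To get a feel for what a parser does with an ALB access log entry, the following sketch splits one line into its leading space-separated fields. It is an illustration only and is not the parsing rule used by the SecMaster template. The sample line uses made-up values, the field names are chosen for this example, and only the first 14 fields are named.

```python
import shlex

# Leading fields of an ALB access log entry, in order (names chosen for this sketch).
FIELDS = [
    "type", "time", "elb", "client", "target",
    "request_processing_time", "target_processing_time",
    "response_processing_time", "elb_status_code", "target_status_code",
    "received_bytes", "sent_bytes", "request", "user_agent",
]


def parse_alb_line(line):
    """Split one ALB access log line and name its leading fields."""
    values = shlex.split(line)  # keeps quoted fields such as the request together
    if len(values) < len(FIELDS):
        raise ValueError("line has fewer fields than expected")
    record = dict(zip(FIELDS, values))
    # The client field has the form ip:port.
    record["client_ip"], _, record["client_port"] = record["client"].rpartition(":")
    return record


# Made-up sample entry for illustration.
sample = (
    "http 2018-07-02T22:23:00.186641Z app/my-loadbalancer/50dc6c495c0c9188 "
    "192.168.131.39:2817 10.0.0.1:80 0.000 0.001 0.000 200 200 34 366 "
    '"GET http://www.example.com:80/ HTTP/1.1" "curl/7.46.0" - -'
)
record = parse_alb_line(sample)
print(record["client_ip"], record["elb_status_code"], record["request"])
```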

- Configure log collection channels, connect each functional component, and ensure that SecMaster and log collectors work properly.

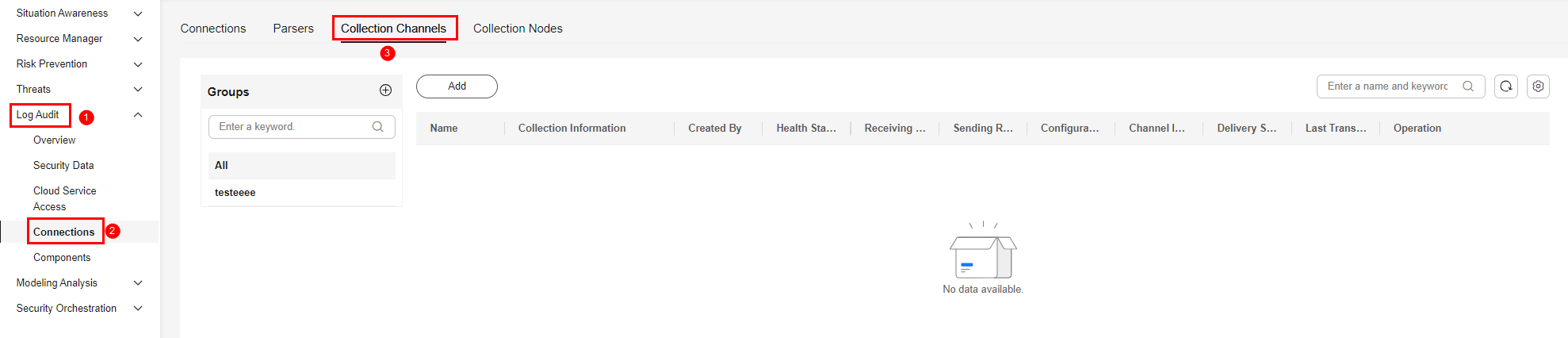

- Go to the workspace management page and use the navigation pane to open the page that contains the Collection Channels tab. On the displayed page, click the Collection Channels tab.

Figure 8 Accessing the Collection Channels tab

- Add a log collection channel group.

- On the Collection Channels tab, click the icon on the right of Groups.

- Enter a group name, for example, AWS ALB logs, and save it.

- Create a log collection channel.

- On the right of the group list, click Add.

- In the Configure Basic Configuration step, configure basic information.

Table 5 Basic information parameters

| Section | Parameter | Description |
|---|---|---|
| Basic Information | Title | Enter a custom collection channel name. In this example, "AWS ALB logs" is used. |
| Basic Information | Channel grouping | Select the group created when you added the log collection channel group. In this example, "AWS ALB logs" is used. |
| Basic Information | (Optional) Description | Enter the description of the collection channel. |
| Configure Source | Source Name | Select the log source name added in Step 9. In this example, select in-s3-aws-alb. After you select a source, the system automatically generates the information about the selected source. |
| Destination Configuration | Destination Name | Select the log destination name added in Step 9. In this example, select out-pipe-aws-alb-log. After you select a destination, the system automatically generates the information about the selected destination. |

- Click Next in the lower right corner of the page.

- On the displayed Configure Parser page, select the AWS ALB log parser and click Next in the lower right corner of the page.

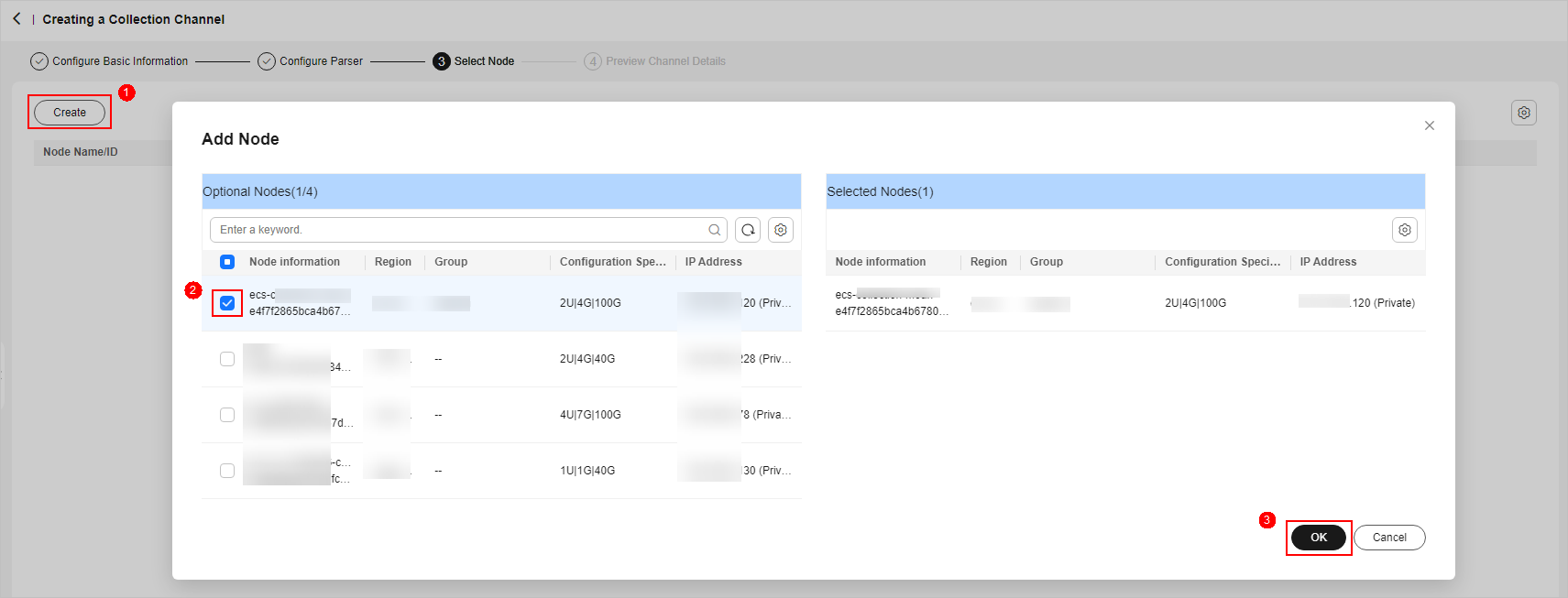

- On the Select Node page, click Create. In the displayed dialog box, select the ECS node managed in Step 6 and click OK.

Figure 9 Selecting a node

- Click Next in the lower right corner of the page.

- On the Preview Channel Details page, confirm the configuration and click Save and Execute.

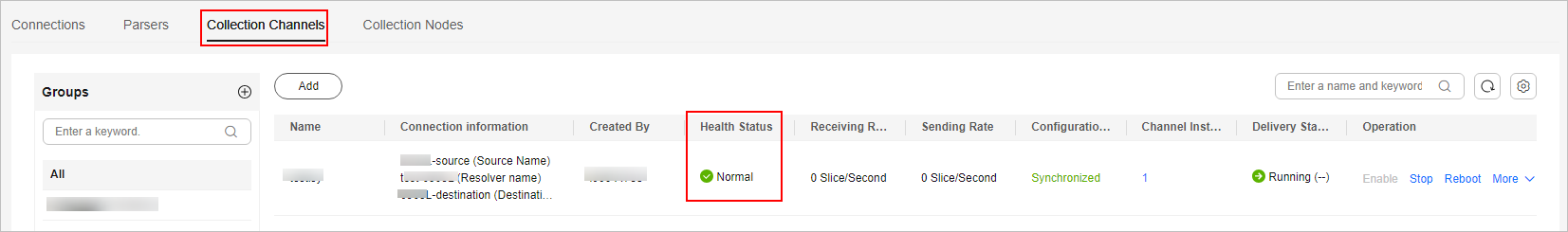

- On the Collection Channels tab, if the health status of a collection channel is Normal, the collection channel is successfully delivered.

Figure 10 Collection channels configured


- After logs are integrated into the SecMaster pipeline, query logs on SecMaster.

- Go to the target workspace management page and use the navigation pane on the left to locate the pipeline (aws_alb_log in this example) created or used in Step 8. Select the pipeline to view the parsed log data.

- For details about how to query and analyze logs, see Querying and Analyzing Logs.
