Scaling In a Cluster

You can scale in your clusters on the console to release unnecessary compute and storage resources provided by DWS.

- Scale-in is supported only by pay-per-use clusters of version 8.1.1.300 and later. For clusters billed in yearly/monthly mode, the function is supported only in version 8.2.1 and later.

- By default, scaled-in nodes are charged by quantity.

- When you scale in a storage-compute coupled data warehouse cluster, the storage specifications must stay the same as those the cluster already uses.
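The version limits above can be expressed as a small check. This is a minimal sketch, not part of DWS: the function names `parse_version` and `supports_scale_in` and the billing-mode keys are hypothetical, and it assumes cluster versions are plain dotted numbers such as `8.1.1.300`.

```python
from __future__ import annotations


def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted version string such as "8.1.1.300" into a tuple of ints."""
    return tuple(int(part) for part in version.split("."))


# Minimum versions taken from the notes above, keyed by billing mode.
# The key names are illustrative, not identifiers used by DWS.
MIN_SCALE_IN_VERSION = {
    "pay-per-use": parse_version("8.1.1.300"),
    "yearly/monthly": parse_version("8.2.1"),
}


def supports_scale_in(cluster_version: str, billing_mode: str) -> bool:
    """Return True if the cluster version meets the minimum for its billing mode."""
    minimum = MIN_SCALE_IN_VERSION[billing_mode]
    # Tuples compare element by element, so (8, 2, 1) > (8, 1, 1, 300).
    return parse_version(cluster_version) >= minimum
```

Comparing integer tuples rather than raw strings avoids ordering mistakes such as treating `8.10.0` as older than `8.2.1`.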

Impact on the System

- Before the scale-in, close the client connections that have created temporary tables, because temporary tables created before or during the scale-in will become invalid and operations performed on these temporary tables will fail. Temporary tables created after the scale-in will not be affected.

- When you start a scale-in, an automated snapshot of the cluster is created first. If you do not need the snapshot, you can disable the automated backup function on the scale-in page.

- Ensure that the skew rate is below 10% before scale-in. There is no specific guideline for the dirty page rate. However, it is advisable to keep the dirty page rate at 20–30% for large tables exceeding 50 GB in size.

- While a cluster is being scaled in, several functions are disabled, including cluster restart, cluster scale-out, snapshot creation, node management, intelligent O&M, resource management, parameter modification, security configurations, log service, database administrator password resetting, and cluster deletion.

- During offline scale-in, stop all services or run only a few query statements. Table redistribution places a shared lock on each table, so insert, update, delete, and DDL operations on that table are blocked for a long time and may hit a lock wait timeout. A table becomes accessible again once it has been redistributed. Do not run queries that take more than 20 minutes during redistribution (by default, redistribution waits up to 20 minutes to acquire the write lock). Otherwise, data redistribution may fail due to a lock wait timeout.

- During online scale-in, you can perform insert, update, and delete operations on tables, but data updates may still be blocked for a short period of time. Redistribution consumes lots of CPU and I/O resources, which will greatly impact job performance. Therefore, perform redistribution when services are stopped or during periods of light load.

- If a node is deleted while DDL statements are being executed (to create a schema or function) during online scale-in, errors may occur because the DN cannot be found. To resolve this issue, you can simply retry the statements.

- If a cluster scale-in fails, the database does not automatically roll back the scale-in operation, and no O&M operations can be performed. In this case, click Scale In on the console to try again.

- In the cloud native 9.0.2 scale-out scenario, if the number of buckets allocated to each DN is not within the range [3, 20], the system adjusts the number of buckets. You can view the number of buckets using the GUC parameter table_buckets.

- Currently, bucket scaling supports only the offline mode. The procedure is the same as the existing scaling procedure. The system automatically determines whether bucket scaling is needed and performs it.

- During scaling, the cluster will be restarted and all connections will be closed. The restart takes several minutes.

- After the restart is complete, the database can be read but cannot be written until data redistribution is complete.
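The advice above to retry DDL statements that fail during online scale-in can be wrapped in a small helper. The following is a minimal sketch under stated assumptions: `retry_statement` and its parameters are hypothetical names, and `run` stands for any callable that executes your statement through whatever database driver you use. The sketch catches every exception for brevity; in practice you would likely narrow this to the driver's error type for the "DN cannot be found" failure.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_statement(
    run: Callable[[], T],
    attempts: int = 3,
    delay_seconds: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `run` until it succeeds or `attempts` calls have failed."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return run()
        except Exception:
            # Assumption: any failure is treated as retryable. Narrow this
            # to your driver's error class in real code.
            if attempt == attempts:
                raise  # out of attempts: surface the last error
            sleep(delay_seconds)
    raise AssertionError("unreachable")
```

The `sleep` parameter exists only so the wait can be replaced in tests; the attempt count and delay are illustrative values, not DWS recommendations.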

Prerequisites

- The cluster is in the Available state, is not read-only, and has no data redistribution in progress.

- A cluster configuration file has been generated, and configuration information is consistent with the current cluster configuration.

- Before the scale-in operation starts, the value of default_storage_nodegroup is installation.

- The cluster is configured in the ring mode. A ring is the smallest unit for scale-in. Four or five hosts form a ring. The primary, standby, and secondary DNs are deployed in this ring. If the current cluster has only one cluster ring, scale-in is not supported and the scale-in button is unavailable.

- The scale-in host does not contain the GTM, ETCD, or CM Server component.

- There are no CNs on the nodes to be scaled in.

- Scale-in does not support rollback but supports retry. A data redistribution failure after a scale-in does not affect services. You can complete the scale-in at another appropriate time, but do not postpone it indefinitely. Otherwise, unbalanced data distribution will persist for a long time.

- Before redistribution, ensure that the data_redis schema in the corresponding database is reserved for redistribution and that no user operation on it or its tables is allowed. During redistribution, data_redis is used. After the operation is complete, the schema will be deleted. User tables (if any) in the schema will also be deleted.

- gs_cgroup cannot be used during scale-in.

- Before the scale-in, check the remaining capacity of the cluster. The nodes that remain after a scale-in must have sufficient space to store the data of the entire cluster. Otherwise, the scale-in cannot be properly performed.

- The used physical disk space on each node is less than 80%.

- All the users and roles use less than 80% of resource quota in total.

- The estimated space usage after scale-in must be less than 80%.

- The available space is at least 1.5 times the size of the largest single table.

- Automatic removal of faulty CNs is disabled during the scale-in and is enabled after the scale-in is complete.
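The disk space prerequisites above lend themselves to a quick calculation before you open the scale-in page. The sketch below is illustrative only: the `Node` class and `check_scale_in_capacity` function are hypothetical, the sizes are values you would collect yourself, and it assumes that data is spread evenly across the remaining nodes after redistribution and that "available space" means the free space left on those nodes. It does not cover the user and role resource quota condition.

```python
from dataclasses import dataclass

USAGE_LIMIT = 0.80          # 80% threshold from the prerequisites
LARGEST_TABLE_FACTOR = 1.5  # free space must cover 1.5x the largest table


@dataclass
class Node:
    name: str
    capacity_gb: float
    used_gb: float


def check_scale_in_capacity(nodes, nodes_to_remove, largest_table_gb):
    """Return a list of problems; an empty list means every check passed."""
    problems = []

    # Check 1: used physical disk space on each node is below 80%.
    for node in nodes:
        if node.used_gb / node.capacity_gb >= USAGE_LIMIT:
            problems.append(f"{node.name}: disk usage is at or above 80%")

    remaining = [n for n in nodes if n.name not in nodes_to_remove]
    if not remaining:
        return problems + ["no nodes would remain after scale-in"]

    # Check 2: estimated usage after scale-in is below 80%.
    # Assumption: all existing data ends up evenly on the remaining nodes.
    total_used = sum(n.used_gb for n in nodes)
    remaining_capacity = sum(n.capacity_gb for n in remaining)
    if total_used / remaining_capacity >= USAGE_LIMIT:
        problems.append("estimated usage after scale-in is at or above 80%")

    # Check 3: free space is at least 1.5 times the largest single table.
    free_after = remaining_capacity - total_used
    if free_after < LARGEST_TABLE_FACTOR * largest_table_gb:
        problems.append("free space is below 1.5x the largest table")

    return problems
```

For example, six nodes of 1000 GB each with 300 GB used, scaled in to three nodes, would hold 1800 GB on 3000 GB of capacity (60%), which passes the estimated-usage condition. The console inspection remains the authoritative check.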

Procedure

- Log in to the DWS console.

- Choose Dedicated Clusters > Clusters. By default, all clusters of the user are displayed.

- In the Operation column of the target cluster, choose More > Scale Node > Scale In.

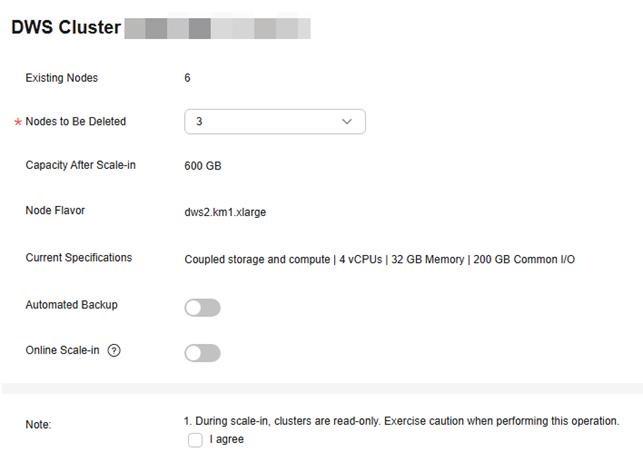

- You can select the number of nodes to be scaled in. Automated Backup and Online Scale-in are disabled by default. The storage-compute decoupled cluster does not have the Automated Backup switch.

Before scaling in the cluster, verify that it meets the inspection conditions. Click Immediate Inspection to run the inspection, and proceed to the next step only if it passes. For more information, see Viewing Inspection Results.

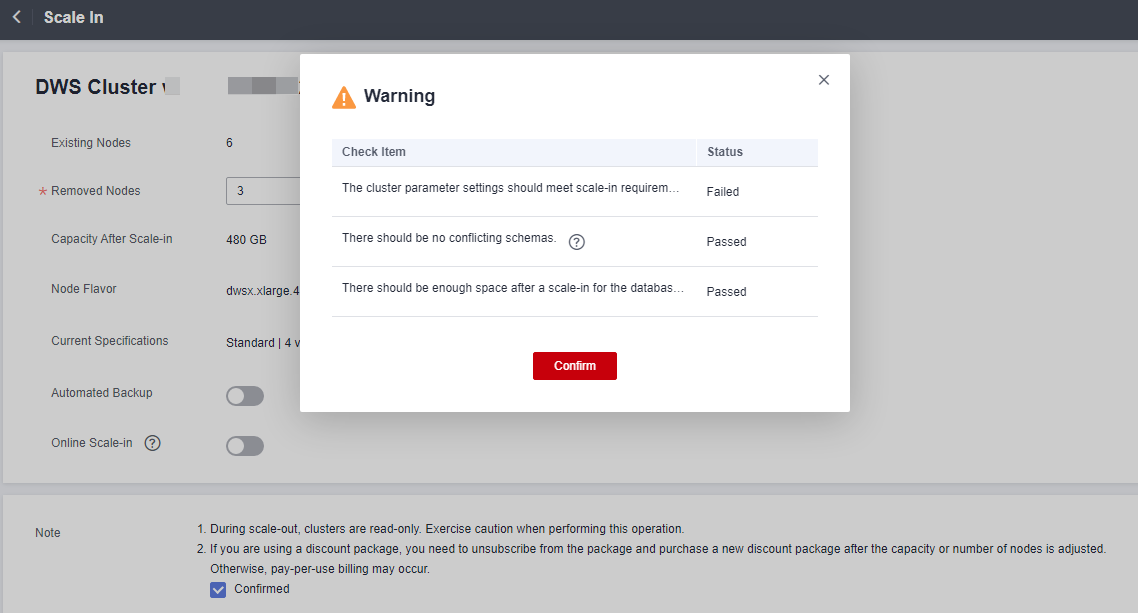

- Click Next: Confirm. The system will check the cluster status before scale-in. If your cluster fails the check, an error message will be displayed.

- After the check is passed, click Confirm to return to the cluster list. The cluster status is Scaling in. Wait for a while.

- (Yearly/Monthly billing mode) After the cluster scale-in is complete, you will be prompted to delete idle nodes. Click OK to delete idle nodes.

- On the Delete Node page, view the resource information and click Submit.

- (Yearly/Monthly billing mode) On the displayed resource confirmation page, confirm the refund information and click Submit.

- After the scale-in of a pay-per-use cluster is complete, specified nodes will be automatically removed in the background. For a yearly/monthly cluster, you need to manually delete the nodes.

- If the cluster parameters fail the check, the scale-in will fail. To avoid this problem, ensure your parameter settings are correct.

- If schemas fail the check, the scale-in will fail. To avoid this problem, check whether any schema that conflicts with the scale-in exists.

- If the disk space fails the check, the scale-in may fail or the cluster may become read-only after the scale-in. To avoid this problem, increase your cluster disk capacity.
