Containerized Applications

IEF delivers containerized applications to edge nodes. This section describes how to create a custom edge application.

Constraints

- When the disk usage of an edge node exceeds 70%, the image reclamation mechanism is started to reclaim the disk space occupied by the container image. In this case, if you deploy a containerized application, the application container will take a long time to start. Therefore, properly plan the disk space of the edge node before deploying the containerized application.

- When you create a containerized application, the edge node pulls a container image from SWR. If the image is too large and the edge node has a limited download bandwidth, the image fails to be pulled. In this case, a message will be displayed on the IEF console, indicating that the containerized application fails to be created. However, the image pull will not be interrupted. After the container image is pulled successfully, the containerized application will be automatically created. To prevent such a problem, you can pull the container image to the edge node and then create a containerized application.

- The architecture of the container image to be used must be the same as that of the edge node on which a containerized application is to be deployed. For example, if the edge node uses x86, the container image must also use x86.

- In a platinum service instance, the number of application instances can be changed to zero.
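The first and third constraints above can be checked on an edge node before deployment. The following is a minimal sketch, not an IEF tool: the path to inspect, the alias table, and all function names are assumptions made for illustration, and the file system that actually holds container images may differ on your node.

```python
import platform
import shutil

# Threshold taken from the constraint above: reclamation starts beyond 70%.
RECLAIM_THRESHOLD_PERCENT = 70.0

# Hypothetical alias table for illustration; extend it for other architectures.
ARCH_ALIASES = {
    "x86_64": "x86",
    "amd64": "x86",
    "x86": "x86",
    "aarch64": "arm64",
    "arm64": "arm64",
}


def disk_usage_percent(path: str = "/") -> float:
    """Return the used share of the file system holding `path`, in percent."""
    usage = shutil.disk_usage(path)
    return usage.used / usage.total * 100.0


def reclamation_expected(used_percent: float) -> bool:
    """Return True if usage exceeds the 70% threshold named in the constraint."""
    return used_percent > RECLAIM_THRESHOLD_PERCENT


def same_architecture(image_arch: str, node_arch: str) -> bool:
    """Compare two architecture names after normalizing common aliases."""
    def normalize(name: str) -> str:
        key = name.strip().lower()
        return ARCH_ALIASES.get(key, key)

    return normalize(image_arch) == normalize(node_arch)


if __name__ == "__main__":
    used = disk_usage_percent("/")
    print(f"disk usage: {used:.1f}%")
    print("image reclamation expected:", reclamation_expected(used))
    print("node architecture:", platform.machine())
```

Running the script on the edge node prints the current usage and the architecture reported by the OS, which you can compare with the architecture of the image you plan to deploy.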

Creating an Edge Application

- Log in to the IEF console, and click Switch Instance on the Dashboard page to select a platinum service instance.

- In the navigation pane, choose Edge Applications > Containerized Applications. Then, click Create Containerized Application in the upper right corner.

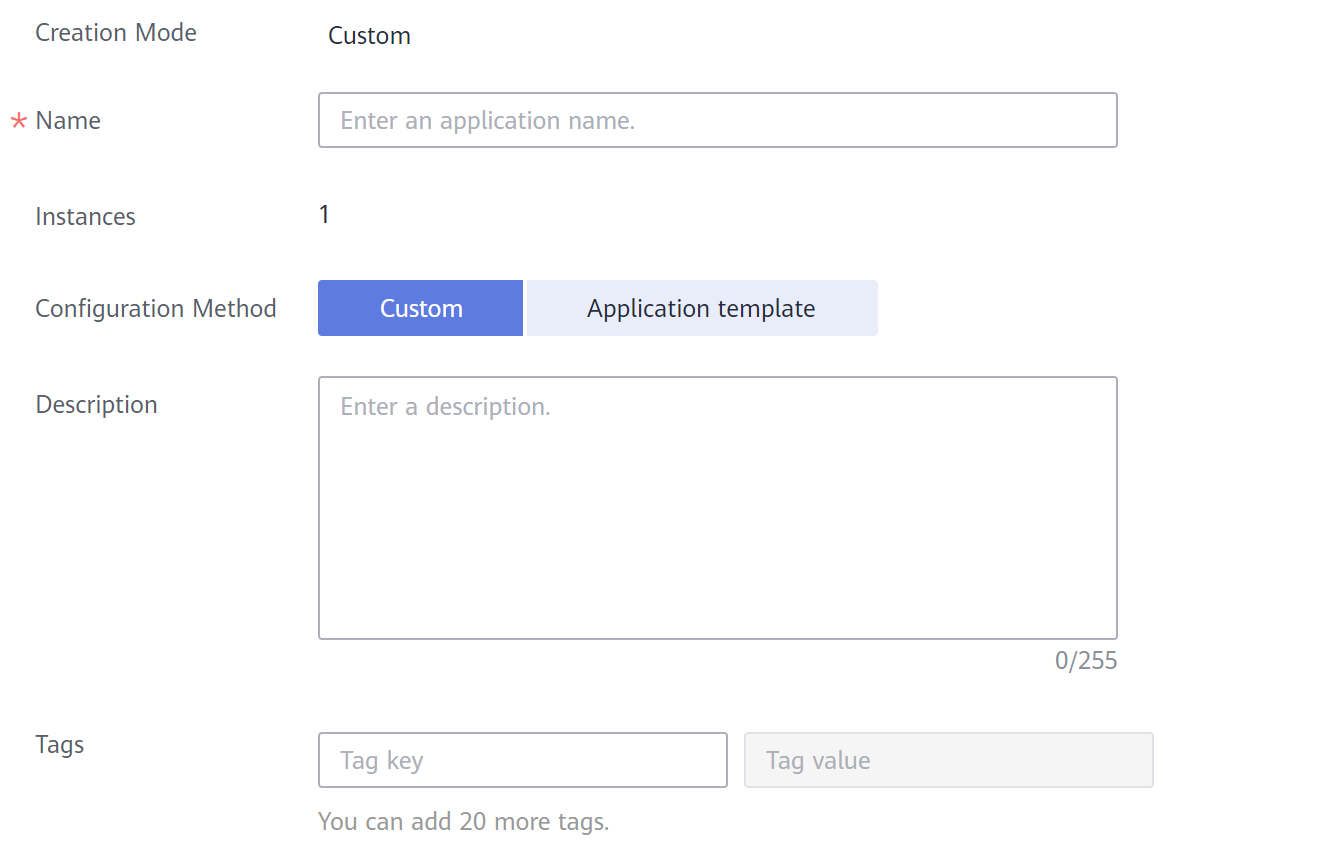

- Specify basic information.

- Name: name of a containerized application.

- Instances: number of instances of a containerized application. Multiple instances are allowed.

- Configuration Method

- Custom: Configure the application step by step. For details, see steps 4 to 6.

- Application template: Select a predefined application template and modify it based on your requirements. This method reduces repeated operations. The configurations in a template are the same as those set in steps 4 to 6. For details on how to create a template, see Application Templates.

- Tags

Tags can be used to classify resources, facilitating resource management.

Figure 1 Basic information

- Configure containers.

Click Use Image under the image to be used to deploy the application.

- My Images: shows all the images you have created in SWR.

- Shared Images: displays images shared by other users. Shared images are managed and maintained in SWR. For details, see Sharing a Private Image.

After selecting an image, specify container configurations.

- Image Version: Select the version of the image to be used to deploy the application.

Do not use version latest when deploying containers in the production environment. Otherwise, it will be difficult to determine the version of the running image and to roll back the application properly.

- Container Specifications: Specify CPU, memory, Ascend AI accelerator card, and GPU quotas.

- Ascend AI accelerator card: The AI accelerator card configuration of the containerized application must be the same as that of the edge node on which the application is deployed. Otherwise, the application will fail to be created. For details, see Registering an Edge Node.

For NPUs that have been partitioned through virtualization, only one virtualized NPU can be mounted to a container. The virtualized NPU can be allocated to another container only after the original container exits.

The following table lists the NPU types supported by AI accelerator cards.

Table 1 NPU types

| Type | Description |
| --- | --- |
| Ascend 310 | Ascend 310 chips |
| Ascend 310B | Ascend 310B chips |

Figure 2 Container configuration
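The advice above about not using version latest in production can be enforced with a small check before deployment. The sketch below is an illustration only: the function names and the example image addresses are hypothetical, and it relies on the general container convention that a reference without a tag resolves to latest.

```python
def image_tag(reference: str) -> str:
    """Return the tag of an image reference, or "latest" when none is given."""
    # Drop a digest suffix such as "@sha256:..." if present.
    name = reference.split("@", 1)[0]
    # Only the last path segment can carry a tag; an earlier colon is a registry port.
    last_segment = name.rsplit("/", 1)[-1]
    if ":" in last_segment:
        return last_segment.rsplit(":", 1)[1]
    return "latest"


def is_pinned(reference: str) -> bool:
    """Return True if the reference names a digest or a tag other than latest."""
    if "@" in reference:
        return True
    return image_tag(reference) != "latest"


if __name__ == "__main__":
    # Hypothetical image addresses, used only to exercise the check.
    for ref in ("registry.example.com/group/app:1.4.2",
                "registry.example.com:5000/group/app"):
        print(ref, "->", image_tag(ref), "| pinned:", is_pinned(ref))
```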

You can also configure the following advanced settings for the container:

- Command

A container image has metadata that stores image details. If lifecycle commands and arguments are not set, IEF runs the default commands and arguments provided during image creation, that is, ENTRYPOINT and CMD in the Dockerfile.

If the commands and arguments used to run a container are set during application creation, they overwrite the default ENTRYPOINT and CMD that were defined during image building. The rules are as follows:

Table 2 Commands and arguments used to run a container

| Image ENTRYPOINT | Image CMD | Command to Run a Container | Arguments to Run a Container | Command Executed |
| --- | --- | --- | --- | --- |
| [touch] | [/root/test] | Not set | Not set | [touch /root/test] |
| [touch] | [/root/test] | [mkdir] | Not set | [mkdir] |
| [touch] | [/root/test] | Not set | [/opt/test] | [touch /opt/test] |
| [touch] | [/root/test] | [mkdir] | [/opt/test] | [mkdir /opt/test] |

Figure 3 Command
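The override rules in Table 2 can be expressed as a short function. This is a sketch of the rules as the table states them, not IEF source code; the function name and parameter names are hypothetical.

```python
from typing import List, Optional, Sequence


def resolve_command(entrypoint: Sequence[str],
                    cmd: Sequence[str],
                    command: Optional[Sequence[str]] = None,
                    args: Optional[Sequence[str]] = None) -> List[str]:
    """Return the command line that runs, following the rows of Table 2."""
    if command:
        # A configured command replaces ENTRYPOINT, and the image CMD is ignored.
        return list(command) + list(args or [])
    if args:
        # Only arguments are configured: keep ENTRYPOINT, replace CMD.
        return list(entrypoint) + list(args)
    # Nothing is configured: use the image defaults.
    return list(entrypoint) + list(cmd)


if __name__ == "__main__":
    image = (["touch"], ["/root/test"])
    print(resolve_command(*image))
    print(resolve_command(*image, command=["mkdir"]))
    print(resolve_command(*image, args=["/opt/test"]))
    print(resolve_command(*image, command=["mkdir"], args=["/opt/test"]))
```

The four calls correspond to the four rows of the table, in order.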

- Command

Enter an executable command, for example, /run/start.

If there are multiple commands, separate them with spaces. If the command contains a space, enclose the command in quotation marks ("").

In the case of multiple commands, you are advised to run /bin/sh or other shell commands. Other commands are used as arguments.

- Arguments

Enter the argument that controls the container running command, for example, --port=8080.

If there are multiple arguments, separate them with line breaks.
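To preview how an entry in these two fields might be split, the sketch below approximates the rules described above: commands are separated by spaces with quotation marks protecting embedded spaces, and arguments are separated by line breaks. It uses Python's shell-style splitting as a stand-in; the console's exact parsing is not documented here, so treat the result as an assumption. The function names are hypothetical.

```python
import shlex
from typing import List


def split_command(text: str) -> List[str]:
    """Split a command field on spaces, keeping quoted parts together."""
    return shlex.split(text)


def split_arguments(text: str) -> List[str]:
    """Split an arguments field on line breaks, skipping empty lines."""
    return [line.strip() for line in text.splitlines() if line.strip()]


if __name__ == "__main__":
    print(split_command('/bin/sh -c "echo hello"'))
    print(split_arguments("--port=8080\n--debug\n"))
```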

- Security Options

- You can enable Privileged Mode to grant root permissions to the container for accessing host devices (such as GPUs and FPGAs).

- RunAsUser Switch

By default, IEF runs the container as the user defined during image building.

You can specify a user to run the container by turning this switch on, and entering the user ID (an integer ranging from 0 to 65534) in the text box displayed. If the OS of the image does not contain the specified user ID, the application fails to be started.

- Environment Variables

An environment variable affects the way a running container will behave. Variables can be modified after workload deployment. Currently, environment variables can be manually added, imported from secrets or ConfigMaps, or referenced from hostIP.

- Added manually: Customize a variable name and value.

- Added from Secret: You can customize a variable name. The variable value is referenced from secret configuration data. For details on how to create a secret, see Secrets.

- Added from ConfigMap: You can customize a variable name. The variable value is referenced from ConfigMap configuration data. For details on how to create a ConfigMap, see ConfigMaps.

- Variable reference: The variable value is referenced from hostIP, that is, the IP address of an edge node.

IEF does not encrypt the environment variables you enter. If the environment variables you configure contain sensitive information, encrypt them before entering them and decrypt them when using them.

IEF does not provide any encryption or decryption tools. If you need to configure ciphertext, choose your own encryption and decryption tools.

- Data Storage

You can define a local volume and mount the local storage directory of the edge node to the container for persistent data storage.

Currently, the following four types of local volumes are supported:

- hostPath: used for mounting a host directory to the container. hostPath is a persistent volume. After an application is deleted, the data in hostPath still exists in the local disk directory of the edge node. If the application is re-created later, previously written data can still be read after the directory is mounted.

You can mount the application log directory to the /var/IEF/app/log/{appName} directory of the host. In the directory name, {appName} indicates the application name. The edge node will upload the .log and .trace files in the /var/IEF/app/log/{appName} directory to AOM.

The mount directory is the log path of the application in the container. For example, the default log path of the Nginx application is /var/log/nginx. The permission must be set to Read/Write.

Figure 4 Log volume mounting

- emptyDir: a simple empty directory used for storing transient data. It can be created in hard disks or memory. The application can read files from and write files into the directory. emptyDir has the same lifecycle as the application. If the application is deleted, the data in emptyDir is deleted along with it.

- configMap: a type of resource that stores configuration details required by the application. For details on how to create a ConfigMap, see ConfigMaps.

- secret: a type of resource that stores sensitive data, such as authentication, certificate, and key details. For details on how to create a secret, see Secrets.

- The container path cannot be a system directory, such as / or /var/run. Otherwise, an exception occurs. You are advised to mount the volume to an empty directory in the container. If the directory is not empty, ensure that it does not contain any files that affect container startup. Otherwise, the files will be replaced and the container cannot start properly. As a result, the application creation will fail.

- If a high-risk host directory is mounted to the container, you are advised to use an account with minimum permissions to start the container. Otherwise, high-risk files on the host machine may be damaged.
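For the log volume described under hostPath, the host directory follows the pattern /var/IEF/app/log/{appName}, and only .log and .trace files in it are uploaded to AOM. The sketch below builds that path and checks file suffixes; the function names are hypothetical and the validation of the application name is an added assumption.

```python
from pathlib import PurePosixPath

# Host directory root and uploaded suffixes as stated in the hostPath description.
LOG_ROOT = PurePosixPath("/var/IEF/app/log")
UPLOADED_SUFFIXES = {".log", ".trace"}


def host_log_dir(app_name: str) -> str:
    """Return the host directory to use for the log volume of `app_name`."""
    if not app_name or "/" in app_name:
        raise ValueError("application name must be a single path component")
    return str(LOG_ROOT / app_name)


def is_uploaded(file_name: str) -> bool:
    """Return True if a file with this name would be uploaded to AOM."""
    return PurePosixPath(file_name).suffix in UPLOADED_SUFFIXES


if __name__ == "__main__":
    # Nginx writes its logs to /var/log/nginx, the mount directory in the container.
    print(host_log_dir("nginx"), "<- /var/log/nginx")
    for name in ("access.log", "app.trace", "core.dump"):
        print(name, is_uploaded(name))
```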

- Health Check

Health check regularly checks the status of containers or workloads.

- Liveness Probe: The system executes the probe to check if a container is still alive, and restarts the instance if the probe fails. Currently, the system probes a container by HTTP request or command and determines whether the container is alive based on the response from the container.

- Readiness Probe: The system invokes the probe to determine whether the instance is ready. If the instance is not ready, the system does not forward requests to the instance.

For details, see Health Check Configuration.
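The two probe methods mentioned above, HTTP request and command, can be tried locally before you configure them. The sketch below is not the IEF implementation: the success criteria (a 2xx or 3xx HTTP status, or exit code 0 for a command) are assumptions borrowed from common container orchestration practice, and the function names and timeouts are hypothetical.

```python
import http.client
import subprocess
import sys
import urllib.error
import urllib.request
from typing import Sequence


def http_probe(url: str, timeout: float = 1.0) -> bool:
    """Return True if the endpoint answers with a 2xx or 3xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Refused connections, timeouts, and 4xx/5xx answers count as failures.
        return False


def command_probe(argv: Sequence[str], timeout: float = 5.0) -> bool:
    """Return True if the command exits with code 0 within the timeout."""
    try:
        completed = subprocess.run(list(argv), timeout=timeout,
                                   stdout=subprocess.DEVNULL,
                                   stderr=subprocess.DEVNULL)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return completed.returncode == 0


if __name__ == "__main__":
    # A trivial command that always succeeds, used only to exercise the probe.
    print(command_probe([sys.executable, "-c", "raise SystemExit(0)"]))
```

With a liveness probe, a False result would lead to a restart of the instance; with a readiness probe, it would stop requests from being forwarded to the instance.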

- Click Next.

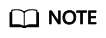

Select a deployment object. Currently, two deployment objects are supported.

- Manually specify

Figure 5 Specifying an edge node

- Automatically schedule

The containerized application will be automatically scheduled in the edge node group based on the resource usage of the nodes. You can also set a restart policy to determine whether the application instance is re-scheduled onto other available nodes in the edge node group when the edge node where the application instance originally runs is unavailable.

If more than 55% of the nodes in a node group are unavailable, automatic migration will stop.
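The 55% rule can be illustrated with integer arithmetic. This is a sketch of the stated threshold only; the function name is hypothetical, and how IEF counts unavailable nodes is not described here.

```python
# Threshold from the rule above: migration stops when more than 55% are unavailable.
MAX_UNAVAILABLE_PERCENT = 55


def migration_continues(total_nodes: int, unavailable_nodes: int) -> bool:
    """Return True while at most 55% of the nodes in the group are unavailable."""
    if total_nodes <= 0:
        raise ValueError("total_nodes must be positive")
    if not 0 <= unavailable_nodes <= total_nodes:
        raise ValueError("unavailable_nodes must be between 0 and total_nodes")
    # Integer comparison avoids floating-point rounding at the boundary.
    return unavailable_nodes * 100 <= MAX_UNAVAILABLE_PERCENT * total_nodes


if __name__ == "__main__":
    for down in (5, 11, 12):
        print(f"{down}/20 unavailable ->", migration_continues(20, down))
```

In a group of 20 nodes, 11 unavailable nodes is exactly 55%, so migration continues; 12 unavailable nodes exceeds the threshold.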

You can also configure scheduling policies. For details, see Affinity and Anti-Affinity Scheduling.

Figure 6 Automatic scheduling

Time Window: indicates how long an application instance stays bound to a node that has the specified taint. If this parameter is not configured, the default value is 300. During the fault time window, nodes can still be scheduled.

You can also configure the following advanced settings for the container:

- Restart Policy

- Always restart: The system restarts the container regardless of whether it exited normally or unexpectedly.

This policy is used for a node group.

- Restart upon failure: The system restarts the container only if it exited unexpectedly.

- Do not restart: The system does not restart the container regardless of whether it exited normally or unexpectedly.

You are allowed to upgrade a containerized application and modify its instance quantity and access configuration only after you select Always restart.
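The three restart policies and the note about upgrades can be summarized as a decision function. This is a sketch for illustration; the enum and function names are hypothetical, and treating a non-zero exit code as an unexpected exit is an assumption.

```python
from enum import Enum


class RestartPolicy(Enum):
    ALWAYS = "Always restart"
    ON_FAILURE = "Restart upon failure"
    NEVER = "Do not restart"


def should_restart(policy: RestartPolicy, exit_code: int) -> bool:
    """Decide whether a container that exited with `exit_code` is restarted."""
    if policy is RestartPolicy.ALWAYS:
        return True
    if policy is RestartPolicy.ON_FAILURE:
        # Assumption: a non-zero exit code means the container exited unexpectedly.
        return exit_code != 0
    return False


def can_upgrade(policy: RestartPolicy) -> bool:
    """Upgrades and instance or access changes require Always restart."""
    return policy is RestartPolicy.ALWAYS


if __name__ == "__main__":
    for policy in RestartPolicy:
        print(policy.value, should_restart(policy, 0), should_restart(policy, 1))
```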

- Host PID

Enabling this function allows containers to share the PID namespace with the host where the edge node resides. In this way, you can perform container operations on the edge node, for example, starting and stopping a container. Similarly, you can perform operations related to the edge node in containers, for example, starting and stopping a process of the edge node.

This function can only be enabled on edge nodes running software v2.8.0 or later.

- Click Next.

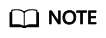

Container access supports two network modes: bridged network and host network.

If the ports of containers deployed on the same edge node conflict, the containers will fail to be started.

- Bridged network

- The container uses an independent virtual network. Configure mappings between container ports and host ports to enable external communications. After port mapping is configured, traffic destined for a host port is forwarded to the mapped container port. For example, if container port 80 is mapped to host port 8080, the traffic destined for host port 8080 will be directed to container port 80.

- You can select a host NIC to enable the port bound to this NIC to communicate with the container port. Note that port mapping does not support NICs with IPv6 addresses.

- The host port in the port mapping can be specified or automatically assigned. Automatically assigning the host port prevents container startup failures caused by port conflicts of multiple instances.

For automatic port assignment, enter a proper port range to avoid port conflicts.
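The idea behind automatic host port assignment can be sketched as picking the first free port in the configured range. How IEF actually selects ports is not described here, so the strategy, the function name, and the sample port numbers are assumptions for illustration.

```python
from typing import Dict, Iterable, Set


def assign_host_port(port_range: Iterable[int], used_ports: Set[int]) -> int:
    """Return the first port in `port_range` that is not already in use."""
    for port in port_range:
        if port not in used_ports:
            return port
    raise RuntimeError("no free host port in the configured range")


if __name__ == "__main__":
    used: Set[int] = {8080}          # host ports already taken on the edge node
    mapping: Dict[int, int] = {}     # host port -> container port
    # Two instances of the same application both expose container port 80.
    for _ in range(2):
        host_port = assign_host_port(range(8080, 8090), used)
        used.add(host_port)
        mapping[host_port] = 80
    print(mapping)
```

Because each instance receives a different host port, two instances on the same edge node do not conflict even though both use container port 80.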

- Host network

The network of the host (edge node) is used. To be specific, the container and the host use the same IP address, and network isolation is not required between them.

- Service

Click Add Service, enter a Service name and access port, and set Container Port to the one set in port mapping. The protocol can be HTTP or TCP.

Figure 7 Adding a Service

If Bridged network is selected for Network, Add Service is not available before you specify ports in Port Mapping.

A Service defines a more refined access mode for applications. You can also create a Service after an application is created. For details, see Creating a Service.

- Click Next. Confirm the specifications of the containerized application and click Create.

Querying Application O&M Information

After an application is deployed, you can view the CPU and memory information of the application on the IEF console. You can also go to the Container Monitoring page on the AOM console to view additional metrics, and add or view alarms in Alarm Center.

- Log in to the IEF console, and click Switch Instance on the Dashboard page to select a platinum service instance.

- In the navigation pane, choose Edge Applications > Containerized Applications. Then, click an application name.

- Click the Monitoring tab to view the monitoring details for the application.

Figure 8 Application monitoring details

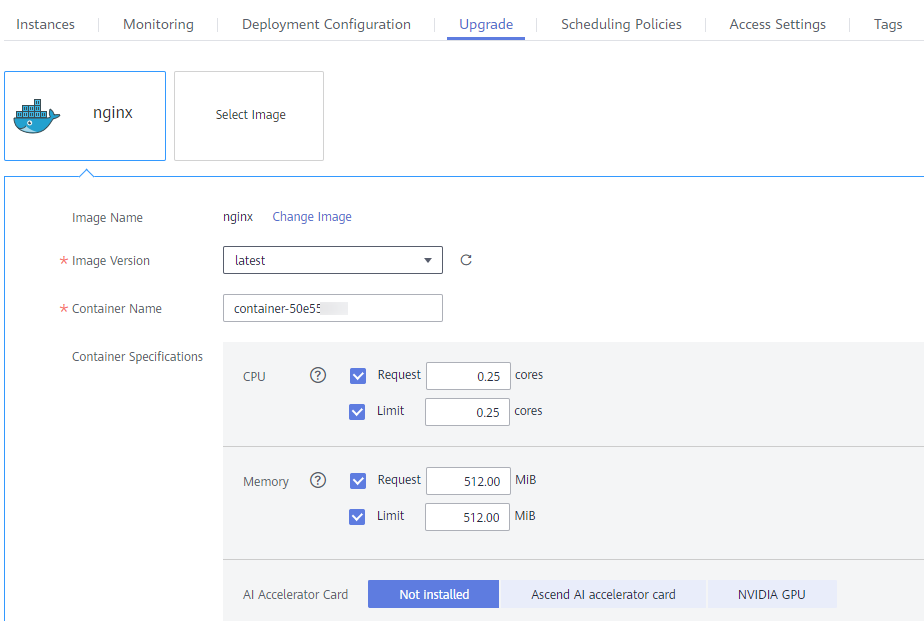

Upgrading an Application

After an application is deployed, you can upgrade it. Rolling upgrades are used. That is, a new application instance is created first, and then the old application instance is deleted.
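The create-then-delete order of a rolling upgrade can be simulated as follows. This is a sketch of the ordering only; replacing one instance at a time, the version labels, and the function name are assumptions for illustration.

```python
from typing import List, Tuple


def rolling_upgrade(old_versions: List[str],
                    new_version: str) -> Tuple[List[str], List[str], int]:
    """Replace instances one by one, creating the new one before deleting the old.

    Returns the event log, the final instance list, and the peak instance count.
    """
    running = list(old_versions)
    events: List[str] = []
    peak = len(running)
    for old in list(old_versions):
        running.append(new_version)          # create the new instance first
        events.append(f"create {new_version}")
        peak = max(peak, len(running))
        running.remove(old)                  # then delete one old instance
        events.append(f"delete {old}")
    return events, running, peak


if __name__ == "__main__":
    events, final, peak = rolling_upgrade(["v1", "v1"], "v2")
    print(events)
    print(final, "peak instances:", peak)
```

Because the new instance is created before the old one is deleted, the edge node briefly runs one extra instance and needs the resources for it.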

- Log in to the IEF console, and click Switch Instance on the Dashboard page to select a platinum service instance.

- In the navigation pane, choose Edge Applications > Containerized Applications. Then, click an application name.

- Click the Upgrade tab and modify the container configurations. The parameter settings are the same as those in step 4.

Figure 9 Upgrading an application

- Click Submit.

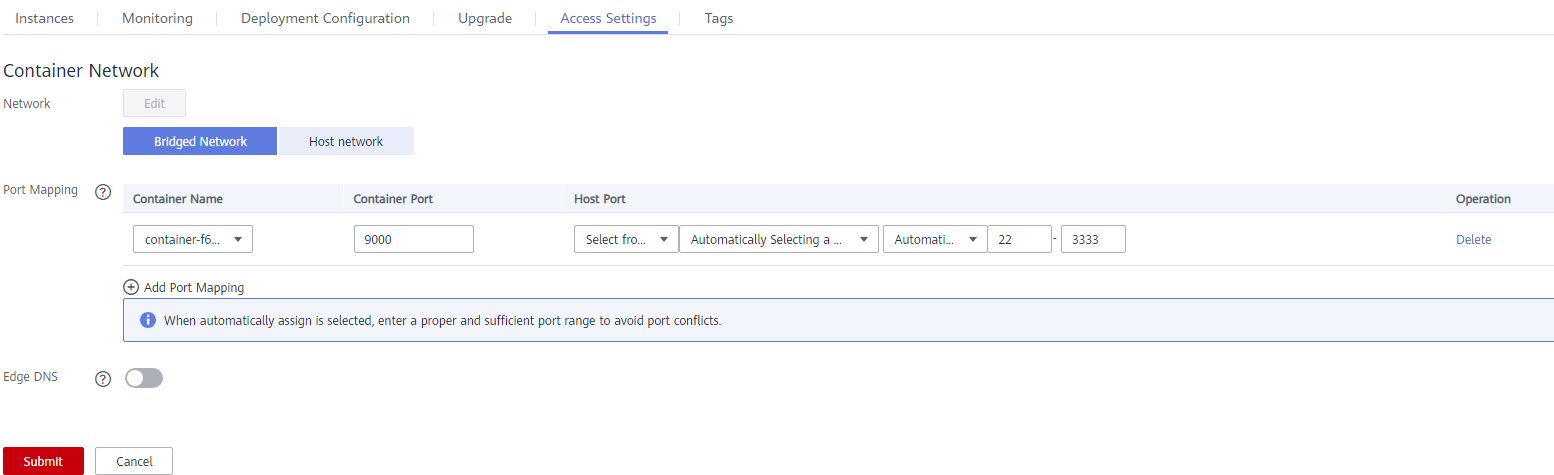

Modifying Access Settings

After an application is deployed, you can modify the access settings of the application.

- Log in to the IEF console, and click Switch Instance on the Dashboard page to select a platinum service instance.

- In the navigation pane, choose Edge Applications > Containerized Applications. Then, click an application name.

- Click the Access Settings tab and modify the configurations. The parameter settings are the same as those in step 6.

Figure 10 Modifying access settings

- Click Submit.
