AI Inference Framework Add-on

Introduction

AI Inference Framework is a cloud native add-on for full lifecycle management of AI models. It enables you to specify custom AI model registration, deployment, and scheduling using declarative APIs, ensuring efficient execution of inference tasks.

Prerequisites

- You have created a CCE standard or Turbo cluster of v1.28 or later, and at least one node in the cluster has an available Snt9B NPU.

- You have installed the LeaderWorkerSet add-on. For details, see LeaderWorkerSet Add-on.

- You have installed the CCE AI Suite (Ascend NPU) and Volcano add-ons. For details, see CCE AI Suite (Ascend NPU) and Volcano Scheduler.

- You have purchased a DDS instance or deployed a MongoDB database in the cluster to store data generated by inference instances.

- You have purchased an OBS bucket before model deployment. For details, see Creating an OBS Bucket.

Notes and Constraints

- This add-on is being rolled out gradually. To view the regions where it is available, see the console.

- This add-on is in the open beta test (OBT) phase. You can try the latest add-on features, but the stability of this add-on version has not been fully verified, and the CCE SLA does not apply to this version.

Installing the Add-on

- Log in to the CCE console and click the cluster name to access the cluster console.

- In the navigation pane, choose Add-ons. On the displayed page, locate AI Inference Framework and click Install.

- On the Install Add-on page, configure the specifications.

Table 1 Add-on specifications

| Parameter | Description |
|---|---|
| Version | Select a version as needed. |
| Add-on Specifications | Currently, only the default specifications are supported. |
| mongodbUrl | Address for accessing the DDS instance or the MongoDB database in the cluster. For details, see Prerequisites. |
| enableLocalStorage | Whether to use the database in the cluster. true: The MongoDB database in the cluster is used. false: The DDS instance is used. |

- Click Install in the lower right corner. If the status is Running, the add-on has been installed.

Components

| Component | Description | Resource Type |
|---|---|---|
| frontend | Provides a unified API gateway for forwarding user requests. When you create this component, the AI Inference Framework add-on automatically creates a ClusterIP Service named frontend-service to provide stable network access. | Deployment |
| model-job-executor | Manages full lifecycle of AI models, such as model registration, deployment, and deletion. | Deployment |
| chat-job-executor | Executes AI inference jobs and provides low-latency and high-concurrency model inference services. | Deployment |
| task-executor-manager | Implements dynamic scheduling and fault isolation of hardware resources such as GPUs and NPUs based on the current system resource load. | Deployment |

Model Templates

The AI Inference Framework add-on provides preset templates for mainstream AI models. You can call the templates using declarative APIs to quickly deploy models without writing complex configuration files by hand. The templates contain the parameters, resource requirements, and hardware configurations of common models, which lowers the barrier to model deployment.

There are preset templates for the mainstream models below. You can deploy and use related models by referring to Model Deployment.

| Model Type | NPU Resource Type |
|---|---|
| DeepSeek-R1-Distill-Qwen-7B | huawei.com/ascend-1980 |
| DeepSeek-R1-Distill-Llama-8B | huawei.com/ascend-1980 |
| DeepSeek-R1-Distill-Qwen-14B | huawei.com/ascend-1980 |
| DeepSeek-R1-Distill-Qwen-32B | huawei.com/ascend-1980 |
| DeepSeek-R1-Distill-Llama-70B | huawei.com/ascend-1980 |
| QwQ-32B | huawei.com/ascend-1980 |
| Qwen2.5-Coder-32B-Instruct | huawei.com/ascend-1980 |
| Qwen2.5-32B-Instruct | huawei.com/ascend-1980 |
| Qwen2.5-72B-Instruct | huawei.com/ascend-1980 |

The following is an example YAML for a preset template. The template is provided and applied by the AI Inference Framework add-on, so you do not need to perform any operations on it:

    apiVersion: leaderworkerset.x-k8s.io/v1
    kind: LeaderWorkerSet
    metadata:
      namespace: ${namespace}
      name: ${name}
      labels:
        xds/version: ${helm_revision}
    spec:
      replicas: ${replicas}
      leaderWorkerTemplate:
        leaderTemplate:
          metadata:
            labels:
              xds/instanceType: head
              xds/teGroupId: ${te_group_id}
              xds/modelId: ${model_id}
              xds/teGroupType: ${te_group_type}
          spec:
            containers:
              - name: ascend-vllm-arm64
                image: ${leader_image}
                env:
                  - name: HF_HUB_OFFLINE
                    value: "1"
                  - name: INFER_MODE
                    value: DEFAULT
                  - name: USE_MASKFREE_ATTN
                    value: "1"
                  - name: MASKFREE_ATTN_THRESHOLD
                    value: "16384"
                args:
                  [
                    "--model",
                    "deepseek-ai/DeepSeek-R1-Distill-Qwen-7B",
                    "--max-num-seqs",
                    "256",
                    "--max-model-len",
                    "2048",
                    "--max-num-batched-tokens",
                    "4096",
                    "--block-size",
                    "128",
                    "--gpu-memory-utilization",
                    "0.9",
                    "--trust-remote-code",
                    "--served-model-name",
                    "${model_name}"
                  ]
                imagePullPolicy: IfNotPresent
                resources:
                  limits:
                    cpu: "8"
                    memory: 72Gi
                    huawei.com/ascend-1980: "1"
                  requests:
                    cpu: "1"
                    memory: 64Gi
                    huawei.com/ascend-1980: "1"
                ports:
                  - containerPort: 8000
                volumeMounts:
                  - name: models
                    mountPath: /workspace/deepseek-ai/DeepSeek-R1-Distill-Qwen-7B
                    subPath: ${model_path}
                    readOnly: true
                readinessProbe:
                  initialDelaySeconds: 5
                  periodSeconds: 5
                  failureThreshold: 3
                  httpGet:
                    path: /health
                    port: 8000
                livenessProbe:
                  initialDelaySeconds: 180
                  periodSeconds: 5
                  failureThreshold: 3
                  httpGet:
                    path: /health
                    port: 8000
            volumes:
              - name: models
                persistentVolumeClaim:
                  claimName: ${pvc_name}
        size: 1
        workerTemplate:
          spec:
            containers: []
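The `${...}` placeholders in the template are filled in by the add-on. How the add-on renders them is not documented here, so the following is only a minimal sketch of the idea, assuming plain string substitution with Python's standard `string.Template`. The fragment is shortened, and the values passed in are hypothetical.

```python
from string import Template

# Shortened fragment of the preset template; only a few placeholders are kept.
TEMPLATE_FRAGMENT = """\
apiVersion: leaderworkerset.x-k8s.io/v1
kind: LeaderWorkerSet
metadata:
  namespace: ${namespace}
  name: ${name}
spec:
  replicas: ${replicas}
  leaderWorkerTemplate:
    size: 1
"""


def render_template(text: str, values: dict) -> str:
    """Fill ${...} placeholders; raises KeyError if a value is missing."""
    return Template(text).substitute(values)


# Hypothetical values chosen only for illustration.
rendered = render_template(
    TEMPLATE_FRAGMENT,
    {"namespace": "default", "name": "deepseek-r1-distill-qwen-7b", "replicas": 2},
)
print(rendered)
```

Using `substitute` rather than `safe_substitute` makes a missing value fail loudly instead of leaving an unresolved placeholder in the manifest.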

Model Deployment

The AI Inference Framework add-on provides a complete AI model deployment solution. You can use declarative APIs to quickly register models, configure resources, and deploy models. This example uses the DeepSeek-R1-Distill-Qwen-7B model to describe how to deploy an AI model with this add-on, covering the entire process from preparation to deployment verification.

- Log in to an NPU node in the cluster and use kubectl to access the cluster by referring to Accessing a Cluster Using kubectl.

- Create a PVC and store the model weight file in the PVC. The following uses PVCs for object storage volumes as an example. In subsequent steps, model weights will be mounted to the corresponding inference instance through the PVC to improve inference performance.

Two types of PVCs are supported. Their differences are as follows:

- PVCs for object storage volumes: used for long-term storage of model weight files. This type of PVC is low cost but slow to access. When using a PVC of the object storage type (such as OBS), you are advised to mount a PVC of the SFS Turbo type as a cache to accelerate model loading.

- PVCs for SFS Turbo volumes: used for model weight file caching, which provides low-latency access and shortens model loading time.

- Create a PVC for the OBS bucket. For details, see Using an Existing OBS Bucket Through a Static PV.

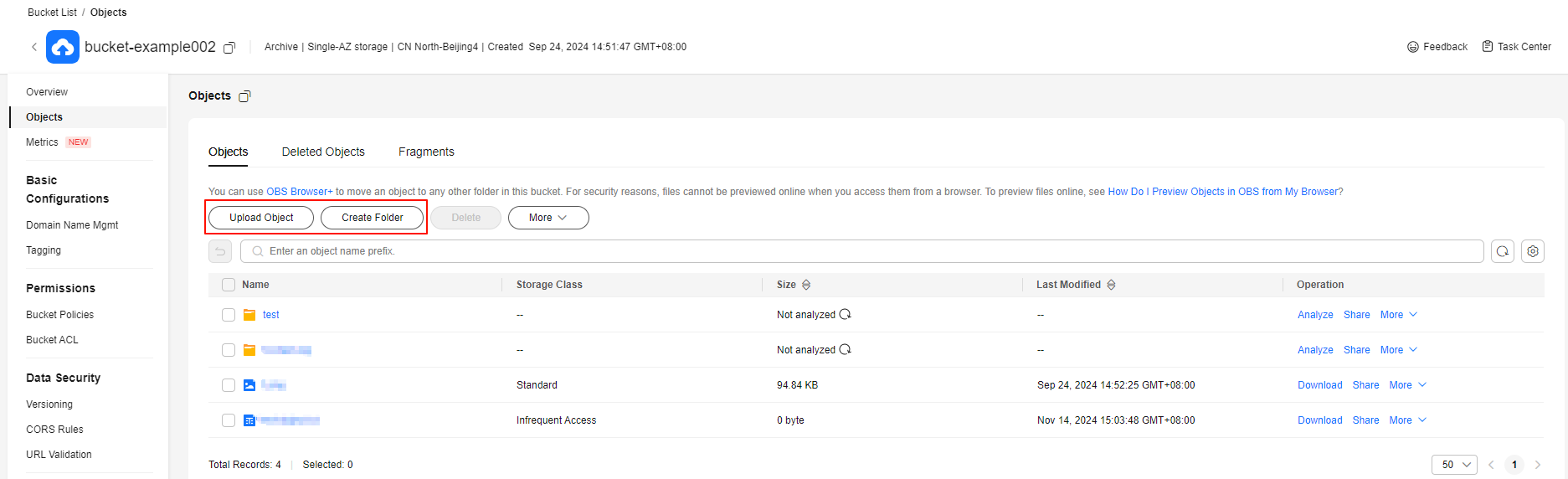

- Log in to the OBS console. In the navigation pane, choose Buckets. On the page that is displayed, click the bucket name to go to the Objects page. Click Upload Object to upload the model weight file to the corresponding path. You will be billed for the requests when you upload an object to the bucket. After the upload is successful, you will be billed for the object storage.

Figure 1 Uploading the model weight file

- Run the following command to register the model metadata through the API. The request defines the basic information, resource requirements, storage configuration, and service level objective (SLO) of the model. After the registration is successful, the AI Inference Framework add-on creates an AI model instance and a Service in the cluster. In the request body, name is the name of the model to be created and can be changed as needed, and the SLO targets are in milliseconds. The other fields are described in Table 4.

    curl --location 'https://{endpoint}/v1/models' \
    --header 'Content-Type: application/json' \
    --data '{
        "name": "DeepSeek-R1-Distill-Qwen-7B",
        "type": "DeepSeek-R1-Distill-Qwen-7B",
        "resource_type": "huawei.com/ascend-1980",
        "version": "1.0.0",
        "max_instance": 4,
        "min_instance": 2,
        "pvc": {
            "name": "release-models",
            "path": "deepseek-ai/DeepSeek-R1-Distill-Qwen-7B"
        },
        "slo": {
            "target_time_to_first_token_p95": 1000,
            "target_time_per_output_token_p95": 20
        },
        "description": "This is model description."
    }'

Table 4 Parameter description

| Parameter | Example Value | Description |
|---|---|---|
| endpoint | frontend-service.kube-system.svc.cluster.local | Access address of frontend-service, which can be accessed using the Service name or the cluster IP address. |
| resource_type | "huawei.com/ascend-1980" | Resource type. Only huawei.com/ascend-1980 is supported. |
| max_instance | 4 | Maximum number of replicas that the model instance can be scaled out to. |
| min_instance | 2 | Minimum number of replicas that the model instance can be scaled in to. |
| pvc | "name": "release-models", "path": "deepseek-ai/DeepSeek-R1-Distill-Qwen-7B" | Name of the PVC created in the earlier PVC step, and the storage path of the model weight file in the PVC. |
| slo | "target_time_to_first_token_p95": 1000, "target_time_per_output_token_p95": 20 | Service performance objectives. target_time_to_first_token_p95 specifies the time (ms) within which 95% of requests receive the first token, and target_time_per_output_token_p95 specifies the 95th-percentile processing time (ms) per output token. When the measured latency exceeds the target value, the system automatically scales the model instance. For details about auto scaling of model instances, see Model Instance Auto Scaling. |
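If you script the registration instead of typing the curl command, the request body can be assembled and checked before it is sent. The sketch below mirrors the fields of the curl example. The function name `build_register_payload` and the local check that `min_instance` does not exceed `max_instance` are assumptions made for illustration, not documented server-side rules.

```python
import json


def build_register_payload(name, model_type, pvc_name, pvc_path,
                           min_instance=2, max_instance=4,
                           ttft_p95_ms=1000, tpot_p95_ms=20,
                           description=""):
    """Build the JSON body for POST /v1/models, mirroring the curl example."""
    # Local sanity check (an assumption, not a documented API rule).
    if not 1 <= min_instance <= max_instance:
        raise ValueError("expected 1 <= min_instance <= max_instance")
    return {
        "name": name,
        "type": model_type,
        "resource_type": "huawei.com/ascend-1980",  # only supported type
        "version": "1.0.0",
        "max_instance": max_instance,
        "min_instance": min_instance,
        "pvc": {"name": pvc_name, "path": pvc_path},
        "slo": {
            "target_time_to_first_token_p95": ttft_p95_ms,    # milliseconds
            "target_time_per_output_token_p95": tpot_p95_ms,  # milliseconds
        },
        "description": description,
    }


payload = build_register_payload(
    name="DeepSeek-R1-Distill-Qwen-7B",
    model_type="DeepSeek-R1-Distill-Qwen-7B",
    pvc_name="release-models",
    pvc_path="deepseek-ai/DeepSeek-R1-Distill-Qwen-7B",
    description="This is model description.",
)
print(json.dumps(payload, indent=2))
```

The printed JSON can be passed to curl with `--data @file.json`, which avoids quoting problems in the shell.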

- Obtain the status of the registered model instance through APIs, including the number of replicas and health.

    # Query the statuses of all models.
    curl --location 'https://{endpoint}/v1/models'
    # Query the status of a specified model.
    curl --location 'https://{endpoint}/v1/models/d97051120764490daf846053e16d4f25'

If the model status is ACTIVE, the model is running normally.

    {"model":{"id":"d97051120764490daf846053e16d4f25","name":"DeepSeek-R1-Distill-Qwen-7B","type":"DeepSeek-R1-Distill-Qwen-7B","resource_type":"huawei.com/ascend-1980","max_instance":2,"min_instance":1,"pvc":{"name":"release-models","path":"deepseek-ai/DeepSeek-R1-Distill-Qwen-7B"},"slo":{"target_time_to_first_token_p95":1000,"target_time_per_output_token_p95":20},"description":"This is model description.","status":"ACTIVE","status_code":"","status_msg":"","created_at":"2025-04-29T17:10:08.673Z","updated_at":"2025-04-29T18:31:07.164Z"}}

- Send an inference request to the registered model instance.

Before sending an inference request, ensure that the model is ready. If the model is not ready, the request may fail or return an error.
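The readiness check can be automated by polling the single-model query until the status is ACTIVE. The following is a minimal sketch, assuming the response has the shape of the sample response shown earlier in this section. The helper names, the retry count, and the polling interval are hypothetical, and `fetch` stands for any function that returns the response body as text (for example, a wrapper around an HTTP GET).

```python
import json
import time


def is_model_active(response_text: str) -> bool:
    """Return True if a GET /v1/models/{id} response reports status ACTIVE."""
    body = json.loads(response_text)
    return body.get("model", {}).get("status") == "ACTIVE"


def wait_until_active(fetch, attempts=30, interval_s=10.0, sleep=time.sleep):
    """Poll fetch() until the model is ACTIVE or the attempts run out."""
    for attempt in range(attempts):
        if is_model_active(fetch()):
            return True
        if attempt < attempts - 1:
            sleep(interval_s)  # wait before the next poll
    return False


# Trimmed copy of the sample response, used here instead of a live request.
sample = '{"model": {"id": "d97051120764490daf846053e16d4f25", "status": "ACTIVE"}}'
print(is_model_active(sample))
```

Any status other than ACTIVE is treated as not ready, because the other status values are not listed in this document.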

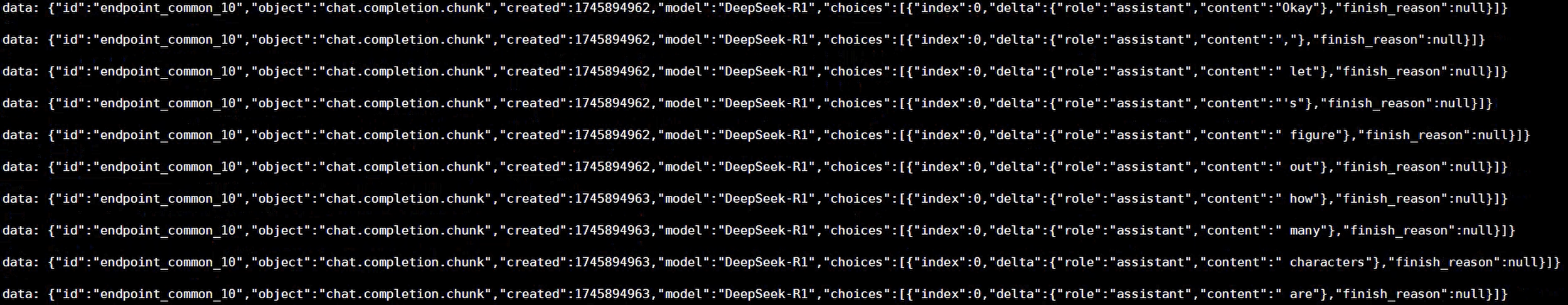

    curl --location 'https://{endpoint}/v1/chat/completions' \
    --header 'Content-Type: application/json' \
    --data '{
        "model": "DeepSeek-R1",
        "messages": [
            {
                "role": "user",
                "content": "How many letters r are there in strawberry?"
            }
        ],
        "stream": true
    }'

Table 5 Parameter description

| Parameter | Example Value | Description |
|---|---|---|
| messages | "role": "user", "content": "How many letters r are there in strawberry?" | Conversation messages. Each message contains a role and the content (such as a question) that the model responds to. |
| stream | true | Whether to enable the stream output mode. true: The stream output mode is enabled, and generated tokens are returned in real time. false: The stream output mode is disabled, and all tokens are returned together after generation is complete. |

Information similar to the following is displayed.
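The request body and the handling of the stream output mode can be sketched in Python as follows. The stream format is an assumption: this document does not show it, and the sketch assumes the frontend forwards the OpenAI-style server-sent events that vLLM's OpenAI-compatible server emits (`data: {...}` lines with `choices[].delta.content`, ended by `data: [DONE]`). The function names are hypothetical.

```python
import json


def build_chat_request(model: str, question: str, stream: bool = True) -> dict:
    """Build the JSON body for POST /v1/chat/completions."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "stream": stream,
    }


def iter_stream_text(lines):
    """Yield text fragments from SSE lines (assumed OpenAI-style format)."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and other fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


body = build_chat_request("DeepSeek-R1", "How many letters r are there in strawberry?")
print(json.dumps(body))
```

With `stream` set to false, the whole answer would instead arrive in a single JSON response, so no line-by-line parsing is needed.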

- If the current model instance cannot meet the requirements, update the configuration of the registered model instance using a PUT request. For example, change the maximum number of replicas of the model instance from 4 to 6.

    curl --location --request PUT 'https://{endpoint}/v1/models/d97051120764490daf846053e16d4f25' \
    --header 'Content-Type: application/json' \
    --data '{
        "max_instance": 6
    }'

Information similar to the following is displayed:

    {"model":{"id":"d97051120764490daf846053e16d4f25","name":"DeepSeek-R1-Distill-Qwen-7B","type":"DeepSeek-R1-Distill-Qwen-7B","resource_type":"huawei.com/ascend-1980","max_instance":6,"min_instance":1,"pvc":{"name":"release-models","path":"deepseek-ai/DeepSeek-R1-Distill-Qwen-7B"},"slo":{"target_time_to_first_token_p95":1000,"target_time_per_output_token_p95":20},"description":"This is model description.","status":"ACTIVE","status_code":"","status_msg":"","created_at":"2025-04-29T17:10:08.673Z","updated_at":"2025-04-29T18:31:07.164Z"}}

- Delete the model instance using a DELETE request to release resources. Deletion is irreversible. You are advised to stop the service before deleting it.

    curl --location --request DELETE 'https://{endpoint}/v1/models/a3c390d311f0455f8e0bc18074404a44'

If no error information is displayed, the deletion is successful.

Now you have completed the full lifecycle management of a model, from registration to deletion.
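The update and delete calls in this procedure can also be prepared from a script. The sketch below only builds the request objects with Python's standard `urllib.request` and does not send them. The helper names and the `example-endpoint` host are hypothetical; replace the host with the address of frontend-service.

```python
import json
import urllib.request


def model_url(endpoint, model_id=None, scheme="https"):
    """Return the /v1/models URL, optionally for one model ID."""
    base = f"{scheme}://{endpoint}/v1/models"
    return f"{base}/{model_id}" if model_id else base


def update_request(endpoint, model_id, **changes):
    """Build a PUT request that updates the given fields of a model."""
    return urllib.request.Request(
        model_url(endpoint, model_id),
        data=json.dumps(changes).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def delete_request(endpoint, model_id):
    """Build a DELETE request for a model. Deletion is irreversible."""
    return urllib.request.Request(model_url(endpoint, model_id), method="DELETE")


put_req = update_request("example-endpoint", "d97051120764490daf846053e16d4f25",
                         max_instance=6)
print(put_req.get_method(), put_req.full_url)
# To actually send a request: urllib.request.urlopen(put_req)
```

Keeping request construction separate from sending makes it easy to review the exact URL and body before an irreversible call such as DELETE.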

Add-on Features

The AI Inference Framework add-on supports model instance auto scaling and Key-Value (KV) Cache.

Implementation of KV Cache in the vLLM framework

The AI Inference Framework add-on implements KV Cache based on vLLM, a high-performance inference framework. The core features are as follows:

- Dynamic blocking
  - Blocking strategy: The input sequence is divided into fixed-size blocks (specified by --block-size parameter). The KV value in each block is independently computed and cached.
  - Advantages:
    - Dynamic blocking flexibly adjusts the block size to balance memory usage and computing efficiency.
    - Dynamic blocking is suitable for input sequences of different lengths (such as dialog history and long text generation).
- Memory optimization
  - GPU memory usage: The --gpu-memory-utilization parameter (default value: 0.9) is used to dynamically allocate GPU memory to maximize the GPU resource usage.
  - Shared cache: Multiple parallel requests can share a KV cache to reduce repeated computing.
- Parallel inference
  - Multiple sequences: The --max-num-seqs parameter defines the maximum number of requests (for example, 256) that can be processed by a single instance in parallel.
  - Sharded deployment: For ultra-large models (such as a 70B-parameter model), distributed inference is implemented through model sharding and KV cache sharding.
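To make the block-based cache concrete, the following sketch works through the arithmetic with the values from the preset template (--block-size 128, --max-model-len 2048, --max-num-seqs 256). The per-token size uses the usual accounting of one key and one value vector per layer and KV head. The model dimensions (28 layers, 4 KV heads, head dimension 128, 2-byte elements) are illustrative assumptions and are not taken from this document.

```python
import math


def blocks_per_sequence(seq_len: int, block_size: int) -> int:
    """Number of fixed-size KV blocks needed to hold seq_len tokens."""
    return math.ceil(seq_len / block_size)


def kv_bytes_per_token(num_layers, num_kv_heads, head_dim, dtype_bytes=2):
    """KV cache bytes for one token; factor 2 = one key + one value tensor."""
    return 2 * num_layers * num_kv_heads * head_dim * dtype_bytes


# Values taken from the preset template arguments.
block_size, max_model_len, max_num_seqs = 128, 2048, 256

per_seq = blocks_per_sequence(max_model_len, block_size)
worst_case_blocks = per_seq * max_num_seqs  # every sequence at full length

# Illustrative model dimensions (assumptions, not from this document).
bytes_per_token = kv_bytes_per_token(num_layers=28, num_kv_heads=4, head_dim=128)
block_mib = bytes_per_token * block_size / 2**20

print(per_seq)            # 16
print(worst_case_blocks)  # 4096
print(block_mib)          # 7.0
```

The worst case is an upper bound: blocks are allocated as sequences grow, so shorter requests occupy fewer blocks, which is what lets many requests of different lengths share the memory reserved by --gpu-memory-utilization.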

Release History

| Add-on Version | Supported Cluster Version | New Feature |
|---|---|---|
| 1.0.0 | v1.28, v1.29, v1.30, v1.31 | CCE standard and Turbo clusters support the AI Inference Framework add-on. |
