Installing and Using xGPU

This section describes how to install and use xGPU.

Constraints

- xGPU is supported only on NVIDIA Tesla T4, V100, and L2.

- The HCE kernel version is 5.10 or later.

- xGPU is supported by CUDA 12.2.0 to 12.8.0.

- NVIDIA driver 535.54.03, 535.216.03, or 570.86.15 has been installed on GPU-accelerated ECSs.

- Docker 18.09.0-300 or later has been installed on GPU-accelerated ECSs.

- Limited by GPU virtualization, compute isolation of xGPUs cannot be used for rendering. You need to use a native scheduling policy for rendering.

- Limited by GPU virtualization, when applications in a container are initialized, the GPU compute monitored by NVIDIA System Management Interface (nvidia-smi) may exceed the upper limit of the GPU compute available for the container.

- When the first CUDA application is created, a percentage of the GPU memory (about 3 MiB on NVIDIA Tesla T4) is requested from each GPU. This GPU memory is considered as the management overhead and is not controlled by the xGPU service.

- xGPU cannot be used on bare metal servers and VMs configured with a passthrough NIC at the same time.

- GPU memory isolation provided by xGPU cannot be used for GPU memory applications through unified virtual memory (UVM) by calling CUDA API cudaMallocManaged(). For more information, see NVIDIA official document. Use other methods to request GPU memory. For example, call cudaMalloc().

- xGPU allows users to disable GPU memory application through UVM. For details, see the description of the uvm_disable parameter.

Installing xGPU

To install xGPU, contact technical support.

You are advised to use xGPU through CCE. For details, see GPU Virtualization.

Using xGPU

The following table describes the environment variables of xGPU. When creating a container, you can specify the environment variables to configure the GPU compute and memory that a container engine can obtain using xGPU.

|

Environment Variable |

Value Type |

Description |

Example |

|---|---|---|---|

|

GPU_IDX |

Integer |

Specifies the GPU that can be used by a container. |

Assigning the first GPU to a container: GPU_IDX=0 |

|

GPU_CONTAINER_MEM |

Integer |

Specifies the size of the GPU memory that will be used by a container, in MiB. |

Allocating 5,120 MiB of the GPU memory to a container: GPU_CONTAINER_MEM=5120 |

|

GPU_CONTAINER_QUOTA_PERCENT |

Integer |

Specifies the percentage of the GPU compute that will be allocated to a container from a GPU. The GPU compute is allocated at a granularity of 1%. It is recommended that the minimum GPU compute be greater than or equal to 4%. |

Allocating 50% of the GPU compute to a container: GPU_CONTAINER_QUOTA_PERCEN=50 |

|

GPU_POLICY |

Integer |

Specifies the GPU compute isolation policy used by the GPU.

For details, see GPU Compute Scheduling Examples. |

Setting the GPU compute isolation policy to fixed scheduling: GPU_POLICY=1 |

|

GPU_CONTAINER_PRIORITY |

Integer |

Specifies the priority of a container.

|

Creating a high-priority container: GPU_CONTAINER_PRIORITY=1 |

NVIDIA Docker can use GPUs. It allows NVIDIA GPUs to be used by containers. The following uses NVIDIA Docker to create two containers as an example to describe how to use xGPUs.

|

Parameter |

Container 1 |

Container 2 |

Description |

|---|---|---|---|

|

GPU_IDX |

0 |

0 |

Two containers use the first GPU. |

|

GPU_CONTAINER_QUOTA_PERCENT |

50 |

30 |

Allocate 50% of GPU compute to container 1 and 30% of GPU compute to container 2. |

|

GPU_CONTAINER_MEM |

5120 |

1024 |

Allocate 5,120 MiB of the GPU memory to container 1 and 1,024 MiB of the GPU memory to container 2. |

|

GPU_POLICY |

1 |

1 |

Set the first GPU to use fixed scheduling. |

|

GPU_CONTAINER_PRIORITY |

1 |

0 |

Give container 1 a high priority and container 2 a low priority. |

Example:

docker run --rm -it --runtime=nvidia -e GPU_CONTAINER_QUOTA_PERCENT=50 -e GPU_CONTAINER_MEM=5120 -e GPU_IDX=0 -e GPU_POLICY=1 -e GPU_CONTAINER_PRIORITY=1 --shm-size 16g -v /mnt/:/mnt nvcr.io/nvidia/tensorrt:19.07-py3 bash docker run --rm -it --runtime=nvidia -e GPU_CONTAINER_QUOTA_PERCENT=30 -e GPU_CONTAINER_MEM=1024 -e GPU_IDX=0 -e GPU_POLICY=1 -e GPU_CONTAINER_PRIORITY=0 --shm-size 16g -v /mnt/:/mnt nvcr.io/nvidia/tensorrt:19.07-py3 bash

Viewing procfs Directory

At runtime, xGPU generates and manages multiple proc file systems (procfs) in the /proc/xgpu directory. The following are operations for you to view xGPU information and configure xGPU settings.

- Run the following command to view the node information:

ls /proc/xgpu/ 0 container version uvm_disable

The following table describes the information about the procfs nodes.

Table 3 Directory description Directory

Read/Write Type

Description

0

Read and write

xGPU generates a directory for each GPU on a GPU-accelerated ECS. Each directory uses a number as its name, for example, 0, 1, and 2. In this example, there is only one GPU, and the directory ID is 0.

container

Read and write

xGPU generates a directory for each container running in the GPU-accelerated ECSs.

version

Read-only

xGPU version.

uvm_disable

Read and write

Controls whether to disable the option that allows all containers to request GPU memory through UVM. The default value is 0.

- 0: This option is enabled.

- 1: This option is disabled.

- Run the following command to view the items in the directory of the first GPU:

ls /proc/xgpu/0/ max_inst meminfo policy quota utilization_line utilization_rate xgpu1 xgpu2

The following table describes the information about the GPU.

Table 4 Directory description Directory

Read/Write Type

Description

max_inst

Read and write

Specifies the maximum number of containers. The value ranges from 1 to 25. This parameter can be modified only when no container is running.

meminfo

Read-only

Specifies the total available GPU memory.

policy

Read and write

Specifies the GPU compute isolation policy used by the GPU. The default value is 1.

- 0: native scheduling. The GPU compute is not isolated.

- 1: fixed scheduling

- 2: average scheduling

- 3: preemptive scheduling

- 4: weight-based preemptive scheduling

- 5: hybrid scheduling

- 6: weighted scheduling

For details, see GPU Compute Scheduling Examples.

quota

Read-only

Specifies the total weight of the GPU compute.

utilization_line

Read and write

Specifies the GPU usage threshold for online containers to suppress offline containers.

If the GPU usage exceeds the value of this parameter, online containers completely suppress offline containers. If the GPU usage does not exceed the value of this parameter, online containers partially suppress offline containers.

utilization_rate

Read only

Specifies the GPU usage.

xgpuIndex

Read and write

Specifies the xgpu subdirectory of the GPU.

In this example, xgpu1 and xgpu2 belong to the first GPU.

- Run the following command to view the directory of a container:

ls /proc/xgpu/container/ 9418 9582

The following table describes the content in the directory.

Table 5 Directory description Directory

Read/Write Type

Description

containerID

Read and write

Specifies the container ID.

xGPU generates an ID for each container during container creation.

- Run the following commands to view the directory of each container:

ls /proc/xgpu/container/9418/ xgpu1 uvm_disable ls /proc/xgpu/container/9582/ xgpu2 uvm_disable

The following table describes the content in the directory.

Table 6 Directory description Directory

Read/Write Type

Description

xgpuIndex

Read and write

Specifies the xgpu subdirectory of the container.

In this example, xgpu1 is the subdirectory of container 9418, and xgpu2 is the subdirectory of container 9582.

uvm_disable

Read and write

Controls whether to disable the option that only allows a container to request GPU memory through UVM. The default value is 0.

- 0: This option is enabled.

- 1: This option is disabled.

- Run the following command to view the xgpuIndex directory:

ls /proc/xgpu/container/9418/xgpu1/ meminfo priority quota

The following table describes the content in the directory.

Table 7 Directory description Directory

Read/Write Type

Description

meminfo

Read-only

Specifies the GPU memory allocated using xGPU and the remaining GPU memory.

For example, 3308MiB/5120MiB, 64% free indicates that 5,120 MiB of memory is allocated and 64% is available.

priority

Read and write

Specifies the priority of a container. The default value is 0.

- 0: low priority

- 1: high priority

This parameter is used in hybrid scenarios where there are both online and offline containers. High-priority containers can preempt the GPU compute of low-priority containers.

quota

Read-only

Specifies the percentage of the GPU compute allocated using xGPU.

For example, 50 indicates that 50% of the GPU compute is allocated using xGPU.

|

Command |

Description |

|---|---|

|

echo 1 > /proc/xgpu/0/policy |

Changes the scheduling policy to weight-based preemptive scheduling for the first GPU. |

|

cat /proc/xgpu/container/$containerID/$xgpuIndex/meminfo |

Queries the memory allocated to a container. |

|

cat /proc/xgpu/container/$containerID/$xgpuIndex/quota |

Queries the GPU compute weight specified for a container. |

|

cat /proc/xgpu/0/quota |

Queries the weight of the remaining GPU compute on the first GPU. |

|

echo 20 > /proc/xgpu/0/max_inst |

Sets the maximum number of containers allowed on the first GPU to 20. |

|

echo 1 > /proc/xgpu/container/$containerID/$xgpuIndex/priority |

Sets the xGPU in a container to high priority. |

|

echo 40 > /proc/xgpu/0/utilization_line |

Sets the suppression threshold of the first GPU to 40%. |

|

echo 1 > /proc/xgpu/container/$containerID/uvm_disable |

Controls whether to disable the option that allows containers to use xGPU to request GPU memory through UVM. |

Upgrading xGPU

You can perform cold upgrades on xGPU.

Uninstalling xGPU

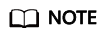

Monitoring Containers Using xgpu-smi

You can use xgpu-smi to view xGPU information, including the container ID, GPU compute usage and allocation, and GPU memory usage and allocation.

The following shows the xgpu-smi monitoring information.

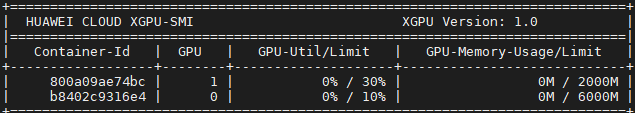

You can run the xgpu-smi -h command to view the help information about the xgpu-smi tool.

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot