Overview

xGPU is a GPU virtualization and sharing service developed by Huawei Cloud. It isolates GPU resources to keep services secure, and it helps reduce costs by improving GPU resource utilization.

Architecture

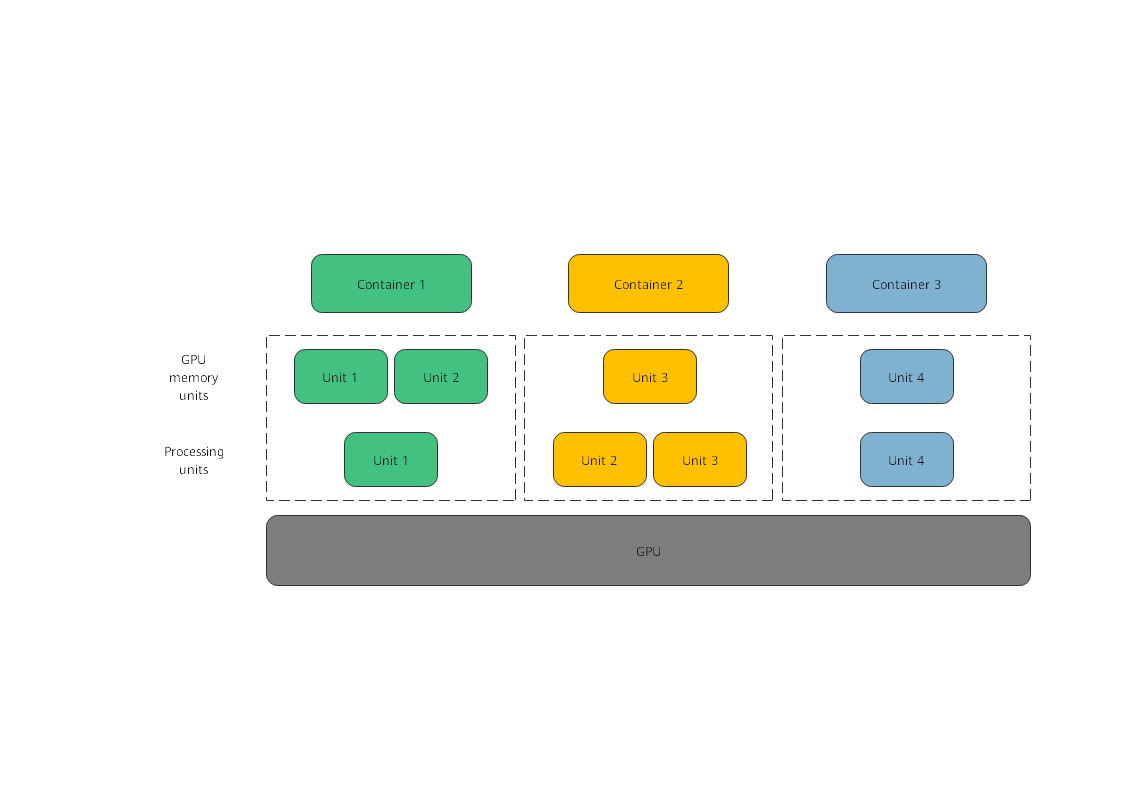

xGPU uses in-house kernel drivers to provide vGPUs for containers. It isolates GPU memory and compute while delivering high performance, so that GPU hardware is fully used for training and inference. You can configure vGPUs in a container by running commands.

Why xGPU?

- Lower cost

As GPU technology develops, a single GPU offers more compute but also costs more. In some scenarios, an AI application does not require an entire GPU. xGPU enables multiple containers to share one GPU while keeping their GPU resources isolated from each other, which improves GPU hardware utilization and reduces resource costs.

- Flexible resource allocation

xGPU allows you to flexibly allocate physical GPU resources based on your service requirements.

- You can allocate resources by GPU memory or compute as required.

Figure 2 GPU resource allocation

- You can isolate either GPU memory or compute, and specify weights to allocate GPU compute. GPU compute is allocated at a granularity of 1%. It is recommended that each allocation be at least 4% of the GPU compute.

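The allocation rules above (1% granularity, a recommended minimum of 4%) can be checked before containers are started. The following is a minimal sketch, not part of xGPU itself: the `VGpuRequest` type, the `check_plan` function, the use of MiB for memory, and the assumption that the shares on one GPU should not add up to more than 100% are all hypothetical and used only for illustration.

```python
from dataclasses import dataclass

# Values taken from the text above.
RECOMMENDED_MIN_PERCENT = 4  # recommended minimum compute share
MAX_PERCENT = 100            # assumption: shares on one GPU total at most 100%


@dataclass
class VGpuRequest:
    """Hypothetical request for a slice of one physical GPU."""
    name: str
    memory_mib: int       # GPU memory to isolate (MiB is an assumed unit)
    compute_percent: int  # compute share in whole percent (1% granularity)


def check_plan(requests, gpu_memory_mib):
    """Return (errors, warnings) for a set of requests sharing one GPU."""
    errors, warnings = [], []
    for r in requests:
        if r.compute_percent < 1 or r.compute_percent > MAX_PERCENT:
            errors.append(f"{r.name}: compute share must be between 1% and 100%")
        elif r.compute_percent < RECOMMENDED_MIN_PERCENT:
            warnings.append(
                f"{r.name}: compute share is below the recommended "
                f"{RECOMMENDED_MIN_PERCENT}%"
            )
        if r.memory_mib <= 0:
            errors.append(f"{r.name}: GPU memory must be positive")

    # Assumed constraint: the whole plan must fit on one physical GPU.
    if sum(r.compute_percent for r in requests) > MAX_PERCENT:
        errors.append("total compute share exceeds 100%")
    if sum(r.memory_mib for r in requests) > gpu_memory_mib:
        errors.append("total GPU memory exceeds the physical GPU memory")
    return errors, warnings


if __name__ == "__main__":
    plan = [
        VGpuRequest("training", memory_mib=8192, compute_percent=60),
        VGpuRequest("inference", memory_mib=4096, compute_percent=3),
    ]
    errors, warnings = check_plan(plan, gpu_memory_mib=16384)
    print("errors:", errors)
    print("warnings:", warnings)
```

In this example the plan fits on a 16 GiB GPU, but the 3% share for the second container triggers a warning because it is below the recommended minimum.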
