Comprehensive Monitoring of DCGM Metrics

O&M personnel need to monitor the GPU devices in large-scale Kubernetes clusters. GPU metrics give them insight into the overall utilization, health status, and workload performance of the entire cluster, which helps them resolve issues quickly, allocate GPU resources more effectively, and improve resource efficiency. Data scientists and AI algorithm engineers can also use these metrics to understand the organization's GPU usage patterns, which supports capacity planning and task scheduling decisions.

NVIDIA provides the Data Center GPU Manager (DCGM) for managing large-scale GPU clusters. The CCE AI Suite (NVIDIA GPU) add-on of version 2.7.40 or later is built on NVIDIA DCGM and provides advanced GPU monitoring functions. DCGM offers a broad range of GPU management and monitoring capabilities, including:

- GPU behavior monitoring

- GPU configuration management

- GPU policy management

- GPU health diagnosis

- Statistics on GPUs and threads

- Configuration and monitoring for NVSwitches

This section describes how to use CCE Cloud Native Cluster Monitoring and DCGM-Exporter to monitor GPUs comprehensively. For details about commonly used metrics, see GPU Metrics Provided by DCGM. For more details, see dcgm-exporter.

Prerequisites

An NVIDIA GPU node is running properly in the cluster.

Notes and Constraints

- Before enabling DCGM-Exporter, ensure the CCE AI Suite (NVIDIA GPU) version is 2.7.40 or later.

- Before collecting DCGM metrics, ensure the CCE Cloud Native Cluster Monitoring version is 3.12.0 or later.
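The version constraints above can also be checked from a script. The following is a minimal sketch, assuming a sort command that supports the -V (version sort) option, as GNU sort does. version_ge is a hypothetical helper, and the installed version is an example value that you would replace with the one shown on the Add-ons page.

```shell
# version_ge A B: succeeds when dot-separated version A is greater than or
# equal to version B. Hypothetical helper; relies on `sort -V` (GNU coreutils).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example value only; replace with the add-on version shown on the Add-ons page.
installed="2.7.41"

if version_ge "$installed" "2.7.40"; then
  echo "CCE AI Suite (NVIDIA GPU) meets the 2.7.40 requirement"
else
  echo "Upgrade CCE AI Suite (NVIDIA GPU) to 2.7.40 or later"
fi
```

The same helper can be reused for the Cloud Native Cluster Monitoring constraint by comparing against 3.12.0. A plain string comparison would not work here, because 3.9.5 sorts after 3.12.0 alphabetically even though it is the older version.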

Step 1: Enable DCGM-Exporter

- Log in to the CCE console and click the cluster name to access the cluster console. In the navigation pane, choose Add-ons. Locate the CCE AI Suite (NVIDIA GPU) add-on on the right and click Install or Edit.

- Enable Use DCGM-Exporter to Observe DCGM Metrics. Then, DCGM-Exporter will be deployed as a DaemonSet on GPU nodes.

- Configure other parameters for the add-on and click Install or Update. For details about parameter settings, see CCE AI Suite (NVIDIA GPU).

Step 2: Collect DCGM Metrics

By default, Prometheus does not collect or report the metrics exposed by DCGM-Exporter. After installing the CCE Cloud Native Cluster Monitoring add-on, you need to go to Settings and manually enable data collection. The procedure depends on which of the following cases applies:

- The target preset policy is disabled: If the CCE Cloud Native Cluster Monitoring add-on is not installed, or it has been installed but its preset policy is not enabled in Settings, install the add-on and enable DCGM-Exporter data collection on the ServiceMonitor Policies tab in Settings.

- The target preset policy is enabled: If CCE Cloud Native Cluster Monitoring has been installed and its preset policy has been enabled in Settings, you need to enable DCGM-Exporter data collection on the Preset Policies tab.

If the CCE Cloud Native Cluster Monitoring add-on is not installed, or it has been installed but its preset policy is not enabled in Settings, perform the following steps to enable data collection:

- Log in to the CCE console and click the cluster name to access the cluster console.

- In the navigation pane, choose Cluster > Add-ons, locate Cloud Native Cluster Monitoring, and click Install. In the upper part of the Install Add-on page, choose 3.12.0 or later for the add-on version. To report the collected GPU data to AOM, enable Report Monitoring Data to AOM and choose the target AOM instance. The GPU data is collected from custom metrics, and uploading such data to AOM incurs fees. For details, see Pricing Details.

For details about other parameters, see Cloud Native Cluster Monitoring.

- Click Install. If the add-on is in the Running state, the installation is successful.

- In the navigation pane, choose Cluster > Settings. Then, click the Monitoring tab. In the Collection Settings area, find ServiceMonitor Policies and click Manage.

- On the Collection Settings page, click the search box, select Name, choose dcgm-exporter from the drop-down list, and enable it.

If you have installed Cloud Native Cluster Monitoring and enabled its preset policy in Settings, the preset ServiceMonitor and PodMonitor will be deleted, preventing ServiceMonitor from collecting DCGM-Exporter data. To collect the DCGM-Exporter data, perform the following steps:

- Log in to the CCE console and click the cluster name to access the cluster console.

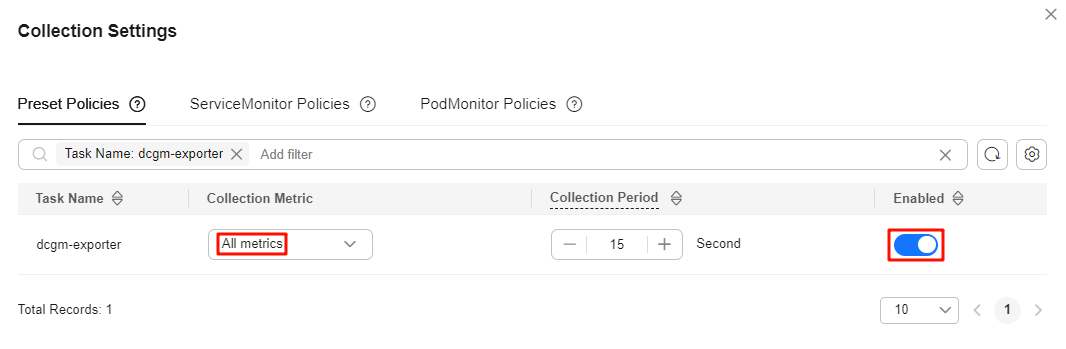

- In the navigation pane, choose Cluster > Settings. Then, click the Monitoring tab. On the Preset Policies tab in the Collection Settings area, click Manage.

- On the Collection Settings page, click the search box, select Name, and choose dcgm-exporter from the drop-down list. In the search result, choose All metrics in the Collection Metric column and enable DCGM-Exporter.

Step 3: View DCGM Metrics on AOM

To view DCGM metrics on AOM, ensure that the function of reporting monitoring data to AOM has been enabled in Cloud Native Cluster Monitoring. The GPU data is collected from custom metrics, and uploading such data to AOM incurs fees. For details, see Pricing Details.

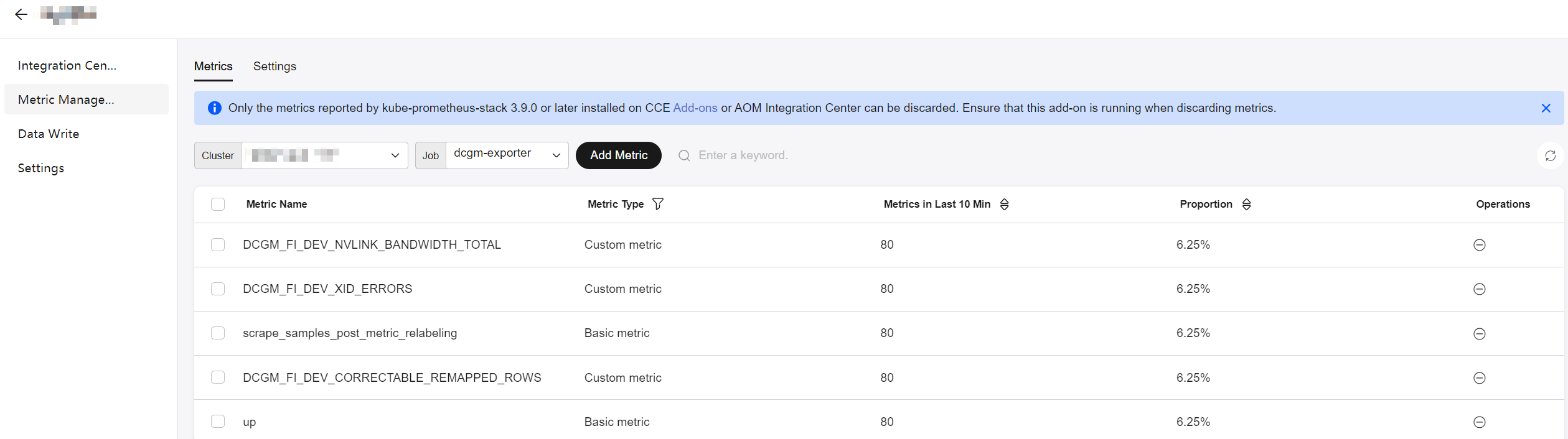

- Go to the AOM console and select the target AOM instance in the instance list.

- On the Metrics tab, choose the target cluster name from the Cluster drop-down list and enter DCGM in the search box to view DCGM metrics.

Step 4: Use Grafana to View DCGM Metrics

To configure the DCGM metric dashboard using Grafana, install Grafana and complete the necessary configurations on the Grafana GUI. Follow the steps below for details:

- In the navigation pane, choose Add-ons. Then, locate Grafana and click Install. On the installation page, enable Interconnect with AOM and Public Access. Set the interconnected AOM instance to the one used by Cloud Native Cluster Monitoring.

- Click Install. If the add-on is in the Running state, the installation is successful. After the installation is complete, click Access to access the Grafana GUI. Enter the username and password when you access the Grafana GUI for the first time. The default username and password are both admin. After logging in, reset the password as prompted.

- In the upper left corner of the page, click

. Then, click

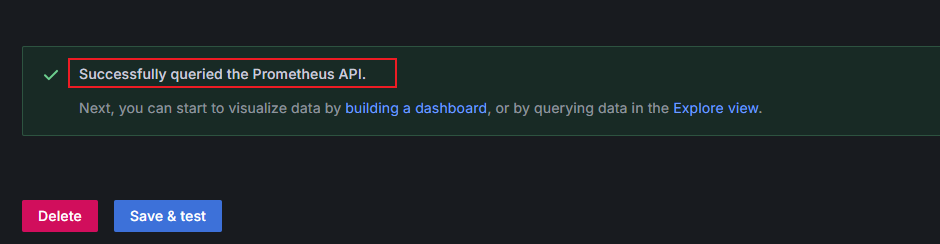

. Then, click  on the left of Connections. Click Data sources to access the Data sources page.

on the left of Connections. Click Data sources to access the Data sources page. - In the data source list, click prometheus-aom. Click Save & test at the bottom of the prometheus-aom data source page to check the data source connectivity. If "Successfully queried the Prometheus API" is displayed, the connectivity test has been passed.

- NVIDIA provides the NVIDIA DCGM Exporter Dashboard to display DCGM metrics. You can go to NVIDIA DCGM Exporter Dashboard and click Download JSON on the right.

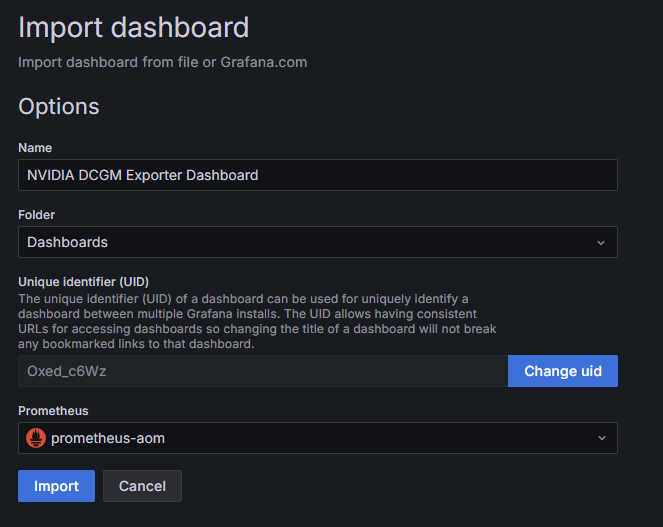

Go back to the Grafana GUI, click the menu icon in the upper left corner of the page to open the menu bar, and click Dashboards. In the upper right corner of the Dashboards page, click New and choose Import from the drop-down list. On the Import dashboard page, upload the downloaded JSON file, choose the prometheus-aom data source from Prometheus, and click Import. For more details about how to import a dashboard to Grafana, see Manage dashboards.

- View the imported dashboard. Click the save icon in the upper right corner to save the dashboard.

Appendix: Troubleshooting DCGM-Exporter Faults

Check the running status.

- On the NVIDIA GPU details page, check whether the target pod is running.

- Check pod logs for the HTTP server listening status.

- Run the curl command in the cluster to access DCGM-Exporter and check whether data can be obtained.

- Check DCGM-Exporter's pod IP address.

kubectl get po -A -owide | grep dcgm

- Check data. In the following command, 10.1.1.15 is the obtained pod IP address:

curl 10.1.1.15:9400/metrics | head
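The two checks above can be wrapped in small filters that read command output from standard input. This is a sketch, not part of DCGM-Exporter or CCE: both function names are hypothetical, the first assumes the column layout printed by kubectl get po -A (STATUS in the fourth column), and the second assumes the endpoint returns the Prometheus text format with metric names that start with DCGM_.

```shell
# Hypothetical helpers; neither is shipped with DCGM-Exporter or CCE.

# dcgm_pods_not_running: read `kubectl get po -A -owide` output on stdin and
# print namespace/name for every line that mentions dcgm and whose STATUS
# column (the fourth column when -A is used) is not "Running".
dcgm_pods_not_running() {
  awk '/dcgm/ && $4 != "Running" { print $1 "/" $2 }'
}

# dcgm_metric_names: read Prometheus text-format output on stdin and print the
# distinct metric names that start with DCGM_, skipping "# HELP" and "# TYPE"
# comment lines.
dcgm_metric_names() {
  grep -v '^#' | grep -o '^DCGM_[A-Za-z0-9_]*' | sort -u
}
```

For example, kubectl get po -A -owide | dcgm_pods_not_running prints nothing when every matching pod is running, and curl -s 10.1.1.15:9400/metrics | dcgm_metric_names lists the metric names that DCGM-Exporter currently exposes. An empty list from the second command suggests that the exporter is reachable but is not returning DCGM data.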
