Migrating Data from Hive to DLI

This section describes how to use the CDM data synchronization function to migrate data from MRS Hive to DLI. Data of other MRS Hadoop components can be bidirectionally synchronized between CDM and DLI.

Prerequisites

- You have created a DLI SQL queue.

When you create a queue, set its Type to For SQL.

- You have created an MRS security cluster that contains the Hive component.

- In this example, the MRS cluster and component versions are as follows:

- Cluster version: MRS 3.1.0

- Hive version: 3.1.0

- Hadoop version: 3.1.1

- In this example, Kerberos authentication is enabled when the MRS cluster is created.

- In this example, the MRS cluster and component versions are as follows:

- You have created a CDM cluster. For details about how to create a cluster, see Creating a CDM Cluster.

- If the destination data source is an on-premises database, you need the Internet or Direct Connect. When using the Internet, ensure that an EIP has been bound to the CDM cluster, the security group of CDM allows outbound traffic from the host where the off-cloud data source is located, the host where the data source is located can access the Internet, and the connection port has been enabled in the firewall rules.

- If the data source is MRS or GaussDB(DWS) on a cloud, the network must meet the following requirements:

i. If the CDM cluster and the cloud service are in different regions, a public network or a dedicated connection is required for enabling communication between the CDM cluster and the cloud service. If the Internet is used for communication, ensure that an EIP has been bound to the CDM cluster, the host where the data source is located can access the Internet, and the port has been enabled in the firewall rules.

ii. If the CDM cluster and the cloud service are in the same region, VPC, subnet, and security group, they can communicate with each other by default. If the CDM cluster and the cloud service are in the same VPC but in different subnets or security groups, you must configure routing rules and security group rules.

For details about how to configure routes, see Configure routes. For details about how to configure security groups, see section Security Group Configuration Examples.

iii. The cloud service instance and the CDM cluster belong to the same enterprise project. If they do not, you can modify the enterprise project of the workspace.

In this example, the VPC, subnet, and security group of the CDM cluster are the same as those of the MRS cluster.

Step 1: Prepare Data

- Create a Hive table in the MRS cluster and insert data in the table.

- Log in to MRS Manager by referring to Accessing FusionInsight Manager.

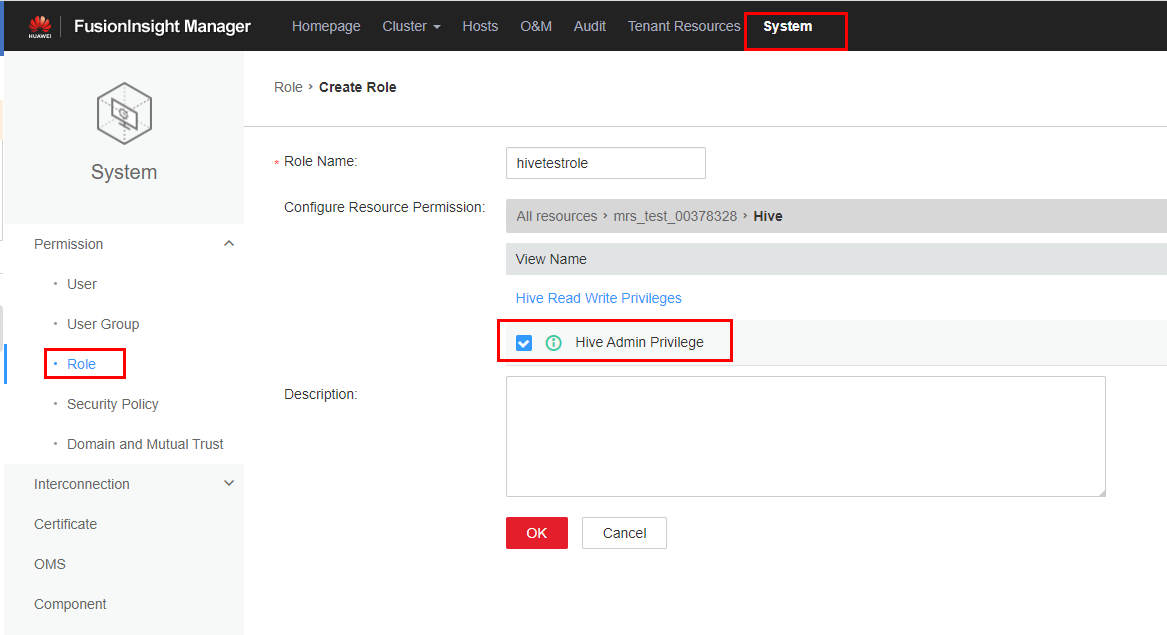

- On MRS Manager, click System in the top navigation pane. On the page displayed, choose Permission > Role from the left navigation pane. On the displayed page, configure the following parameters:

- Role Name: Enter a role name, for example, hivetestrole.

- Configure Resource Permission: Select the MRS cluster name and then Hive. Select Hive Admin Privilege.

Figure 1 Creating a Hive role

For details about how to create a role, see Creating a Role.

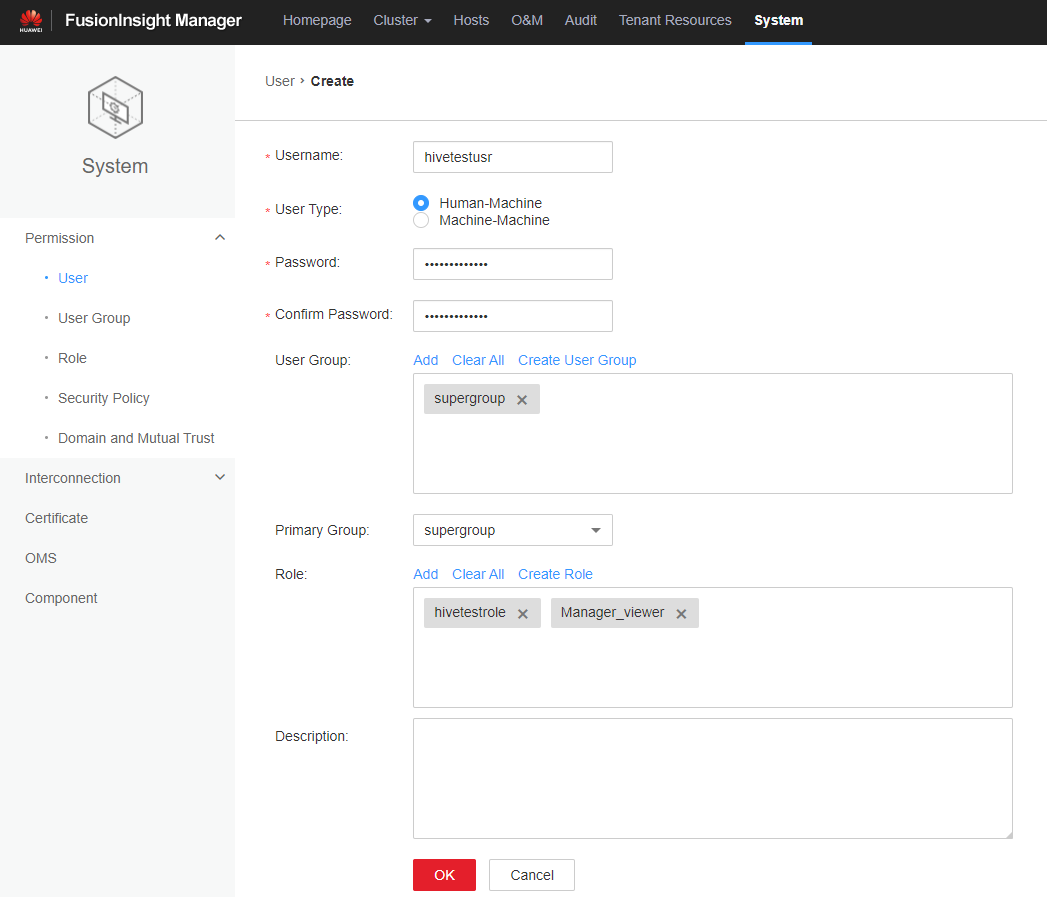

- On the MRS Manager console, click System in the top navigation pane. On the displayed page, choose Permission > User from the left navigation pane. On the displayed page, set the following parameters:

- Username: Enter a username. In this example, enter hivetestusr.

- User Type: Select Human-Machine.

- Password and Confirm Password: Enter the password of the current user and enter it again.

- User Group and Primary Group: Select supergroup.

- Role: Select the role created in 2 and the Manager_viewer role.

Figure 2 Creating a Hive User

- Download and install the Hive client by referring to Installing an MRS Client. For example, the Hive client is installed in the /opt/hiveclient directory on the active MRS node.

- Go to the client installation directory as user root.

For example, run the cd /opt/hiveclient command.

- Run the following command to set environment variables:

- Run the following command to authenticate the user created in 3 as Kerberos authentication has been enabled for the current cluster:

kinit <Username in 3>

Example: kinit hivetestusr

- Run the following command to connect to Hive:

- Create a table and insert data into it.

Run the following statement to create a table:

create table user_info(id string,name string,gender string,age int,addr string);

Run the following statements to insert data into the table:insert into table user_info(id,name,gender,age,addr) values("12005000201", "A", "Male", 19, "City A"); insert into table user_info(id,name,gender,age,addr) values ("12005000202","B","male",20,"City B"); insert into table user_info(id,name,gender,age,addr) values ("12005000202","B","male",20,"City B");

In the preceding example, data is migrated by creating a table and inserting data. To migrate an existing Hive database, run the following commands to obtain Hive database and table information:

- Run the following command on the Hive client to obtain database information:

- Switch to the Hive database from which data needs to be migrated.

- Run the following command to display information about all tables in the database:

- Run the following command to query the creation statement of the Hive table:

The queried table creation statements must be processed to comply with the DLI table creation syntax before being executed.

- Create a database and table on DLI.

- Log in to the DLI management console and click SQL Editor. On the displayed page, set Engine to spark and Queue to the created SQL queue.

Enter the following statement in the editing window to create a database, for example, the migrated DLI database testdb: For details about the syntax for creating a DLI database, see Creating a Database.

create database testdb;

- Create a table in the database.

You need to edit the table creation statement obtained by running show create table hive table name in MRS Hive to ensure the statement complies with the table creation syntax of DLI. For details about the table creation syntax, see Creating a DLI Table Using the DataSource Syntax.

create table user_info(id string,name string,gender string,age int,addr string);

- Log in to the DLI management console and click SQL Editor. On the displayed page, set Engine to spark and Queue to the created SQL queue.

Step 2: Migrate Data

- Create a CDM connection to MRS Hive.

- Create a connection to link CDM to the data source MRS Hive.

- Log in to the CDM console, choose Cluster Management. On the displayed page, locate the created CDM cluster, and click Job Management in the Operation column.

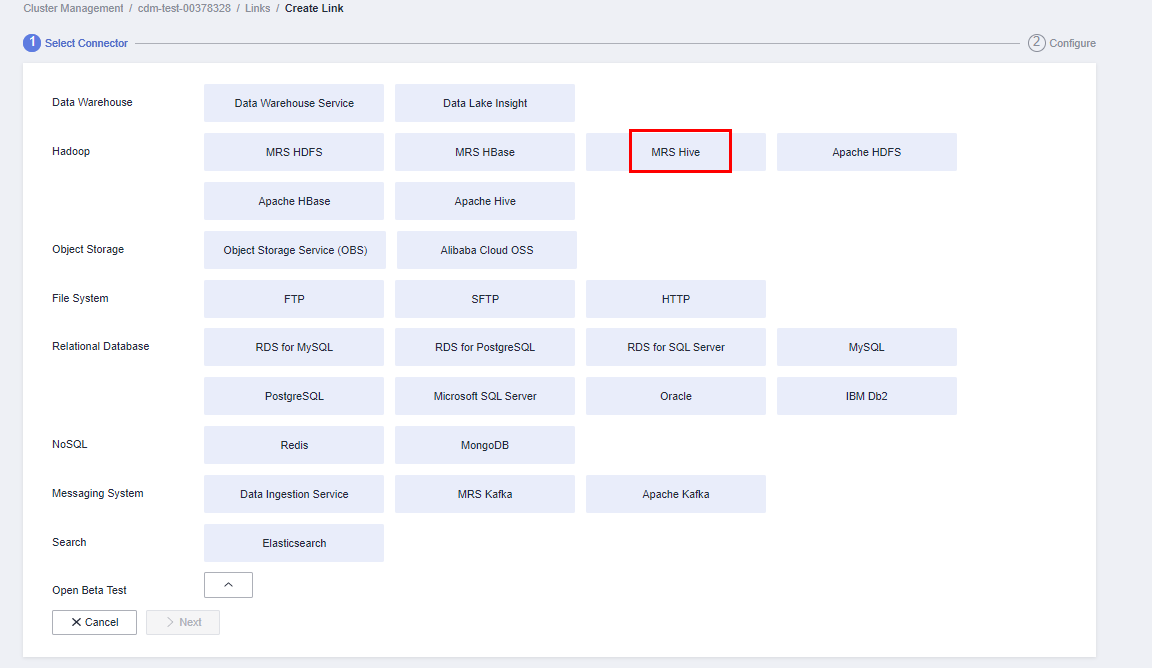

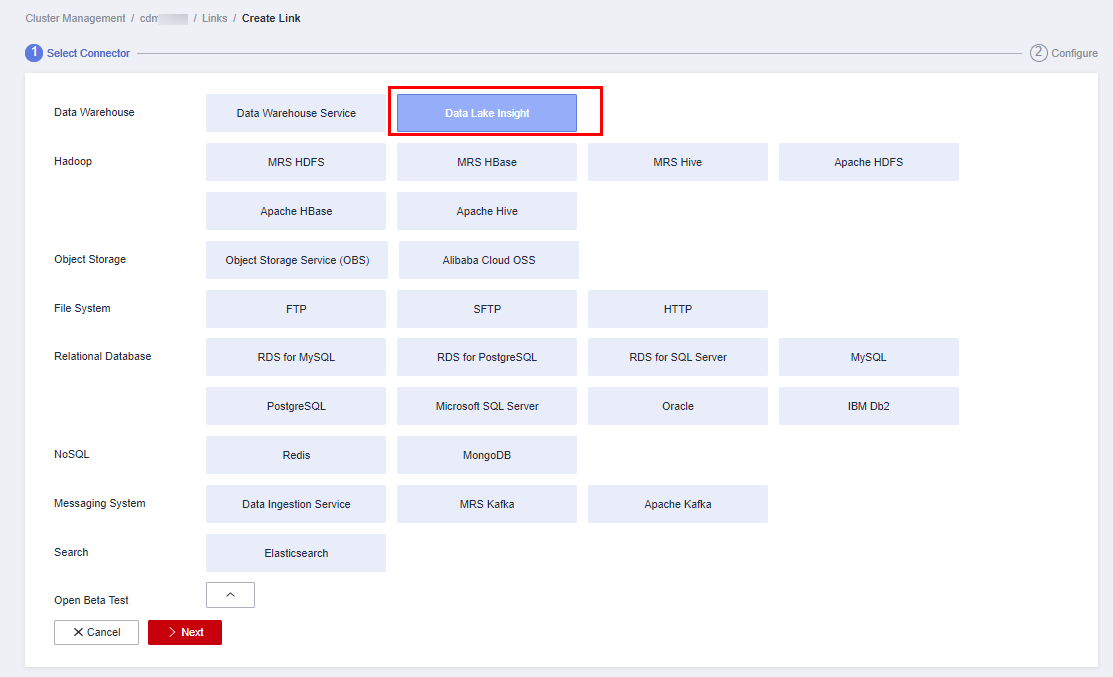

- On the Job Management page, click the Links tab, and click Create Link. On the displayed page, select MRS Hive and click Next.

Figure 3 Selecting the MRS Hive connector

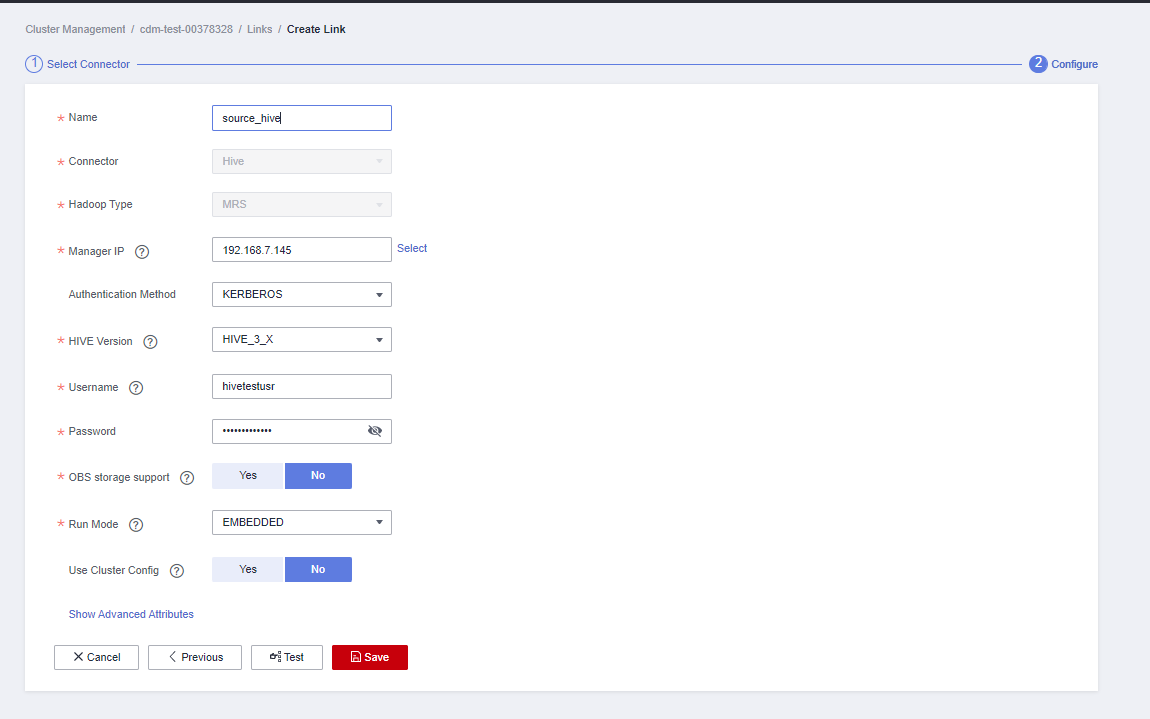

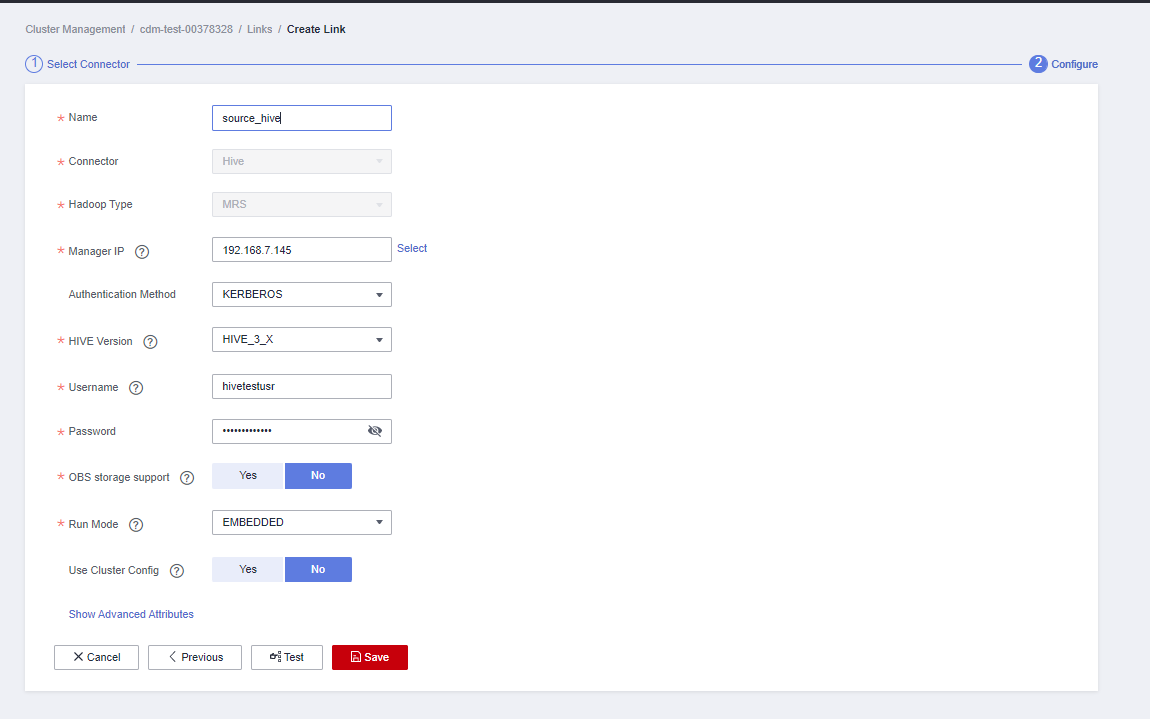

- Configure the connection. The following table describes the required parameters.

Table 1 MRS Hive connection configurations Parameter

Value

Name

Name of the MRS Hive data source, for example, source_hive

Manager IP

Click Select next to the text box and select the MRS Hive cluster. The Manager IP address is automatically specified.

Authentication Method

Set this parameter to KERBEROS if Kerberos authentication is enabled for the MRS cluster. Set this parameter to SIMPLE if the MRS cluster is a common cluster.

In this example, set this parameter to KERBEROS.

Hive Version

Set this parameter to the Hive version you have selected during MRS cluster creation. If the current Hive version is 3.1.0, set this parameter to HIVE_3_X.

Username

Name of the MRS Hive user created on 3

Password

Password of the MRS Hive user

Retain default values for other parameters.Figure 4 Configuring the connection to MRS Hive

- Click Save to complete the configuration.

- Create a connection to link CDM to DLI.

- Log in to the CDM console, choose Cluster Management. On the displayed page, locate the created CDM cluster, and click Job Management in the Operation column.

- On the Job Management page, click the Links tab, and click Create Link. On the displayed page, select Data Lake Insight and click Next.

Figure 5 Selecting the DLI connector

- Configure the connection parameters.

Figure 6 Configuring connection parameters

After the configuration is complete, click Save.

- Create a connection to link CDM to the data source MRS Hive.

- Create a CDM migration job.

- Log in to the CDM console, choose Cluster Management. On the displayed page, locate the created CDM cluster, and click Job Management in the Operation column.

- On the Job Management page, choose the Table/File Migration tab and click Create Job.

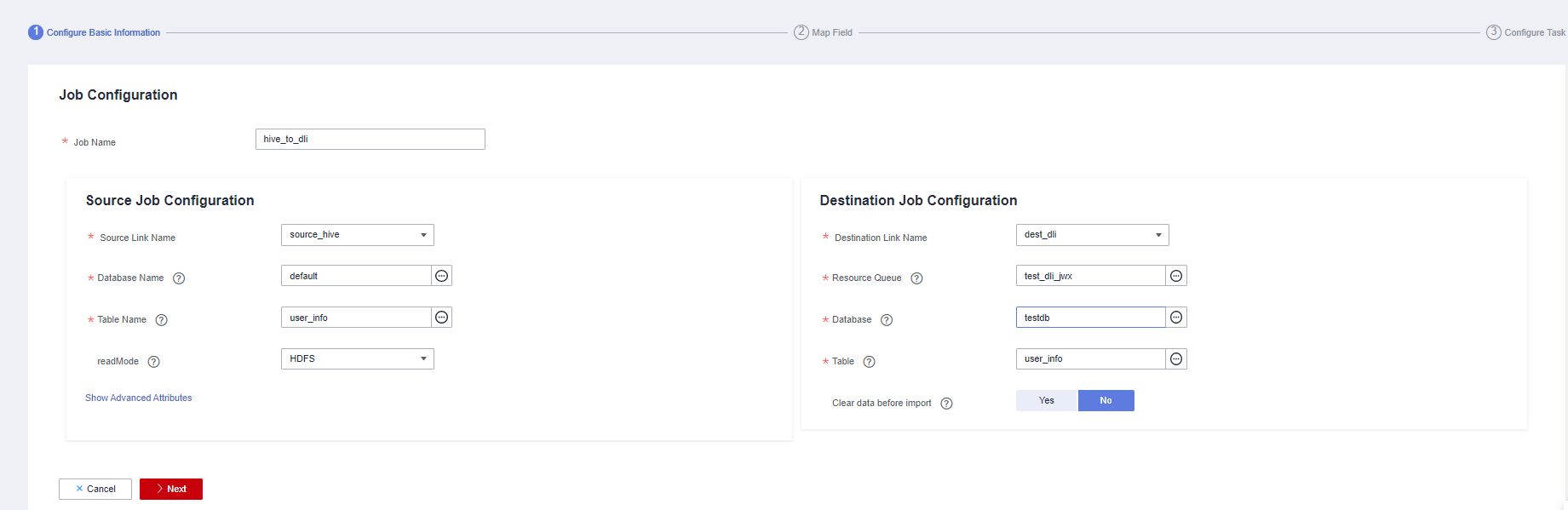

- On the Create Job page, specify job information.

Figure 7 Configuring the CDM job

- Job Name: Name of the data migration job, for example, hive_to_dli

- Set parameters required for Source Job Configuration.

Table 2 Source job configuration parameters Parameter

Value

Source Link Name

Select the name of the data source created in 1.a.

Database Name

Select the name of the MRS Hive database you want to migrate to DLI. For example, the default database.

Table Name

Select the name of the Hive table. In this example, a database created on DLI and the user_info table are selected.

readMode

In this example, HDFS is selected.

Two read modes are available: HDFS and JDBC. By default, the HDFS mode is used. If you do not need to use the WHERE condition to filter data or add new fields on the field mapping page, select the HDFS mode.

The HDFS mode shows good performance, but in this mode, you cannot use the WHERE condition to filter data or add new fields on the field mapping page.

The JDBC mode allows you to use the WHERE condition to filter data or add new fields on the field mapping page.

For details about parameter settings, see From Hive.

- Set parameters required for Destination Job Configuration.

Table 3 Destination job configuration parameters Parameter

Value

Destination Link Name

Select the DLI data source connection created in 1.b.

Resource Queue

Select a created DLI SQL queue.

Database

Select a created DLI database. In this example, database testdb created in Create a database and table on DLI is selected.

Table

Select the name of a table in the database. In this example, table user_info created in Create a database and table on DLI is created.

Clear data before import

Whether to clear data in the destination table before data import. In this example, set this parameter to No.

If this parameter is set to Yes, data in the destination table will be cleared before the task is started.

For details about parameter settings, see To DLI.

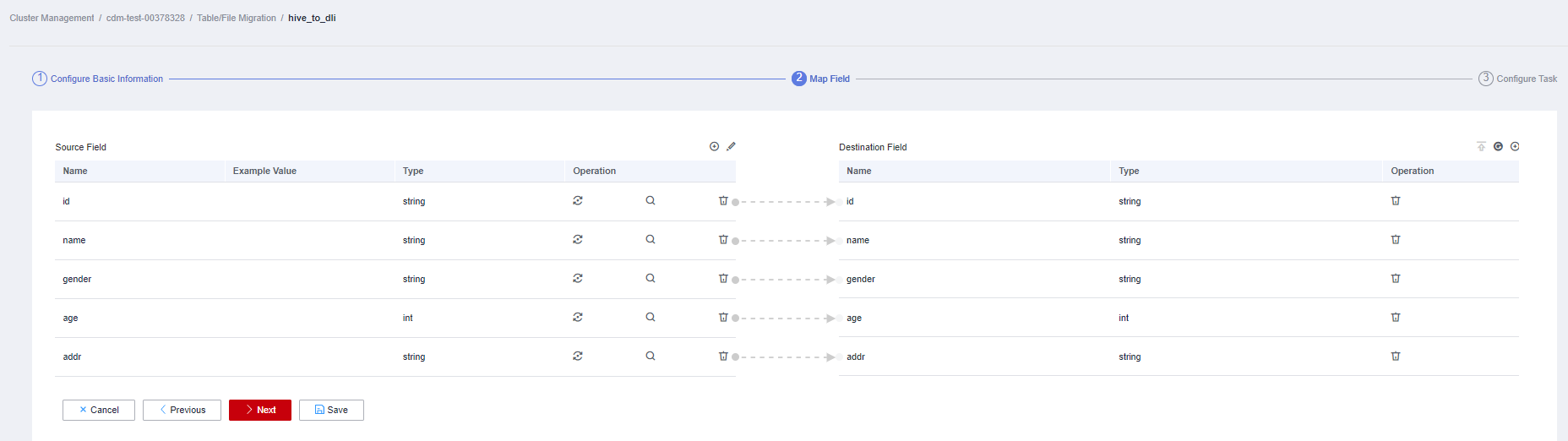

- Click Next. The Map Field page is displayed. CDM automatically matches the source and destination fields.

- If the field mapping is incorrect, you can drag the fields to adjust the mapping.

- If the type is automatically created at the migration destination, you need to configure the type and name of each field.

- CDM allows for field conversion during migration. For details, see Field Conversion.

Figure 8 Field mapping

- Click Next and set task parameters. Generally, retain the default values of all parameters.

In this step, you can configure the following optional functions:

- Retry Upon Failure: If the job fails to be executed, you can determine whether to automatically retry. Retain the default value Never.

- Group: Select the group to which the job belongs. The default group is DEFAULT. On the Job Management page, jobs can be displayed, started, or exported by group.

- Scheduled Execution: For details about how to configure scheduled execution, see Scheduling Job Execution. Retain the default value No.

- Concurrent Extractors: Enter the number of extractors to be concurrently executed. Retain the default value 1.

- Write Dirty Data: Specify this parameter if data that fails to be processed or filtered out during job execution needs to be written to OBS. Before writing dirty data, create an OBS link. You can view the data on OBS later. Retain the default value No so that dirty data is not recorded.

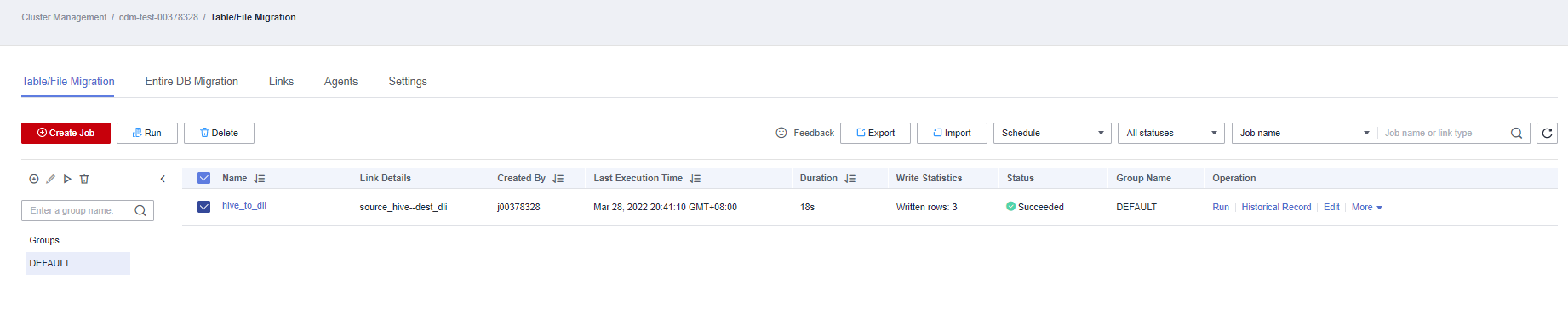

- Click Save and Run. On the Job Management page, you can view the job execution progress and result.

Figure 9 Job progress and execution result

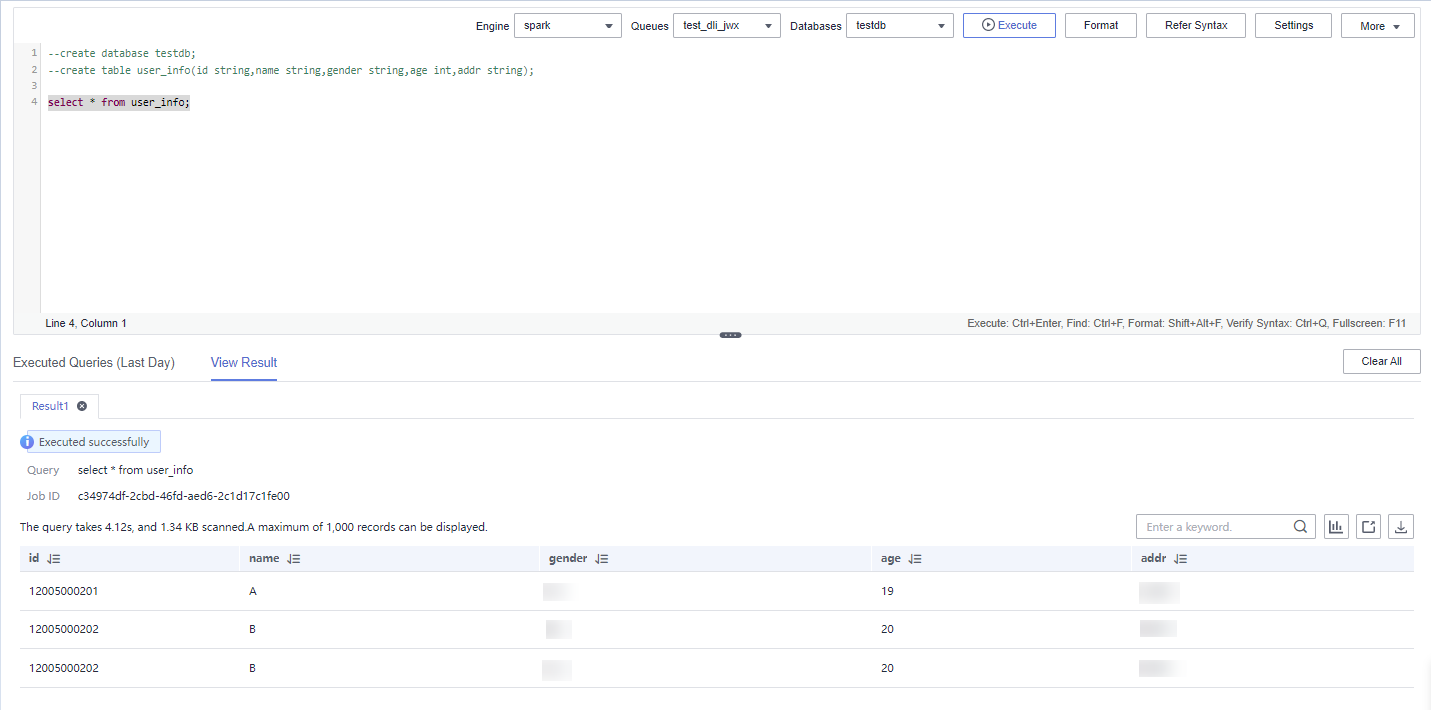

Step 3: Query Results

select * from user_info;

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot