Changing the Consistency Level of a Proxy Instance

Scenarios

TaurusDB read/write splitting distributes SELECT queries to read replicas to reduce load on the primary node. The primary node and read replicas share the underlying storage, ensuring eventual consistency of the read replicas. However, data updates on the primary node still need to be synchronized to read replicas through redo logs, which may cause latency due to write pressure. As a result, under eventual consistency, SELECT queries distributed to read replicas may not return the latest data.

To address this, TaurusDB provides three consistency levels: eventual consistency, session consistency, and global consistency.

You can configure a consistency level when creating a proxy instance. You can also change the consistency level of a proxy instance after it is created.

This section describes how to change the consistency level of a proxy instance.

Consistency Levels

Eventual consistency

- Function

After a proxy instance is created, SELECT requests are routed to different nodes based on their read weights. Because replication latency exists between the primary node and each read replica, and that latency varies from one read replica to another, repeatedly executing the same SELECT statement within a session may return different results. In this case, only eventual consistency is ensured.

- Use case

You can select eventual consistency if you want to offload read requests from the primary node to read replicas or if read requests far outnumber write requests in your workloads.
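As a rough illustration of why repeated SELECT statements can return different results under eventual consistency, the following Python sketch routes reads by weight to nodes that have applied different amounts of redo log. The node names, weights, and row values are hypothetical, and the routing function is a simplification rather than the proxy's actual implementation.

```python
import random

# Hypothetical read weights: the primary takes no reads, the replicas share them.
READ_WEIGHTS = {"primary": 0, "replica-1": 100, "replica-2": 100}

# Hypothetical value of row x1 on each node. replica-2 has not yet applied
# the latest redo log, so it still holds the old value.
NODE_DATA = {"primary": 4, "replica-1": 4, "replica-2": 3}


def route_select(weights, rng):
    """Pick a node for a SELECT in proportion to its read weight."""
    nodes = [name for name, weight in weights.items() if weight > 0]
    return rng.choices(nodes, weights=[weights[n] for n in nodes], k=1)[0]


def run_selects(count, seed=0):
    """Run `count` SELECTs in one session and collect the values returned."""
    rng = random.Random(seed)
    return [NODE_DATA[route_select(READ_WEIGHTS, rng)] for _ in range(count)]


if __name__ == "__main__":
    # The same query may return 3 or 4 depending on which replica serves it.
    print(run_selects(10))
```

Each call may land on a replica at a different replication position, which is why the values in the returned list are not guaranteed to agree.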

Session consistency

- Function

Session consistency eliminates the inconsistencies that eventual consistency allows within a session. It ensures that each SELECT statement in a session returns data that reflects the last write request made in that session.

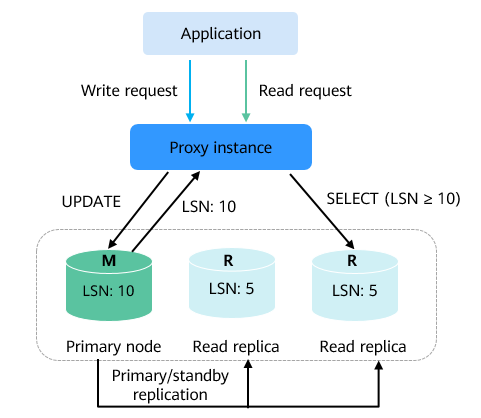

- Principles

Proxy instances record the log sequence number (LSN) of each node and session. When data in a session is updated, a proxy instance records the LSN of the primary node as a session LSN. When a read request arrives subsequently, the proxy instance compares the session LSN with the LSN of each node and routes the request to a node whose LSN is at least equal to the session LSN. This ensures session consistency.

With session consistency, if replication latency between the primary node and read replicas is significant and the LSN of every read replica is smaller than the session LSN, SELECT requests are routed to the primary node. This increases the load on the primary node.

Figure 1 Principle of session consistency
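The LSN comparison described above can be sketched in Python as follows. This is a minimal illustration, not the proxy's code: the `Node` class, the function names, and the rule of preferring the first caught-up replica are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    lsn: int
    is_primary: bool = False


def eligible_nodes(nodes, session_lsn):
    """Return the nodes whose LSN is at least the session LSN."""
    return [node for node in nodes if node.lsn >= session_lsn]


def route_read(nodes, session_lsn):
    """Route a read to a caught-up replica, or to the primary if none exists."""
    replicas = [n for n in eligible_nodes(nodes, session_lsn) if not n.is_primary]
    if replicas:
        # Assumption: take the first caught-up replica. A real proxy would
        # also apply read weights among the eligible nodes.
        return replicas[0]
    # Every replica lags the session LSN, so the primary serves the read.
    return next(n for n in nodes if n.is_primary)


if __name__ == "__main__":
    cluster = [Node("primary", 10, True), Node("replica-1", 8), Node("replica-2", 10)]
    print(route_read(cluster, session_lsn=10).name)
```

When both replicas lag (for example, LSNs 8 and 9 against a session LSN of 10), the same function returns the primary, which is the fallback that raises primary load under high replication latency.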

- Use case

The higher the consistency level, the heavier the load on the primary node. If your application requires that data written within a session be immediately available for query, you can select session consistency.

Global consistency

- Function

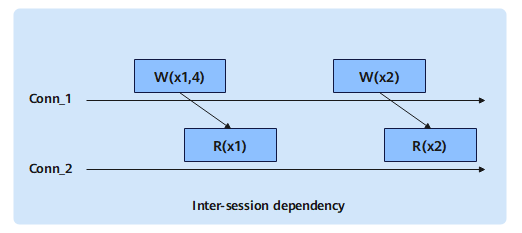

Session consistency ensures that data updated before a read request is executed can be queried in the same session. For example, in Figure 2, read request R(x1) depends on the data updated by write request W(x1,4). However, dependencies sometimes exist between sessions. For example, in Figure 3, R(x1) over Conn_2 depends on the data updated by W(x1,4) over Conn_1. In this case, session consistency cannot ensure the consistency of query results. To solve this problem, TaurusDB provides global consistency, which ensures data consistency across sessions: once data is written, any session can immediately query the updated data.

- Principles

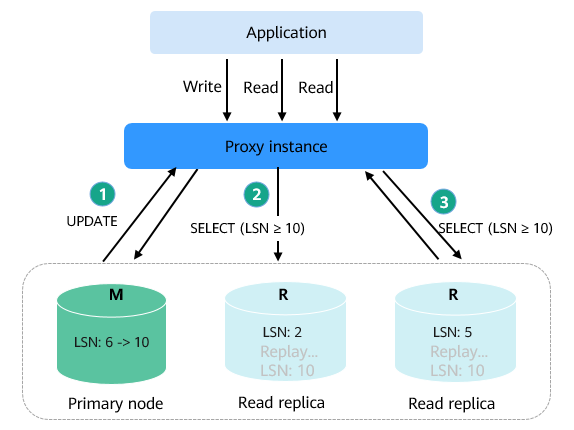

TaurusDB uses its minimal replication latency and shared distributed log storage to provide strongly consistent reads on read replicas, implemented entirely at the kernel level. When global consistency is enabled, the LSN of the primary node is updated to the latest value after a data update operation is complete in a session. When a read request is received, a proxy instance routes the request to a node based on its routing policy. Because of the replication latency between the primary node and read replicas, the LSNs of read replicas may still lag behind that of the primary node. In this case, the kernel immediately accelerates the LSN updates of the read replicas and completes LSN synchronization between the read replicas and the primary node within milliseconds. The read request is answered only after synchronization is complete. This ensures that the read request can access any data that was updated up to the moment the request was initiated.

As shown in the following figure, after an UPDATE statement is sent to the primary node, the primary node's LSN increases from 6 to 10. For the first SELECT statement sent to a read replica, the latest results are only returned after the read replica's LSN increases from 2 to 10. Similarly, for the second SELECT statement sent to another read replica, the latest results are only returned after the read replica's LSN increases from 5 to 10.

Figure 4 Principle of global consistency
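The example above (primary at LSN 10, read replicas at LSN 2 and LSN 5) can be mimicked with a small Python simulation. It is only a sketch of the "wait until the replica catches up, then answer" idea: the function name and the `apply_step` parameter (how far a replica advances per round) are hypothetical and do not describe the kernel's actual mechanism.

```python
def wait_for_lsn(replica_lsn, target_lsn, apply_step):
    """Advance a replica's LSN until it reaches target_lsn.

    Returns (rounds, final_lsn). The read is answered only after the loop
    ends, that is, once the replica has caught up with the primary.
    """
    if apply_step <= 0:
        raise ValueError("apply_step must be positive")
    rounds = 0
    while replica_lsn < target_lsn:
        # Apply the next batch of redo log without overshooting the target.
        replica_lsn = min(replica_lsn + apply_step, target_lsn)
        rounds += 1
    return rounds, replica_lsn


if __name__ == "__main__":
    primary_lsn = 10  # after the UPDATE, as in the example above
    for start in (2, 5):
        rounds, final = wait_for_lsn(start, primary_lsn, apply_step=4)
        print(f"replica at LSN {start} answered at LSN {final} after {rounds} rounds")
```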

- Use case

When there is notable latency between the primary node and read replicas, choosing global consistency may cause more requests to be routed to the primary node. The increased load on the primary node may lead to higher workload latency. Therefore, you are advised to select global consistency when read operations outnumber write operations.

- Parameter setting

There are two parameters related to global consistency.

Table 1 Global consistency parameters

| Parameter | Description |
| --- | --- |
| consistTimeout | The timeout period for updating the LSNs of read replicas to the latest LSN of the primary node. Value range: 0 to 60000, in milliseconds; default value: 20 |
| consistTimeoutPolicy | If the LSNs of read replicas cannot be updated to the latest LSN of the primary node within the specified value of the consistTimeout parameter, a proxy instance performs the operation that is specified by the consistTimeoutPolicy parameter. Value range: 1 (default value): The proxy instance sends read requests to the primary node. 0: The proxy instance returns an error: ERROR 7550: Query execution was interrupted, wait replication complete timeout,please retry |
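How the two parameters interact can be sketched in Python as below. The function and exception names are hypothetical, and treating a catch-up time exactly equal to consistTimeout as "in time" is an assumption of this sketch; only the value ranges, defaults, and the two policy outcomes come from the parameter descriptions above.

```python
class ReplicationTimeoutError(Exception):
    """Hypothetical stand-in for ERROR 7550 returned to the client."""


def handle_read(catch_up_ms, consist_timeout_ms=20, consist_timeout_policy=1):
    """Decide how a read is served, given how long the replica needs to catch up."""
    if not 0 <= consist_timeout_ms <= 60000:
        raise ValueError("consistTimeout must be between 0 and 60000 ms")
    if consist_timeout_policy not in (0, 1):
        raise ValueError("consistTimeoutPolicy must be 0 or 1")

    # Assumption: catching up exactly at the timeout still counts as in time.
    if catch_up_ms <= consist_timeout_ms:
        return "replica"
    if consist_timeout_policy == 1:
        # Default policy: send the read request to the primary node.
        return "primary"
    # Policy 0: return an error to the client.
    raise ReplicationTimeoutError(
        "ERROR 7550: Query execution was interrupted, "
        "wait replication complete timeout,please retry"
    )


if __name__ == "__main__":
    print(handle_read(catch_up_ms=5))    # replica catches up within 20 ms
    print(handle_read(catch_up_ms=50))   # times out, default policy applies
```

With policy 0, a client would need to catch the error and retry, whereas policy 1 trades that error for extra load on the primary node.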

- Precautions

- Global consistency takes effect only for new connections and supports only proxy connections.

- The global consistency switch is bound to a proxy instance. You can enable global consistency for one or more proxy instances (if any).

- Once global consistency is enabled for a read-only proxy instance, you cannot modify consistTimeoutPolicy. If you try to modify this parameter, an error message will be sent to the client.

- Global consistency is not available when query cache is enabled for read replicas.

- Enabling global consistency and choosing the default consistTimeoutPolicy parameter may reduce the read/write performance of the entire database cluster due to replication latency between the primary node and read replicas. You can adjust the consistTimeout parameter to tune performance.

- If global consistency is enabled while read replicas lag behind the primary node and are heavily loaded, the number of active connections on the read replicas increases and the read performance of the DB instance deteriorates. You are advised to set the routing policy of the proxy instance to load balancing so that read requests are distributed based on the number of active connections. In this way, the read capacity of the DB instance can be fully utilized.

- If global consistency is enabled and the CPU is fully utilized due to heavy load, workloads may be intermittently interrupted.

Supported Versions

- To use session consistency, the kernel versions of TaurusDB instances must be 2.0.54.1 or later, and the kernel versions of proxy instances must be 2.7.4.0 or later.

- Global consistency is only available when the TaurusDB instance kernel is 2.0.57.240900 or later and the proxy instance kernel is 2.25.06.000 or later.

For details about how to check the kernel version, see How Can I Check the Version of a TaurusDB Instance?
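Once you know both kernel versions, the requirements above can be checked with a short Python sketch. It assumes that kernel versions compare numerically, component by component (so 2.25.06.000 is later than 2.7.4.0); the function names and the `MIN_VERSIONS` table layout are made up for this example.

```python
# Minimum kernel versions taken from the list above.
MIN_VERSIONS = {
    "session": {"instance": "2.0.54.1", "proxy": "2.7.4.0"},
    "global": {"instance": "2.0.57.240900", "proxy": "2.25.06.000"},
}


def parse_version(version):
    """Turn '2.0.57.240900' into (2, 0, 57, 240900) for numeric comparison."""
    return tuple(int(part) for part in version.split("."))


def supports(level, instance_version, proxy_version):
    """Return True if both kernels meet the minimum for the consistency level."""
    required = MIN_VERSIONS[level]
    return (
        parse_version(instance_version) >= parse_version(required["instance"])
        and parse_version(proxy_version) >= parse_version(required["proxy"])
    )


if __name__ == "__main__":
    print(supports("session", "2.0.54.1", "2.7.4.0"))
    print(supports("global", "2.0.54.1", "2.25.06.000"))
```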

Constraints

- Global consistency is in the open beta test (OBT) phase. To use it, submit a service ticket.

- After the consistency level is changed, you must either manually reboot the proxy instance on the management console or re-establish the connections to the proxy instance. For details about how to reboot a proxy instance, see Rebooting a Proxy Instance.

Procedure

- Log in to the TaurusDB console.

- On the Instances page, click the instance name.

- In the navigation pane, choose Database Proxy.

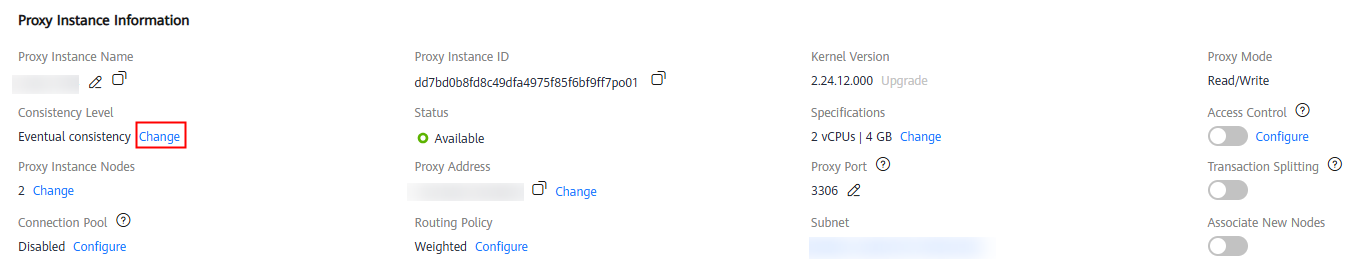

- Click a proxy instance name to go to the Basic Information page. In the Proxy Instance Information area, click Change under Consistency Level.

Figure 5 Changing a consistency level

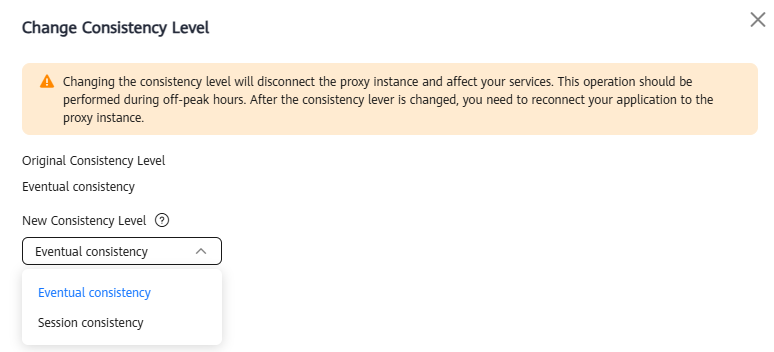

- Click the drop-down arrow and select the required consistency level.

Figure 6 Changing a consistency level

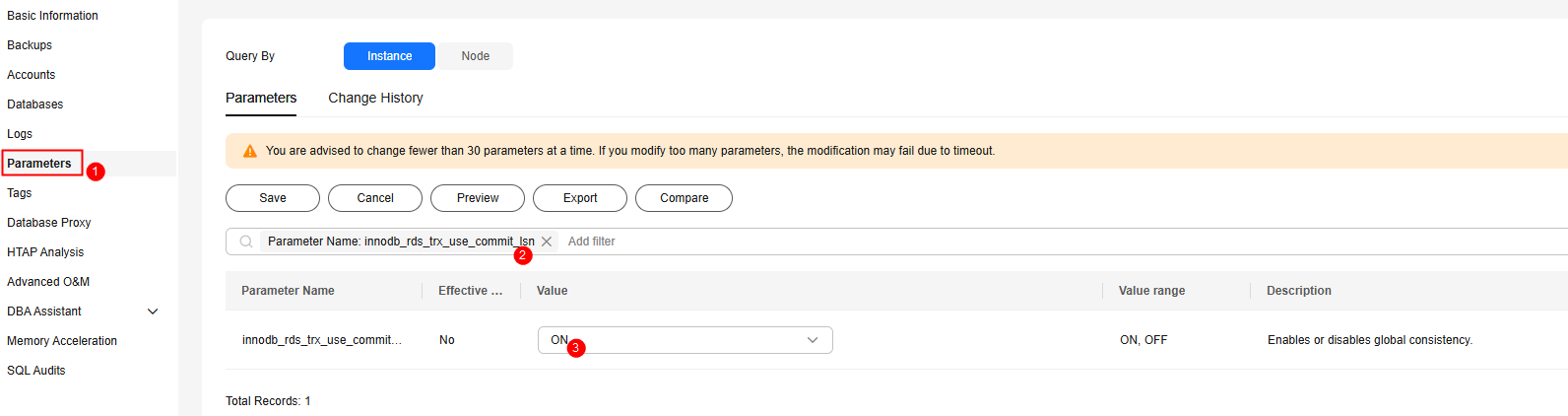

If you select Global consistency, set innodb_rds_trx_use_commit_lsn to ON on the Parameters page of the TaurusDB instance. This parameter controls whether to enable global consistency.

Figure 7 Enabling global consistency

- Click OK. The proxy instance status changes to Changing consistency level.

- After several minutes, check that the proxy instance status becomes Available and the consistency level is updated.
