Using APIs to Call a Third-Party Model

After a pre-trained or trained model is deployed, you can call it through the text dialog API. A third-party inference service can be invoked through Pangu inference APIs (V1 inference APIs) or OpenAI APIs (V2 inference APIs). The V1 and V2 APIs use different authentication modes, and their request and response bodies differ slightly.

| API Type | API URI |
|---|---|
| V1 Inference API | /v1/{project_id}/deployments/{deployment_id}/chat/completions |
| V2 Inference API | /api/v2/chat/completions |
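The two URI patterns can be filled in programmatically. The following is a minimal sketch, not the platform's own tooling: the function name `build_call_url`, the endpoint `https://example.com`, and the IDs are hypothetical placeholders. In practice, copy the full call path from the console as described in Obtaining the Call Path.

```python
from urllib.parse import quote

# Path templates copied from the API URI table.
V1_PATH = "/v1/{project_id}/deployments/{deployment_id}/chat/completions"
V2_PATH = "/api/v2/chat/completions"


def build_call_url(endpoint, api_version, project_id=None, deployment_id=None):
    """Join a service endpoint with the V1 or V2 inference path."""
    base = endpoint.rstrip("/")
    if api_version == "v1":
        if not project_id or not deployment_id:
            raise ValueError("V1 calls need project_id and deployment_id")
        return base + V1_PATH.format(
            project_id=quote(project_id, safe=""),
            deployment_id=quote(deployment_id, safe=""),
        )
    if api_version == "v2":
        return base + V2_PATH
    raise ValueError(f"unknown API version: {api_version!r}")


# Hypothetical endpoint and IDs, for illustration only.
print(build_call_url("https://example.com", "v1", "my-project", "my-deployment"))
print(build_call_url("https://example.com/", "v2"))
```

Only the V1 path carries the project and deployment IDs; the V2 path is fixed.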

Obtaining the Call Path

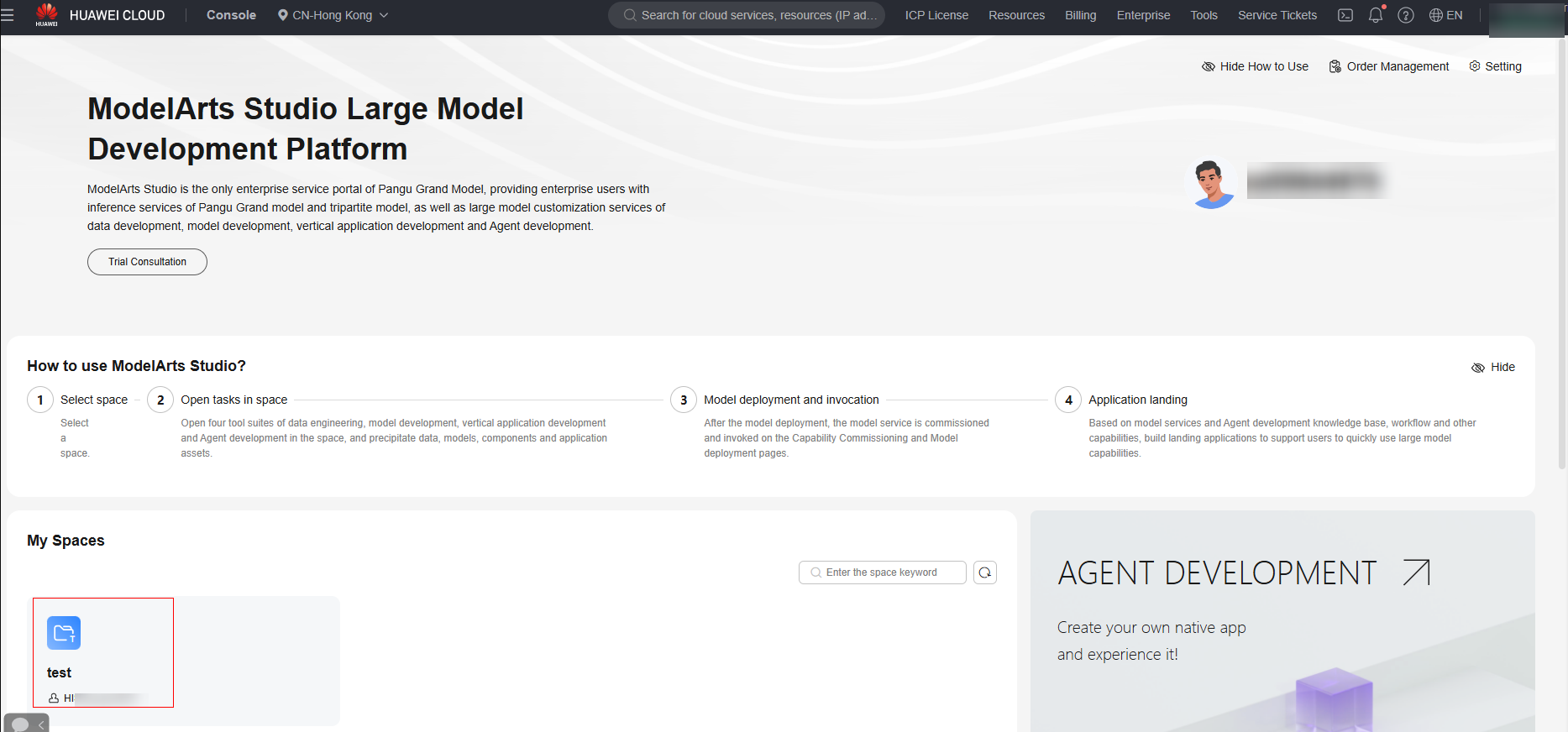

- Log in to ModelArts Studio Large Model Development Platform. In the My Spaces area, click the required workspace.

Figure 1 My Spaces

- Obtain the call path.

In the navigation pane, choose Model Development > Model Deployment.

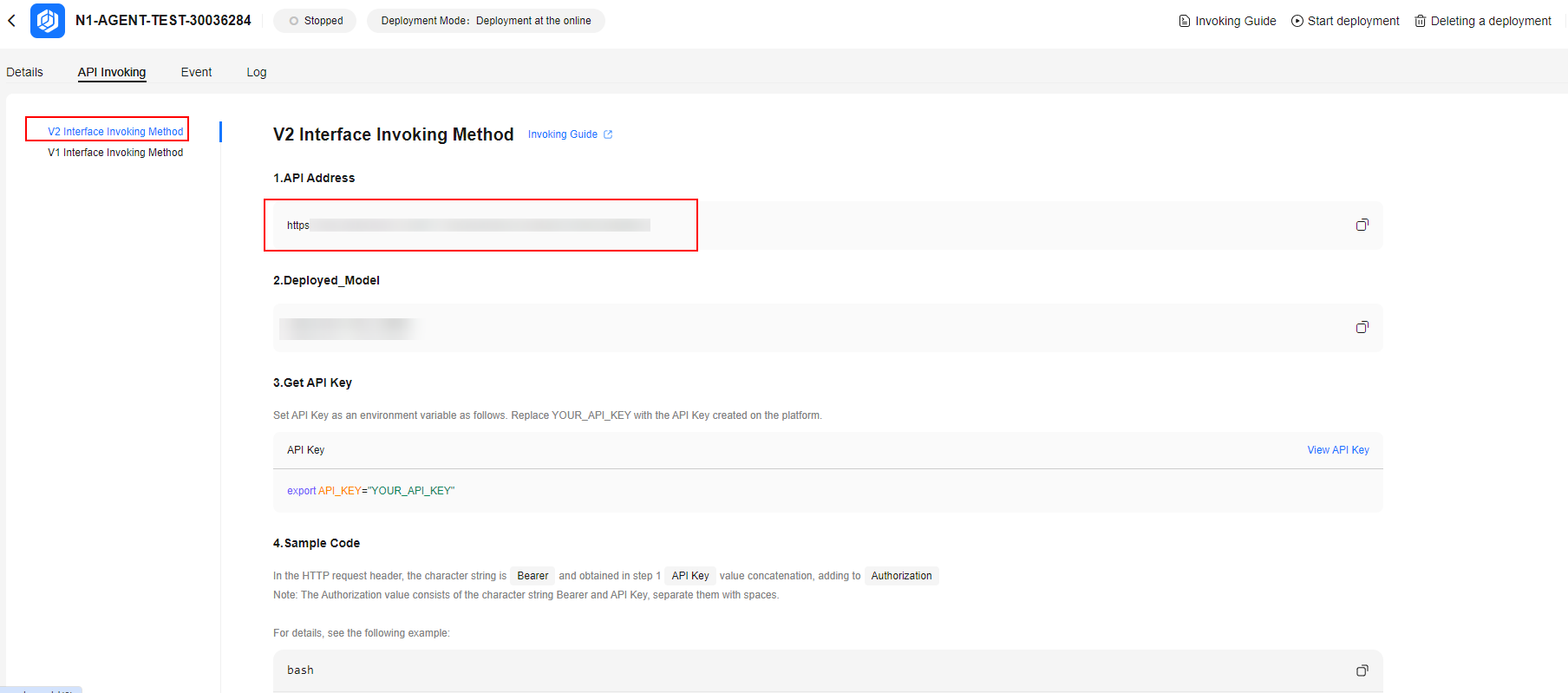

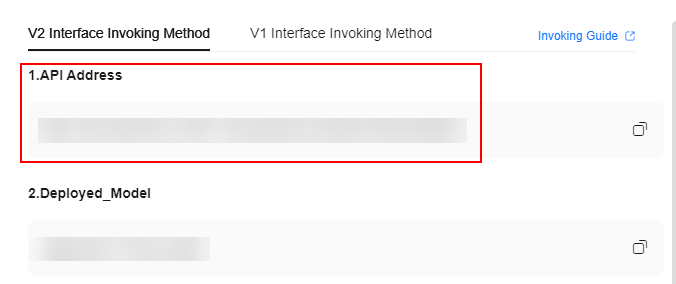

- Obtain the call path of the deployed model. On the My service tab page, click the name of a model in the Running state. On the API call tab page, obtain the model call path and call the model based on the calling method on the tab page, as shown in Figure 2.

- Obtain the call path of the preset service. On the Preset service tab page, select the NLP model to be called and click Call Path. In the Call Path dialog box, obtain the model call path, as shown in Figure 3.

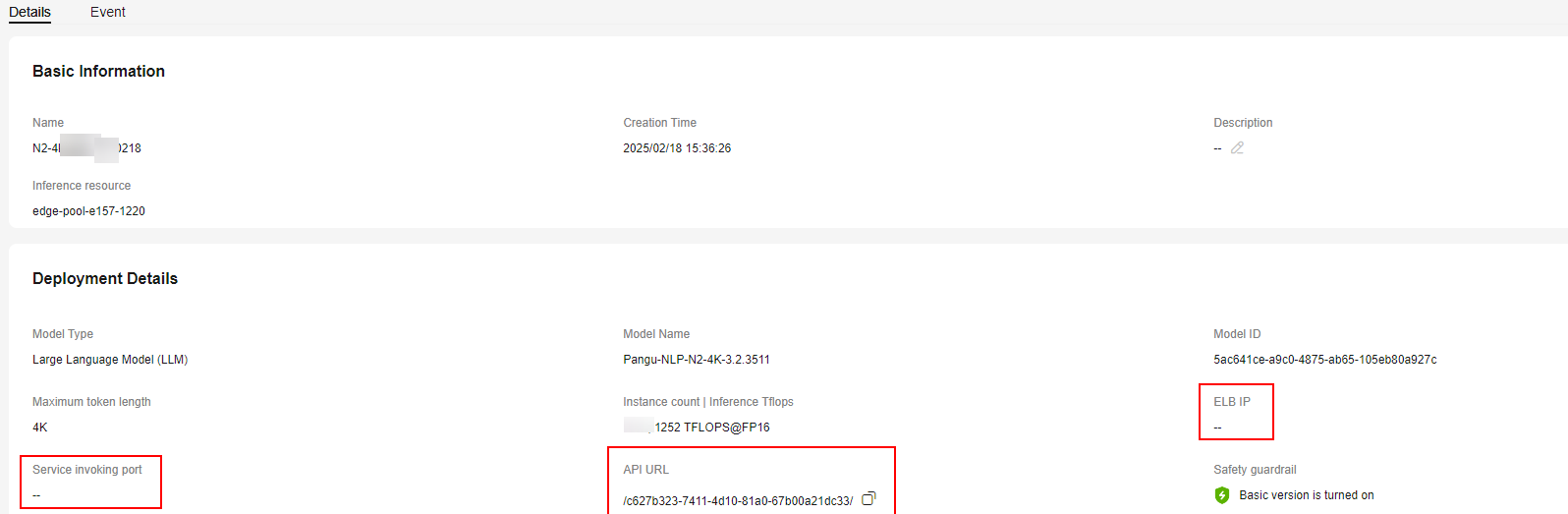

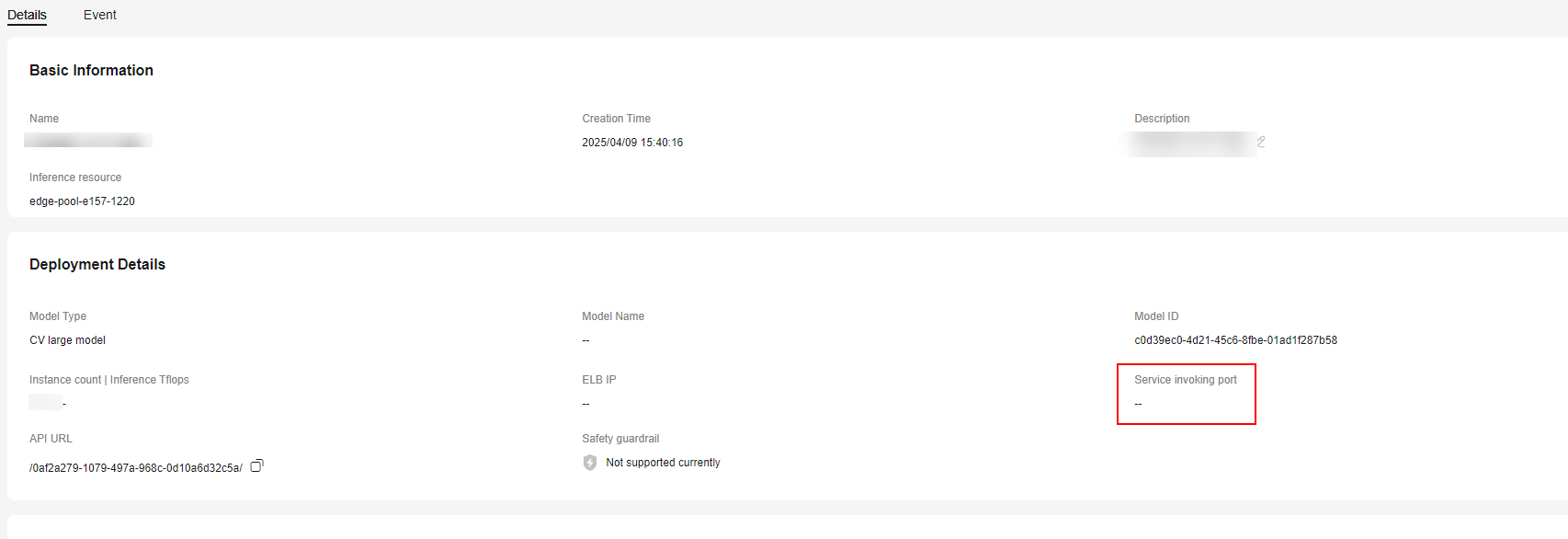

- Obtain the call path of the model in edge deployment mode. On the My service tab page, click the name of a model in the Running state. On the Details tab page, you can obtain the model call path.

Load balancing mode:

The model path is http://{ELB IP address}:{ELB load port}/{API URL}/{Inference API URL}. The ELB IP address must be the corresponding public IP address. The following figure shows how to obtain each part.

Node mode:

The model path is http://{Node IP address}:{Host port}/{Inference API URL}. The node IP address is the IP address of the worker node in the edge pool. The following figure shows how to obtain each part.
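The two edge path formats differ only in whether an API URL segment sits between the port and the inference API URL. The following is a minimal sketch of assembling them; the function names, IP addresses, ports, and URL segments are hypothetical placeholders, and the real values come from the Details tab page.

```python
def _join(*parts):
    """Join path segments with single slashes, skipping empty ones."""
    return "/".join(p.strip("/") for p in parts if p and p.strip("/"))


def edge_call_path(ip, port, inference_api_url, api_url=None):
    """Build an edge-deployment call path.

    Pass api_url for load balancing mode (ip is the ELB public IP address,
    port is the ELB load port). Omit it for node mode (ip is the worker
    node IP address, port is the host port).
    """
    return f"http://{ip}:{port}/{_join(api_url, inference_api_url)}"


# Hypothetical values, for illustration only.
# Load balancing mode: http://{ELB IP address}:{ELB load port}/{API URL}/{Inference API URL}
print(edge_call_path("203.0.113.10", 8443, "/chat/completions", api_url="/my-service/"))
# Node mode: http://{Node IP address}:{Host port}/{Inference API URL}
print(edge_call_path("192.0.2.20", 30080, "chat/completions"))
```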

Using Postman to Call APIs

- Create a POST request in Postman and enter the model call path. For details, see Obtaining the Call Path.

- Select an authentication mode. APIs can be called with token authentication or API key authentication. Use API key authentication when an API service you deployed needs to be opened to other users, because token authentication cannot be used in that case.

Set request header parameters by referring to Table 2.

Table 2 Request header parameters

| Authentication Mode | Parameter | Value |
|---|---|---|
| Token-based authentication | Content-Type | application/json |
| | X-Auth-Token | Obtain the token by following the instructions provided in section "Calling REST APIs for Authentication" > "Token-based Authentication" in API Reference. |
| API key authentication for V1 inference APIs | Content-Type | application/json |
| | X-Apig-AppCode | API key. To obtain the API key, perform the steps listed after this table. |
| API key authentication for V2 inference APIs | Content-Type | application/json |
| | Authorization | A character string consisting of Bearer and the API key obtained from created application access. A space is required between Bearer and the API key. For example, Bearer d59******9C3. |

To obtain the API key, perform the following steps:

- Log in to ModelArts Studio and access the required workspace.

- In the navigation pane, choose System Management & Stats > Application Access. On the displayed page, click Create Application Access in the upper right corner.

- In the application configuration area, select a deployed model and click OK.

- Obtain the API key in the API Key column on the application access page.

Figure 4 shows how to set the request header parameters for token authentication.

- Click Body, select raw, and enter the request body by referring to the following code.

    {
      "messages": [
        {
          "content": "Introduce the Yangtze River and its typical fish species."
        }
      ],
      "temperature": 0.9,
      "max_tokens": 600
    }

- Click Send in Postman to send the request. If the returned status code is 200, the NLP model API is successfully called.
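The same request can be issued from a script instead of Postman. The following is a minimal sketch using only the Python standard library and token authentication. The function names, the call path, and the token value are hypothetical placeholders, and parsing the response as JSON is an assumption, because this page does not describe the response body.

```python
import json
import urllib.request


def build_chat_request(call_path, token, prompt, temperature=0.9, max_tokens=600):
    """Build (but do not send) a POST request with token authentication."""
    body = {
        "messages": [{"content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        call_path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "X-Auth-Token": token},
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return (status code, parsed JSON body).

    Assumes the service replies with a JSON document. urlopen raises
    urllib.error.HTTPError for non-2xx status codes.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, json.loads(response.read().decode("utf-8"))


# Hypothetical call path and token, for illustration only.
req = build_chat_request(
    "https://example.com/v1/my-project/deployments/my-deployment/chat/completions",
    "<your-token>",
    "Introduce the Yangtze River and its typical fish species.",
)
print(req.get_method(), req.full_url)
# status, reply = send(req)  # uncomment with a real call path and token
```

Building the request separately from sending it makes it easy to inspect the headers and body before any network call is made.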

API Key Authentication

If an inference service you deployed needs to be opened to other users, token-based authentication is not supported. In this case, use API key authentication to call the APIs.

API key authentication is a lightweight mechanism that authenticates an API call by adding the X-Apig-AppCode parameter (value: API key) to the HTTP request header. The API service verifies only the API key. For V2 inference APIs, the API key is instead passed in the Authorization header, as listed in Table 2.

To obtain the API key, perform the following steps:

- Log in to ModelArts Studio and access the required workspace.

- In the navigation pane, choose System Management & Stats > Application Access. On the displayed page, click Create Application Access in the upper right corner.

- In the Associated Services area, select All services or specify a deployed inference service, set the application access name and description, and click OK.

- Obtain the API key in the API Key column on the application access page.
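Once the API key is available, the request headers differ between V1 and V2 inference APIs, as listed in Table 2. The following is a minimal sketch of building both header sets; the function name `api_key_headers` and the key value are hypothetical placeholders.

```python
def api_key_headers(api_key, api_version):
    """Return request headers for API key authentication."""
    headers = {"Content-Type": "application/json"}
    if api_version == "v1":
        # V1 inference APIs carry the key in X-Apig-AppCode.
        headers["X-Apig-AppCode"] = api_key
    elif api_version == "v2":
        # V2 inference APIs use "Bearer", one space, then the key.
        headers["Authorization"] = f"Bearer {api_key}"
    else:
        raise ValueError(f"unknown API version: {api_version!r}")
    return headers


# Placeholder key, for illustration only.
print(api_key_headers("<your-api-key>", "v1"))
print(api_key_headers("<your-api-key>", "v2"))
```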
