Using the Experience Center Function to Call a Third-Party Model

The Experience Center function allows you to call preset or trained third-party models. Before using this function, deploy the model. For details, see Creating a Third-Party Model Deployment Task.

You can use the Experience Center function to hold a text dialog with a third-party model: you enter a question in the text box, and the model outputs an answer to it. The procedure is as follows:

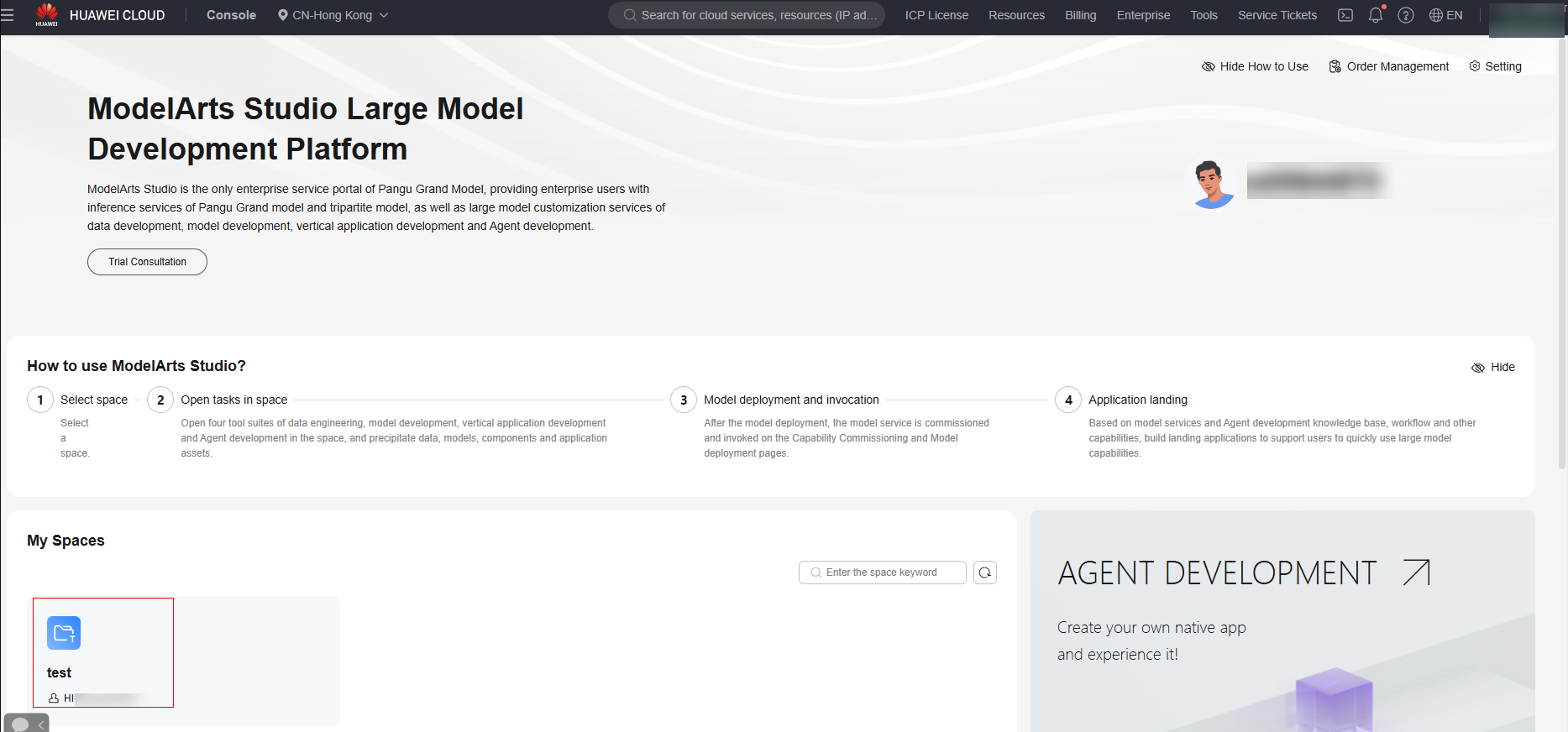

- Log in to ModelArts Studio Large Model Development Platform. In the My Spaces area, click the required workspace.

Figure 1 My Spaces

- In the navigation pane, choose Experience Center > Text Dialog.

- Select the service to be called. You can select a preset service or a service from My Services.

- Enter the system persona, for example, "You are an AI assistant." If you do not specify one, the default system persona is used.

- Set parameters on the right of the page. For details about the parameters, see Table 1.

Table 1 Parameters for third-party models on Experience Center

| Parameter | Description |
| --- | --- |
| temperature | Controls the diversity and creativity of the generated text. Increasing the value makes the model output more diverse and creative. |
| Nucleus sampling | Controls the quality and diversity of the generated text. Increasing the value makes the model output more diverse. |
| Topic repetition control | Penalty applied to repetition in the generated text. Increasing the value makes the model switch topics more often, which helps prevent duplicate content. |
| Vocabulary repetition control | Controls how the model penalizes new words based on how often they already appear in the generated text. Increasing the value reduces how often the model reuses the same word and encourages more varied wording. |
| Output maximum token length | Controls the length of the reply generated by the model. A larger max_tokens value generally allows a complete reply but may increase the risk of irrelevant or duplicate content. A smaller max_tokens value produces a shorter reply but may leave it incomplete or incoherent. Select a value that suits your scenario. |
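To make the effect of the sampling parameters in Table 1 more concrete, the following is a minimal Python sketch over a toy three-token vocabulary. It is not the platform's implementation. It assumes that "Topic repetition control" and "Vocabulary repetition control" behave like the presence and frequency penalties used by common chat APIs, and every function and variable name is hypothetical.

```python
import math

def apply_penalties(logits, counts, presence_penalty=0.0, frequency_penalty=0.0):
    """Lower the score of tokens that already appear in the output.

    Assumption: the presence penalty is a flat deduction for any token seen
    at least once, and the frequency penalty grows with the number of uses.
    """
    adjusted = {}
    for token, logit in logits.items():
        n = counts.get(token, 0)
        seen = 1 if n > 0 else 0
        adjusted[token] = logit - presence_penalty * seen - frequency_penalty * n
    return adjusted

def softmax_with_temperature(logits, temperature=1.0):
    """Turn scores into probabilities; a higher temperature flattens them."""
    scaled = {t: v / temperature for t, v in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {t: math.exp(v - peak) for t, v in scaled.items()}
    total = sum(exps.values())
    return {t: e / total for t, e in exps.items()}

def nucleus_filter(probs, top_p=1.0):
    """Keep the most likely tokens until their cumulative probability
    reaches top_p, then renormalize the survivors."""
    kept, cumulative = {}, 0.0
    for token, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[token] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {t: p / total for t, p in kept.items()}

# Toy scores for the next token (illustrative values only).
logits = {"cat": 2.0, "dog": 1.0, "fish": 0.0}

cold = softmax_with_temperature(logits, temperature=0.5)  # sharper distribution
hot = softmax_with_temperature(logits, temperature=2.0)   # flatter distribution

probs = softmax_with_temperature(logits, temperature=1.0)
narrow = nucleus_filter(probs, top_p=0.5)  # only the top token survives
wide = nucleus_filter(probs, top_p=0.9)    # the top two tokens survive

# "cat" has already been generated three times, so its score drops.
penalized = apply_penalties(logits, {"cat": 3},
                            presence_penalty=0.5, frequency_penalty=0.5)
```

In this sketch, a lower temperature concentrates probability on "cat", a larger nucleus value admits more candidate tokens, and the penalties push the model away from a word it has already used.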

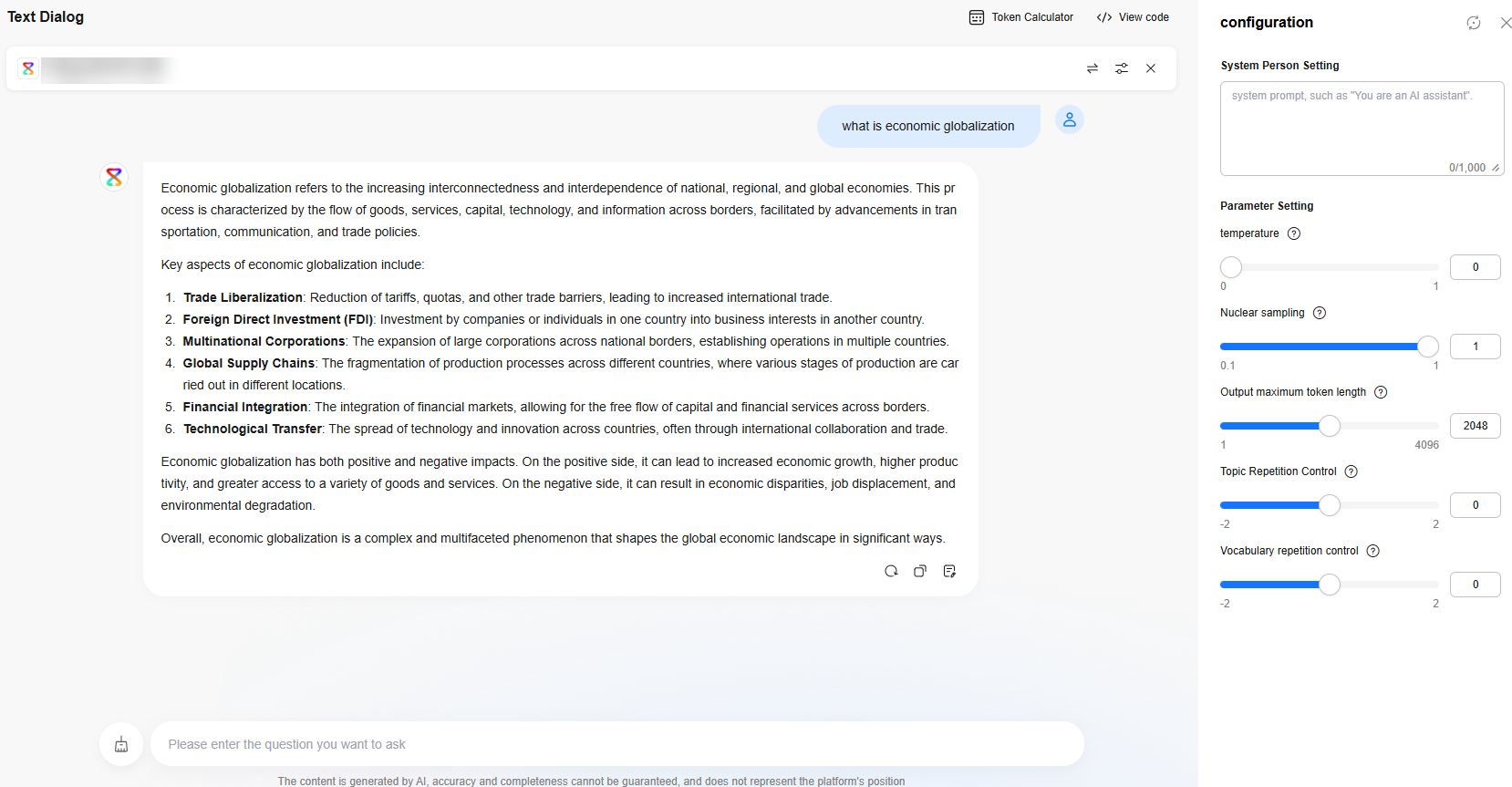

- Enter your question in the text box and click Generate. The model outputs the corresponding answer, as shown in Figure 2.
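The inputs collected in the steps above (system persona, question, and parameter settings) can be pictured as a single chat-style request. The sketch below only assembles such a structure in memory. It makes no network call, and the helper name and every field name except max_tokens are assumptions modeled on common chat APIs rather than the platform's documented interface.

```python
def build_dialog_request(question, system_persona=None, **sampling):
    """Assemble a chat-style payload (hypothetical field names)."""
    messages = []
    if system_persona:
        # When no persona is given, the message is left out so that the
        # service can apply its own default system persona.
        messages.append({"role": "system", "content": system_persona})
    messages.append({"role": "user", "content": question})

    payload = {"messages": messages}
    # Forward only the parameters the caller actually set.
    payload.update({k: v for k, v in sampling.items() if v is not None})
    return payload

request = build_dialog_request(
    "What is a large language model?",
    system_persona="You are an AI assistant.",
    temperature=0.7,   # illustrative value
    max_tokens=512,    # illustrative value
)
```

Leaving the persona out of the payload when it is not specified mirrors the behavior described in the procedure, where the default system persona is used instead.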
