Process of Using PanguLM

Introduction to the PanguLM Service

The PanguLM service includes third-party large models and the ModelArts Studio large model development platform. This "model + model development platform" portfolio lets customers across industries develop and deploy large models in one place.

The ModelArts Studio large model development platform is the development platform provided by the PanguLM service. It integrates data management, model training, and model deployment so that developers can build and deploy large models efficiently. The platform provides three toolchains (data engineering, model development, and application development) that let developers make full use of Pangu models.

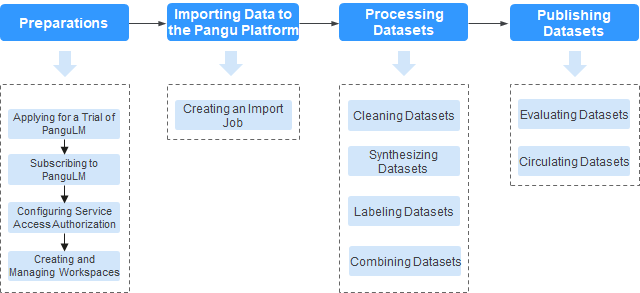

Process of Using Data Engineering

ModelArts Studio provides the data engineering capability to help you construct high-quality datasets, facilitating better model predictions and decision-making.

Figure 1 and Table 1 illustrate the process of using data engineering.

| Procedure | Step | Description | Reference |
|---|---|---|---|
| Preparations | Applying for a trial of the PanguLM service | The PanguLM service is available for trial use. You can use this service only after your trial application is approved. | Applying for Trial Use of ModelArts Studio Large Model Development Platform |
| | Subscribing to the PanguLM service | Before using the PanguLM service, you need to subscribe to the service. | |
| | Configuring service access authorization | To support data storage and model training, you need to grant PanguLM the permission to access OBS. | |
| | Creating and managing Pangu workspaces | Create and centrally manage workspaces. | |
| Importing data to the Pangu platform | Creating an import task | Import data stored in OBS into the platform for centralized management, facilitating subsequent processing or publishing. | |
| Processing datasets | Processing datasets | Use dedicated processing operators to preprocess data, ensuring it meets the model training standards and service requirements. Different types of datasets utilize operators specially designed for removing noise and redundant information, to enhance data quality. | |
| | Generating synthetic datasets | Using either a preset or custom data instruction, process the original data, and generate new data based on a specified number of epochs. This process can extend the dataset to some extent and enhance the diversity and generalization capability of the trained model. | |
| | Labeling datasets | Add accurate labels to unlabeled datasets to ensure high-quality data required for model training. The platform supports both manual annotation and AI pre-annotation. You can choose an appropriate annotation method based on your needs. The quality of data labeling directly impacts the training effectiveness and accuracy of the model. | |
| | Combining datasets based on a specific ratio | Dataset combination involves combining multiple datasets based on a specific ratio and generating a processed dataset. A proper ratio ensures the diversity, balance, and representativeness of datasets and avoids issues resulting from uneven data distribution. | |
| Publishing datasets | Evaluating datasets | The platform offers predefined evaluation standards for multiple types of data. You can choose from these predefined standards or customize evaluation standards as needed to precisely improve data quality, ensure that data meets high standards, and enhance model performance. | |
| | Publishing datasets | Data publishing refers to publishing a single dataset in a specific format as a published dataset for subsequent model training operations. Datasets can be published in standard or Pangu format. | |
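
The idea behind combining datasets based on a specific ratio (see Table 1) can be illustrated offline with a minimal Python sketch. This is not the platform's implementation or API: the function `combine_by_ratio`, its parameters, and the sample records are hypothetical and serve only to show how a ratio translates into per-dataset sample counts.

```python
import random


def combine_by_ratio(datasets, ratios, total, seed=0):
    """Sample about `total` records from several datasets according to `ratios`.

    Hypothetical helper for illustration only; not a PanguLM or ModelArts API.
    """
    if set(datasets) != set(ratios):
        raise ValueError("datasets and ratios must have the same keys")
    weight_sum = sum(ratios.values())
    if weight_sum <= 0:
        raise ValueError("ratios must sum to a positive value")

    rng = random.Random(seed)
    combined = []
    for name, records in datasets.items():
        # Target share of this dataset in the combined output.
        quota = round(total * ratios[name] / weight_sum)
        # Never request more records than the dataset holds.
        quota = min(quota, len(records))
        combined.extend(rng.sample(records, quota))

    # Shuffle so records from different sources are interleaved.
    rng.shuffle(combined)
    return combined


# Made-up sample records standing in for two source datasets.
general = [f"general-{i}" for i in range(100)]
domain = [f"domain-{i}" for i in range(100)]

# Mix general and domain data at a 3:1 ratio, 40 records in total.
mixed = combine_by_ratio(
    {"general": general, "domain": domain},
    {"general": 3, "domain": 1},
    total=40,
)
```

With a 3:1 ratio and a total of 40, the sketch draws 30 records from `general` and 10 from `domain`. Capping each quota at the dataset size means a small source dataset can make the result shorter than `total`; a real pipeline might instead oversample or report the shortfall.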
