Importing Log Files from OBS to LTS (Beta)

Log files stored in OBS buckets can be imported back to LTS once or periodically, so that you can search, analyze, and process them in LTS.

Importing OBS log files to LTS is not a real-time pipeline and is not suitable for service scenarios with strict real-time requirements.

Currently, this function is in closed beta testing and is not open for application.

Importing Files in a Single OBS Bucket to LTS

- Log in to the LTS console.

- In the navigation pane, choose Log Ingestion > Ingestion Center. On the displayed page, select Cloud services under Types. Hover the cursor over the OBS card and click Ingest Log (LTS) to access the configuration page.

Alternatively, choose Log Ingestion > Ingestion Management in the navigation pane. Click Create. On the displayed page, select Cloud services under Types. Hover the cursor over the OBS card and click Ingest Log (LTS) to access the configuration page.

- Select a log group from the Log Group drop-down list. If there is no desired log group, click Create Log Group. For details, see Managing Log Groups.

- Select a log stream from the Log Stream drop-down list. If there is no desired log stream, click Create Log Stream. For details, see Managing Log Streams.

- Click Next: Configurations.

- On the Configurations page, set parameters by referring to Table 1.

Table 1 Configuring the collection Type

Parameter

Description

Basic Settings

Collection Configuration Name

Enter a name containing 1 to 64 characters. Only letters, digits, hyphens (-), underscores (_), and periods (.) are allowed. It cannot start with a period or underscore, or end with a period.
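The naming rule above can be checked locally before you fill in the form. The following is a minimal sketch, not LTS code: the helper name is_valid_config_name is hypothetical, and it assumes that "letters" means ASCII letters.

```python
import re

# Hypothetical local check that mirrors the naming rule stated above.
# Assumption: "letters" means ASCII letters A-Z and a-z.
_NAME_RE = re.compile(
    r"(?![._])"            # must not start with a period or underscore
    r"[A-Za-z0-9._-]{1,64}"  # 1 to 64 allowed characters
    r"(?<!\.)"             # must not end with a period
)


def is_valid_config_name(name: str) -> bool:
    """Return True if name satisfies the documented naming rule."""
    return _NAME_RE.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["obs-import_01", ".hidden", "_tmp", "name.", "a.b"]:
        print(candidate, is_valid_config_name(candidate))
```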

Task Monitoring

Enabled by default.

It logs task execution statuses to log stream lts-system/lts-obs2lts-statistics, allowing you to view OBS file import data on LTS's Task Monitoring Center and configure alarm rules to promptly detect any import issues.

OBS Data Source Configuration

OBS Bucket

Select the OBS bucket from which log files are imported to LTS.

If encryption has been enabled for the selected OBS bucket, select a key name and select I grant LTS permission to use Key Management Service (KMS) to decrypt logs. From the drop-down list under Key Name, select all keys required for OBS file import. Any incorrect or omitted key will cause the log import to fail.

Folder Prefix

Enter the prefix (your_prefix/) or the full path (your_prefix/file.gz) of the OBS files to be imported, so that LTS can locate them more precisely.

Only files whose original size (before compression) is 5 GB or less can be imported.

You can obtain the folder prefix of logs as follows:

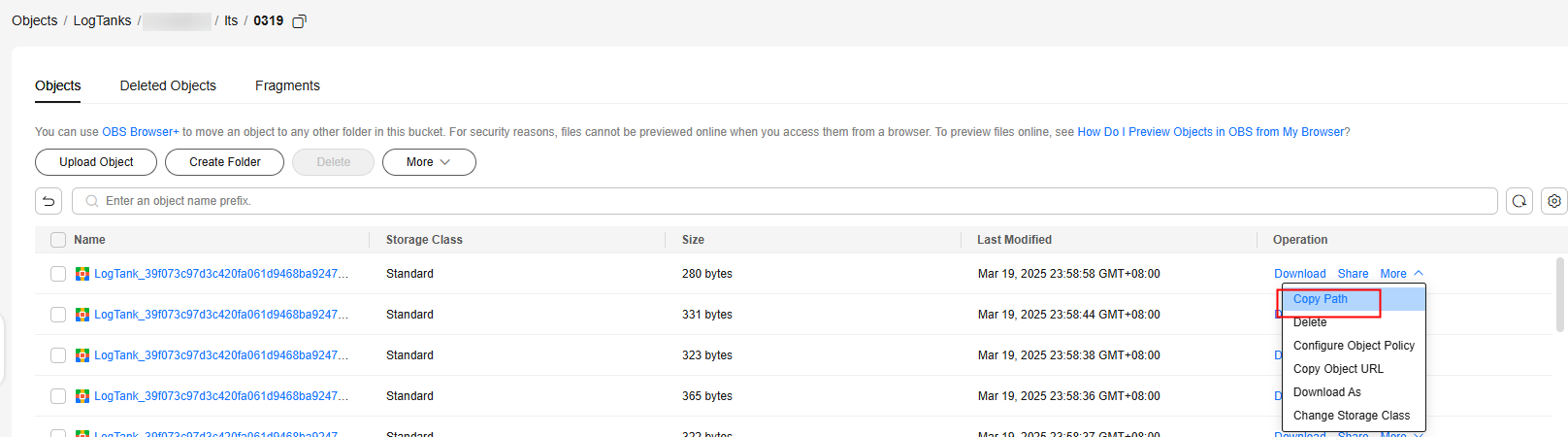

- In the bucket list on OBS Console, click the desired bucket to go to the Objects page.

- On the Objects page, locate the OBS file to be imported to LTS and click More > Copy Path in the Operation column. (The example is for reference only.)

To import logs in the 0319 folder, if the copied path is LogTanks/cn-north-4/lts/0319/LogTank_39f073c97d3c420fa061d9468ba9247c_2025-03-19T15-56-00Z_05d6486f96e43f8a.gz, the folder prefix is LogTanks/cn-north-4/lts/0319.

Figure 1 Copying a path
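The folder prefix in the example above is simply the copied object path with the file name removed. A minimal sketch, assuming the copied path uses forward slashes as in the example (the function name folder_prefix is illustrative, not part of LTS or OBS):

```python
import posixpath


def folder_prefix(copied_path: str) -> str:
    """Return the folder part of an OBS object path copied from the console."""
    # OBS object keys use "/" as the separator, so posixpath is used
    # regardless of the local operating system.
    return posixpath.dirname(copied_path)


if __name__ == "__main__":
    path = (
        "LogTanks/cn-north-4/lts/0319/"
        "LogTank_39f073c97d3c420fa061d9468ba9247c_2025-03-19T15-56-00Z_05d6486f96e43f8a.gz"
    )
    print(folder_prefix(path))
```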

Regular Expression for File Filtering

Enter a regular expression for filtering files, so that only files with names matching the regular expression will be imported. If no regular expression is specified, files are not filtered.

Assume that there are files aab and aba in the directory:

- To match only file aab, use regular expression aab, aa, or ^aab.

- To match only file aba, use regular expression aba, ^aba, or ^ab. Do not use ab, which will also match aab.

- To match both aab and aba, use regular expression ab or a.*.

- If the expression contains regular expression special characters that should be matched literally, escape them. For example, {} must be escaped as \{\}.
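You can try a filter expression locally before saving the configuration. The sketch below assumes that LTS applies the expression as a partial match anywhere in the file name, which is what the aab/aba examples above imply. The regular expression engine used by LTS may differ from Python's re module in other details, and matching_files is a hypothetical helper.

```python
import re


def matching_files(pattern: str, names: list[str]) -> list[str]:
    """Return the file names in which the pattern is found (partial match)."""
    rx = re.compile(pattern)
    return [name for name in names if rx.search(name)]


if __name__ == "__main__":
    files = ["aab", "aba"]
    for pattern in ["aa", "^ab", "ab", "a.*"]:
        print(pattern, matching_files(pattern, files))
    # re.escape adds the backslashes needed to match special characters literally.
    print(re.escape("{}"))
```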

Compressed Format

Auto check, non-compression, and gzip/zip/snappy compression are supported. If you select ZIP, only zip packages containing a single file without any folders are supported.

Import Interval

- One-off: LTS imports files only once and does not detect new files. Only OBS files in the Standard storage class can be imported. If you want to import OBS files in the Archive storage class, restore them to the Standard storage class first.

- Custom interval: LTS automatically detects new files and imports them at a fixed interval.

If you enable Restore Archived Files, OBS files in the Archive storage class can be restored. This option must be enabled for Archive files. Restoring Archive files takes some time (an expedited restore of Archive files takes 1 to 5 minutes). For details, see Object Restore Option and Time Required. The first time you click Preview, it might time out. Try clicking it again.

- When a periodic task scans OBS files for the first time, it covers files whose last modification time falls within (first run time minus the fixed interval, first run time]. For example, if the periodic task first runs at 12:00:00 and the fixed interval configured for the OBS import task is 10 minutes, the first scan covers files last modified within (11:50:00, 12:00:00]. The second run is at 12:10:00, so it covers files last modified within (12:00:00, 12:10:00].

- If a one-off task fails to process a file, it will not parse or report any other scanned files to LTS.

- If a custom-interval task is disabled and then enabled again, the monitoring data continuity can be maintained for up to one day.

- If a custom-interval task fails to process files in an interval, it will not parse or report any other files scanned during that interval to LTS.
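The scan windows described above are half-open on the left: a file modified exactly at the start of a window belongs to the previous run. The following sketch reproduces the two windows from the example. Extending the same rule to later runs is an assumption, and scan_window and in_window are illustrative names rather than LTS functions.

```python
from datetime import datetime, timedelta


def scan_window(first_run: datetime, interval: timedelta, run_index: int):
    """Return (start, end) of the window scanned by run number run_index (0-based).

    The window is (start, end]: start is excluded and end is included.
    """
    end = first_run + run_index * interval
    return end - interval, end


def in_window(modified: datetime, window) -> bool:
    """Return True if a file's last modification time falls inside the window."""
    start, end = window
    return start < modified <= end


if __name__ == "__main__":
    first = datetime(2025, 3, 19, 12, 0, 0)
    ten_minutes = timedelta(minutes=10)
    for i in range(2):
        start, end = scan_window(first, ten_minutes, i)
        print(f"run {i + 1}: ({start:%H:%M:%S}, {end:%H:%M:%S}]")
```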

Filter by File Modification Time

When Import Interval is set to One-off, files can be filtered by modification time.

- If you select All, files are not filtered by modification time.

- If you select From a certain time, set a start time. Files will be filtered by the set time.

- If you select Specified time period, set a start time and end time. Files will be filtered based on the specified time range.

Data Format Configuration

Log File Code

The log file encoding format can be UTF-8 or GBK.

UTF-8 encoding is a variable-length encoding mode and represents Unicode character sets. GBK, an acronym for Chinese Internal Code Extension Specification, is a Chinese character encoding standard that extends both the ASCII and GB2312 encoding systems.

Extraction Mode

Select an extraction policy based on your log type for LTS to parse logs in OBS files.

When parsing logs exceeding 1 MB in OBS, LTS applies the following rules based on the log type:

- If a single-line log exceeds 1 MB, the excess part of that line will be truncated and discarded.

- If a multi-line block in a multi-line log exceeds 1 MB, the excess part of that block will be truncated and discarded.

- Logs in ORC format are parsed as single lines. If a single line exceeds 1 MB, the entire line will be discarded.

- Logs in JSON format are parsed as single lines. If a single line exceeds 1 MB, the entire line will be discarded.

Extraction mode description:

- Single-line - full-text: collects the full text of single-line logs without structuring parsing. To perform structuring parsing on logs, complete OBS file import and then configure structuring parsing by referring to Setting Cloud Structuring Parsing.

- Multi-line - full-text: collects the full text of multi-line logs (such as stack logs) without structuring parsing. To perform structuring parsing on logs, complete OBS file import and then configure structuring parsing by referring to Setting Cloud Structuring Parsing.

- ORC: collects logs in ORC format.

If Custom Time is disabled, the time when logs are collected is used as the log time.

If Custom Time is enabled, you can specify a field to set the log time. Set the time field key name, value, and time format, and then click the verification icon to verify your settings. If the imported data is written to the Cluster Switch System (CSS), LTS does not support setting a log time earlier than two days ago for ORC logs. For details about the custom time format, see Custom Time.

- JSON: collects logs in JSON format.

If Custom Time is disabled, the time when logs are collected is used as the log time.

If Custom Time is enabled, you can specify a field to set the log time. Set the time field key name, value, and time format, and then click the verification icon to verify your settings. If the imported data is written to the CSS, LTS does not support setting a log time earlier than two days ago for JSON logs. For details about the custom time format, see Custom Time.

Set 1 to 4 JSON parsing layers. The value must be an integer and is 1 by default. This function expands the fields of a JSON log. For example, for raw log {"key1":{"key2":"value"}}, if you choose to parse it into 1 layer, the log will become {"key1":{"key2":"value"}}; if you choose to parse it into 2 layers, the log will become {"key1.key2":"value"}.
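The effect of the JSON parsing layers setting can be illustrated with a small flattening routine. This is only a sketch of the behavior described above, not the LTS implementation. The function name expand_layers is hypothetical, and how LTS treats arrays or empty nested objects is not stated in this document, so they are left untouched here.

```python
import json


def expand_layers(obj: dict, layers: int, prefix: str = "") -> dict:
    """Expand nested JSON objects into dotted keys, up to the given number of layers."""
    out = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        # Descend only while layers remain; deeper objects stay nested.
        if isinstance(value, dict) and value and layers > 1:
            out.update(expand_layers(value, layers - 1, name + "."))
        else:
            out[name] = value
    return out


if __name__ == "__main__":
    raw = json.loads('{"key1":{"key2":"value"}}')
    print(json.dumps(expand_layers(raw, 1)))
    print(json.dumps(expand_layers(raw, 2)))
```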

- After the setting is complete, click Preview in the lower right corner. The preview function displays only the first 10 lines of the first file that meets the conditions.

LTS allows you to preview files no larger than 10 MB. If a message indicating that the file is too large is displayed, you can temporarily modify the file regular expression filtering rule and specify a file smaller than 10 MB for preview. After the preview is complete, restore the filtering rule to the original configuration.

- Check the result preview in the lower part. If the result is correct, click Submit.

- The created ingestion configuration will be displayed. During closed beta testing, a maximum of 10 running ingestion tasks are supported.

- After a one-off task for importing data from OBS to LTS is complete, you are advised to wait for 5 minutes before toggling on Ingestion Configuration for it or creating a new task.

- Click its name to view its details.

- Click Modify in the Operation column to modify the ingestion configuration. Ingestion configurations whose import interval is One-off cannot be modified.

You can quickly navigate and modify settings by clicking Select Log Stream or Configurations in the navigation tree at the top of the page. After making your modifications, click Submit to save the changes. This operation is available for all log ingestion modes except CCE logs, ServiceStage containerized application logs, and self-built Kubernetes cluster logs.

- Click Configure Tag in the Operation column to add a tag.

- Click Copy in the Operation column to copy the ingestion configuration.

- Click Delete in the Operation column to delete the ingestion configuration.

Deleting an ingestion configuration may lead to log collection failures, potentially resulting in service exceptions related to user logs. In addition, the deleted ingestion configuration cannot be restored. Exercise caution when performing this operation.

- To stop log collection of an ingestion configuration, toggle off the switch in the Ingestion Configuration column to disable the configuration. To restart log collection, toggle on the switch in the Ingestion Configuration column.

Disabling an ingestion configuration may lead to log collection failures, potentially resulting in service exceptions related to user logs. Exercise caution when performing this operation.
