Developing a DLI Spark Job in DataArts Studio

Huawei Cloud DataArts Studio is a one-stop data governance platform. It integrates with DLI for data integration and development, helping enterprises manage and control their data.

This section describes how to develop a DLI Spark job using DataArts Factory of DataArts Studio.

Procedure

- Obtain a demo JAR file of the Spark job and associate it with DataArts Factory on the DataArts Studio console.

- On the DataArts Studio console, create a DataArts Factory job and submit the Spark job through the DLI Spark node.

Environment Preparations

- Prepare a DLI resource environment.

- Configure a DLI job bucket.

Before using DLI, configure a DLI job bucket. The bucket stores temporary data generated while DLI jobs run, such as job logs and results.

For details, see Configuring a DLI Job Bucket.

- Prepare a JAR file and upload it to an OBS bucket.

The Spark job code used in this example is the Spark examples JAR from the Maven Central repository (download address: https://repo.maven.apache.org/maven2/org/apache/spark/spark-examples_2.10/1.1.1/spark-examples_2.10-1.1.1.jar). The job calculates an approximate value of π.

After obtaining the JAR file of the Spark job code, upload the JAR file to the OBS bucket. In this example, the storage path is obs://dlfexample/spark-examples_2.10-1.1.1.jar.

- Create an elastic resource pool and create general-purpose queues within it.

An elastic resource pool provides the compute resources (CPU and memory) required to run DLI jobs and can scale as service demands change.

You can create general-purpose queues within an elastic resource pool to submit Spark jobs. A queue is the basic unit for allocating and using resources within the pool: the jobs and data processing tasks associated with a queue run on the compute resources assigned to that queue.

For details, see Creating an Elastic Resource Pool and Creating Queues Within It.
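The demo JAR estimates π by Monte Carlo sampling: SparkPi draws random points in the square from (-1, -1) to (1, 1) and multiplies the fraction that falls inside the unit circle by 4. The sketch below reproduces that idea in plain Python so you can see what the job computes. It is not the SparkPi source, it does not use Spark, and the function name `estimate_pi` is hypothetical.

```python
import random
from typing import Optional


def estimate_pi(num_samples: int, seed: Optional[int] = None) -> float:
    """Monte Carlo estimate of pi, following the same idea as SparkPi.

    Points are drawn uniformly from the square [-1, 1] x [-1, 1]; the
    fraction that lands inside the unit circle approximates pi / 4.
    """
    if num_samples <= 0:
        raise ValueError("num_samples must be positive")
    rng = random.Random(seed)  # seeded generator for reproducible runs
    inside = 0
    for _ in range(num_samples):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        if x * x + y * y < 1.0:  # point falls inside the unit circle
            inside += 1
    return 4.0 * inside / num_samples


if __name__ == "__main__":
    print(f"Pi is roughly {estimate_pi(100_000, seed=42)}")
```

In SparkPi, the loop body runs as a `map` over a parallelized range and the hit counts are combined with `reduce`; the single-process loop here only mirrors the arithmetic.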


- Prepare a DataArts Studio resource environment.

- Buy a DataArts Studio instance.

Buy a DataArts Studio instance before submitting a DLI job using DataArts Studio.

For details, see Buying a DataArts Studio Basic Package.

- Access the DataArts Studio instance's workspace.

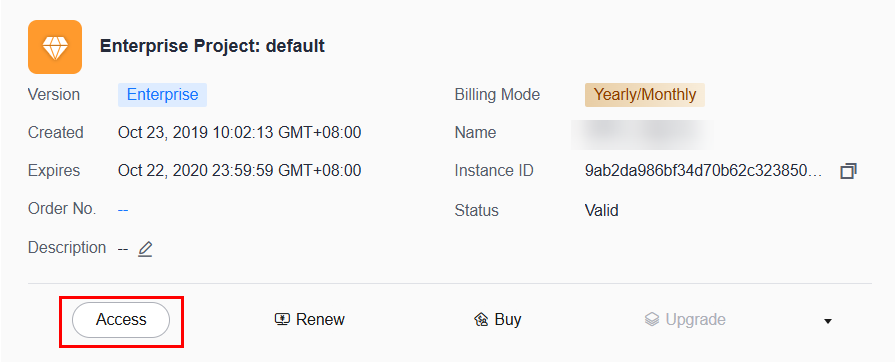

- After buying a DataArts Studio instance, click Access.

Figure 1 Accessing a DataArts Studio instance

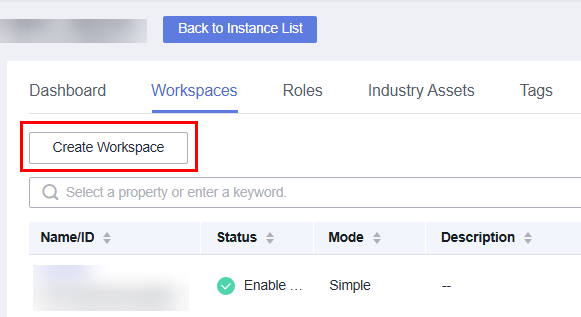

- Click the Workspaces tab to access the data development page.

By default, a workspace named default is created for the user who has purchased the DataArts Studio instance, and the user is assigned the administrator role. You can use the default workspace or create one.

For how to create a workspace, see Creating and Managing a Workspace.

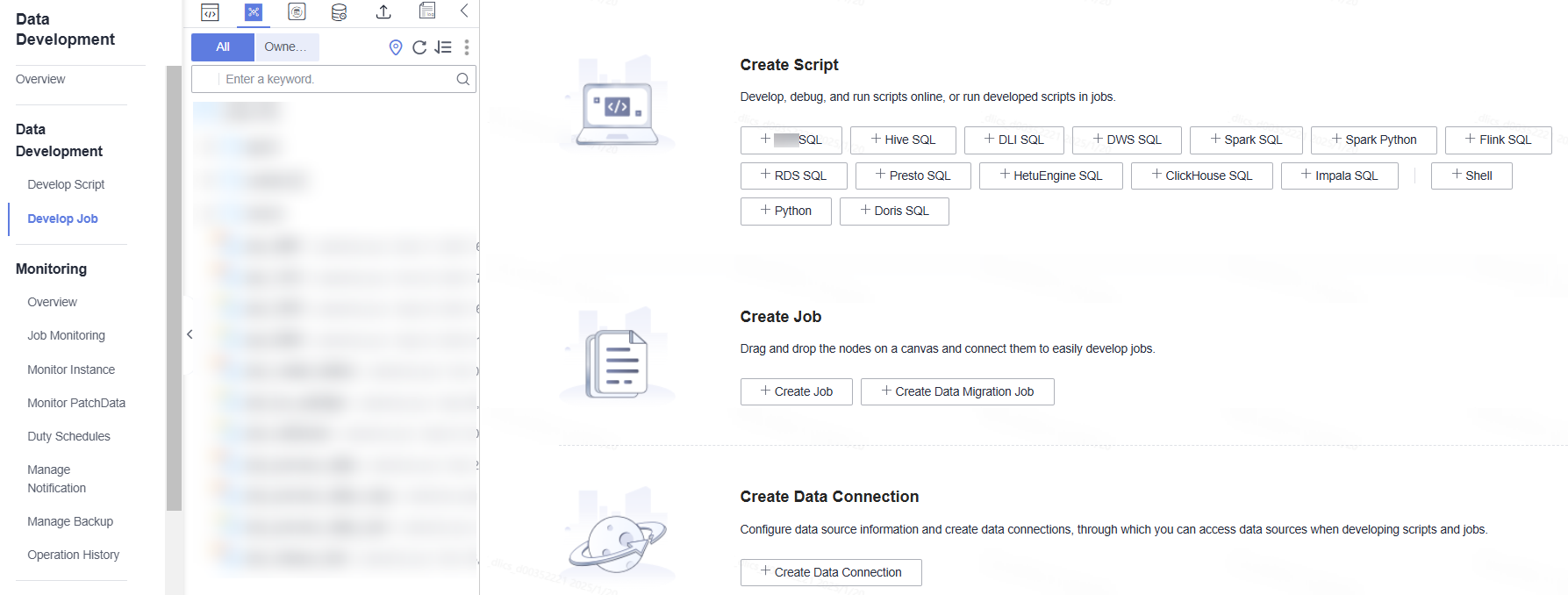

Figure 2 Accessing the DataArts Studio instance's workspace

Figure 3 Accessing DataArts Studio's data development page


Step 1: Obtain the Spark Job Code

- Obtain the JAR file of the Spark job code and upload it to the OBS bucket. In this example, the storage path is obs://dlfexample/spark-examples_2.10-1.1.1.jar.

- On the DataArts Studio console, locate a workspace and click DataArts Factory.

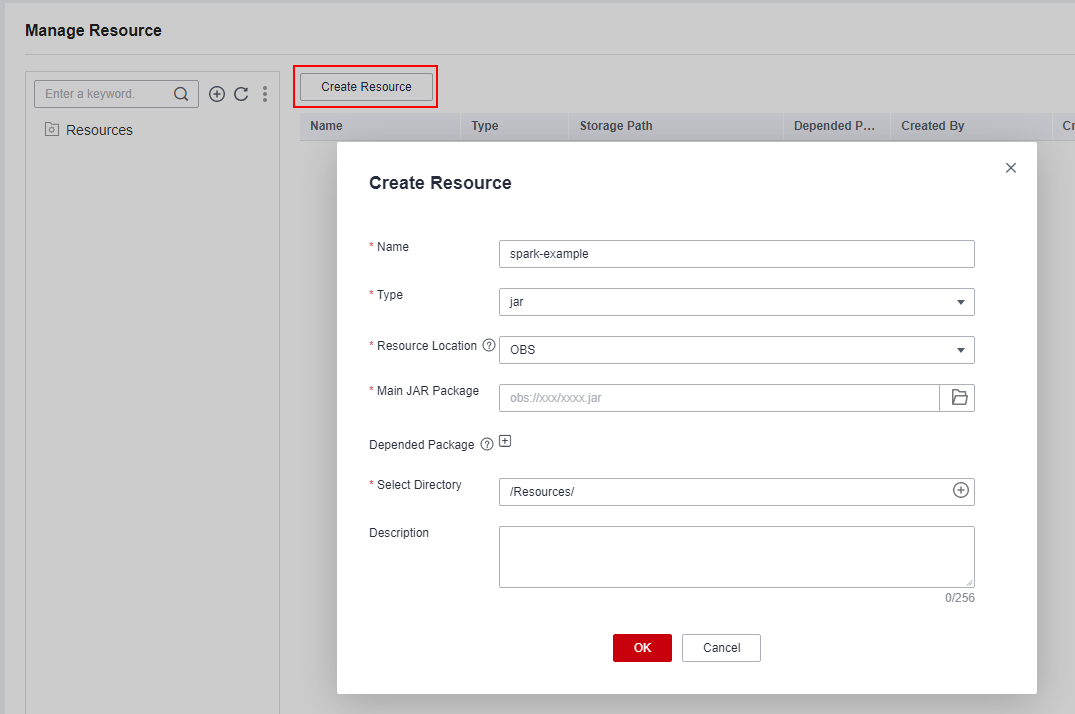

- In the navigation pane on the left, choose Configuration > Manage Resource.

- On the displayed page, click Create Resource, create a resource named spark-example on DataArts Factory, and associate it with the JAR file uploaded to OBS in the first step (obs://dlfexample/spark-examples_2.10-1.1.1.jar).

Figure 4 Creating a resource
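The two locations used for the JAR follow fixed patterns: the download URL uses the standard Maven repository layout, and the OBS path has the form obs://bucket/object-key. The hypothetical helpers `maven_central_url` and `split_obs_path` below are a minimal sketch for deriving the first and checking the second before you create the resource. They do not call OBS or DataArts Studio; the upload itself is still done through OBS.

```python
from typing import Tuple


def maven_central_url(group_id: str, artifact_id: str, version: str,
                      base: str = "https://repo.maven.apache.org/maven2") -> str:
    """Build a JAR download URL using the standard Maven repository layout:
    <base>/<groupId with dots as slashes>/<artifactId>/<version>/<artifactId>-<version>.jar
    """
    group_path = group_id.replace(".", "/")
    return f"{base}/{group_path}/{artifact_id}/{version}/{artifact_id}-{version}.jar"


def split_obs_path(path: str) -> Tuple[str, str]:
    """Split an obs://bucket/key path into (bucket name, object key)."""
    prefix = "obs://"
    if not path.startswith(prefix):
        raise ValueError(f"not an OBS path: {path!r}")
    bucket, sep, key = path[len(prefix):].partition("/")
    if not bucket or not sep or not key:
        raise ValueError(f"OBS path needs both a bucket and an object key: {path!r}")
    return bucket, key


if __name__ == "__main__":
    print(maven_central_url("org.apache.spark", "spark-examples_2.10", "1.1.1"))
    print(split_obs_path("obs://dlfexample/spark-examples_2.10-1.1.1.jar"))
    # ('dlfexample', 'spark-examples_2.10-1.1.1.jar')
```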

Step 2: Submit a Spark Job

You need to create a job in DataArts Factory and submit the Spark job using the DLI Spark node.

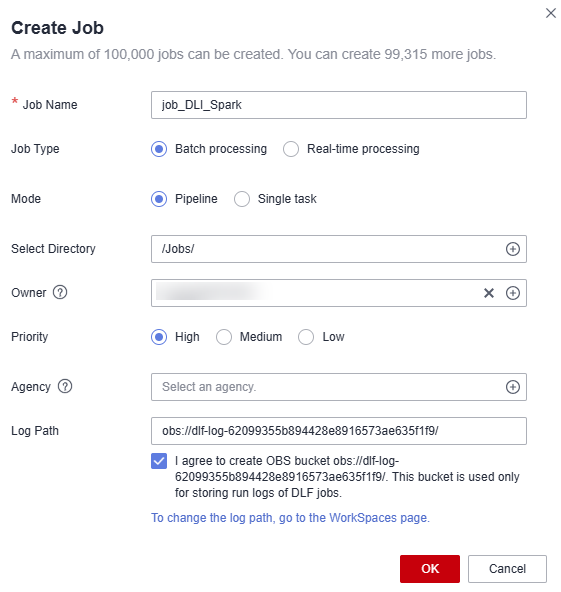

- In the navigation pane on the left, choose Data Development > Develop Job. In the displayed job list, locate the target directory, right-click it, and select Create Job. In the dialog box that appears, set Job Name to job_DLI_Spark and set other parameters as needed.

Figure 5 Creating a job

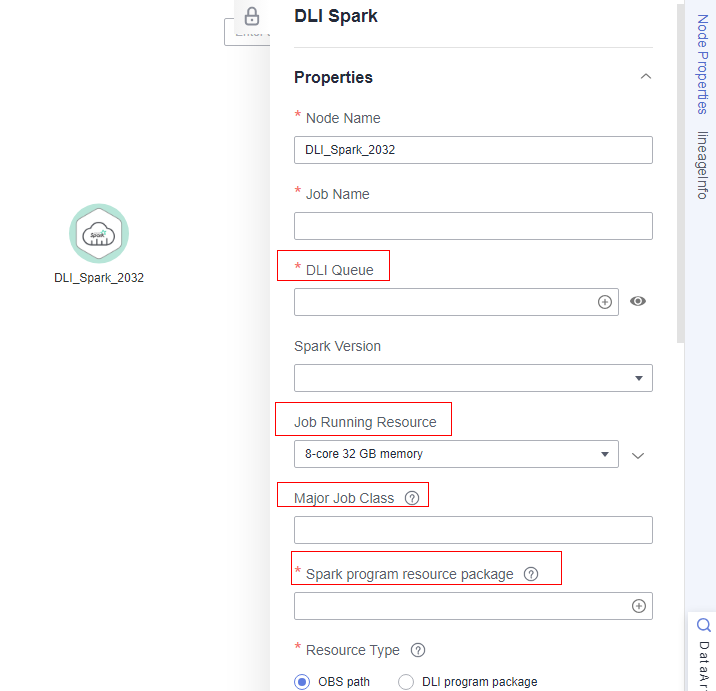

- Go to the job development page, drag the DLI Spark node to the canvas, and click the node to configure its properties.

Figure 6 Configuring node properties

Description of key properties:

- DLI Queue: Select the general-purpose DLI queue on which the Spark job will run.

- Job Running Resource: Maximum CPU and memory resources that can be used by a DLI Spark node.

- Major Job Class: main class of the Spark application, that is, the class whose main method is run. In this example, the major class is org.apache.spark.examples.SparkPi.

- Spark program resource package: Select the spark-example resource created in Step 1.
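Before filling in the node form, it can help to check the key property values in one place. The sketch below is a hypothetical local record (`DliSparkNodeSettings`, `problems`) that mirrors the properties described above; it is not a DataArts Studio or DLI API object, and the queue name used in the usage example is a placeholder.

```python
import re
from dataclasses import dataclass
from typing import List

# Fully qualified Java class name, e.g. org.apache.spark.examples.SparkPi
_JAVA_FQCN = re.compile(r"[A-Za-z_$][A-Za-z0-9_$]*(?:\.[A-Za-z_$][A-Za-z0-9_$]*)*")


@dataclass
class DliSparkNodeSettings:
    """Hypothetical mirror of the key DLI Spark node properties."""
    dli_queue: str       # "DLI Queue": a general-purpose queue name
    main_class: str      # "Major Job Class"
    resource_name: str   # "Spark program resource package"

    def problems(self) -> List[str]:
        """Return a list of human-readable issues; empty means the values look usable."""
        issues = []
        if not self.dli_queue.strip():
            issues.append("DLI Queue is empty")
        if not _JAVA_FQCN.fullmatch(self.main_class):
            issues.append(f"Major Job Class is not a valid class name: {self.main_class!r}")
        if not self.resource_name.strip():
            issues.append("Spark program resource package is empty")
        return issues


if __name__ == "__main__":
    settings = DliSparkNodeSettings(
        dli_queue="my_general_queue",  # hypothetical queue name
        main_class="org.apache.spark.examples.SparkPi",
        resource_name="spark-example",
    )
    print(settings.problems())  # []
```

A common mistake this catches is entering the class as a file path (org/apache/spark/examples/SparkPi) instead of a dotted class name.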

- Click

to test the job.

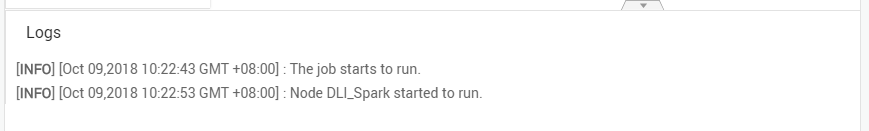

Figure 7 Job logs (for reference only)

to test the job.

Figure 7 Job logs (for reference only)

- If there are no errors in the logs, save and submit the job.
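The log review in the last step is done in the console. If you copy the log text out, a minimal sketch like the hypothetical `check_spark_pi_log` below can flag suspicious lines and extract the result line that SparkPi prints ("Pi is roughly ..."). The error patterns are an assumed heuristic, not a documented DLI log format; adjust them to the logs you actually see.

```python
import re
from typing import List, Optional, Tuple

# SparkPi prints a result line of the form "Pi is roughly <value>".
_PI_LINE = re.compile(r"Pi is roughly\s+([0-9]+(?:\.[0-9]+)?)")
# Assumed heuristic for error lines.
_ERROR_LINE = re.compile(r"\bERROR\b|Exception")


def check_spark_pi_log(log_text: str) -> Tuple[List[str], Optional[float]]:
    """Return (lines that look like errors, pi estimate or None if not found)."""
    errors = [line for line in log_text.splitlines() if _ERROR_LINE.search(line)]
    match = _PI_LINE.search(log_text)
    pi_value = float(match.group(1)) if match else None
    return errors, pi_value
```

An empty error list together with a value near 3.14 suggests the test run completed as expected; otherwise inspect the flagged lines before saving and submitting the job.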
