Configuring a Range of Listening Ports for LoadBalancer Services

When creating a LoadBalancer Service, you can specify a port range for the ELB listener. This allows the listener to receive requests on ports within the specified range and forward them to the target backend servers.

This feature depends on ELB. Before using it, check whether ELB supports full-port listening and forwarding for layer-4 protocols in the current region.

Prerequisites

- A Kubernetes cluster is available and the cluster version meets the following requirements:

  - v1.23: v1.23.18-r0 or later

  - v1.25: v1.25.13-r0 or later

  - v1.27: v1.27.10-r0 or later

  - v1.28: v1.28.8-r0 or later

  - v1.29: v1.29.4-r0 or later

  - v1.30: v1.30.1-r0 or later

- To create a Service using commands, first use kubectl to access the cluster. For details, see Accessing a Cluster Using kubectl.

Precautions

You can configure a range of listening ports only when a dedicated load balancer is used and the TCP, UDP, or TLS protocol is selected.

Creating a LoadBalancer Service and Configuring a Range of Listening Ports

- Log in to the CCE console and click the cluster name to access the cluster console.

- Choose Services & Ingresses in the navigation pane, click the Services tab, and click Create Service in the upper right corner.

- Configure Service parameters. In this example, only mandatory parameters are listed. For details about how to configure other parameters, see Using the CCE Console.

  - Service Name: Specify a Service name, which can be the same as the workload name.

  - Service Type: Select LoadBalancer.

  - Selector: Add a label and click Confirm. The Service will use this label to select pods. You can also click Reference Workload Label to use the label of an existing workload. In the dialog box that is displayed, select a workload and click OK.

  - Load Balancer: Select a load balancer type and creation mode.

    - Only dedicated load balancers support listening port ranges.

    - This section uses an existing load balancer as an example. For details about the parameters for automatically creating a load balancer, see Table 1.

  - Port

    - Protocol: Select TCP or UDP.

    - Container Port: the port that the workload listens on. For example, Nginx uses port 80 by default.

    - Service Port: the port used by the Service. In this example, select Listen on ports and configure a port range. All requests received on ports within this range are forwarded to the backend server. Port numbers in a range must be between 1 and 65535. You can add a maximum of 10 non-overlapping ranges of listening ports.

    - Frontend Protocol: To use TLS with a dedicated load balancer, the load balancer type must be Network (TCP/UDP/TLS).

Figure 1 Configuring listening port ranges

- Click OK.
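The port range limits stated above (ports between 1 and 65535, at most 10 ranges, no overlaps) can be checked before you enter them. The following is a minimal Python sketch, not part of CCE or ELB; the function name `validate_port_ranges` is hypothetical, and the rule that a range's start must not exceed its end is an assumption rather than something this page states.

```python
from typing import List, Tuple

MIN_PORT, MAX_PORT = 1, 65535   # allowed port numbers, as stated in this section
MAX_RANGES = 10                 # maximum number of ranges, as stated in this section


def validate_port_ranges(ranges: List[Tuple[int, int]]) -> List[str]:
    """Return a list of problems; an empty list means the ranges pass these checks."""
    problems = []
    if len(ranges) > MAX_RANGES:
        problems.append(f"{len(ranges)} ranges given, at most {MAX_RANGES} allowed")
    for start, end in ranges:
        if not (MIN_PORT <= start <= MAX_PORT and MIN_PORT <= end <= MAX_PORT):
            problems.append(f"{start}-{end} is outside {MIN_PORT}-{MAX_PORT}")
        if start > end:  # assumption: a range is written as start,end with start <= end
            problems.append(f"{start}-{end} starts after it ends")
    # After sorting by start port, any overlap shows up between neighbours,
    # so at least one overlap is reported whenever one exists.
    ordered = sorted(ranges)
    for (s1, e1), (s2, e2) in zip(ordered, ordered[1:]):
        if s2 <= e1:
            problems.append(f"{s1}-{e1} overlaps {s2}-{e2}")
    return problems


print(validate_port_ranges([(100, 200), (300, 400)]))  # prints []
print(validate_port_ranges([(100, 200), (150, 400)]))  # prints ['100-200 overlaps 150-400']
```

The function returns messages instead of raising an exception so that all problems in a set of ranges can be listed at once.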

- Use kubectl to access the cluster. For details, see Accessing a Cluster Using kubectl.

- Create a YAML file named service-test.yaml. The file name can be customized.

vi service-test.yaml

An example YAML file of a Service associated with an existing load balancer is as follows:

    apiVersion: v1
    kind: Service
    metadata:
      name: service-test
      labels:
        app: test
        version: v1
      namespace: default
      annotations:
        kubernetes.io/elb.class: performance    # A dedicated load balancer is required.
        kubernetes.io/elb.id: <your_elb_id>    # Replace it with the ID of your existing load balancer.
        kubernetes.io/elb.port-ranges: '{"cce-service-0":["100,200", "300,400"], "cce-service-1":["500,600", "700,800"]}'    # Configure ranges of listening ports.
    spec:
      selector:
        app: test
        version: v1
      externalTrafficPolicy: Cluster
      ports:
        - name: cce-service-0
          targetPort: 80    # Replace it with your container port.
          nodePort: 0
          port: 100    # If a port range is configured for listening, this parameter becomes invalid. However, it still needs to be assigned a unique value. By default, it is set to the starting port number of the range.
          protocol: TCP
        - name: cce-service-1
          targetPort: 81    # Replace it with your container port.
          nodePort: 0
          port: 500    # If a port range is configured for listening, this parameter becomes invalid. However, it still needs to be assigned a unique value.
          protocol: TCP
      type: LoadBalancer
      loadBalancerIP: <your_elb_ip>    # Replace it with the private IP address of your existing load balancer.

Table 1 Parameters for listening to ports within a range

| Parameter | Mandatory | Type | Description |
| --- | --- | --- | --- |
| kubernetes.io/elb.port-ranges | Yes | String | If a dedicated load balancer is used and the TCP, UDP, or TLS protocol is selected, you can create a listener that listens to ports within a certain range from 1 to 65535. You can add a maximum of 10 port ranges that do not overlap for each listener. |

The parameter value is in the following format, where ports_name and port must be unique:

    '{"<ports_name_1>":["<port_1>,<port_2>","<port_3>,<port_4>"], "<ports_name_2>":["<port_5>,<port_6>","<port_7>,<port_8>"]}'

For example, if the port names are cce-service-0 and cce-service-1, and the listener listens to ports 100 to 200 and 300 to 400 for the first name, and 500 to 600 and 700 to 800 for the second:

    '{"cce-service-0":["100,200", "300,400"], "cce-service-1":["500,600", "700,800"]}'

- Create the Service.

kubectl create -f service-test.yaml

If information similar to the following is displayed, the Service has been created:

service/service-test created
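Writing the kubernetes.io/elb.port-ranges value by hand is error-prone because it is a JSON object stored in a string. As a hedged illustration, the Python sketch below builds that string from a dictionary keyed by Service port name. The helper name `build_port_ranges_annotation` is hypothetical, and the compact output (no spaces after separators) is an assumption that any JSON-equivalent form of the documented value is accepted.

```python
import json
from typing import Dict, List, Tuple


def build_port_ranges_annotation(ranges_by_port_name: Dict[str, List[Tuple[int, int]]]) -> str:
    """Serialize {port name: [(start, end), ...]} into the annotation's JSON string."""
    value = {
        name: [f"{start},{end}" for start, end in ranges]  # each range becomes "start,end"
        for name, ranges in ranges_by_port_name.items()
    }
    # Compact separators keep the output close to the format shown in Table 1.
    return json.dumps(value, separators=(",", ":"))


annotation = build_port_ranges_annotation({
    "cce-service-0": [(100, 200), (300, 400)],
    "cce-service-1": [(500, 600), (700, 800)],
})
print(annotation)
# prints {"cce-service-0":["100,200","300,400"],"cce-service-1":["500,600","700,800"]}
```

When pasting the result into the YAML file, wrap it in single quotes as in the example above so that YAML treats it as one string.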

Verifying Configuration

- Log in to the CCE console and click the cluster name to access the cluster console.

- In the navigation pane, choose Services & Ingresses. Locate the row that contains the created Service and click the load balancer name to go to the ELB console.

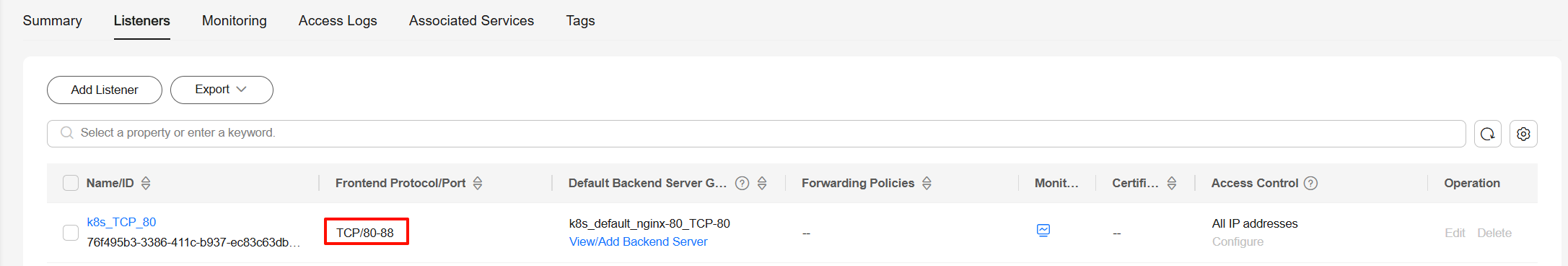

- On the Listeners tab page, check that the ports of the new listener match the port ranges you configured.
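To know what to look for on the Listeners tab, it can help to expand the annotation value back into a readable list of ranges per port name. The Python sketch below does this for the example value used on this page. The function name `expected_listener_ranges` and the `start-end` display format are illustrative assumptions, and the ELB console may present listener ports differently.

```python
import json
from typing import Dict, List, Tuple


def expected_listener_ranges(annotation_value: str) -> Dict[str, List[Tuple[int, int]]]:
    """Parse the port-ranges annotation into {port name: [(start, end), ...]}."""
    parsed = json.loads(annotation_value)
    result = {}
    for name, entries in parsed.items():
        ranges = []
        for entry in entries:
            start, end = (int(part) for part in entry.split(","))  # "100,200" -> 100, 200
            ranges.append((start, end))
        result[name] = ranges
    return result


example = '{"cce-service-0":["100,200", "300,400"], "cce-service-1":["500,600", "700,800"]}'
for name, ranges in expected_listener_ranges(example).items():
    print(name, ", ".join(f"{start}-{end}" for start, end in ranges))
# prints:
# cce-service-0 100-200, 300-400
# cce-service-1 500-600, 700-800
```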
