Improving UCS Dual-Cluster Reliability

Application Scenarios

Large enterprises require multi-cluster multi-active solutions to narrow down the fault domains to reduce logical errors, ensure compatibility with the original ecosystem, and minimize the adaptation workload in service release and O&M.

UCS provides dual-cluster multi-active DR to ensure that service availability is not affected when any AZ or cluster is faulty.

Constraints

- You must have at least two available CCE Turbo clusters of v1.21 or later (ucs01 and ucs02 in the following example), with each deployed in a separate AZ.

- UCS can be deployed only in the primary region of the primary/standby region environment, and does not support cross-region DR.

Solution Architecture

- UCS control plane:

- You can deploy the UCS control plane in three AZs using cluster federation. For details, see Enabling Cluster Federation.

- The UCS control plane manages the ucs01 and ucs02 clusters, which are added to a fleet. For details, see Managing Fleets.

- Two CCE Turbo clusters:

- Deploy the master nodes in three AZs. For details about how to create a cluster, see Buying a CCE Turbo Standard/Turbo Cluster.

- Create node pools in AZ 1 and AZ 2 for worker nodes. For details about how to create a node pool, see Creating a Node Pool.

- Install the CCE Cluster Autoscaler, CCE Advanced HPA, CoreDNS, and other add-ons in the clusters. For details, see Add-on Overview.

- Load balancers provided by ELB:

- Deploy load balancers in multiple AZs. For details, see Single-AZ Load Balancer Check.

- ELB distributes the traffic to multiple pods based on the configured routing policies. In addition, when the health check is enabled, ELB distributes the traffic only to pods that are running normally. For details, see Load Balancing Algorithms.

- Application deployment: Connect to the UCS control plane through the CI/CD pipeline, create a Deployment, an HPA policy, and a PropagationPolicy, and release the Deployment using MCI. For details, see Using kubectl for Cluster HA Deployment.

Fault Tolerance of Containers

The fault tolerance of containers allows you to configure health checks and automatic restarts, which ensure high availability and reliability of containerized applications. Application deployment must comply with the following rules.

|

Item |

Rules |

Description |

|---|---|---|

|

Stateless applications |

Applications must be stateless and idempotent. |

Statelessness means there are multiple service modules (processes) that treat each request independently. If an application is running in multiple pods, the response from each pod is the same. These pods do not store service context, such as sessions and logins. They process each request based on the carried data. Idempotence means that using the same parameter to call the same API multiple times will produce the same result as calling the API once. |

|

Application replicas |

The number of replicas for each workload must meet the service capacity planning and availability requirements.

|

Application replicas work with the multi-cluster solution to meet the high availability requirements of applications on the live network. This makes it possible for isolating fault domains by cluster or AZ. |

|

Application health checks |

Each application must be configured with a:

There must be an appropriate check interval and timeout.

|

If a container becomes faulty or cannot work normally, the system automatically restarts the container to improve application availability and reliability. |

|

Auto scaling |

Auto scaling must be supported, and HPA parameters must be configured as follows:

|

Resources can be used on demand, which minimizes resource waste. When traffic increases sharply or there is a cluster fault, resources can be automatically scaled out to ensure that services are not affected. |

|

Graceful shutdown |

Applications must support graceful shutdown. |

Graceful shutdown ensures that ongoing service operations are not affected after a stop instruction is sent to an application process. The application stops receiving access requests after receiving the stop instruction and shuts down after handling the received request and sending a success response. |

|

ELB health checks |

ELB can distribute traffic to the backend pods that work normally. An application must provide the health check interface, and the health check must be configured on ELB. |

If a pod or node is abnormal, or the AZ or cluster becomes faulty, traffic is only distributed to the healthy one to ensure successful service access. |

|

Container images |

The size of each container image cannot exceed 1 GB. The container image tag must use a specific version number. Do not use the latest tag. |

Small-sized images facilitate distribution and quick startup. Only specific version numbers can be used for image tag control. |

|

Resource quotas |

A resource quota must be twice the number of requested resources. |

This prevents application upgrade and scale-out failures due to insufficient resource quota. |

Fault Tolerance of Nodes

When a node becomes faulty, pods can be rescheduled to another healthy node automatically.

|

Item |

Rules |

Description |

|

Automatic eviction of unhealthy nodes |

If a node becomes unavailable, pods running on this node are evicted automatically after the tolerance time elapses. The default value is 300s. The configuration takes effect for all pods by default. You can configure different tolerance time for pods. |

Unless otherwise specified, keep the default configuration. If the specified tolerance time is too short, pods may be frequently migrated in scenarios like a network jitter. If the specified tolerance time is too long, services may be interrupted during this period after the node is faulty. |

|

Node auto scaling |

Scaling nodes means adding more resources. Cluster AutoScaling (CA) checks all pending pods and selects the most appropriate node pool for node scale-out based on the configured scaling policy. |

If cluster resources are insufficient, CA needs to scale out the nodes. If HPA reduces workloads, there are a large number of idle resources, and CA needs to scale in the nodes to release resources and avoid resource waste. The limit to the number of nodes that can be scaled by CA must be set based on the resource requirements during peak hours or the failure of a single cluster to ensure that there are sufficient nodes to handle traffic surges. |

(Optional) Using kubectl to Rectify Cluster or AZ Faults

UCS provides manual traffic switchover when a key system component of a cluster becomes faulty, a cluster is unavailable due to human factors such as improper cluster upgrade policies, incorrect upgrade configurations, and incorrect operations, or there is a fault in an AZ. You can create a Remedy object to redirect the MCI traffic from the faulty cluster.

To rectify faults using kubectl, take the following steps:

- Use kubectl to connect to the federation. For details, see Using kubectl to Connect to a Federation.

- Create and edit the remedy.yaml file on the executor. The file content is as follows. For details about the parameter definition, see Table 1.

vi remedy.yaml

The following is an example YAML file for creating a Remedy object. If the trigger condition is left empty, traffic switchover is triggered unconditionally. The cluster federation controller will immediately redirect the traffic from ucs01. After the cluster fault is rectified and the Remedy object is deleted, the traffic is routed back to ucs01. This ensures the fault of a single cluster does not affect service availability.apiVersion: remedy.karmada.io/v1alpha1 kind: Remedy metadata: name: foo spec: clusterAffinity: clusterNames: - ucs01 actions: - TrafficControlTable 1 Remedy parameters Parameter

Description

spec.clusterAffinity.clusterNames

List of clusters controlled by the policy. The specified action is performed only for clusters in the list. If this parameter is left blank, no action is performed.

spec.decisionMatches

Trigger condition list. When a cluster in the list meets any trigger conditions, the specified action is performed. If this parameter is left blank, the specified action is triggered unconditionally.

conditionType

Type of a trigger condition. Only ServiceDomainNameResolutionReady (domain name resolution of CoreDNS reported by CPD) is supported.

operator

Judgment logic. Only Equal (equal to) and NotEqual (not equal to) are supported.

conditionStatus

Status of a trigger condition.

actions

Action to be performed by the policy. Currently, only TrafficControl (traffic control) is supported.

- After the cluster fault is rectified, delete the Remedy object.

kubectl delete remedy foo

- Check that the traffic is routed back to ucs01.

Using kubectl for Cluster HA Deployment

Prerequisites

- You have used kubectl to connect to the federation. For details, see Using kubectl to Connect to a Federation.

- You have created a dedicated load balancer and bound an EIP to it. For details, see Creating a Dedicated Load Balancer.

The following is an example YAML file. Modify the parameters based on site requirements.

Procedure:

To implement cluster HA deployment, you need to specify resource delivery rules. The file content is as follows:

apiVersion: policy.karmada.io/v1alpha1

kind: ClusterPropagationPolicy

metadata:

name: karmada-global-policy # Policy name

spec:

resourceSelectors: # Resources associated with the distribution policy. Multiple resource objects can be distributed at the same time.

- apiVersion: apps/v1 # group/version

kind: Deployment # Resource type

- apiVersion: apps/v1

kind: DaemonSet

- apiVersion: v1

kind: Service

- apiVersion: v1

kind: Secret

- apiVersion: v1

kind: ConfigMap

- apiVersion: v1

kind: ResourceQuota

- apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

- apiVersion: autoscaling/v2beta2

kind: HorizontalPodAutoscaler

- apiVersion: autoscaling.cce.io/v2alpha1

kind: CronHorizontalPodAutoscaler

priority: 0 # The larger the value, the higher the priority.

conflictResolution: Overwrite # conflictResolution declares how potential conflicts should be handled when a resource that is being propagated already exists in the target cluster. The default value is Abort, which means propagation is stopped to avoid unexpected overwrite. Overwrite indicates forcible overwriting.

placement: # Placement rule that controls the clusters where the associated resources are distributed.

clusterAffinity: # Cluster affinity

clusterNames: # Cluster name used for cluster selection

- ucs01 # Cluster name. Change it to the actual cluster name in the environment.

- ucs02 # Cluster name. Change it to the actual cluster name in the environment.

replicaScheduling: # Instance scheduling policy

replicaSchedulingType: Divided # Instance splitting

replicaDivisionPreference: Weighted # Splits instances by cluster weight.

weightPreference: # Weight option

staticWeightList: # Static weights. The weight of ucs01 is 1 (about half of the replicas are allocated to this cluster), and the weight of ucs02 is 1 (about half of the replicas are allocated to this cluster).

- targetCluster: # Target cluster

clusterNames:

- ucs01 # Cluster name. Change it to the actual cluster name in the environment.

weight: 1 # The cluster weight is 1.

- targetCluster: # Target cluster

clusterNames:

- ucs02 # Cluster name. Change it to the actual cluster name in the environment.

weight: 1 # The cluster weight is 1.

clusterTolerations: # Cluster tolerance. When any master nodes in a cluster are unhealthy or unreachable, pods are not evicted from the nodes.

- key: cluster.karmada.io/not-ready

operator: Exists

effect: NoExecute

- key: cluster.karmada.io/unreachable

operator: Exists

effect: NoExecute

(Optional) If an HPA is created, you need to split the minimum number of instances in the HPA. The following is an example YAML file.

In the YAML file, clusterNum indicates the number of clusters. In this example, there are two clusters. You can set this parameter based on site requirements.

apiVersion: config.karmada.io/v1alpha1

kind: ResourceInterpreterCustomization

metadata:

name: hpa-min-replica-split-ric

spec:

customizations:

replicaResource:

luaScript: |

function GetReplicas(obj)

clusterNum = 2

replica = obj.spec.minReplicas

if ( obj.spec.minReplicas == 1 )

then

replica = clusterNum

end

return replica, nil

end

replicaRevision:

luaScript: |

function ReviseReplica(obj, desiredReplica)

obj.spec.minReplicas = desiredReplica

return obj

end

target:

apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

Create a ConfigMap. The following is an example YAML file.

apiVersion: v1 kind: ConfigMap metadata: name: demo-configmap namespace: default #Namespace. The default value is default. data: foo: bar

Create a Deployment. The following is an example YAML file.

apiVersion: apps/v1

kind: Deployment

metadata:

name: demo

namespace: default #Namespace. The default value is default.

labels:

app: demo

spec:

replicas: 2

strategy:

type: RollingUpdate

rollingUpdate:

maxSurge: 25%

maxUnavailable: 25%

selector:

matchLabels:

app: demo

template:

metadata:

labels:

app: demo

spec:

affinity:

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: app

operator: In

values:

- demo

topologyKey: kubernetes.io/hostname

containers:

- env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.namespace

- name: POD_IP

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: status.podIP

envFrom:

- configMapRef:

name: demo-configmap

name: demo

- image: nginx # If you select an image from Open Source Images, enter the image name. If you select an image from My Images, obtain the image path from SWR.

command:

- /bin/bash

args:

- '-c'

- 'sed -i "s/nginx/podname: $POD_NAME podIP: $POD_IP/g" /usr/share/nginx/html/index.html;nginx "-g" "daemon off;"'

imagePullPolicy: IfNotPresent

resources:

requests:

cpu: 100m

memory: 100Mi

limits:

cpu: 100m

memory: 100Mi

Create an HPA. The following is an example YAML file.

apiVersion: autoscaling/v2beta2

kind: HorizontalPodAutoscaler

metadata:

name: demo-hpa

namespace: default #Namespace. The default value is default.

spec:

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: demo

minReplicas: 2

maxReplicas: 4

metrics:

- type: Resource

resource:

name: cpu

target:

type: Utilization

averageUtilization: 30

behavior:

scaleDown:

policies:

- type: Pods

value: 2

periodSeconds: 100

- type: Percent

value: 10

periodSeconds: 100

selectPolicy: Min

stabilizationWindowSeconds: 300

scaleUp:

policies:

- type: Pods

value: 2

periodSeconds: 15

- type: Percent

value: 20

periodSeconds: 15

selectPolicy: Max

stabilizationWindowSeconds: 0

Create a Service. The following is an example YAML file.

apiVersion: v1

kind: Service

metadata:

name: demo-svc

namespace: default #Namespace. The default value is default.

spec:

type: ClusterIP

selector:

app: demo

sessionAffinity: None

ports:

- name: http

protocol: TCP

port: 8080

targetPort: 8080

Create an MCI. The following is an example YAML file.

apiVersion: networking.karmada.io/v1alpha1

kind: MultiClusterIngress

metadata:

name: demo-mci # MCI name

namespace: default #Namespace. The default value is default.

annotations:

karmada.io/elb.id: xxx # TODO: Specify the load balancer ID.

karmada.io/elb.projectid: xxx #TODO: Specify the project ID of the load balancer.

karmada.io/elb.port: "8080" #TODO: Specify the load balancer listening port.

karmada.io/elb.health-check-flag: "on"

karmada.io/elb.health-check-option.demo-svc: '{"protocol":"TCP"}'

spec:

ingressClassName: public-elb # Load balancer type, which is a fixed value.

rules:

- host: demo.localdev.me # Exposed domain name. TODO: Change it to the actual address.

http:

paths:

- backend:

service:

name: demo-svc # Service name

port:

number: 8080 # Service port

path: /

pathType: Prefix # Prefix matching

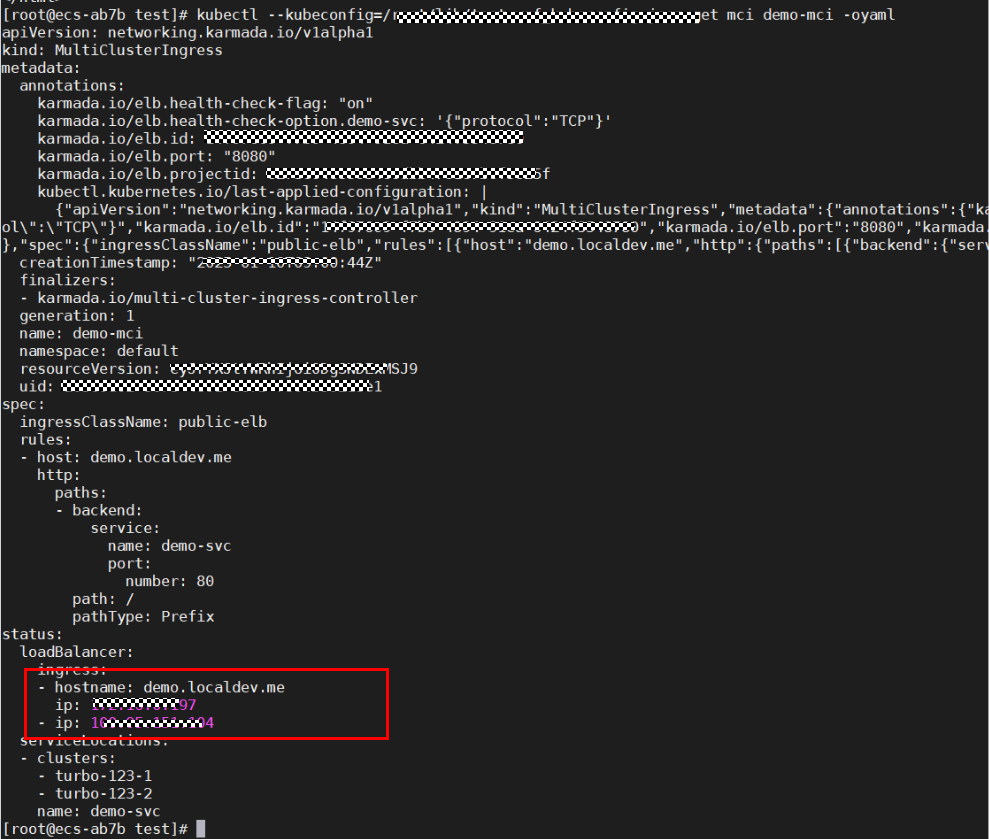

Verifying Dual-Cluster HA

Run the following command on the executor to verify dual-cluster HA.

- Obtain the host name and the EIP and private IP address of the load balancer.

kubectl get mci demo-mci -oyaml

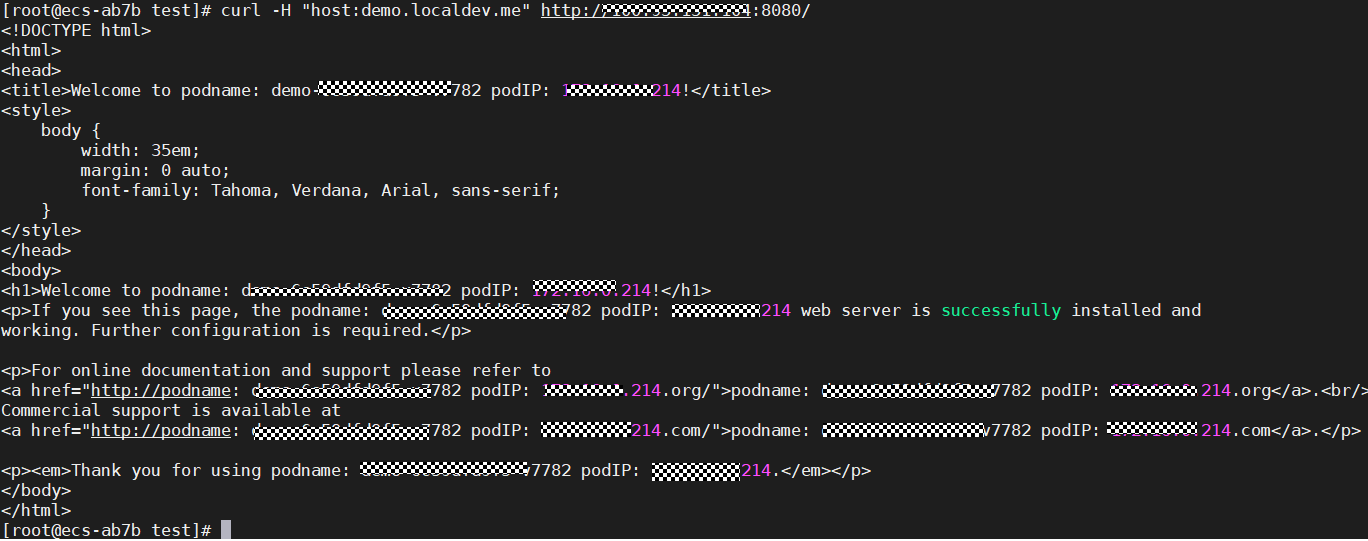

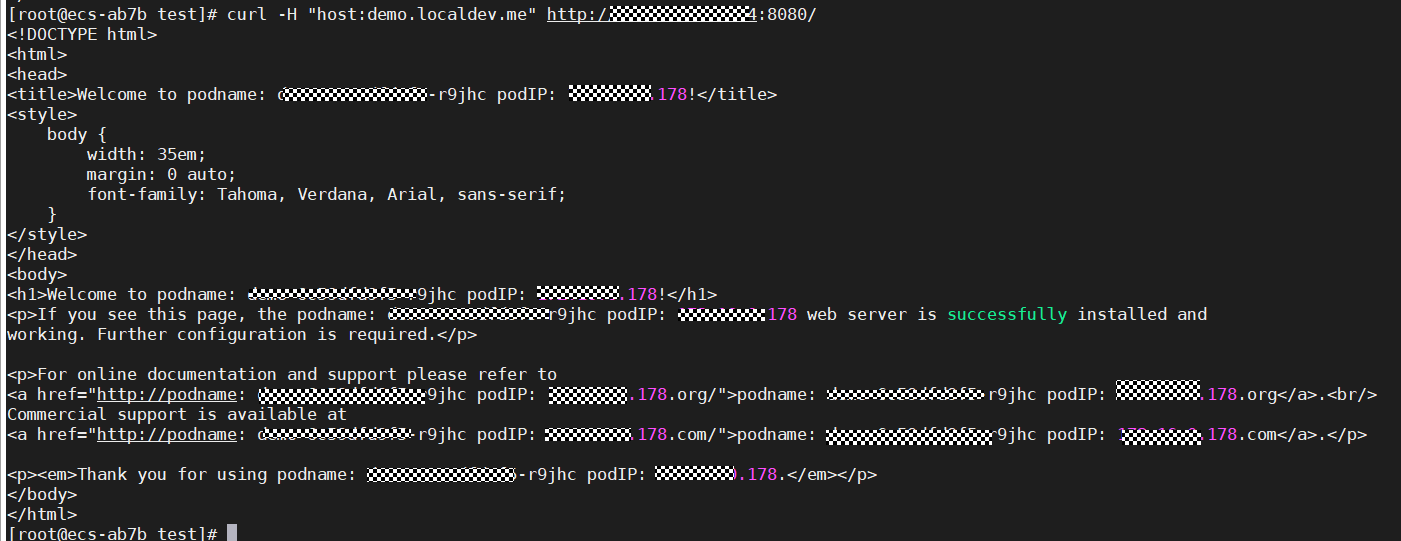

- If different pod names and IDs are displayed in the command output, the access to both clusters is successful.

curl -H "host:demo.localdev.me" http://[ELBIP]:8080/

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot