Verifying the Consistency of Data Migrated from Delta Lake (with Metadata) to MRS Delta Lake

This section describes how to use MgC to verify the consistency of data migrated from self-built Delta Lake clusters to Huawei Cloud MRS Delta Lake clusters.

For Delta Lake clusters that have metadata storage, the metadata can be collected through data lake metadata collection tasks.

Preparations

Install the MgC Agent (formerly Edge), the tool used for data verification, in the source intranet environment and log in to it. For details, see Installing the MgC Agent on Linux.

Procedure

- Sign in to the MgC console.

- In the navigation pane on the left, choose Settings.

- Under Migration Projects, click Create Project.

Figure 1 Creating a project

- Set Project Type to Big data migration, enter a project name, and click Create.

Figure 2 Creating a big data migration project

- Connect the MgC Agent to MgC. For more information, see Connecting the MgC Agent to MgC.

- After the connection is successful, add the username/password pairs for accessing the source Delta Lake executor and the target MRS Delta Lake executor to the MgC Agent. For more information, see Adding Resource Credentials.

- In the navigation pane, choose Migrate > Big Data Verification. Then, under Project in the navigation pane, select the project created in step 4.

- If you are performing a big data verification with MgC for the first time, select your MgC Agent to enable this feature. Click Select MgC Agent. In the displayed dialog box, select the MgC Agent you connected to MgC from the drop-down list.

Ensure that the selected MgC Agent remains Online and Enabled until your verification is complete.

- In the Features area, click Migration Preparations.

- Choose Connection Management and click Create Connection.

Figure 3 Creating a connection

- Select Delta Lake (with metadata) and click Next.

- Set the connection parameters based on Table 1 and click Test.

Table 1 Parameters for creating a connection to Delta Lake (with metadata)

| Parameter | Configuration |
| --- | --- |
| Connection To | Select Source. |
| Connection Name | The default name is Delta-Lake-with-metadata- followed by four random characters (letters and digits). You can also enter a custom name. |
| MgC Agent | Select the MgC Agent connected to MgC in step 5. |
| Executor Credential | Select the source Delta Lake executor credential added to the MgC Agent in step 6. |
| Executor IP Address | Enter the IP address for connecting to the executor. |
| Executor Port | Enter the port for connecting to the executor. The default port is 22. |
| Spark Client Directory | Enter the absolute path of the bin directory on the Spark client. |
| Environment Variable Address | Enter the absolute path of the environment variable file, for example, /opt/bigdata/client/bigdata_env. If this field is specified, the file is automatically sourced before commands are executed. |
| SQL File Location | Enter a directory for storing the SQL files generated for consistency verification. You must have read and write permissions for the directory. NOTICE: After the migration is complete, manually delete the folders generated in this directory to release storage space. |
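The executor settings in Table 1 can be read as the pieces of a remote command. The following Python sketch shows one plausible way they fit together: an SSH invocation to the executor IP address and port, and a command that sources the environment variable file (when one is set) before running the spark-sql launcher from the Spark client bin directory against a generated SQL file. This is an illustration under stated assumptions, not MgC's documented behavior: the function names, the use of `spark-sql -f`, the bash `source` builtin, the user name, and the example paths (other than bigdata_env) are hypothetical.

```python
import posixpath
import shlex
from typing import List, Optional


def build_executor_command(spark_client_bin: str, sql_file: str,
                           env_file: Optional[str] = None) -> str:
    """Hypothetical: compose the shell command run on the executor."""
    # Spark Client Directory is the client's bin directory; the spark-sql
    # launcher is assumed to be located directly inside it.
    spark_sql = posixpath.join(spark_client_bin, "spark-sql")
    # `spark-sql -f <file>` executes the SQL statements stored in a file.
    run = f"{shlex.quote(spark_sql)} -f {shlex.quote(sql_file)}"
    if env_file and env_file.strip():
        # A non-blank Environment Variable Address is sourced first
        # (`source` assumes the remote login shell is bash).
        return f"source {shlex.quote(env_file.strip())} && {run}"
    return run


def build_ssh_argv(user: str, host: str, port: int, remote_cmd: str) -> List[str]:
    """Hypothetical: argv for running remote_cmd on the executor over SSH."""
    # Executor Port defaults to 22, the standard SSH port.
    return ["ssh", "-p", str(port), f"{user}@{host}", remote_cmd]


if __name__ == "__main__":
    cmd = build_executor_command(
        "/opt/client/Spark/spark/bin",       # hypothetical Spark Client Directory
        "/tmp/mgc_sql/verify_orders.sql",    # hypothetical file under SQL File Location
        "/opt/bigdata/client/bigdata_env",   # example path from Table 1
    )
    print(build_ssh_argv("mgc_user", "192.168.0.10", 22, cmd))
```

Quoting each path with `shlex.quote` keeps directories that contain spaces or shell metacharacters from breaking the remote command.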

- After the connection test is successful, click Confirm. The connection to the source Delta Lake cluster is set up.

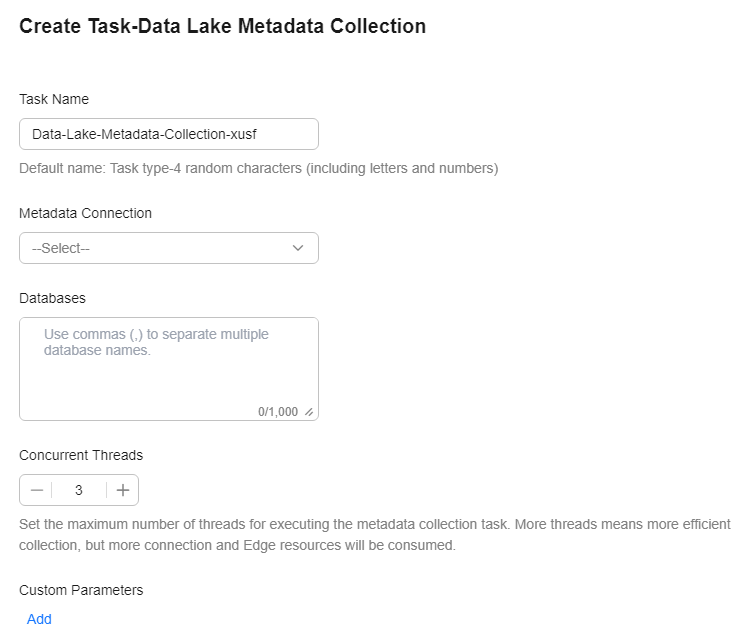

- Choose Metadata Management and click Create Data Lake Metadata Collection Task.

Figure 4 Create Data Lake Metadata Collection Task

- Set a data lake metadata collection task based on Table 2 and click Confirm.

Table 2 Parameters for configuring a metadata collection task

| Parameter | Configuration |
| --- | --- |
| Task Name | The default name is Data-Lake-Metadata-Collection-Task- followed by four random characters (letters and digits). You can also enter a custom name. |
| Metadata Connection | Select the connection created in step 12. |
| Databases | Enter the names of the databases whose metadata needs to be collected. If no database name is specified, the metadata of all databases is collected. |
| Concurrent Threads | Set the maximum number of threads for executing the collection. The value ranges from 1 to 10, and the default value is 3. More threads make collection faster but consume more connection and MgC Agent resources. |
| Custom Parameters | You can customize parameters to specify the tables and partitions to collect or set criteria to filter tables and partitions. For the parameters required by specific source environments, see the list following this table. |

Add the following custom parameters when the corresponding condition applies to your source:

| Source environment | Parameter | Value |
| --- | --- | --- |
| Alibaba Cloud EMR | `conf` | `spark.sql.catalogImplementation=hive` |
| Alibaba Cloud EMR Delta Lake 2.2 accessed through Delta Lake 2.3 dependencies | `master` | `local` |
| Alibaba Cloud EMR Delta Lake 2.1.0 cluster that uses Spark 2.4.8 | `mgc.delta.spark.version` | `2` |
| Alibaba Cloud EMR configured with Spark 3 to process Delta Lake data | `jars` | `'/opt/apps/DELTALAKE/deltalake-current/spark3-delta/delta-core_2.12-*.jar,/opt/apps/DELTALAKE/deltalake-current/spark3-delta/delta-storage-*.jar'` |

CAUTION:

Replace the parameter values with the actual environment directory and Delta Lake version.
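The Alibaba Cloud EMR conditions for custom parameters amount to a small decision table. The Python sketch below encodes it so the same source description always yields the same parameter set. It is a hypothetical helper, not part of MgC: the `SourceProfile` fields are illustrative, and you still enter the resulting key/value pairs in the Custom Parameters field on the console. As the caution says, adjust the jars path and versions to your environment.

```python
from dataclasses import dataclass
from typing import Dict

# Value taken from the jars condition; replace the directory and versions
# with those of your actual environment.
DELTA_JARS = (
    "'/opt/apps/DELTALAKE/deltalake-current/spark3-delta/delta-core_2.12-*.jar,"
    "/opt/apps/DELTALAKE/deltalake-current/spark3-delta/delta-storage-*.jar'"
)


@dataclass
class SourceProfile:
    """Hypothetical description of the source cluster (illustrative fields)."""
    alibaba_emr: bool
    delta_version: str = ""               # for example "2.2" or "2.1.0"
    spark_version: str = ""               # for example "2.4.8"
    via_delta_23_dependencies: bool = False
    spark3_for_delta: bool = False


def custom_parameters(src: SourceProfile) -> Dict[str, str]:
    """Return the custom parameters that the listed conditions call for."""
    params: Dict[str, str] = {}
    if not src.alibaba_emr:
        # Every listed condition concerns an Alibaba Cloud EMR source.
        return params
    params["conf"] = "spark.sql.catalogImplementation=hive"
    if src.delta_version == "2.2" and src.via_delta_23_dependencies:
        params["master"] = "local"
    if src.delta_version == "2.1.0" and src.spark_version == "2.4.8":
        params["mgc.delta.spark.version"] = "2"
    if src.spark3_for_delta:
        params["jars"] = DELTA_JARS
    return params


if __name__ == "__main__":
    print(custom_parameters(SourceProfile(alibaba_emr=True,
                                          delta_version="2.1.0",
                                          spark_version="2.4.8")))
```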


- Under Tasks, review the created metadata collection task and its settings. You can modify the task by choosing More > Modify in the Operation column.

Figure 5 Managing a metadata collection task

- Click Execute Task in the Operation column to run the task. Each time the task is executed, a task execution is generated.

- Click View Executions in the Operation column. Under Task Executions, you can view the execution records of the task, along with the status and collection result of each execution. When an execution is in the Completed state and its collection results are displayed, you can view the databases and tables extracted from the collected metadata on the Tables tab.

Figure 6 Managing task executions
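To make the Databases and Concurrent Threads settings of a collection task more concrete, the following Python sketch models what a single task execution might do: pick the databases to scan (all of them when none are specified) and collect their tables with a bounded thread pool. This is a hypothetical model, not MgC's implementation; `run_collection` and the `collect_db` callback are illustrative names, and a real execution reads metadata through the connection rather than from an in-memory dictionary.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Optional


def run_collection(
    available_dbs: List[str],
    requested_dbs: Optional[List[str]],
    collect_db: Callable[[str], List[str]],
    concurrent_threads: int = 3,
) -> Dict[str, List[str]]:
    """Hypothetical model of one task execution: collect table names per database."""
    # Concurrent Threads accepts values from 1 to 10; the default is 3.
    if not 1 <= concurrent_threads <= 10:
        raise ValueError("Concurrent Threads must be between 1 and 10")
    # An empty Databases field means the metadata of all databases is collected.
    targets = list(requested_dbs) if requested_dbs else list(available_dbs)
    # At most concurrent_threads databases are collected at the same time.
    with ThreadPoolExecutor(max_workers=concurrent_threads) as pool:
        results = list(pool.map(collect_db, targets))
    return dict(zip(targets, results))


if __name__ == "__main__":
    fake_catalog = {"sales": ["orders", "items"], "hr": ["staff"]}  # hypothetical metadata
    print(run_collection(list(fake_catalog), None, fake_catalog.__getitem__))
```

Raising `concurrent_threads` lets more databases be read in parallel, which mirrors the trade-off in Table 2: faster collection at the cost of more simultaneous connections and agent resources.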

- In the Features area, click Table Management.

- Under Table Groups, click Create. Configure the parameters for creating a table group and click Confirm.

Table 3 Parameters for creating a table group

| Parameter | Description |
| --- | --- |
| Table Group | Enter a custom name for the table group. |
| Metadata Connection | Select the connection created in step 12. CAUTION: A table group can only contain tables coming from the same metadata source. |
| Verification Rule | Select a rule that defines the method for verifying data consistency and the inconsistency tolerance. Click View More to see details about the verification rules provided by MgC. |
| Description (Optional) | Enter a description to identify the table group. |
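A verification rule pairs a verification method with an inconsistency tolerance. The exact semantics of each rule are defined by MgC (see View More); the Python sketch below only illustrates the general idea of a tolerance check, assuming the tolerance is a maximum percentage difference between source and target row counts. The function names, the row-count metric, and the handling of a zero baseline are all assumptions.

```python
from typing import Dict, List


def within_tolerance(source_value: float, target_value: float,
                     tolerance_pct: float) -> bool:
    """True if target differs from source by at most tolerance_pct percent."""
    if tolerance_pct < 0:
        raise ValueError("tolerance_pct must be non-negative")
    if source_value == target_value:
        return True
    if source_value == 0:
        # A relative difference is undefined for a zero baseline; treat as inconsistent.
        return False
    deviation_pct = abs(target_value - source_value) / abs(source_value) * 100
    return deviation_pct <= tolerance_pct


def inconsistent_tables(src_counts: Dict[str, int], tgt_counts: Dict[str, int],
                        tolerance_pct: float) -> List[str]:
    """List tables whose target row count is missing or outside the tolerance."""
    bad = []
    for table, src in src_counts.items():
        tgt = tgt_counts.get(table)
        if tgt is None or not within_tolerance(src, tgt, tolerance_pct):
            bad.append(table)
    return sorted(bad)
```

For example, with a 1% tolerance a source table of 1,000 rows and a target of 995 rows would pass, while a target of 980 rows would be flagged.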

- On the Table Management page, click the Tables tab, select the data tables to be added to the same table group, and choose Option > Add Tables to Group above the list. In the displayed dialog box, select the desired table group and click Confirm.

You can also manually import information about incremental data tables into MgC. For details, see Creating a Table Group and Adding Tables to the Group.
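Because a table group can only contain tables from the same metadata source, it can help to split a candidate table list by connection before adding tables to groups. The Python sketch below shows such a pre-check. It is hypothetical: MgC enforces the rule itself, and the `(connection name, "database.table")` tuples and example names are illustrative only.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Each entry is (metadata connection name, "database.table"); names are hypothetical.
TableRef = Tuple[str, str]


def split_by_connection(tables: Iterable[TableRef]) -> Dict[str, List[str]]:
    """Group candidate tables by the metadata connection they were collected from."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for connection, table in tables:
        groups[connection].append(table)
    return dict(groups)


def check_single_source(tables: Iterable[TableRef]) -> str:
    """Return the shared connection name, or raise if the tables mix sources."""
    connections = {connection for connection, _ in tables}
    if len(connections) != 1:
        raise ValueError(
            f"a table group needs exactly one metadata source, got {sorted(connections)}")
    return connections.pop()
```

Each key returned by `split_by_connection` corresponds to tables that may share one table group; mixing keys in a single group would violate the rule.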

- Create a connection to the source and target executors separately. For details, see Creating an Executor Connection. Select the source and target executor credentials added to the MgC Agent in step 6.

- Create a data verification task for the source Delta Lake cluster and another for the target MRS Delta Lake cluster, and execute both tasks. For more information, see Creating and Executing Verification Tasks. When creating the tasks, select the table group created in step 20.

- On the Select Task Type page, choose Delta Lake.

- Select a verification method. For details about each verification method, see Verification Methods.


- Wait until the task executions enter a Completed status. You can view and export the task execution results on the Verification Results page. For details, see Viewing and Exporting Verification Results.
