Configuring Log Indexing

An index is a storage structure used to query and analyze logs. Different index settings generate different query and analysis results. Configure index settings to fit your service requirements.

Indexing Types

LTS supports full-text and field indexing. For details, see Table 1.

| Indexing Type | Description |
|---|---|
| Index Whole Text | LTS splits all field values of an entire log into multiple words when this function is enabled. |
| Index Fields | Query logs by specified field names and values (Key:Value). |

The following is a typical example log. The value of the content field is the original log content. Use commas (,) to parse the original log content into three fields: level, status, and message.

In the example log, hostName, hostIP, and pathFile are common system reserved fields. For details about the system fields, see System Reserved Fields.

    {
        "hostName":"epstest-xx518",
        "hostIP":"192.168.0.31",
        "pathFile":"stdout.log",
        "content":"error,400,I Know XX",
        "level":"error",
        "status":400,
        "message":"I Know XX"
    }
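To make the relationship between content and the three parsed fields concrete, here is a minimal Python sketch that splits the raw content on commas. The function name parse_content is hypothetical, and the sketch only mirrors the example log above; it does not represent how LTS performs structuring internally.

```python
def parse_content(content: str) -> dict:
    """Split raw log content into level, status, and message on commas."""
    # maxsplit=2 keeps any commas inside the message part intact.
    level, status, message = content.split(",", 2)
    return {"level": level, "status": int(status), "message": message}


parsed = parse_content("error,400,I Know XX")
print(parsed)
```

Each resulting key (level, status, message) corresponds to a field that could then be configured under Index Fields.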

Billing

Creating indexes incurs index traffic. For details, see Billing Items.

Precautions

- At least one of Index Whole Text and Index Fields must be configured.

- After the index function is disabled, the storage space of historical indexes is automatically cleared after the data storage period of the current log stream expires.

- LTS creates index fields for certain system reserved fields by default. For details, see System Reserved Fields.

- Full-text indexing and field indexing do not affect each other.

- After the index configuration is modified, the modification takes effect only for newly written log data.

- Before the field indexing feature was released, fields available for SQL analysis were derived from cloud structuring parsing. Since its release, these fields are sourced directly from field indexing whenever field indexing is configured. Therefore, any modification to your field indexing settings may alter query results across existing visual charts, dashboards, SQL alarms, scheduled SQL jobs, and Grafana ingestion. Exercise caution before making changes.

Configuring Full-Text Indexing

- Log in to the LTS console. The Log Management page is displayed by default.

- Click the target log group or log stream to access the log stream details page.

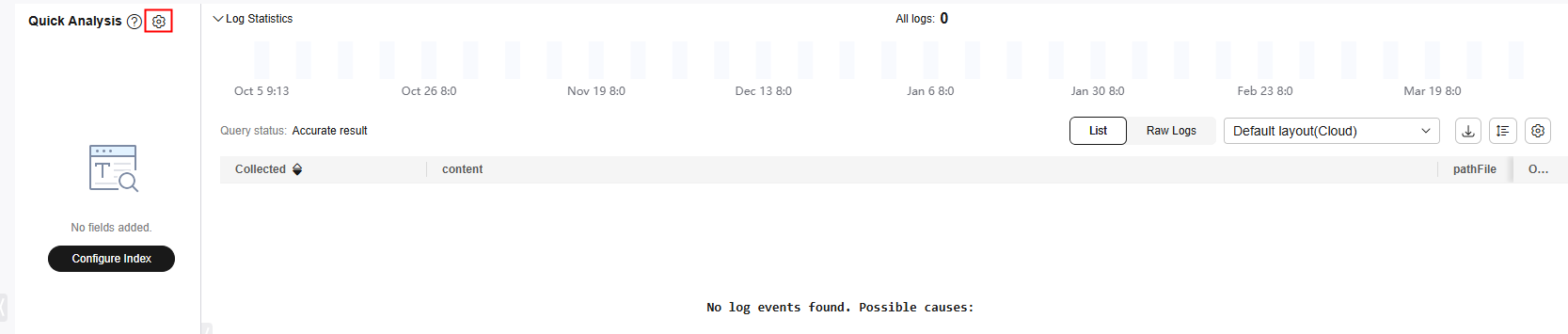

- On the Log Search tab page, click the icon next to Quick Analysis to go to the Index Settings tab page.

Figure 1 Index settings


- Set index parameters by referring to Table 2. Index Whole Text is enabled by default.

Table 2 Custom full-text indexing parameters

| Parameter | Description | Example |
|---|---|---|
| Index Whole Text | Indicates whether full-text indexing is applied. | Enable |
| Case-Sensitive | Indicates whether letters are case-sensitive during query.<br>- If this function is enabled, the query is case-sensitive. For example, if the example log contains Know, you can query the log only with Know.<br>- If this function is disabled, the query is case-insensitive. For example, if the example log contains Know, you can also query the log with KNOW or know. | Enable |
| Include Chinese | Indicates whether to distinguish between Chinese and English during query.<br>- After this function is enabled, if the log contains Chinese characters, the Chinese content is split based on unigram segmentation and the English content is split based on delimiters.<br>- Unigram segmentation splits a Chinese string into individual Chinese characters.<br>- Unigram segmentation segments massive logs efficiently, whereas other Chinese segmentation methods significantly slow down log writes.<br>- To obtain more accurate search results, use phrase search with the syntax #"phrase to be searched for".<br>- After this function is disabled, all content is split based on delimiters. | For example, assume that the log content is error,400,I Know TodayIsMonday.<br>- After this function is disabled, the English content is split based on delimiters. The log is split into error, 400, I, Know, and TodayIsMonday. You can search for the log using the word error or TodayIsMonday.<br>- After this function is enabled, the background analyzer of LTS splits the log into error, 400, I, Know, Today, Is, and Monday. You can search for the log using the word error or Today. |
| Delimiters | Splits the log content into multiple words based on the specified delimiters. If the default settings cannot meet your requirements, you can customize delimiters.<br>If you leave Delimiters blank, the field value is regarded as a whole. You can search for logs only through a complete string or by fuzzy match.<br>Click Preview to see the effect. | For example, assume that the log content is error,400,I Know TodayIsMonday.<br>- If no delimiter is set, the entire log is regarded as the single string error,400,I Know TodayIsMonday. You can search for the log only by the complete string error,400,I Know TodayIsMonday or by the fuzzy match error,400,I K*.<br>- If the delimiter is set to a comma (,), the raw log is split into error, 400, and I Know TodayIsMonday. You can search for the log by fuzzy or exact match, for example, error, 400, I Kn*, and TodayIs*.<br>- If the delimiters are set to a comma (,) and a space, the raw log is split into error, 400, I, Know, and TodayIsMonday. You can search for the log by fuzzy or exact match, for example, Know and TodayIs*. |
| ASCII Delimiters | Click Add ASCII Delimiter and enter an ASCII value and control character by referring to ASCII Table. | - |

- After configuring full-text indexing, click OK.
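The effect of the Delimiters and Case-Sensitive settings described in Table 2 can be approximated with a short Python sketch. The tokenize function below is a hypothetical illustration, not the LTS analyzer: it splits on any configured delimiter character, treats an empty delimiter set as "index the value as one string", and models case-insensitive indexing by lowercasing terms. It does not model Include Chinese.

```python
import re


def tokenize(text: str, delimiters: str, case_sensitive: bool = True) -> list:
    """Split text into words on any of the given delimiter characters."""
    if not delimiters:
        # No delimiters configured: the whole value is a single term.
        tokens = [text]
    else:
        # Build a character class from the delimiters; re.escape guards
        # characters such as '-', ']' and '^' that are special in a class.
        pattern = "[" + re.escape(delimiters) + "]"
        tokens = [t for t in re.split(pattern, text) if t]
    # Case-insensitive indexing is approximated by lowercasing every term.
    return tokens if case_sensitive else [t.lower() for t in tokens]


log = "error,400,I Know TodayIsMonday"
print(tokenize(log, ""))    # ['error,400,I Know TodayIsMonday']
print(tokenize(log, ","))   # ['error', '400', 'I Know TodayIsMonday']
print(tokenize(log, ", "))  # ['error', '400', 'I', 'Know', 'TodayIsMonday']
print(tokenize(log, ", ", case_sensitive=False))
```

Under this model, an exact-match query succeeds only when the query term equals one of the produced words, which is why the choice of delimiters changes what can be found.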

Configuring Index Fields

You can add a maximum of 500 index fields. A maximum of 100 subfields can be added for JSON fields.

- Specify the number of samples for quick analysis. The value ranges from 100,000 (default) to 10 million. Quick analysis provides a fast overview by sampling field value statistics rather than analyzing all log data. The more logs sampled, the slower the analysis.

- Automatically configuring index fields: On this page, click Auto Configure under Index Fields to have LTS generate index fields based on the first log event in the last 15 minutes or common system reserved fields (such as hostIP, hostName, and pathFile), and manually add or delete fields as needed.

- After you click Auto Configure, LTS obtains the intersection of the raw logs and system fields generated in the last 15 minutes by default, and combines the intersection with current structured fields and tag fields to form the table data below Index Fields.

- If no raw log is generated in the last 15 minutes, LTS obtains hostIP, hostName, pathFile, structured fields, and tag fields to form the table data.

- When you configure structuring on the Index Settings page in the process of creating an ECS log ingestion configuration, the category, hostName, hostId, hostIP, hostIPv6, and pathFile fields are automatically added to Index Fields even if you have not clicked Auto Configure. A field will not be added if the same one already exists.

- When you configure structuring on the Index Settings page in the process of creating an ECS log ingestion configuration, the category, clusterId, clusterName, nameSpace, podName, containerName, appName, hostName, hostId, hostIP, hostIPv6, and pathFile fields are automatically added to Index Fields even if you have not clicked Auto Configure. A field will not be added if the same one already exists.

- Adding a single index field: Click Add Field under Index Fields to configure field indexing. For details, see Table 3.

- Add fields extracted from structured logs to analyze statistics. Whenever the name of a field is changed on the log structuring page, the field must be re-added here.

- The indexing parameters take effect only for the current field.

- Index fields that do not exist in log content are invalid.

Table 3 Custom index field parameters

| Parameter | Description |
|---|---|
| Field | Log field name, such as level in the example log.<br>- A field name can contain only letters, digits, hyphens (-), underscores (_), and periods (.). It cannot start or end with a period, or end with double underscores (__).<br>- LTS creates index fields for certain system reserved fields by default. For details, see System Reserved Fields.<br>- For system fields, system is displayed next to their names. |
| Action | Displays the field status, such as New, Retain, Modify, and Delete. After the index field is changed, click Compare to view the differences between the original and modified configurations.<br>- New fields cannot be modified.<br>- When you modify the settings of Type, Alias, Case-Sensitive, Common Delimiters, ASCII Delimiters, Include Chinese, or Quick Analysis, the system compares the modified settings with the original settings and changes the action to Modify.<br>- After you click OK, the fields whose action is Delete are not saved.<br>- In the search box under Index Fields, you can search for fields by field name, action, or type.<br>- You can select multiple fields and click Batch Configure to configure the following parameters in batches: Action, Type, Case-Sensitivity, and Custom Delimiters. |
| Type | Data type of the log field value. The options are string, long, json, and float.<br>- Fields of the long and float types do not support Case-Sensitive, Include Chinese, or Delimiters. |
| Alias | You can set aliases for fields. Properly configured aliases can make your logs easier to read and maintain, improving query efficiency.<br>Aliases apply only to SQL search and analysis. They cannot be used for keyword search. |
| Case-Sensitive | Indicates whether letters are case-sensitive during query.<br>- If this function is enabled, the query is case-sensitive. For example, if the message field in the example log contains Know, you can query the log only with message:Know.<br>- If this function is disabled, the query is case-insensitive. For example, if the message field in the example log contains Know, you can also query the log with message:KNOW or message:know. |
| Common Delimiters | Splits the log content into multiple words based on the specified delimiters. If the default settings cannot meet your requirements, you can customize delimiters.<br>If you leave Delimiters blank, the field value is regarded as a whole. You can search for logs only through a complete string or by fuzzy match.<br>For example, the content of the message field in the example log is I Know TodayIsMonday.<br>- If no delimiter is set, the entire field value is regarded as the single string I Know TodayIsMonday. You can search for the log only by the complete string message:I Know TodayIsMonday or by the fuzzy search message:I Know TodayIs*.<br>- If the delimiter is set to a space, the field value is split into I, Know, and TodayIsMonday. You can find the log by fuzzy search or exact words, for example, message:Know or message:TodayIsMonday. |
| ASCII Delimiters | Click Add ASCII Delimiter and enter an ASCII value and control character by referring to ASCII Table. |
| Include Chinese | Indicates whether to distinguish between Chinese and English during query.<br>- After this function is enabled, if the log contains Chinese characters, the Chinese content is split based on unigram segmentation and the English content is split based on delimiters.<br>- Unigram segmentation splits a Chinese string into individual Chinese characters.<br>- Unigram segmentation segments massive logs efficiently, whereas other Chinese segmentation methods significantly slow down log writes.<br>- To obtain more accurate search results, use phrase search with the syntax #"phrase to be searched for".<br>- After this function is disabled, all content is split based on delimiters.<br>For example, the content of the message field in the example log is I Know TodayIsMonday.<br>- After this function is disabled, the English content is split based on delimiters. The field value is split into I, Know, and TodayIsMonday. You can search for the log using message:Know or message:TodayIsMonday.<br>- After this function is enabled, the background analyzer of LTS splits the field value into I, Know, Today, Is, and Monday. You can search for the log using message:Know or message:Today. |
| Quick Analysis | By default, this option is enabled, indicating that this field will be sampled and collected. For details, see Creating a Quick Analysis Task.<br>- Quick analysis collects statistics on 100,000 logs that match the search criteria, not on all logs.<br>- The maximum length of a field for quick analysis is 2,000 bytes.<br>- The quick analysis field area displays the first 100 records. |
| Operation | Click the delete icon to delete the target field. |

- Click OK.
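The field naming rules listed for the Field parameter can be checked before you add a field. The following Python sketch is one possible reading of those rules; the function name is_valid_field_name is hypothetical, and the sketch assumes that "letters" means ASCII letters, which the documentation does not state explicitly.

```python
import re

# Assumption: only ASCII letters and digits are accepted, plus '-', '_' and '.'.
_ALLOWED_CHARS = re.compile(r"[A-Za-z0-9._-]+")


def is_valid_field_name(name: str) -> bool:
    """Check a field name against the rules stated for the Field parameter."""
    if not _ALLOWED_CHARS.fullmatch(name):
        return False  # empty, or contains a character outside the allowed set
    if name.startswith(".") or name.endswith("."):
        return False  # must not start or end with a period
    if name.endswith("__"):
        return False  # must not end with double underscores
    return True


for candidate in ["level", "http.status", ".level", "level__", "user name"]:
    print(candidate, is_valid_field_name(candidate))
```

A check like this is only a convenience on the client side; the console remains the authority on which names are accepted.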

System Reserved Fields

During log collection, LTS adds information such as the collection time, log type, and host IP address to logs in the form of Key-Value pairs. These fields are system reserved fields.

- When using APIs to write log data or add ICAgent configurations, avoid using the same field names as reserved field names to prevent issues such as duplicate field names and incorrect queries.

- Currently, LTS adds system reserved fields to log data for free. For more information, see Price Calculator.

- A custom log field must not contain double underscores (__) in its name. If it does, indexing cannot be configured for the field.

- You cannot configure case sensitivity, Chinese character inclusion, or delimiters for system reserved fields under Index Fields. By default, these fields automatically adopt the settings configured under Index Whole Text.

| Field | Data Format | Index and Statistics Settings | Description |
|---|---|---|---|
| collectTime | Integer, Unix timestamp (ms) | Index setting: After indexing is enabled, a field index is created for collectTime by default. The index data type is long. Enter collectTime: xxx during the query. | Time when logs are collected by ICAgent. Example: "collectTime":"1681896081334" indicates 2023-04-19 17:21:21 (UTC+08:00) when converted into standard time. |
| __time__ | Integer, Unix timestamp (ms) | Index setting: After indexing is enabled, a field index is created for __time__ by default. The index data type is long. This field cannot be queried. | Log time, that is, the time when a log is displayed on the console. Example: "__time__":"1681896081334" indicates 2023-04-19 17:21:21 (UTC+08:00) when converted into standard time. By default, the collection time is used as the log time. You can also customize the log time. |
| lineNum | Integer | Index setting: After indexing is enabled, a field index is created for lineNum by default. The index data type is long. | Line number (offset), which is used to sort logs. For non-high-precision logs, the value is generated from collectTime; the default value is collectTime * 1000000 + 1. For high-precision logs, the value is the nanosecond value reported by users. Example: "lineNum":"1681896081333991900" |
| category | String | Index setting: After indexing is enabled, a field index is created for category by default. The index data type is string, and the delimiters are empty. Enter category: xxx during the query. | Log type, indicating the source of the log. Example: The field value of logs collected by ICAgent is LTS, and that of logs reported by a cloud service such as DCS is DCS. |
| clusterName | String | Index setting: After indexing is enabled, a field index is created for clusterName by default. The index data type is string, and the delimiters are empty. Enter clusterName: xxx during the query. | Cluster name, used in the Kubernetes scenario. Example: "clusterName":"epstest" |
| clusterId | String | Index setting: After indexing is enabled, a field index is created for clusterId by default. The index data type is string, and the delimiters are empty. Enter clusterId: xxx during the query. | Cluster ID, used in the Kubernetes scenario. Example: "clusterId":"c7f3f4a5-xxxx-11ed-a4ec-0255ac100b07" |
| nameSpace | String | Index setting: After indexing is enabled, a field index is created for nameSpace by default. The index data type is string, and the delimiters are empty. Enter nameSpace: xxx during the query. | Namespace, used in the Kubernetes scenario. Example: "nameSpace":"monitoring" |
| appName | String | Index setting: After indexing is enabled, a field index is created for appName by default. The index data type is string, and the delimiters are empty. Enter appName: xxx during the query. | Component name, that is, the workload name in the Kubernetes scenario. Example: "appName":"alertmanager-alertmanager" |
| serviceID | String | Index setting: After indexing is enabled, a field index is created for serviceID by default. The index data type is string, and the delimiters are empty. Enter serviceID: xxx during the query. | Workload ID in the Kubernetes scenario. Example: "serviceID":"cf5b453xxxad61d4c483b50da3fad5ad" |
| podName | String | Index setting: After indexing is enabled, a field index is created for podName by default. The index data type is string, and the delimiters are empty. Enter podName: xxx during the query. | Pod name in the Kubernetes scenario. Example: "podName":"alertmanager-alertmanager-0" |
| podIp | String | Index setting: After indexing is enabled, a field index is created for podIp by default. The index data type is string, and the delimiters are empty. Enter podIp: xxx during the query. | Pod IP address in the Kubernetes scenario. Example: "podIp":"10.0.0.145" |
| containerName | String | Index setting: After indexing is enabled, a field index is created for containerName by default. The index data type is string, and the delimiters are empty. Enter containerName: xxx during the query. | Container name, used in the Kubernetes scenario. Example: "containerName":"config-reloader" |
| hostName | String | Index setting: After indexing is enabled, a field index is created for hostName by default. The index data type is string, and the delimiters are empty. Enter hostName: xxx during the query. | Name of the host where ICAgent resides. Example: "hostName":"epstest-xx518" |
| hostId | String | Index setting: After indexing is enabled, a field index is created for hostId by default. The index data type is string, and the delimiters are empty. Enter hostId: xxx during the query. | ID of the host where ICAgent resides. The ID is generated by ICAgent. Example: "hostId":"318c02fe-xxxx-4c91-b5bb-6923513b6c34" |
| hostIP | String | Index setting: After indexing is enabled, a field index is created for hostIP by default. The index data type is string, and the delimiters are empty. Enter hostIP: xxx during the query. | IP address of the host where the log collector resides (applicable to the IPv4 scenario). Example: "hostIP":"192.168.0.31" |
| hostIPv6 | String | Index setting: After indexing is enabled, a field index is created for hostIPv6 by default. The index data type is string, and the delimiters are empty. Enter hostIPv6: xxx during the query. | IP address of the host where the log collector resides (applicable to the IPv6 scenario). Example: "hostIPv6":"" |
| pathFile | String | Index setting: After indexing is enabled, a field index is created for pathFile by default. The index data type is string, and the delimiters are empty. Enter pathFile: xxx during the query. | Path of the collected log file. Example: "pathFile":"stdout.log" |
| content | String | Index setting: After Index Whole Text is enabled, the delimiters defined in Index Whole Text are used to delimit the value of the content field. The content field can be configured as an index field. | Original log content. Example: "content":"level=error ts=2023-04-19T09:21:21.333895559Z" |
| __receive_time__ | Integer, Unix timestamp (ms) | Index setting: After indexing is enabled, a field index is created for __receive_time__ by default. The index data type is long. | Time when a log is reported to the server, which is the same as the time when the LTS collector receives the log. |
| __client_time__ | Integer, Unix timestamp (ms) | Index setting: After indexing is enabled, a field index is created for __client_time__ by default. The index data type is long. | Time when the client reports a device log. |
| _content_parse_fail_ | String | Index setting: After indexing is enabled, a field index is created for _content_parse_fail_ by default. The index data type is string, and the default delimiter is used. Enter _content_parse_fail_: xxx during the query. | Content of the log that fails to be parsed. |
| __time | Integer, Unix timestamp (ms) | The __time field cannot be configured as an index field. | N/A |
| logContent | String | The logContent field cannot be configured as an index field. | N/A |
| logContentSize | Integer | The logContentSize field cannot be configured as an index field. | N/A |
| logIndexSize | Integer | The logIndexSize field cannot be configured as an index field. | N/A |
| groupName | String | The groupName field cannot be configured as an index field. | N/A |
| logStream | String | The logStream field cannot be configured as an index field. | N/A |
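The timestamp fields in the table are millisecond Unix timestamps, and the default lineNum is derived from collectTime. The Python sketch below reproduces the arithmetic from the examples. The helper names are hypothetical, and the UTC+08:00 offset is an assumption inferred from the example values (1681896081334 ms corresponds to 09:21:21 UTC, while the table shows 17:21:21).

```python
from datetime import datetime, timedelta, timezone

# Assumption: the "standard time" shown in the examples is UTC+08:00.
UTC8 = timezone(timedelta(hours=8))


def ms_to_datetime(ms: int, tz: timezone = UTC8) -> datetime:
    """Convert a millisecond Unix timestamp such as collectTime to a datetime."""
    return datetime.fromtimestamp(ms / 1000, tz=tz)


def default_line_num(collect_time_ms: int) -> int:
    """Default lineNum for non-high-precision logs: collectTime * 1000000 + 1."""
    return collect_time_ms * 1_000_000 + 1


collect_time = 1681896081334
print(ms_to_datetime(collect_time).strftime("%Y-%m-%d %H:%M:%S"))  # 2023-04-19 17:21:21
print(default_line_num(collect_time))  # 1681896081334000001
```

The multiplication by 1000000 turns milliseconds into nanoseconds, which is why the default lineNum has the same magnitude as the nanosecond values reported for high-precision logs.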

ASCII Table

| ASCII Value | Character | ASCII Value | Character | ASCII Value | Character | ASCII Value | Character |
|---|---|---|---|---|---|---|---|
| 0 | NUL (Null) | 32 | (Space) | 64 | @ | 96 | ` |
| 1 | SOH (Start of heading) | 33 | ! | 65 | A | 97 | a |
| 2 | STX (Start of text) | 34 | " | 66 | B | 98 | b |
| 3 | ETX (End of text) | 35 | # | 67 | C | 99 | c |
| 4 | EOT (End of transmission) | 36 | $ | 68 | D | 100 | d |
| 5 | ENQ (Enquiry) | 37 | % | 69 | E | 101 | e |
| 6 | ACK (Acknowledge) | 38 | & | 70 | F | 102 | f |
| 7 | BEL (Bell) | 39 | ' | 71 | G | 103 | g |
| 8 | BS (Backspace) | 40 | ( | 72 | H | 104 | h |
| 9 | HT (Horizontal tab) | 41 | ) | 73 | I | 105 | i |
| 10 | LF (Line feed) | 42 | * | 74 | J | 106 | j |
| 11 | VT (Vertical tab) | 43 | + | 75 | K | 107 | k |
| 12 | FF (Form feed) | 44 | , | 76 | L | 108 | l |
| 13 | CR (Carriage return) | 45 | - | 77 | M | 109 | m |
| 14 | SO (Shift out) | 46 | . | 78 | N | 110 | n |
| 15 | SI (Shift in) | 47 | / | 79 | O | 111 | o |
| 16 | DLE (Data link escape) | 48 | 0 | 80 | P | 112 | p |
| 17 | DC1 (Device control 1) | 49 | 1 | 81 | Q | 113 | q |
| 18 | DC2 (Device control 2) | 50 | 2 | 82 | R | 114 | r |
| 19 | DC3 (Device control 3) | 51 | 3 | 83 | S | 115 | s |
| 20 | DC4 (Device control 4) | 52 | 4 | 84 | T | 116 | t |
| 21 | NAK (Negative acknowledge) | 53 | 5 | 85 | U | 117 | u |
| 22 | SYN (Synchronous idle) | 54 | 6 | 86 | V | 118 | v |
| 23 | ETB (End of transmission block) | 55 | 7 | 87 | W | 119 | w |
| 24 | CAN (Cancel) | 56 | 8 | 88 | X | 120 | x |
| 25 | EM (End of medium) | 57 | 9 | 89 | Y | 121 | y |
| 26 | SUB (Substitute) | 58 | : | 90 | Z | 122 | z |
| 27 | ESC (Escape) | 59 | ; | 91 | [ | 123 | { |
| 28 | FS (File separator) | 60 | < | 92 | \ | 124 | \| |
| 29 | GS (Group separator) | 61 | = | 93 | ] | 125 | } |
| 30 | RS (Record separator) | 62 | > | 94 | ^ | 126 | ~ |
| 31 | US (Unit separator) | 63 | ? | 95 | _ | 127 | DEL (Delete) |
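When choosing a value for Add ASCII Delimiter, it can help to confirm locally which character a given ASCII value stands for. The short Python sketch below uses the built-in chr and ord functions for that purpose. The helper name delimiter_from_ascii is hypothetical and the sketch is independent of LTS itself.

```python
def delimiter_from_ascii(value: int) -> str:
    """Return the character for an ASCII value from the table (0 to 127)."""
    if not 0 <= value <= 127:
        raise ValueError("ASCII values range from 0 to 127")
    return chr(value)


tab = delimiter_from_ascii(9)    # HT (Horizontal tab)
print("error\t400\tI Know XX".split(tab))  # ['error', '400', 'I Know XX']
print(ord(","))                  # 44, the ASCII value of a comma
```

Control characters such as the horizontal tab (9) cannot easily be typed into a text box, which is the typical reason to specify a delimiter by its ASCII value.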

Helpful Links

- After configuring log indexing, you can use the log alarm function of LTS to set alarm rules to notify you of exceptions in the ingested logs. For details, see Configuring Log Alarm Rules.

- You can configure indexing for log streams by calling LTS APIs. For details, see Creating an Index for a Specified Log Stream.

- You can query the indexing configurations of log streams by calling LTS APIs. For details, see Querying the Index Configurations of a Specified Log Stream.
