Configuring a Job for Synchronizing Data from Apache Kafka to MRS Kafka

Supported Source and Destination Database Versions

| Source Database | Destination Database |
|---|---|
| Kafka cluster (2.7 and 3.x) | Kafka cluster (2.7 and 3.x) |

Database Account Permissions

Before you use DataArts Migration for data synchronization, ensure that the source and destination database accounts meet the requirements in the following table. The required account permissions vary depending on the synchronization task type.

| Type | Required Permissions |
|---|---|
| Source database account | N/A |
| Destination database account | The MRS user must have read and write permissions on the corresponding Kafka topics. That is, the user must belong to the kafka, kafkaadmin, or kafkasuperuser user group. NOTE: A common Kafka user can access a topic only after the Kafka administrator grants the user read and write permissions on that topic. |

- You are advised to create dedicated database accounts for DataArts Migration task connections. This prevents task failures caused by password changes made for other purposes.

- After changing the password of a source or destination database account, update the connection information in Management Center as soon as possible. Otherwise, the task fails and its automatic retries may lock the database account.

Supported Synchronization Objects

The following table lists the objects that can be synchronized using different links in DataArts Migration.

| Type | Note |
|---|---|
| Synchronization objects | All messages in Kafka topics can be synchronized. Messages are synchronized as they are; they cannot be parsed and reassembled during synchronization. |

Important Notes

In addition to the constraints on supported data sources and versions, connection account permissions, and synchronization objects, you also need to pay attention to the notes in the following table.

| Type | Restriction |
|---|---|
| Database | |
| Usage | General: During real-time synchronization, the IP addresses, ports, accounts, and passwords cannot be changed. Incremental synchronization phase: In the Entire DB migration scenario, increase the number of concurrent jobs based on the number of topic partitions to be synchronized. Otherwise, a memory overflow may occur. Troubleshooting: If any problem occurs during task creation, startup, full synchronization, incremental synchronization, or completion, rectify the fault by referring to . |
| Other | N/A |

Procedure

This section uses real-time synchronization from Apache Kafka to MRS Kafka as an example to describe how to configure a real-time data migration job. Before that, ensure that you have read the instructions described in Check Before Use and completed all the preparations.

- Create a real-time migration job by following the instructions in Creating a Real-Time Migration Job and go to the job configuration page.

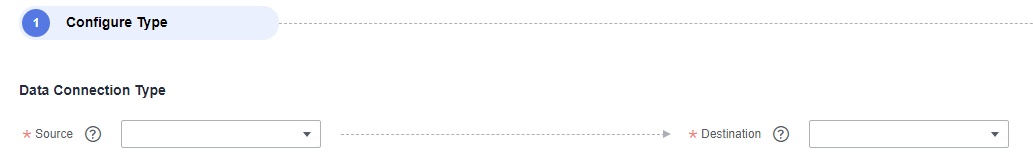

- Select the data connection type. Select Apache_Kafka for Source and MRS_Kafka for Destination.

Figure 1 Selecting the data connection type

- Select a job type. The default migration type is Real-time. The migration scenario can only be Entire DB.

Figure 2 Setting the migration job type

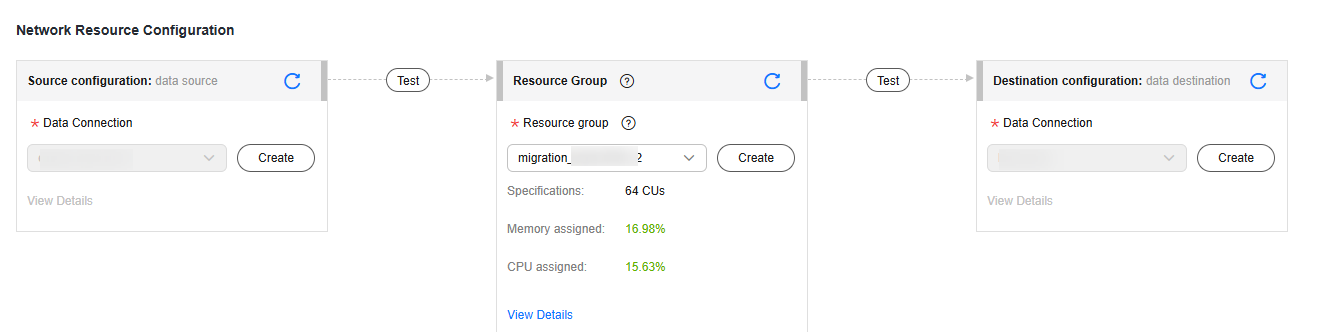

- Configure network resources. Select the created Apache Kafka and MRS Kafka data connections and the migration resource group whose network connection has been configured.

Figure 3 Selecting data connections and a migration resource group

If no data connection is available, click Create to go to the Manage Data Connections page of the Management Center console and click Create Data Connection to create a connection. For details, see Configuring DataArts Studio Data Connection Parameters.

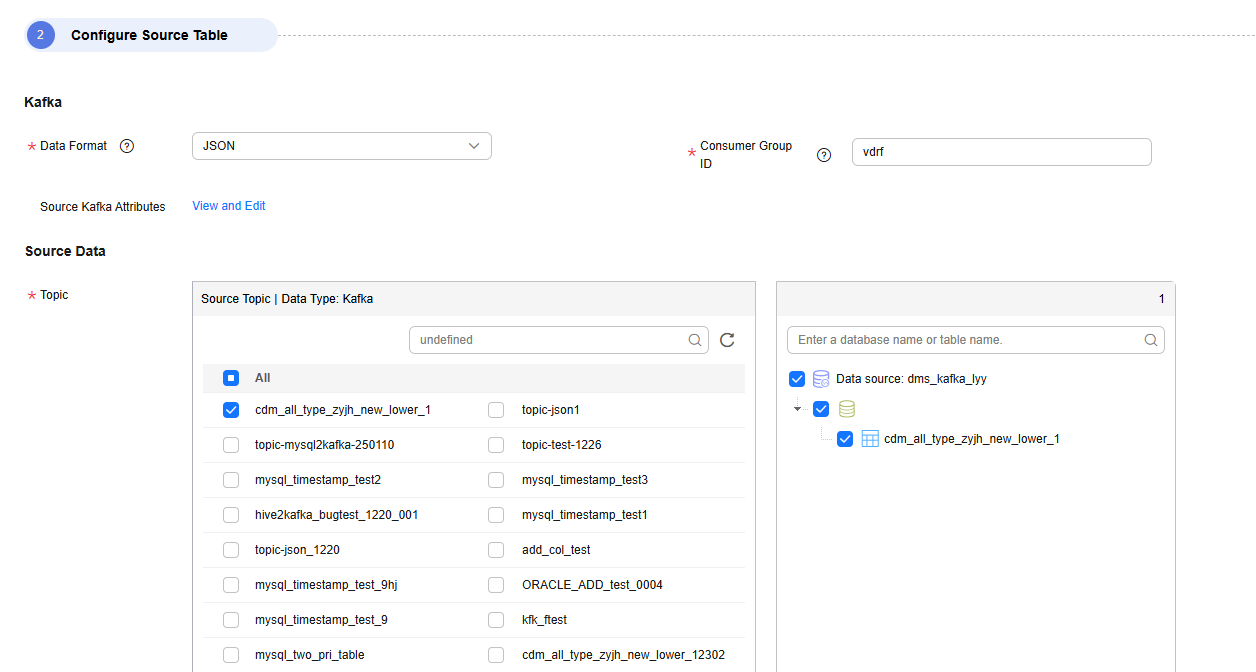

- Configure source parameters.

- Select the Kafka topics to be synchronized.

Figure 4 Selecting the Kafka topics to be synchronized

- Consumer Group ID

A consumer subscribes to a topic, and a consumer group consists of one or more consumers. DataArts Migration lets you specify the Kafka consumer group that the job uses to consume messages from the source topics.

- Source Kafka Attributes

You can add Kafka configuration items prefixed with "properties.". The job automatically removes the prefix and passes the items to the underlying Kafka client. For details about the parameters, see the configuration descriptions in the Kafka documentation.


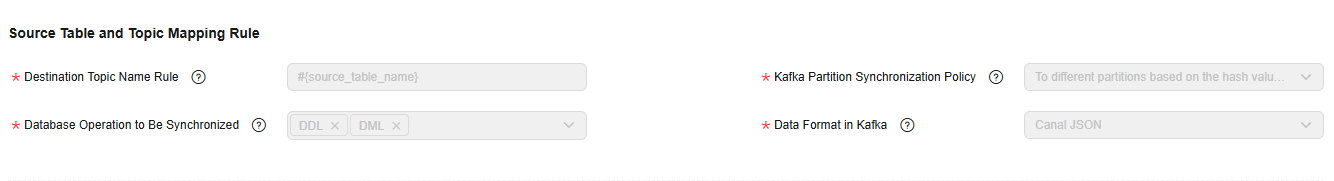
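The following minimal sketch illustrates the prefix handling described for Source Kafka Attributes (the same idea applies to Destination Kafka Attributes). It is not DataArts Migration code: the function name `to_kafka_client_config` is hypothetical, and the assumption that attributes without the "properties." prefix are not passed to the client is ours, not stated in this document. `max.poll.records` and `fetch.max.bytes` are standard Kafka consumer settings used only as examples.

```python
# Hypothetical sketch: turning "properties."-prefixed job attributes into
# plain Kafka client settings. Not the actual DataArts Migration implementation.
PREFIX = "properties."


def to_kafka_client_config(job_attributes: dict[str, str]) -> dict[str, str]:
    """Keep keys that start with 'properties.' and strip that prefix.

    Assumption: keys without the prefix are not forwarded to the Kafka client.
    """
    client_config = {}
    for key, value in job_attributes.items():
        if key.startswith(PREFIX):
            client_config[key[len(PREFIX):]] = value
    return client_config


if __name__ == "__main__":
    attrs = {
        "properties.max.poll.records": "500",
        "properties.fetch.max.bytes": "52428800",
        "some.other.setting": "ignored",  # no prefix, so not forwarded here
    }
    print(to_kafka_client_config(attrs))
```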

- Configure destination parameters.

Figure 5 Kafka destination parameters

- Destination Topic Name Rule

Configure the rule for mapping source Kafka topics to destination Kafka topics. You can specify a fixed topic or use a built-in variable to map each source topic to a destination topic.

The following built-in variable can be used: #{source_Topic_name}

- Kafka Partition Synchronization Policy

The following three policies are available for synchronizing source data to specified partitions of destination Kafka topics:

- To partition 0: All source messages are delivered to partition 0 of the destination topic.

- To the partition corresponding to the source partition: Each source message is delivered to the destination partition with the same number as its source partition. This policy preserves the message order.

- To different partitions in polling mode: The Kafka sticky partitioning policy is used to evenly distribute messages across all destination partitions. This policy does not preserve the message order.

- Partitions of New Topic

If the corresponding topic does not exist in the destination Kafka cluster, DataArts Migration automatically creates it with three partitions.

- Destination Kafka Attributes

You can add Kafka attributes prefixed with "properties.". The job automatically removes the prefix and passes the attributes to the Kafka client. For details about the parameters, see the configuration descriptions in the Kafka documentation.


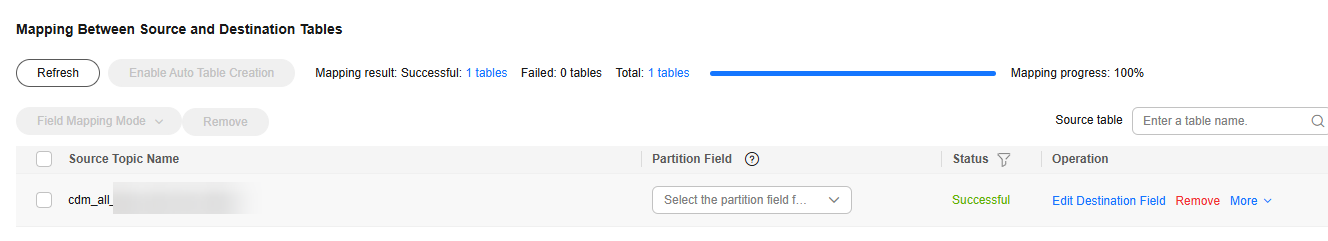
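To make the three Kafka Partition Synchronization Policy options concrete, here is a simplified model in Python. It is a sketch under stated assumptions, not DataArts Migration or Kafka client code: all function and class names are hypothetical, the matching-partition policy is assumed to require at least as many destination partitions as source partitions (the sketch raises an error otherwise), and the sticky partitioner is simplified to move to the next partition when a batch is full, whereas the real Kafka sticky partitioner picks a different partition per batch without a fixed rotation.

```python
# Simplified model of the three partition policies. Names are hypothetical.
from dataclasses import dataclass


def to_partition_zero(source_partition: int, num_dest_partitions: int) -> int:
    """'To partition 0': every message goes to destination partition 0."""
    return 0


def to_matching_partition(source_partition: int, num_dest_partitions: int) -> int:
    """'To the partition corresponding to the source partition'.

    Assumption: the destination topic has at least as many partitions as the source.
    """
    if source_partition >= num_dest_partitions:
        raise ValueError("destination topic has fewer partitions than the source")
    return source_partition


@dataclass
class StickyPartitioner:
    """Simplified sticky partitioning: stay on one partition until a batch of
    batch_size messages is full, then move on to another partition."""

    num_partitions: int
    batch_size: int
    current: int = 0
    in_batch: int = 0

    def next_partition(self) -> int:
        if self.in_batch == self.batch_size:
            # Batch is full: switch partitions (simplified to round-robin here).
            self.current = (self.current + 1) % self.num_partitions
            self.in_batch = 0
        self.in_batch += 1
        return self.current


if __name__ == "__main__":
    # A newly created destination topic has three partitions.
    sticky = StickyPartitioner(num_partitions=3, batch_size=2)
    print([sticky.next_partition() for _ in range(6)])  # [0, 0, 1, 1, 2, 2]
```

Because consecutive batches land in different partitions, messages from one source partition can be spread over several destination partitions, which is why the polling policy does not preserve message order.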

- Refresh the mapping between the source and destination topics, and check whether it is correct. You can change the name of a destination topic as needed. Each source topic can be mapped to its own destination topic, or multiple source topics can be mapped to one destination topic.

Figure 6 Mapping between source and destination tables
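The sketch below shows how the Destination Topic Name Rule relates to the mapping checked in this step. It assumes, without confirmation from this document, that the rule behaves like a template in which `#{source_Topic_name}` is replaced by the source topic name. The function names are hypothetical. Using the variable yields a one-to-one mapping, while a fixed topic name maps all source topics to one destination topic.

```python
# Hypothetical sketch of resolving a Destination Topic Name Rule.
# Assumption: "#{source_Topic_name}" is replaced by the source topic name.
VARIABLE = "#{source_Topic_name}"


def resolve_destination_topic(rule: str, source_topic: str) -> str:
    """Return the destination topic for one source topic."""
    return rule.replace(VARIABLE, source_topic)


def build_topic_mapping(rule: str, source_topics: list[str]) -> dict[str, str]:
    """Map every source topic to exactly one destination topic."""
    return {topic: resolve_destination_topic(rule, topic) for topic in source_topics}


if __name__ == "__main__":
    sources = ["orders", "payments"]
    # Built-in variable: each source topic keeps its own destination topic.
    print(build_topic_mapping("#{source_Topic_name}", sources))
    # Fixed topic: several source topics share one destination topic.
    print(build_topic_mapping("all_events", sources))
```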

- Configure task parameters.

Table 5 Task parameters

| Parameter | Description | Default Value |
|---|---|---|
| Execution Memory | Memory allocated for job execution. It changes automatically with the number of CPU cores. | 8 GB |
| CPU Cores | Value range: 2 to 32. For each CPU core added, 4 GB of execution memory and one concurrent request are added automatically. | 2 |
| Maximum Concurrent Requests | Maximum number of jobs that can be executed concurrently. You do not need to configure this parameter; it changes automatically with the number of CPU cores. | 1 |
| Adding custom attributes | You can add custom attributes to modify some job parameters and enable some advanced functions. For details, see Job Performance Optimization. | N/A |

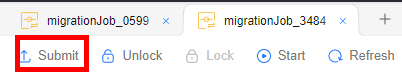
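The arithmetic in Table 5 can be written out as a small helper. From the defaults (2 cores, 8 GB, 1 concurrent request) and the rule of +4 GB and +1 concurrent request per added core, execution memory works out to 4 GB × cores and concurrency to cores - 1. The `cores_for_partitions` helper adds an assumption that is not stated in this document: that one concurrent request per source topic partition is a reasonable target when following the Entire DB sizing note in Important Notes. Both function names are hypothetical.

```python
# Sizing sketch derived from Table 5. Function names are hypothetical.
MIN_CORES, MAX_CORES = 2, 32
GB_PER_CORE = 4


def resources_for_cores(cores: int) -> tuple[int, int]:
    """Return (execution memory in GB, maximum concurrent requests)."""
    if not MIN_CORES <= cores <= MAX_CORES:
        raise ValueError(f"CPU cores must be between {MIN_CORES} and {MAX_CORES}")
    memory_gb = GB_PER_CORE * cores  # 2 cores -> 8 GB
    concurrency = cores - 1          # 2 cores -> 1 concurrent request
    return memory_gb, concurrency


def cores_for_partitions(total_partitions: int) -> int:
    """Smallest core count whose concurrency covers the partition count,
    clamped to the 2 to 32 core range.

    Assumption: one concurrent request per source topic partition.
    """
    needed = total_partitions + 1
    return max(MIN_CORES, min(MAX_CORES, needed))


if __name__ == "__main__":
    cores = cores_for_partitions(6)
    print(cores, resources_for_cores(cores))  # 7 (28, 6)
```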

- Submit and run the job.

After configuring the job, click Submit in the upper left corner to submit the job.

Figure 7 Submitting the job

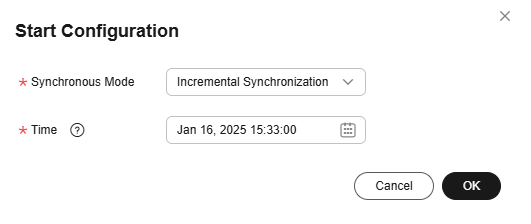

After submitting the job, click Start on the job development page. In the displayed dialog box, set required parameters and click OK.

Figure 8 Starting the job

Table 6 Parameters for starting the job

| Parameter | Description |
|---|---|
| Offset Parameter | Earliest: Data consumption starts from the earliest offset of the Kafka topic. Latest: Data consumption starts from the latest offset of the Kafka topic. Start/End time: Data consumption starts from the Kafka topic offset that corresponds to the specified time. |
| Time | This parameter is required if Offset Parameter is set to Start/End time. It specifies the start time of synchronization. NOTE: If you set a time earlier than the earliest Kafka message, data consumption starts from the earliest offset by default. |

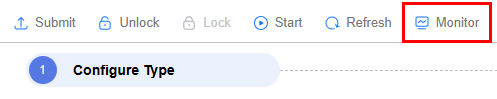
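The following sketch models how a starting offset could be resolved for one partition under each Offset Parameter option. It is illustrative only: the policy labels "earliest", "latest", and "time" and the function name are hypothetical, timestamps are assumed to be non-decreasing, and the time lookup mirrors the Kafka consumer's `offsetsForTimes` semantics (the earliest offset whose timestamp is greater than or equal to the given time). What DataArts Migration does when no message exists at or after the given time is not described here, so the sketch simply returns `None`.

```python
# Sketch of start-offset resolution for one partition. Names are hypothetical.
from bisect import bisect_left
from typing import Optional


def resolve_start_offset(
    policy: str,
    timestamps: list[int],
    first_offset: int,
    start_time_ms: Optional[int] = None,
) -> Optional[int]:
    """timestamps[i] is the timestamp (ms) of the message at offset first_offset + i.

    Assumption: timestamps are non-decreasing.
    """
    log_end_offset = first_offset + len(timestamps)
    if policy == "earliest":
        return first_offset
    if policy == "latest":
        return log_end_offset  # only messages produced after the job starts
    if policy == "time":
        if start_time_ms is None:
            raise ValueError("start_time_ms is required for the 'time' policy")
        # Earliest message at or after the start time. A time earlier than the
        # first message yields index 0, i.e. the earliest offset.
        idx = bisect_left(timestamps, start_time_ms)
        if idx == len(timestamps):
            return None  # no message at or after the start time
        return first_offset + idx
    raise ValueError(f"unknown policy: {policy}")


if __name__ == "__main__":
    ts = [1000, 2000, 3000]  # messages at offsets 10, 11, 12
    print(resolve_start_offset("time", ts, 10, start_time_ms=1500))  # 11
```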

- Monitor the job.

On the job development page, click Monitor to go to the Job Monitoring page. You can view the status and log of the job, and configure alarm rules for the job. For details, see Real-Time Migration Job O&M.

Figure 9 Monitoring the job

Performance Optimization

If the synchronization speed is too slow, rectify the fault by referring to Job Performance Optimization.
