Setting Up Scheduling for a Job

This section describes how to set up scheduling for an orchestrated job.

- If the job uses the batch processing mode, configure the scheduling type for the job. Three scheduling types are supported: Run once, Run periodically, and Event-based. For details, see Setting Up Scheduling for a Job Using the Batch Processing Mode.

- If the job uses the real-time processing mode, configure the scheduling type for each node of the job. Three scheduling types are supported: Run once, Run periodically, and Event-based. For details, see Setting Up Scheduling for Nodes of a Job Using the Real-Time Processing Mode.

Prerequisites

- You have performed the operations in Developing a Pipeline Job or Developing a Batch Processing Single-Task SQL Job.

- The job has been locked. If it is not locked, click Lock before developing it. A job you create or import is locked by you by default. For details, see the lock function.

Constraints

- Set an appropriate recurrence for each job. A maximum of five instances of a job can run concurrently. If a job instance starts later than its configured execution time, the instances in the subsequent batch are queued, and the job takes longer than expected to complete. For CDM and ETL jobs, the recurrence must be at least 5 minutes. Also adjust the recurrence based on the data volume of the job table and the update frequency of the source table.

- If you use DataArts Studio DataArts Factory to schedule a CDM migration job and configure a scheduled task for the job in DataArts Migration, both configurations take effect. To ensure unified service logic and avoid scheduling conflicts, enable job scheduling in DataArts Factory and do not configure a scheduled task for the job in DataArts Migration.
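The queueing described in the first constraint can be seen in a small model. The Python sketch below is a simplified simulation, not DataArts Studio behavior or API: it assumes that every instance of a job runs for a fixed duration, that instances beyond the five-instance limit start in first-in, first-out order, and the helpers `validate_recurrence` and `simulate_start_delays` are hypothetical names used only for illustration.

```python
import heapq

MAX_CONCURRENT_INSTANCES = 5  # per-job concurrency limit stated in Constraints
MIN_RECURRENCE_MIN = {"CDM": 5, "ETL": 5}  # minimum recurrence (minutes) for CDM and ETL jobs


def validate_recurrence(job_type: str, recurrence_min: int) -> None:
    """Hypothetical check mirroring the 5-minute minimum for CDM and ETL jobs."""
    minimum = MIN_RECURRENCE_MIN.get(job_type)
    if minimum is not None and recurrence_min < minimum:
        raise ValueError(f"{job_type} jobs need a recurrence of at least {minimum} minutes")


def simulate_start_delays(recurrence_min: int, duration_min: int, n_instances: int,
                          max_concurrent: int = MAX_CONCURRENT_INSTANCES) -> list[int]:
    """Return how many minutes each instance waits past its scheduled start time.

    Model assumptions: instance i is scheduled at i * recurrence_min, every
    instance runs for duration_min, and queued instances start in order.
    """
    running_ends: list[int] = []  # min-heap of end times of instances holding a slot
    delays = []
    for i in range(n_instances):
        scheduled = i * recurrence_min
        # Release the slots of instances that have finished by the scheduled time.
        while running_ends and running_ends[0] <= scheduled:
            heapq.heappop(running_ends)
        start = scheduled
        if len(running_ends) >= max_concurrent:
            # All slots are busy: wait for the earliest running instance to finish.
            start = heapq.heappop(running_ends)
        heapq.heappush(running_ends, start + duration_min)
        delays.append(start - scheduled)
    return delays


validate_recurrence("CDM", 5)
print(simulate_start_delays(recurrence_min=5, duration_min=20, n_instances=10))
print(simulate_start_delays(recurrence_min=5, duration_min=30, n_instances=11))
```

With a 20-minute run and a 5-minute recurrence, at most four instances overlap, so no instance waits. With a 30-minute run, six instances would need to overlap, the five-instance limit is exceeded, and in this model the wait grows from 0 to 5 and then 10 minutes, which is the "longer than expected" effect described above.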

Setting Up Scheduling for a Job Using the Batch Processing Mode

Three scheduling types are available: Run once, Run periodically, and Event-based. The procedure is as follows:

Click the Scheduling Setup tab on the right of the canvas to expand the configuration page and configure the scheduling parameters listed in Table 1.

| Parameter | Description |
|---|---|
| Scheduling Type | Scheduling type of the job. Available options include Run once, Run periodically, and Event-based. |
| Enable Dry Run | If you select this option, the job is not actually executed, and a success message is returned. |
| Task Groups | Select a configured task group. For details, see Configuring Task Groups. By default, Do not select is selected. A task group lets you control, in a fine-grained manner, the maximum number of nodes that run concurrently when a job contains multiple nodes, when a data patching task is in progress, or when a job is rerun. Example 1: The maximum number of concurrent tasks in the task group is 2, and a job has five nodes. When the job runs, only two nodes run at a time and the other nodes wait. Example 2: The maximum number of concurrent tasks in the task group is 2, and the number of concurrent periods for a PatchData job is 5. When the PatchData job runs, two PatchData job instances run and the others wait. The waiting instances are delivered normally after a period of time. Example 3: If the same task group is configured for multiple jobs and its maximum number of concurrent tasks is 2, only two jobs run at a time and the others wait. Likewise, if the same task group is configured for five job nodes in total and its maximum number of concurrent tasks is 2, two nodes run and the others wait. NOTE: For a pipeline job, you can configure a task group for each node or for the job. A task group configured for a node takes precedence over one configured for the job. |
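Example 1 for Task Groups behaves like a counting semaphore. The Python sketch below models a task group with `threading.BoundedSemaphore`. The `TaskGroup` class and `run_job` function are hypothetical and only illustrate the concurrency cap; they do not describe how DataArts Factory implements task groups.

```python
import threading
import time


class TaskGroup:
    """Hypothetical model of a task group that caps how many nodes run at once."""

    def __init__(self, max_concurrent: int):
        self._slots = threading.BoundedSemaphore(max_concurrent)
        self._lock = threading.Lock()
        self.running = 0   # nodes currently executing
        self.peak = 0      # highest number of nodes observed executing together
        self.finished: list[str] = []

    def run_node(self, node: str, work_s: float = 0.05) -> None:
        with self._slots:  # nodes beyond the limit block here, that is, they wait
            with self._lock:
                self.running += 1
                self.peak = max(self.peak, self.running)
            time.sleep(work_s)  # stand-in for the node's actual work
            with self._lock:
                self.running -= 1
                self.finished.append(node)


def run_job(nodes: list[str], group: TaskGroup) -> None:
    """Start every node of a job at once and let the task group throttle them."""
    threads = [threading.Thread(target=group.run_node, args=(n,)) for n in nodes]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


group = TaskGroup(max_concurrent=2)  # Example 1: limit of 2, job with five nodes
run_job([f"node-{i}" for i in range(1, 6)], group)
print(group.peak, len(group.finished))
```

All five nodes are started together, but the semaphore never lets more than two hold a slot, so the remaining nodes wait until a running node releases its slot.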

|

Parameter |

Description |

|---|---|

|

From and to |

The period during which a scheduling task takes effect. You can set it to today or tomorrow by clicking the time box and then Today or Tomorrow. |

|

Recurrence |

The frequency at which the scheduling task is executed, which can be: Set an appropriate value for this parameter. A maximum of five instances can be concurrently executed in a job. If the start time of a job instance is later than the configured job execution time, the job instances in the subsequent batch will be queued. As a result, the job execution costs a longer time than expected. For CDM and ETL jobs, the recurrence must be at least 5 minutes. In addition, the recurrence should be adjusted based on the data volume of the job table and the update frequency of the source table. You can modify the scheduling period of a running job.

NOTE:

DataArts Studio does not support concurrent running of PatchData instances and periodic job instances of underlying services (such as CDM and DLI). To prevent PatchData instances from affecting periodic job instances and avoid exceptions, ensure that they do not run at the same time. |

|

Scheduling Calendar |

Select a scheduling calendar. The default value is Do not use. For details about how to configure a scheduling calendar, see Configuring a Scheduling Calendar.

|

|

OBS Listening |

If you enable this function, the system automatically listens to the OBS path for new job files. If you disable this function, the system no longer listens to the OBS path. Configure the following parameters:

|

|

Dependency job |

You can select jobs that are executed periodically in different workspaces as dependency jobs. The current job starts only after the dependency jobs are executed. You can click Parse Dependency to automatically identify job dependencies.

NOTE:

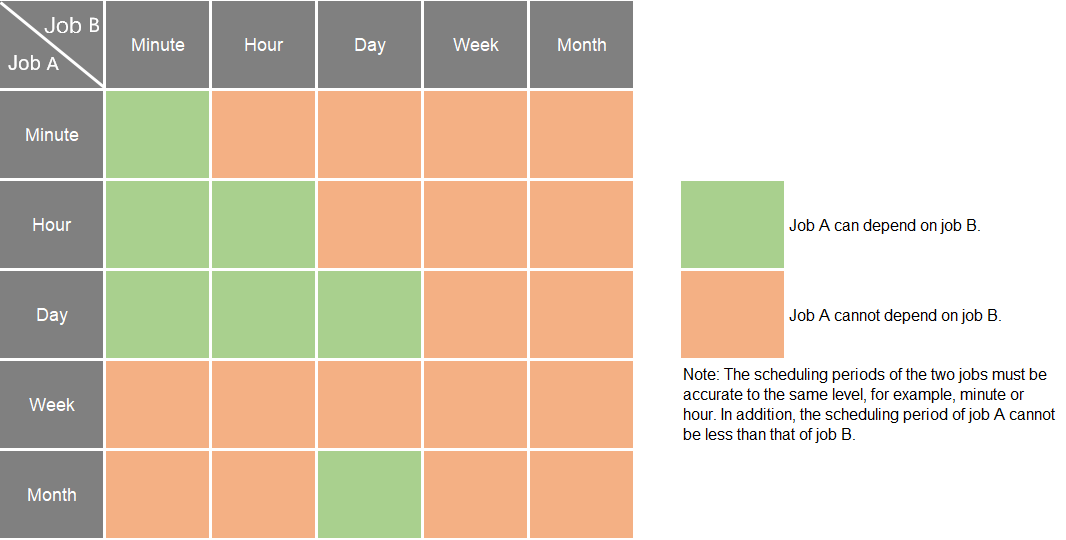

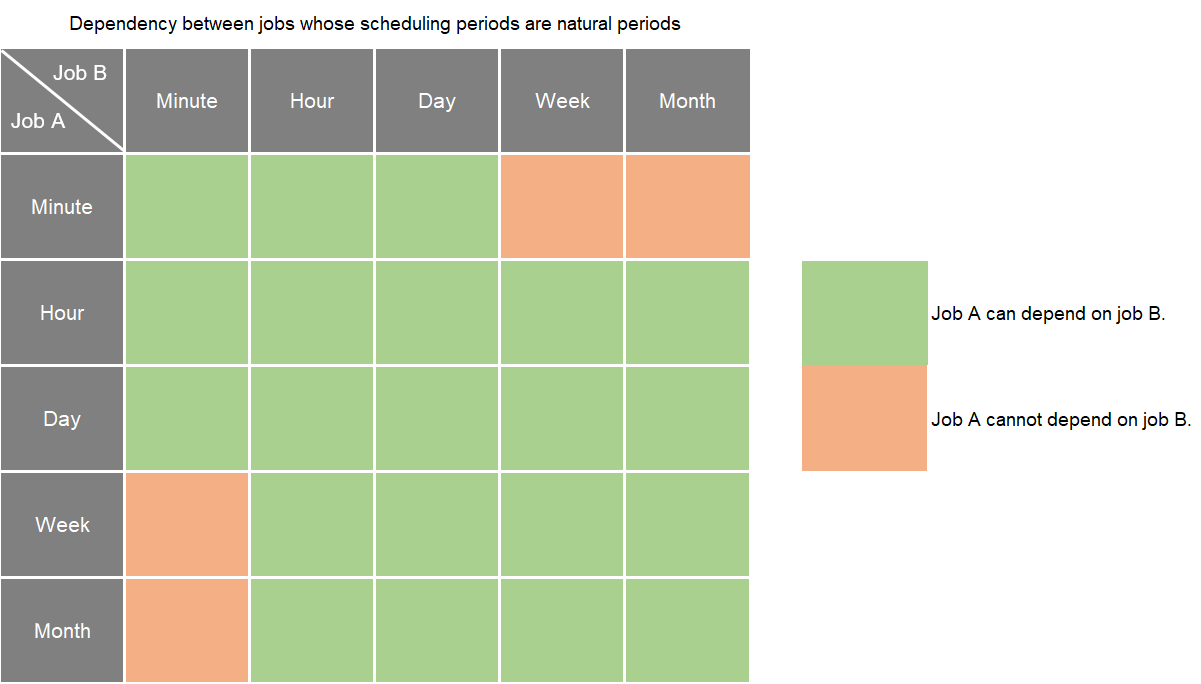

For details about job dependency rules across workspaces, see Job Dependency Rule. Currently, DataArts Factory supports two types of job dependency policies, that is, dependency between jobs whose scheduling periods are traditional periods and dependency between jobs whose scheduling periods are natural periods. You can select either of them. The scheduling periods for new DataArts Studio instances are natural periods.

Figure 1 Dependency between jobs whose scheduling periods are traditional periods

Figure 2 Dependency between jobs whose scheduling periods are natural periods

For details about the conditions for setting dependency jobs and how jobs run after dependency jobs are set, see Dependency Policies for Periodic Scheduling. |

|

Policy for Current job If Dependency job Fails |

Policy for processing the current job when one or more instances of its dependency job fail to be executed in its period.

For example, the recurrence of the current job is 1 hour and that of its dependency jobs is 5 minutes.

|

|

Run After Dependency job Ends |

If a job depends on other jobs, the job is executed only after its dependency job instances are executed within a specified time range. If the dependency job instances are not successfully executed, the current job is in waiting state. If you select this option, the system checks whether all job instances in the previous cycle have been executed before executing the current job. |

|

Dependency Job |

When configuring job dependencies, you can filter dependent jobs based on whether they are being scheduled. This prevents downstream job failures caused by upstream dependent jobs not being scheduled.

|

|

Dependency Cycle |

|

|

Cross-Cycle Dependency |

Dependency between job instances

|

|

Clear Waiting Instances |

|

|

Enable Dry Run |

If you select this option, the job will not be executed, and a success message will be returned. |

|

Task Groups |

Select a configured task group. For details, see Configuring Task Groups. Do not select is selected by default. If you select a task group, you can control the maximum number of concurrent nodes in the task group in a fine-grained manner in scenarios where a job contains multiple nodes, a data patching task is ongoing, or a job is rerunning.

NOTE:

For a pipeline job, you can configure a task group for each node or for the job. A task group configured for a node is prior to one configured for the job. |
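The example for Policy for Current job If Dependency job Fails comes down to simple arithmetic: an hourly job whose dependency runs every 5 minutes has 60 / 5 = 12 dependency instances in each of its periods. The Python sketch below lists those instances and picks out the failed ones. It assumes the dependency instances are aligned to the start of the current job's period, and `dependency_instances_in_period`, `failed_instances`, and the status strings are hypothetical. What the current job then does depends on the policy you select.

```python
from datetime import datetime, timedelta


def dependency_instances_in_period(period_start: datetime, period: timedelta,
                                   dep_recurrence: timedelta) -> list[datetime]:
    """Scheduled times of dependency-job instances in [period_start, period_start + period)."""
    times = []
    t = period_start
    while t < period_start + period:
        times.append(t)
        t += dep_recurrence
    return times


def failed_instances(times: list[datetime], status: dict[datetime, str]) -> list[datetime]:
    """Instances whose (hypothetical) status is 'failed'; missing entries count as not failed."""
    return [t for t in times if status.get(t) == "failed"]


period_start = datetime(2024, 1, 1, 9, 0)
instances = dependency_instances_in_period(period_start, timedelta(hours=1), timedelta(minutes=5))
status = {t: "success" for t in instances}
status[datetime(2024, 1, 1, 9, 35)] = "failed"  # one failed dependency instance

print(len(instances))  # 12
print(failed_instances(instances, status))  # the 09:35 instance
```

A single failed instance out of the 12 is enough for the selected failure policy to apply to the current job's 09:00 period.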

| Parameter | Description |
|---|---|
| Event Type | Type of the event that triggers the job. |
| Enable Dry Run | If you select this option, the job is not actually executed, and a success message is returned. |
| Task Groups | Select a configured task group. For details, see Configuring Task Groups. By default, Do not select is selected. A task group lets you control, in a fine-grained manner, the maximum number of nodes that run concurrently when a job contains multiple nodes, when a data patching task is in progress, or when a job is rerun. NOTE: For a pipeline job, you can configure a task group for each node or for the job. A task group configured for a node takes precedence over one configured for the job. |
| Parameters for KAFKA event-triggered jobs | |
| Connection Name | Before selecting a data connection, ensure that a Kafka data connection has been created in Management Center. |
| Topic | Topic of the messages sent to Kafka. |
| Concurrent Events | Number of jobs that can be processed concurrently. The maximum number of concurrent events is 128. |
| Event Detection Interval | Interval at which the system checks the stream for new messages. The unit can be Seconds or Minutes. |
| Access Policy | Select the location where data is to be accessed: |
| Failure Policy | Select the policy to apply after scheduling fails. |
| Enable Dry Run | If you select this option, the job is not actually executed, and a success message is returned. |
| Task Groups | Select a configured task group. For details, see Configuring Task Groups. By default, Do not select is selected. A task group lets you control, in a fine-grained manner, the maximum number of nodes that run concurrently when a job contains multiple nodes, when a data patching task is in progress, or when a job is rerun. NOTE: For a pipeline job, you can configure a task group for each node or for the job. A task group configured for a node takes precedence over one configured for the job. |

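Concurrent Events and Event Detection Interval together describe a polling loop: at each interval the system checks for new messages, and it processes at most the configured number of events at once. The Python sketch below simulates that loop with an in-memory `deque` standing in for the Kafka topic and a `ThreadPoolExecutor` bounding concurrency. `run_event_loop` and its parameters are hypothetical, and no Kafka client is involved.

```python
import time
from collections import deque
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

MAX_CONCURRENT_EVENTS = 128  # upper limit stated for batch event-based jobs


def run_event_loop(stream: deque, handle: Callable[[str], str], concurrent_events: int = 4,
                   detection_interval_s: float = 0.01, rounds: int = 3) -> list[str]:
    """Poll `stream` once per detection interval and handle new messages with bounded concurrency."""
    if not 1 <= concurrent_events <= MAX_CONCURRENT_EVENTS:
        raise ValueError(f"concurrent_events must be between 1 and {MAX_CONCURRENT_EVENTS}")
    results: list[str] = []
    with ThreadPoolExecutor(max_workers=concurrent_events) as pool:
        for _ in range(rounds):
            # Drain every message that arrived since the last check.
            batch = []
            while stream:
                batch.append(stream.popleft())
            # At most `concurrent_events` handlers run at the same time; results keep message order.
            results.extend(pool.map(handle, batch))
            time.sleep(detection_interval_s)  # wait for the next detection cycle
    return results


messages = deque(f"msg-{i}" for i in range(6))
print(run_event_loop(messages, handle=lambda m: f"job run for {m}"))
```

For nodes of a real-time job, Table 4 gives a limit of 10 concurrent events instead of 128, so the same sketch would use 10 as its upper bound.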

Setting Up Scheduling for Nodes of a Job Using the Real-Time Processing Mode

Three scheduling types are available: Run once, Run periodically, and Event-based. The procedure is as follows:

Select a node. On the node development page, click the Scheduling Parameter Setup tab. On the displayed page, configure the parameters listed in Table 4.

| Parameter | Description |
|---|---|
| Scheduling Type | Scheduling type of the job. Available options include Run once, Run periodically, and Event-based. |
| Parameters displayed when Scheduling Type is Run periodically | |
| From and to | The period during which the scheduling task takes effect. |
| Recurrence | The frequency at which the scheduling task is executed. For CDM and ETL jobs, the recurrence must be at least 5 minutes. Also adjust the recurrence based on the data volume of the job table and the update frequency of the source table. You can modify the scheduling period of a running job. |
| Cross-Cycle Dependency | Dependency between job instances |
| Parameters displayed when Scheduling Type is Event-based | |
| Event Type | Type of the event that triggers the job. |
| Connection Name | Before selecting a data connection, ensure that a Kafka data connection has been created in Management Center. This parameter is mandatory only when Event Type is set to KAFKA. |
| Topic | Topic of the messages sent to Kafka. This parameter is mandatory only when Event Type is set to KAFKA. |
| Consumer Group | A scalable and fault-tolerant group of consumers in Kafka. Consumers in a group share the same group ID and cooperate to consume all partitions of the subscribed topics. Each partition of a topic is consumed by only one consumer in the group. NOTE: If you select KAFKA for Event Type, the consumer group ID is automatically displayed. You can also change it manually. |
| Concurrent Events | Number of jobs that can be processed concurrently. The maximum number of concurrent events is 10. |
| Event Detection Interval | Interval at which the system checks the stream for new messages. The unit can be Seconds or Minutes. |
| Access Policy | |
| Failure Policy | Select the policy to apply after scheduling fails. |
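The Consumer Group rule that each partition is consumed by only one consumer in the group can be illustrated with a round-robin assignment. The Python sketch below is only an illustration: Kafka performs the actual assignment with the partition assignor configured on the consumers (for example, range, round-robin, or cooperative-sticky), and `assign_partitions` is a hypothetical helper.

```python
def assign_partitions(partitions: list[int], consumers: list[str]) -> dict[str, list[int]]:
    """Round-robin assignment: each partition goes to exactly one consumer in the group."""
    if not consumers:
        raise ValueError("a consumer group needs at least one consumer")
    assignment: dict[str, list[int]] = {c: [] for c in consumers}
    for i, partition in enumerate(sorted(partitions)):
        assignment[consumers[i % len(consumers)]].append(partition)
    return assignment


print(assign_partitions([0, 1, 2, 3, 4], ["consumer-a", "consumer-b"]))
# {'consumer-a': [0, 2, 4], 'consumer-b': [1, 3]}
print(assign_partitions([0, 1], ["consumer-a", "consumer-b", "consumer-c"]))
# {'consumer-a': [0], 'consumer-b': [1], 'consumer-c': []}
```

The second call shows that consumers beyond the number of partitions stay idle, because a partition is never shared by two consumers in the same group.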
