DWS_2000000006 Node Data Disk Usage Exceeds the Threshold

Description

DWS collects the usage of all disks on each node in a cluster every 30 seconds.

- If the maximum disk usage in the last 10 minutes (configurable) exceeds 80% (configurable), a major alarm is reported. The major alarm is cleared when the average disk usage falls below 75% (the alarm threshold minus 5%).

- If the maximum disk usage in the last 10 minutes (configurable) exceeds 85% (configurable), a critical alarm is reported. The critical alarm is cleared when the average disk usage falls below 80% (the alarm threshold minus 5%).

If the maximum disk usage remains above the alarm threshold, the system reports the alarm again after 24 hours (configurable).
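The raise and clear rules above can be modeled as a small state machine for each alarm level. The sketch below is a simplified, hypothetical model and not the DWS implementation. It assumes the following:

- The clearing average is computed over the same sliding 10-minute window as the maximum.
- The 24-hour repeat notification is not modeled.
- The class name `DiskUsageAlarm` is illustrative only.

```python
from collections import deque
from statistics import mean

SAMPLE_INTERVAL_S = 30                                   # DWS collects disk usage every 30 seconds
DETECTION_PERIOD_S = 10 * 60                             # default detection period: 10 minutes
WINDOW_SIZE = DETECTION_PERIOD_S // SAMPLE_INTERVAL_S    # 20 samples per window
CLEAR_MARGIN = 5.0                                       # cleared below (threshold - 5) percent


class DiskUsageAlarm:
    """Hypothetical model of one alarm level (major or critical) for one disk."""

    def __init__(self, threshold: float, window_size: int = WINDOW_SIZE):
        self.threshold = threshold
        # Sliding window: the oldest sample is dropped once the window is full.
        self.samples = deque(maxlen=window_size)
        self.active = False

    def add_sample(self, usage_percent: float) -> bool:
        """Record one usage sample and return whether the alarm is active."""
        self.samples.append(usage_percent)
        if not self.active and max(self.samples) > self.threshold:
            # Raise: the maximum usage in the window exceeds the threshold.
            self.active = True
        elif self.active and mean(self.samples) < self.threshold - CLEAR_MARGIN:
            # Clear: the average usage in the window is below threshold minus 5%.
            self.active = False
        return self.active


major = DiskUsageAlarm(threshold=80.0)
critical = DiskUsageAlarm(threshold=85.0)
for usage in (70.0, 81.0, 74.0, 60.0):
    print(usage, major.add_sample(usage), critical.add_sample(usage))
```

Raising is based on the maximum, while clearing is based on the average. As a result, a single spike can raise the alarm, but the alarm clears only after lower readings pull the window average down.

This simplified model has a known limitation. A cleared alarm can be raised again on the next sample if the spike is still inside the window. This section does not describe how DWS handles that case.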

Attributes

| Alarm ID | Alarm Category | Alarm Severity | Alarm Type | Service Type | Auto Cleared |
|---|---|---|---|---|---|
| DWS_2000000006 | Tenant plane | Critical: > 85%; Major: > 80% | Operation alarm | DWS | Yes |

Parameters

| Category | Name | Description |
|---|---|---|
| Location information | Name | Node Data Disk Usage Exceeds the Threshold |
| | Type | Operation alarm |
| | Generation time | Time when the alarm is generated |
| Other information | Cluster ID | Cluster details such as resourceId and domain_id |

Impact on the System

If the cluster data volume or the amount of temporary data spilled to disk keeps growing and the usage of any single disk exceeds 90%, the cluster becomes read-only and customer services are affected.

Possible Causes

- The service data volume increases rapidly, and the cluster disk capacity configuration cannot meet service requirements.

- Dirty data is not cleared in a timely manner.

- Some tables are skewed, that is, their data is unevenly distributed across nodes.

Handling Procedure

- Check the disk usage of each node.

- Log in to the DWS console.

- On the Alarms page, select the current cluster from the cluster drop-down list in the upper right corner and view the cluster's alarms from the last seven days. Use the location information to identify the node and disk for which the alarm was generated.

- Choose Dedicated Clusters > Clusters, locate the row that contains the cluster for which the alarm is generated, and click Monitoring Panel in the Operation column.

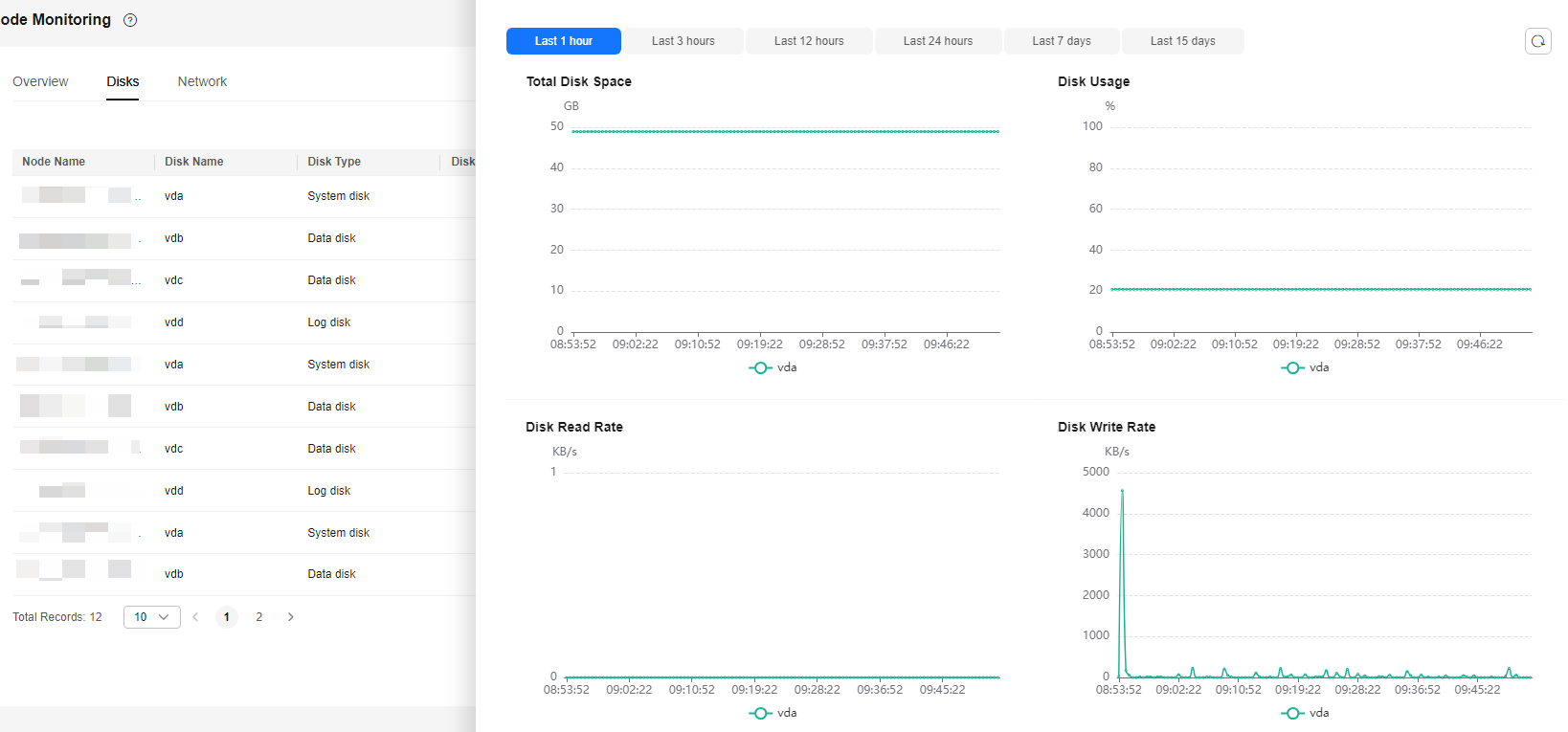

- Choose Monitoring > Node Monitoring > Disks to view the usage of each disk on the cluster nodes. To view the historical monitoring data of a disk on a node, click the icon on the right to view the disk performance metrics for the last 1, 3, 12, or 24 hours.


- If the data disk usage frequently rises and returns to normal within a short period, the increase is a temporary spike caused by service execution. In this case, adjust the alarm threshold as described in "Check whether the alarm configuration is proper" to reduce the number of reported alarms.

- If the usage of a data disk exceeds 90%, the cluster becomes read-only and write operations fail with the error "cannot execute INSERT in a read-only transaction". In this case, delete unnecessary data as described in "Check whether the cluster is in the read-only state".

- If the usage of more than half of the data disks in the cluster exceeds 70%, the cluster holds a large volume of data. In this case, clear data or perform Disk Capacity Expansion as described in "Check whether the usage of more than half of the data disks in the cluster exceeds 70%".

- If the highest and lowest data disk usage in the cluster differ by more than 10%, handle data skew as described in "Check whether the difference between the highest and lowest data disk usages in the cluster exceeds 10%".
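The checks in this step can be summarized as a simple decision helper. This is a hypothetical sketch, and it makes the following assumptions:

- The function name and the step labels are illustrative.
- The input is a list of the current data disk usage percentages for the cluster.
- The 10% difference is treated as percentage points.

Detecting short-lived spikes requires historical data, so that case is not covered.

```python
from typing import List

READ_ONLY_LIMIT = 90.0   # any data disk above this triggers read-only
HIGH_USAGE = 70.0        # "more than half of the data disks exceed 70%"
MAX_SPREAD = 10.0        # highest minus lowest usage, in percentage points (assumption)


def suggest_steps(disk_usages: List[float]) -> List[str]:
    """Map current data disk usages (percent) to the handling steps they point to."""
    if not disk_usages:
        return []
    steps = []
    if max(disk_usages) > READ_ONLY_LIMIT:
        steps.append("read-only: delete unnecessary data")
    high = sum(1 for u in disk_usages if u > HIGH_USAGE)
    if high > len(disk_usages) / 2:
        steps.append("large data volume: VACUUM FULL or expand capacity")
    if max(disk_usages) - min(disk_usages) > MAX_SPREAD:
        steps.append("data skew: check skewed tables")
    return steps


print(suggest_steps([92.0, 71.0, 75.0, 60.0]))
```

Several conditions can hold at the same time, so the helper returns every matching step rather than stopping at the first one.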

- Check whether the alarm configuration is proper.

- Return to the DWS console, choose Monitoring > Alarm and click View Alarm Rule.

- Locate the row that contains Node Data Disk Usage Exceeds the Threshold and click Modify in the Operation column. On the Modifying an Alarm Rule page, view the configuration parameters of the current alarm.

- Adjust the alarm threshold and detection period. A higher threshold and a longer detection period make the alarm less sensitive. For details about the GUI configuration, see Alarm Rules.

- If the data disks have a large capacity, consider raising the threshold based on the historical disk monitoring metrics. Otherwise, continue with the remaining steps. If the problem persists, perform Disk Capacity Expansion.
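Before changing the threshold or detection period, it can help to replay historical usage samples against candidate settings. The sketch below is hypothetical and approximates the documented rule only loosely. It makes the following assumptions:

- You have exported one disk's usage samples at the 30-second collection interval.
- An alarm episode starts whenever the maximum over the sliding detection window begins to exceed the threshold.
- Clearing and the 24-hour repeat interval are ignored.

```python
from collections import deque
from typing import Iterable

SAMPLE_INTERVAL_S = 30  # DWS collects disk usage every 30 seconds


def count_breach_episodes(samples: Iterable[float], threshold: float,
                          period_minutes: int = 10) -> int:
    """Count how often the sliding-window maximum starts exceeding the threshold."""
    window = deque(maxlen=max(1, period_minutes * 60 // SAMPLE_INTERVAL_S))
    episodes = 0
    in_breach = False
    for usage in samples:
        window.append(usage)
        breach = max(window) > threshold
        if breach and not in_breach:
            episodes += 1  # a new breach episode begins
        in_breach = breach
    return episodes


history = [70.0, 82.0, 70.0, 70.0, 83.0, 70.0]  # hypothetical samples
for threshold in (80.0, 82.5, 85.0):
    print(threshold, count_breach_episodes(history, threshold, period_minutes=1))
```

With this model, raising the threshold filters out smaller peaks. A longer detection period merges spikes that occur close together into a single episode.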

- Check whether the cluster is in the read-only state.

- If the cluster is in the read-only state, stop write tasks to prevent data loss caused by disk space exhaustion.

- Return to the DWS console and choose Dedicated Clusters > Clusters. In the row of the abnormal cluster whose cluster status is Read-only, click .

- In the displayed dialog box, confirm the information and click OK to cancel the read-only state for the cluster. For details, see Removing the Read-only Status.

- After the read-only status is removed, connect to the database with a client and run DROP or TRUNCATE to delete unnecessary data.

- Check whether the usage of more than half of the data disks in the cluster exceeds 70%.

- Run the VACUUM FULL command to clear data. For details, see Solution to High Disk Usage and Cluster Read-Only. Connect to the database, run the following SQL statement to query tables whose dirty page rate exceeds 30%, and sort the tables by size in descending order:


SELECT schemaname AS schema, relname AS table_name, n_live_tup AS analyze_count, pg_size_pretty(pg_table_size(relid)) AS table_size, dirty_page_rate FROM PGXC_GET_STAT_ALL_TABLES WHERE schemaname NOT IN ('pg_toast', 'pg_catalog', 'information_schema', 'cstore', 'pmk') AND dirty_page_rate > 30 ORDER BY pg_table_size(relid) DESC, dirty_page_rate DESC;

The following is an example of possible output, in which one table has a high dirty page rate:

| schema | table_name | analyze_count | table_size | dirty_page_rate |
|---|---|---|---|---|
| public | test_table | 4333 | 656 KB | 71.11 |

(1 row)

- If the query returns any rows, run VACUUM FULL on the tables with a high dirty page rate one at a time (serially):

VACUUM FULL ANALYZE schema.table_name;

VACUUM FULL needs extra space for defragmentation, equal to Table size × (1 − Dirty page rate). Disk usage therefore rises temporarily and then drops. Before running VACUUM FULL, make sure the cluster has enough free space that this temporary increase will not trigger read-only mode. Start with small tables. VACUUM FULL also holds an exclusive lock that blocks all access to the table being processed, so schedule it carefully to avoid affecting services.

- If the query returns no rows, no table has a high dirty page rate. In this case, expand the node or disk capacity of the cluster according to the data warehouse type. This prevents further disk usage growth from triggering read-only mode and interrupting services.

- To scale out a storage-compute coupled data warehouse with cloud SSDs, see Disk Capacity Expansion of an EVS Cluster.

- To scale out a storage-compute coupled data warehouse with local SSDs, see Scaling Out a Cluster.

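The VACUUM FULL space estimate in this step, Table size × (1 − Dirty page rate), can be combined with the advice to start from small tables in a simple planning helper. This is a hypothetical sketch, and it makes the following assumptions:

- The `Candidate` type and the helper names are illustrative.
- Table sizes are in bytes, and dirty page rates are in percent, as in the dirty-page query output.
- `headroom_bytes` is the space that can still be used before any disk reaches the 90% read-only limit.

The estimate is treated as a single cluster-wide figure, so use it only as a rough check.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Candidate:
    schema: str
    table: str
    size_bytes: int          # pg_table_size() of the table
    dirty_page_rate: float   # percent, for example 71.11


def extra_space_bytes(c: Candidate) -> float:
    """Estimated extra space VACUUM FULL needs: size x (1 - dirty page rate)."""
    return c.size_bytes * (1 - c.dirty_page_rate / 100)


def plan_vacuum(candidates: List[Candidate],
                headroom_bytes: float) -> List[Tuple[str, bool]]:
    """Order tables smallest first and flag whether each estimate fits the headroom."""
    plan = []
    for c in sorted(candidates, key=lambda x: x.size_bytes):
        fits = extra_space_bytes(c) <= headroom_bytes
        plan.append((f"VACUUM FULL ANALYZE {c.schema}.{c.table};", fits))
    return plan


tables = [
    Candidate("public", "big_table", 10_000_000, 20.0),         # hypothetical table
    Candidate("public", "test_table", 656 * 1024, 71.11),
]
for statement, fits in plan_vacuum(tables, headroom_bytes=1_000_000):
    print(statement, "OK" if fits else "insufficient headroom")
```

The headroom is not updated between tables, which makes the check conservative. In practice, each completed VACUUM FULL frees space. Run the generated statements one at a time, at times when the exclusive lock is acceptable. Identifiers that need quoting are not handled.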

- Check whether the difference between the highest and lowest data disk usages in the cluster exceeds 10%.

- If the data disk usage differs greatly, connect to the database and run the following SQL statement to check whether any tables in the cluster are skewed:

SELECT schemaname, tablename, pg_size_pretty(totalsize), skewratio FROM pgxc_get_table_skewness WHERE skewratio > 0.05 ORDER BY totalsize DESC;

The following is an example of possible output:

| schemaname | tablename | pg_size_pretty | skewratio |
|---|---|---|---|
| scheduler | workload_collection | 428 MB | .500 |
| public | test_table | 672 KB | .429 |
| public | tbl_col | 104 KB | .154 |
| scheduler | scheduler_storage | 32 KB | .250 |

(4 rows)

- If the query returns rows, choose a different distribution column for the severely skewed tables based on their size and skew ratio. For version 8.1.0 and later, use the ALTER TABLE syntax to change the distribution column. For earlier versions, see How Do I Adjust Distribution Columns?


Alarm Clearance

After the average disk usage falls below the alarm threshold minus 5%, the alarm is automatically cleared.
