Viewing Table and Database Information

Querying Table Information

- Use the PG_TABLES system catalog to query the information about all tables in a database.

1SELECT * FROM PG_TABLES;

- Use the DBA_TAB_COLUMNS system view to query the table columns, data types, and comments of all tables in a specified schema.

Example: Query information about all table columns in a specified schema.

1SELECT * FROM DBA_TAB_COLUMNS WHERE schema = 'public';

- Querying the table structure using \d+ command of the gsql tool.

Example: Create a table customer_t1 and insert data into the table.

1 2 3 4 5 6 7 8 9

CREATE TABLE customer_t1 ( c_customer_sk integer, c_customer_id char(5), c_first_name char(6), c_last_name char(8) ) with (orientation = column,compression=middle) distribute by hash (c_last_name);

1 2 3 4

INSERT INTO customer_t1 (c_customer_sk, c_customer_id, c_first_name) VALUES (6885, 'map', 'Peter'), (4321, 'river', 'Lily'), (9527, 'world', 'James');

Query the table structure. If no schema is specified when you create a table, the schema of the table defaults to public.

1 2 3 4 5 6 7 8 9 10 11 12

\d+ customer_t1; Table "public.customer_t1" Column | Type | Modifiers | Storage | Stats target | Description ---------------+--------------+-----------+----------+--------------+------------- c_customer_sk | integer | | plain | | c_customer_id | character(5) | | extended | | c_first_name | character(6) | | extended | | c_last_name | character(8) | | extended | | Has OIDs: no Distribute By: HASH(c_last_name) Location Nodes: ALL DATANODES Options: orientation=column, compression=middle, colversion=2.0, enable_delta=false

The options may vary in different versions but the difference does not affect services. The options here are for reference only. The actual options are subject to the version.

- Use pg_get_tabledef to query the table definition.

1 2 3 4 5 6 7 8 9 10 11 12 13 14

SELECT * FROM PG_GET_TABLEDEF('customer_t1'); pg_get_tabledef ----------------------------------------------------------------------------------- SET search_path = tpchobs; + CREATE TABLE customer_t1 ( + c_customer_sk integer, + c_customer_id character(5), + c_first_name character(6), + c_last_name character(8) + ) + WITH (orientation=column, compression=middle, colversion=2.0, enable_delta=false)+ DISTRIBUTE BY HASH(c_last_name) + TO GROUP group_version1; (1 row)

- Querying all data in customer_t1

1 2 3 4 5 6 7

SELECT * FROM customer_t1; c_customer_sk | c_customer_id | c_first_name | c_last_name ---------------+---------------+--------------+------------- 6885 | map | Peter | 4321 | river | Lily | 9527 | world | James | (3 rows)

- Querying all data of a column in customer_t1 using SELECT

1 2 3 4 5 6 7

SELECT c_customer_sk FROM customer_t1; c_customer_sk --------------- 6885 4321 9527 (3 rows)

- Check whether a table has been analyzed. The time when the table was analyzed will be returned. If nothing is returned, it indicates that the table has not been analyzed.

1SELECT pg_stat_get_last_analyze_time(oid),relname FROM pg_class where relkind='r';

Query the time when the public table was analyzed.

1 2 3 4 5 6 7 8 9 10 11 12 13

SELECT pg_stat_get_last_analyze_time(c.oid),c.relname FROM pg_class c LEFT JOIN pg_namespace n ON c.relnamespace = n.oid WHERE c.relkind='r' AND n.nspname='public'; pg_stat_get_last_analyze_time | relname -------------------------------+---------------------- 2022-05-17 07:48:26.923782+00 | warehouse_t19 2022-05-17 07:48:26.964512+00 | emp 2022-05-17 07:48:27.016709+00 | test_trigger_src_tbl 2022-05-17 07:48:27.045385+00 | customer 2022-05-17 07:48:27.062486+00 | warehouse_t1 2022-05-17 07:48:27.114884+00 | customer_t1 2022-05-17 07:48:27.172256+00 | product_info_input 2022-05-17 07:48:27.197014+00 | tt1 2022-05-17 07:48:27.212906+00 | timezone_test (9 rows)

- Quickly query the column information of a table. If a view in information_schema has a large number of objects in the database, it takes a long time to return the result. You can run the following SQL statement to quickly query the column information of one or more tables:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35

SELECT /*+ set (enable_hashjoin off) */T.table_schema AS tableschema, T.TABLE_NAME AS tablename, T.dtd_identifier AS srcAttrId, COLUMN_NAME AS fieldName, 'N' AS isPrimaryKey, nvl ( nvl ( T.character_maximum_length, T.numeric_precision ), 0 ) AS fieldLength, T.udt_name AS fieldType from ( SELECT /*+ indexscan(co) indexscan(nco) indexscan(a) indexscan(t) leading((nc c a)) leading((co nco)) indexscan(bt) indexscan(nt) */ nc.nspname AS table_schema, c.relname AS table_name, a.attname AS column_name, information_schema._pg_char_max_length(information_schema._pg_truetypid(a.*, t.*), information_schema._pg_truetypmod(a.*, t.*))::information_schema.cardinal_number AS character_maximum_length, information_schema._pg_numeric_precision(information_schema._pg_truetypid(a.*, t.*), information_schema._pg_truetypmod(a.*, t.*))::information_schema.cardinal_number AS numeric_precision, COALESCE(bt.typname, t.typname)::information_schema.sql_identifier AS udt_name, a.attnum AS dtd_identifier FROM pg_attribute a LEFT JOIN pg_attrdef ad ON a.attrelid = ad.adrelid AND a.attnum = ad.adnum JOIN (pg_class c JOIN pg_namespace nc ON c.relnamespace = nc.oid) ON a.attrelid = c.oid JOIN (pg_type t JOIN pg_namespace nt ON t.typnamespace = nt.oid) ON a.atttypid = t.oid LEFT JOIN (pg_type bt JOIN pg_namespace nbt ON bt.typnamespace = nbt.oid) ON t.typtype = 'd'::"char" AND t.typbasetype = bt.oid LEFT JOIN (pg_collation co JOIN pg_namespace nco ON co.collnamespace = nco.oid) ON a.attcollation = co.oid AND (nco.nspname <> 'pg_catalog'::name OR co.collname <> 'default'::name) WHERE NOT pg_is_other_temp_schema(nc.oid) AND a.attnum > 0 AND NOT a.attisdropped AND (c.relkind = ANY (ARRAY['r'::"char", 'v'::"char", 'f'::"char"])) AND (pg_has_role(c.relowner, 'USAGE'::text) OR has_column_privilege(c.oid, a.attnum, 'SELECT, INSERT, UPDATE, REFERENCES'::text)) ) t WHERE 1 = 1 AND UPPER ( T.TABLE_NAME ) <> 'DIS_USER_DATARIGHT_IF_SPLIT_T' AND UPPER ( T.TABLE_NAME ) NOT LIKE'DIS_TMP_%' AND UPPER ( T.COLUMN_NAME ) <> '_DISAPP_AUTO_ID_' AND ( ( T.TABLE_NAME ), ( T.table_schema ) ) IN ( ( lower ( 'table_name' )::name, lower ( 'schema_name' )::name ) );

Quickly query the column information of the promotion table.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35

SELECT /*+ set (enable_hashjoin off) */T.table_schema AS tableschema, T.TABLE_NAME AS tablename, T.dtd_identifier AS srcAttrId, COLUMN_NAME AS fieldName, 'N' AS isPrimaryKey, nvl ( nvl ( T.character_maximum_length, T.numeric_precision ), 0 ) AS fieldLength, T.udt_name AS fieldType from ( SELECT /*+ indexscan(co) indexscan(nco) indexscan(a) indexscan(t) leading((nc c a)) leading((co nco)) indexscan(bt) indexscan(nt) */ nc.nspname AS table_schema, c.relname AS table_name, a.attname AS column_name, information_schema._pg_char_max_length(information_schema._pg_truetypid(a.*, t.*), information_schema._pg_truetypmod(a.*, t.*))::information_schema.cardinal_number AS character_maximum_length, information_schema._pg_numeric_precision(information_schema._pg_truetypid(a.*, t.*), information_schema._pg_truetypmod(a.*, t.*))::information_schema.cardinal_number AS numeric_precision, COALESCE(bt.typname, t.typname)::information_schema.sql_identifier AS udt_name, a.attnum AS dtd_identifier FROM pg_attribute a LEFT JOIN pg_attrdef ad ON a.attrelid = ad.adrelid AND a.attnum = ad.adnum JOIN (pg_class c JOIN pg_namespace nc ON c.relnamespace = nc.oid) ON a.attrelid = c.oid JOIN (pg_type t JOIN pg_namespace nt ON t.typnamespace = nt.oid) ON a.atttypid = t.oid LEFT JOIN (pg_type bt JOIN pg_namespace nbt ON bt.typnamespace = nbt.oid) ON t.typtype = 'd'::"char" AND t.typbasetype = bt.oid LEFT JOIN (pg_collation co JOIN pg_namespace nco ON co.collnamespace = nco.oid) ON a.attcollation = co.oid AND (nco.nspname <> 'pg_catalog'::name OR co.collname <> 'default'::name) WHERE NOT pg_is_other_temp_schema(nc.oid) AND a.attnum > 0 AND NOT a.attisdropped AND (c.relkind = ANY (ARRAY['r'::"char", 'v'::"char", 'f'::"char"])) AND (pg_has_role(c.relowner, 'USAGE'::text) OR has_column_privilege(c.oid, a.attnum, 'SELECT, INSERT, UPDATE, REFERENCES'::text)) ) t WHERE 1 = 1 AND UPPER ( T.TABLE_NAME ) <> 'DIS_USER_DATARIGHT_IF_SPLIT_T' AND UPPER ( T.TABLE_NAME ) NOT LIKE'DIS_TMP_%' AND UPPER ( T.COLUMN_NAME ) <> '_DISAPP_AUTO_ID_' AND ( ( T.TABLE_NAME ), ( T.table_schema ) ) IN ( ( lower ( 'promotion' )::name, lower ( 'public' )::name ) );

- Obtain the table definition by querying audit logs.

Use the pgxc_query_audit function to query audit logs of all CNs. The syntax is as follows:

1pgxc_query_audit(timestamptz startime,timestamptz endtime)

Query the audit records of multiple objects.

1 2

SET audit_object_name_format TO 'all'; SELECT object_name,result,operation_type,command_text FROM pgxc_query_audit('2024-05-26 8:00:00','2024-05-26 22:55:00') where command_text like '%student%';

Querying the Distribution Key of a Table

- Use the PG_GET_TABLEDEF function to query the distribution key of a table (for example, customer_t1). In the command output, HASH(c_last_name) indicates that the distribution mode of the table is HASH and the distribution key is c_last_name.

1 2 3 4 5 6 7 8 9 10 11 12 13 14

SELECT * FROM PG_GET_TABLEDEF('customer_t1'); pg_get_tabledef ----------------------------------------------------------------------------------- SET search_path = tpchobs; + CREATE TABLE customer_t1 ( + c_customer_sk integer, + c_customer_id character(5), + c_first_name character(6), + c_last_name character(8) + ) + WITH (orientation=column, compression=middle, colversion=2.0, enable_delta=false)+ DISTRIBUTE BY HASH(c_last_name) + TO GROUP group_version1; (1 row)

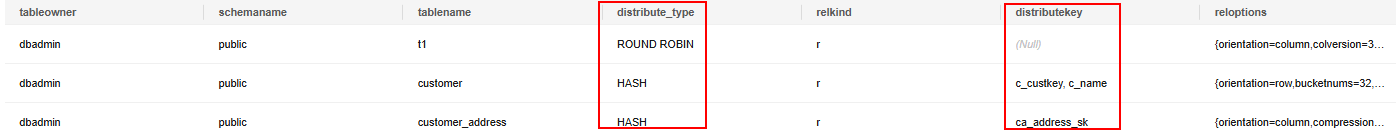

- Use the following SQL statement to query the distribution mode and distribution key of multiple tables in batches. Replace dbadmin at the end of the statement with the actual table owner. The following example shows how to query the distribution mode and distribution key of all tables created by dbadmin.

distribute_type indicates the distribution mode, for example, HASH. distributekey indicates the distribution key, for example, ca_address_sk.

1 2 3 4 5 6 7 8 9 10 11 12 13

SELECT pg_catalog.pg_get_userbyid(pc.relowner) AS tableowner, pn.nspname AS schemaname, pc.relname AS tablename, CASE pxc.pclocatortype WHEN 'N' THEN 'ROUND ROBIN' WHEN 'R' THEN 'REPLICATION' WHEN 'H' THEN 'HASH' WHEN 'M' THEN 'MODULO' END AS distribute_type, pc.relkind, getdistributekey(pxc.pcrelid) AS distributekey, pc.reloptions FROM pgxc_class pxc, pg_class pc,pg_namespace pn WHERE pxc.pcrelid = pc.oid AND pn.oid=pc.relnamespace AND tableowner='dbadmin';

Querying the Table Size

- Querying the total size of a table (indexes and data included)

1SELECT pg_size_pretty(pg_total_relation_size('<schemaname>.<tablename>'));

Example:

First, create an index on customer_t1.

1CREATE INDEX index1 ON customer_t1 USING btree(c_customer_sk);

Then, query the size of table customer_t1 of public.

1 2 3 4 5

SELECT pg_size_pretty(pg_total_relation_size('public.customer_t1')); pg_size_pretty ---------------- 264 kB (1 row)

- Querying the size of a table (indexes excluded)

1SELECT pg_size_pretty(pg_relation_size('<schemaname>.<tablename>'));

Example: Query the size of table customer_t1 of public.1 2 3 4 5

SELECT pg_size_pretty(pg_relation_size('public.customer_t1')); pg_size_pretty ---------------- 208 kB (1 row)

- Query all the tables, ranked by their occupied space.

1 2 3

SELECT table_schema || '.' || table_name AS table_full_name, pg_size_pretty(pg_total_relation_size('"' || table_schema || '"."' || table_name || '"')) AS size FROM information_schema.tables ORDER BY pg_total_relation_size('"' || table_schema || '"."' || table_name || '"') DESC limit xx;

Example 1: Query the 15 tables that occupy the most space.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21

SELECT table_schema || '.' || table_name AS table_full_name, pg_size_pretty(pg_total_relation_size('"' || table_schema || '"."' || table_name || '"')) AS size FROM information_schema.tables ORDER BY pg_total_relation_size('"' || table_schema || '"."' || table_name || '"') DESC limit 15; table_full_name | size ---------------------------+--------- pg_catalog.pg_attribute | 2048 KB pg_catalog.pg_rewrite | 1888 KB pg_catalog.pg_depend | 1464 KB pg_catalog.pg_proc | 1464 KB pg_catalog.pg_class | 512 KB pg_catalog.pg_description | 504 KB pg_catalog.pg_collation | 360 KB pg_catalog.pg_statistic | 352 KB pg_catalog.pg_type | 344 KB pg_catalog.pg_operator | 224 KB pg_catalog.pg_amop | 208 KB public.tt1 | 160 KB pg_catalog.pg_amproc | 120 KB pg_catalog.pg_index | 120 KB pg_catalog.pg_constraint | 112 KB (15 rows)

Example 2: Query the top 20 tables with the largest space usage in the public schema.1 2 3 4 5 6 7 8 9 10 11 12 13 14

SELECT table_schema || '.' || table_name AS table_full_name, pg_size_pretty(pg_total_relation_size('"' || table_schema || '"."' || table_name || '"')) AS size FROM information_schema.tables where table_schema='public' ORDER BY pg_total_relation_size('"' || table_schema || '"."' || table_name || '"') DESC limit 20; table_full_name | size -----------------------------+--------- public.tt1 | 160 KB public.product_info_input | 112 KB public.customer_t1 | 96 KB public.warehouse_t19 | 48 KB public.emp | 32 KB public.customer | 0 bytes public.test_trigger_src_tbl | 0 bytes public.warehouse_t1 | 0 bytes (8 rows)

Quickly Querying the Space Occupied by All Tables in the Database

In a large cluster (8.1.3 or later) with a large amount of data (more than 1000 tables), you are advised to use the pgxc_wlm_table_distribution_skewness view to query all tables in the database. This view can be used to query the tablespace usage and data skew in the database. The unit of total_size and avg_size is byte.

1 2 3 4 5 6 7 |

SELECT *, pg_size_pretty(total_size) as tableSize FROM pgxc_wlm_table_distribution_skewness ORDER BY total_size desc; schema_name | table_name | total_size | avg_size | max_percent | min_percent | skew_percent | tablesize --------------------+---------------------------------------------------+------------+-----------+-------------+-------------+--------------+----------- public | history_tbs_test_row_1 | 804347904 | 134057984 | 18.02 | 15.63 | 7.53 | 767 MB public | history_tbs_test_row_3 | 402096128 | 67016021 | 18.30 | 15.60 | 8.90 | 383 MB public | history_tbs_test_row_2 | 401743872 | 66957312 | 18.01 | 15.01 | 7.47 | 383 MB public | i_history_tbs_test_1 | 325263360 | 54210560 | 17.90 | 15.50 | 6.90 | 310 MB |

The query result shows that the history_tbs_test_row_1 table occupies the largest space and data skew occurs.

- The pgxc_wlm_table_distribution_skewness view can be queried only when the GUC parameter use_workload_manager and enable_perm_space is enabled. In earlier versions, you are advised to use the table_distribution() function to query the entire database. If only the size of a table is queried, the table_distribution(schemaname text, tablename text) function is recommended.

- In 8.2.1 and later cluster versions, DWS supports the pgxc_wlm_table_distribution_skewness view, which can be directly used for query.

- In the 8.1.3 cluster version, you can use the following definition to create a view and then perform query:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 |

CREATE OR REPLACE VIEW pgxc_wlm_table_distribution_skewness AS WITH skew AS ( SELECT schemaname, tablename, pg_catalog.sum(dnsize) AS totalsize, pg_catalog.avg(dnsize) AS avgsize, pg_catalog.max(dnsize) AS maxsize, pg_catalog.min(dnsize) AS minsize, (maxsize - avgsize) * 100 AS skewsize FROM pg_catalog.gs_table_distribution() GROUP BY schemaname, tablename ) SELECT schemaname AS schema_name, tablename AS table_name, totalsize AS total_size, avgsize::numeric(1000) AS avg_size, ( CASE WHEN totalsize = 0 THEN 0.00 ELSE (maxsize * 100 / totalsize)::numeric(5, 2) END ) AS max_percent, ( CASE WHEN totalsize = 0 THEN 0.00 ELSE (minsize * 100 / totalsize)::numeric(5, 2) END ) AS min_percent, ( CASE WHEN totalsize = 0 THEN 0.00 ELSE (skewsize / maxsize)::numeric(5, 2) END ) AS skew_percent FROM skew; |

Querying Database Information

- Querying the database list using the \l meta-command of the gsql tool.

1 2 3 4 5 6 7 8 9 10

\l List of databases Name | Owner | Encoding | Collate | Ctype | Access privileges -----------+-------+-----------+---------+-------+------------------- gaussdb | Ruby | SQL_ASCII | C | C | template0 | Ruby | SQL_ASCII | C | C | =c/Ruby + | | | | | Ruby=CTc/Ruby template1 | Ruby | SQL_ASCII | C | C | =c/Ruby + | | | | | Ruby=CTc/Ruby (3 rows)

- If the parameters LC_COLLATE and LC_CTYPE are not specified during database installation, the default values of them are C.

- If LC_COLLATE and LC_CTYPE are not specified during database creation, the sorting order and character classification of the template database are used by default.

For details, see CREATE DATABASE.

- Querying the database list using the pg_database system catalog

1 2 3 4 5 6 7

SELECT datname FROM pg_database; datname ----------- template1 template0 gaussdb (3 rows)

Querying the Database Size

1

|

select datname,pg_size_pretty(pg_database_size(datname)) from pg_database; |

Example:

1 2 3 4 5 6 7 |

select datname,pg_size_pretty(pg_database_size(datname)) from pg_database; datname | pg_size_pretty -----------+---------------- template1 | 61 MB template0 | 61 MB postgres | 320 MB (3 rows) |

Querying the Size of a Table and the Size of the Corresponding Index in a Specified Schema

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

SELECT t.tablename, indexname, c.reltuples AS num_rows, pg_size_pretty(pg_relation_size(quote_ident(t.tablename)::text)) AS table_size, pg_size_pretty(pg_relation_size(quote_ident(indexrelname)::text)) AS index_size, CASE WHEN indisunique THEN 'Y' ELSE 'N' END AS UNIQUE, idx_scan AS number_of_scans, idx_tup_read AS tuples_read, idx_tup_fetch AS tuples_fetched FROM pg_tables t LEFT OUTER JOIN pg_class c ON t.tablename=c.relname LEFT OUTER JOIN ( SELECT c.relname AS ctablename, ipg.relname AS indexname, x.indnatts AS number_of_columns, idx_scan, idx_tup_read, idx_tup_fetch, indexrelname, indisunique FROM pg_index x JOIN pg_class c ON c.oid = x.indrelid JOIN pg_class ipg ON ipg.oid = x.indexrelid JOIN pg_stat_all_indexes psai ON x.indexrelid = psai.indexrelid ) AS foo ON t.tablename = foo.ctablename WHERE t.schemaname='public' ORDER BY 1,2; |

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.