MatrixLink Network

Overview

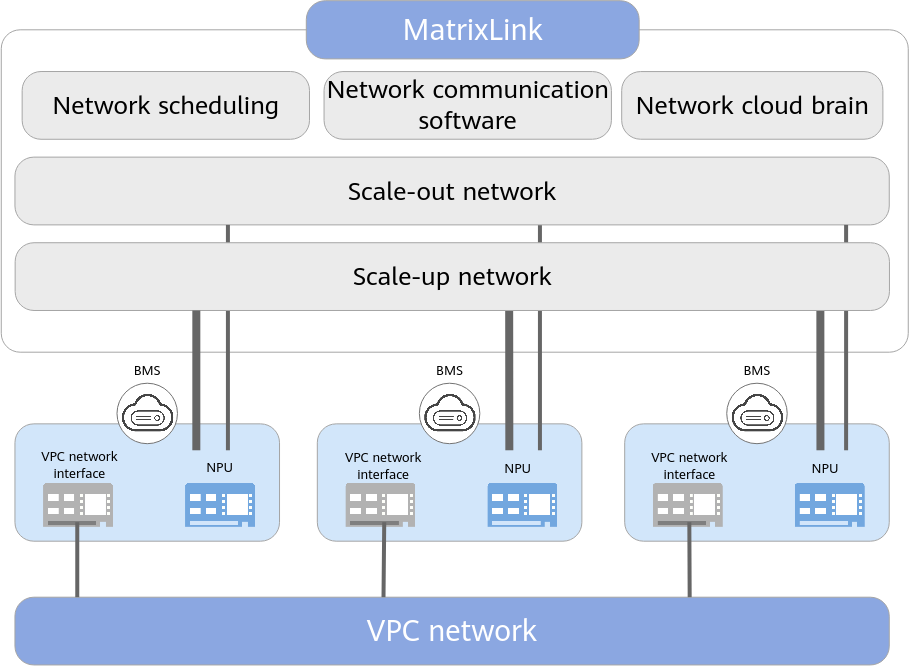

MatrixLink networks use high-performance protocols to provide high-speed communication between NPUs, together with network topology management and isolation for large-scale clusters. Based on global topology awareness, MatrixLink implements intelligent network scheduling that optimizes traffic paths and reduces network traffic conflicts. Working together with high-performance communication operators and the network cloud brain, MatrixLink delivers higher network performance and reliability.

Advantages

- Excellent performance: Provides high-bandwidth network communication that meets the requirements of a wide range of application scenarios.

- Flexible scheduling: Understands customer intent and schedules network resources flexibly based on the global topology.

- High reliability: Quickly detects, diagnoses, and recovers from faults to keep the cluster running stably.

Application Scenarios

AI model training and inference require large-scale compute clusters. To maximize the utilization of compute and network resources, reduce network traffic conflicts between concurrent tasks, and improve the efficiency of AI training and inference, MatrixLink provides scale-up and scale-out networks with high bandwidth and low latency. Network scheduling matches network traffic to the customer's model. Combined with communication operators optimized by the network communication software and the fast fault detection and recovery capabilities of the network cloud brain, MatrixLink delivers optimal network performance and high reliability for AI model training and inference.

Basic Functions

- Intelligent computing network

MatrixLink provides scale-up, scale-out, and VPC networks to maximize network performance. The scale-up network handles high-speed communication within a supernode, the scale-out network handles communication between supernodes, and the VPC network handles general-purpose communication. Because the three network layers are divided by traffic characteristics, they are well suited to hierarchical training communication and prefill-decode separation, providing optimal network performance for AI training and inference.

- Scale-up network: Based on the Huawei-developed HCCS protocol stack, the scale-up network enables chip-level load/store semantic communication, full peer-to-peer high-speed interconnection of 384 cards per supernode, and dynamic slicing. Independent communication domains can be created on demand so that models run in parallel during distributed training. A single card supports a maximum bandwidth of 784 Gbit/s with a latency of less than 1 μs, enabling efficient large model training.

- Scale-out network: The scale-out network uses RDMA semantics over the RoCE protocol for high-speed communication across supernodes. A single card supports a maximum bandwidth of 400 Gbit/s, forming a 1:1 non-convergence (non-oversubscribed) network. Multiple supernodes can be interconnected into a cluster of up to 128,000 cards, which meets the requirements of ultra-large-scale model training.

- VPC network: Using the UBoE protocol, the VPC network delivers 400 Gbit/s of bandwidth per node, four times that of a traditional VPC network. It supports general-purpose data transmission in cloud-native environments, provides the most comprehensive multi-tenant network isolation capabilities, and ensures the security and quality of service traffic.

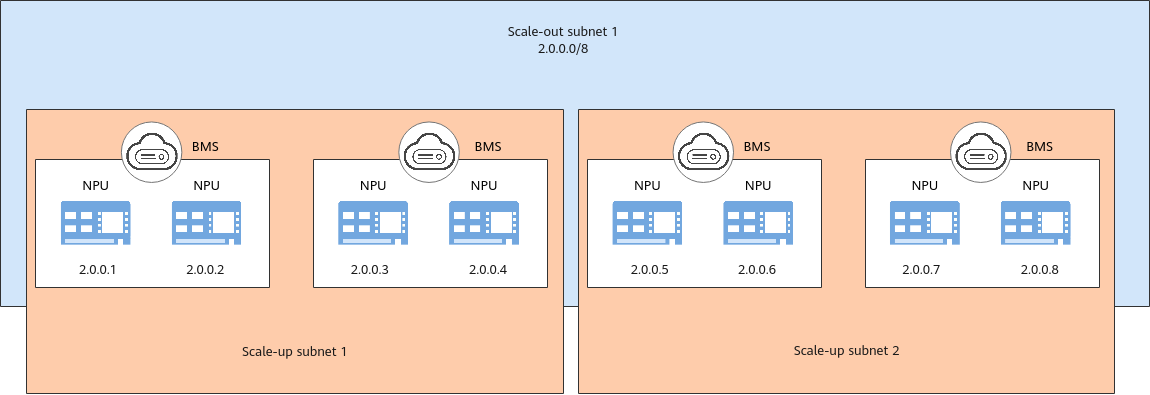

Tenants are isolated from each other by dividing the scale-up and scale-out networks into subnets, which prevents traffic interference and ensures security.

Figure 2 Networks between NPUs in different subnets
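The layering and isolation rules above can be summarized as a small decision function. The following Python sketch is illustrative only: the `Npu` and `Plane` types, the `select_plane` function, and the simplifying rule that every cross-subnet flow is rejected are hypothetical and are not part of any MatrixLink API.

```python
from dataclasses import dataclass
from enum import Enum


class Plane(Enum):
    SCALE_UP = "scale-up"    # within a supernode (HCCS)
    SCALE_OUT = "scale-out"  # across supernodes (RDMA over RoCE)
    VPC = "vpc"              # general-purpose traffic (UBoE)


@dataclass(frozen=True)
class Npu:
    supernode: int  # hypothetical supernode ID
    subnet: str     # hypothetical tenant subnet ID


def select_plane(src: Npu, dst: Npu, general_traffic: bool = False) -> Plane:
    """Choose the network layer for a flow between two NPUs (illustrative rules only)."""
    if src.subnet != dst.subnet:
        # Simplifying assumption: subnets isolate tenants, so cross-subnet flows are rejected.
        raise PermissionError("source and destination NPUs are in different subnets")
    if general_traffic:
        # General-purpose (non-collective) traffic goes over the VPC network.
        return Plane.VPC
    if src.supernode == dst.supernode:
        # Same supernode: use the scale-up network.
        return Plane.SCALE_UP
    # Different supernodes: use the scale-out network.
    return Plane.SCALE_OUT


if __name__ == "__main__":
    a, b, c = Npu(0, "tenant-a"), Npu(0, "tenant-a"), Npu(1, "tenant-a")
    print(select_plane(a, b))  # Plane.SCALE_UP
    print(select_plane(a, c))  # Plane.SCALE_OUT
```

Checking isolation before choosing a layer mirrors the idea that subnet division, not the layer choice, is what keeps tenants apart.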

- Network scheduling

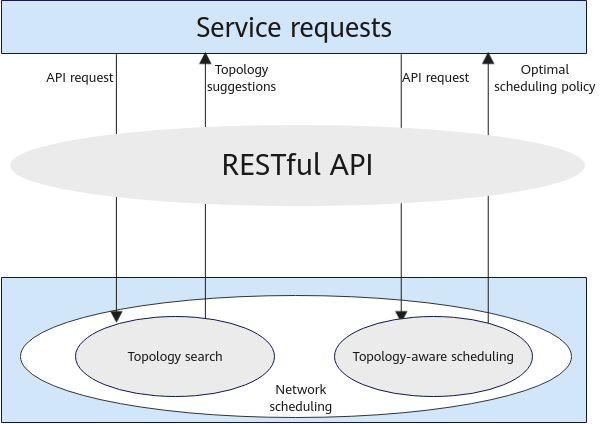

AI model training distributes tasks across compute nodes for distributed parallel training, which introduces complex inter-node communication that can reduce training efficiency. Network scheduling accounts for the complexity of distributed training communication and the multi-level bandwidth convergence of the cluster. Based on the characteristics of the large model training task, its parallelism, and the topology of available resources, network scheduling provides topology-aware affinity deployment and path planning that reduce transmission time during training and improve large model training performance.

Network scheduling provides the following capabilities through RESTful APIs:

- Topology search: Recommends resources based on topology awareness and on the model hyperparameters, parallelism, and list of available resources provided by the user.

- Topology-aware scheduling: After resources are selected, optimizes resource deployment and route planning and provides resource scheduling recommendations based on the model hyperparameters, parallelism, collective communication algorithm, and AI programming framework.

Figure 3 RESTful API calling example
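The idea behind topology search and affinity deployment can be illustrated with a greedy placement heuristic. This Python sketch is an assumption for illustration, not the algorithm behind the MatrixLink RESTful APIs: `recommend_placement`, its parameters, and the supernode names are hypothetical. It keeps each tensor-parallel group inside a single supernode so that the group's heavy traffic stays on the scale-up network.

```python
from typing import Dict, Optional


def recommend_placement(
    free_cards: Dict[str, int], total_cards: int, tp_size: int
) -> Optional[Dict[str, int]]:
    """Greedy topology-aware placement sketch (hypothetical).

    Each tensor-parallel group of ``tp_size`` cards is placed entirely inside one
    supernode. Returns {supernode: cards_used}, or None if the job does not fit.
    """
    if tp_size <= 0 or total_cards <= 0 or total_cards % tp_size != 0:
        raise ValueError("total_cards must be a positive multiple of a positive tp_size")
    groups_needed = total_cards // tp_size
    placement: Dict[str, int] = {}
    # Visit supernodes with the most free cards first so the job spans as few as possible.
    for node, free in sorted(free_cards.items(), key=lambda kv: (-kv[1], kv[0])):
        if groups_needed == 0:
            break
        groups_here = min(free // tp_size, groups_needed)
        if groups_here > 0:
            placement[node] = groups_here * tp_size
            groups_needed -= groups_here
    # Any unplaced group means no placement keeps every group within one supernode.
    return placement if groups_needed == 0 else None
```

For example, `recommend_placement({"sn-a": 100, "sn-b": 384, "sn-c": 200}, 512, 16)` returns `{"sn-b": 384, "sn-c": 128}`: 24 groups fill sn-b and the remaining 8 groups go to sn-c. Filling the largest supernodes first limits how many supernodes the job spans and therefore how much traffic crosses the scale-out network; a production scheduler would presumably also weigh path conflicts and bandwidth convergence.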

- Network communication software

Huawei Cloud provides a cloud-native high-performance communication library that fully utilizes the ultra-low-latency memory-semantic communication of supernodes and exploits the performance benefits of converging computation and communication. Based on the supernode form and cluster networking of the cloud infrastructure, it accelerates the A2A and ARD communication operators used in typical inference scenarios. In addition, a communication operator execution framework based on NPU direct memory semantics supports batched overlapping (hiding) of communication operators with attention computation operators, providing high throughput and low latency in inference scenarios.
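Assuming A2A refers to the all-to-all collective (commonly used in Mixture-of-Experts inference to dispatch tokens to experts), the following Python sketch simulates its data movement on a single host. The `all_to_all` function is hypothetical and shows only the semantics of the operator, not how the communication library implements or accelerates it.

```python
from typing import List, TypeVar

T = TypeVar("T")


def all_to_all(send_buffers: List[List[T]]) -> List[List[T]]:
    """Simulate all-to-all: chunk j sent by rank i arrives at rank j as chunk i."""
    world = len(send_buffers)
    for rank, buf in enumerate(send_buffers):
        # Every rank must provide exactly one chunk per destination rank.
        if len(buf) != world:
            raise ValueError(f"rank {rank} must provide exactly {world} chunks")
    # The receive buffer of rank dst collects chunk dst from every sender, in sender order.
    return [[send_buffers[src][dst] for src in range(world)] for dst in range(world)]
```

For example, `all_to_all([["a0", "a1"], ["b0", "b1"]])` returns `[["a0", "b0"], ["a1", "b1"]]`. The exchange is effectively a transpose of the per-rank chunk matrix, so every rank sends to and receives from every other rank, which is why this operator benefits from low-latency, high-bandwidth interconnects.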

- Network cloud brain

The network cloud brain quickly detects faults based on device and network link status. Using traffic diagnosis, traffic profiling, AI algorithms, and a library of more than 300 fault patterns, it can identify the root cause of a fault within minutes and determine how to recover, which narrows the scope of fault impact, eliminates sudden interference, improves system reliability, and greatly enhances I/O efficiency.
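Root-cause identification against a fault-pattern library can be sketched as subset matching over observed symptoms. The following Python code is a hypothetical illustration: the `FaultPattern` type, the symptom names, and the recovery actions are invented for this example and do not reflect the network cloud brain's actual pattern library or AI algorithms.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Set


@dataclass(frozen=True)
class FaultPattern:
    name: str                 # hypothetical root-cause label
    symptoms: FrozenSet[str]  # symptoms that must all be observed for a match
    recovery: str             # hypothetical recovery action


# Hypothetical three-entry library standing in for a real pattern library.
LIBRARY: List[FaultPattern] = [
    FaultPattern("optical-module-degraded", frozenset({"crc_errors", "link_flap"}),
                 "isolate the link and replace the optical module"),
    FaultPattern("link-down", frozenset({"link_flap"}), "reroute traffic"),
    FaultPattern("pfc-storm", frozenset({"pfc_pause_surge", "throughput_drop"}),
                 "suppress pause frames on the offending port"),
]


def diagnose(observed: Set[str], library: List[FaultPattern]) -> Optional[FaultPattern]:
    """Return the most specific pattern whose symptoms are all present in ``observed``."""
    matches = [p for p in library if p.symptoms <= observed]
    if not matches:
        return None
    # Prefer the pattern that explains the most symptoms (the most specific root cause).
    return max(matches, key=lambda p: len(p.symptoms))
```

With this library, `diagnose({"crc_errors", "link_flap", "high_latency"}, LIBRARY)` selects `optical-module-degraded` rather than the less specific `link-down`, and its `recovery` field gives the suggested action.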
