Viewing the Third-Party Model Evaluation Report

After an evaluation job is created, you can view the task report. The procedure is as follows:

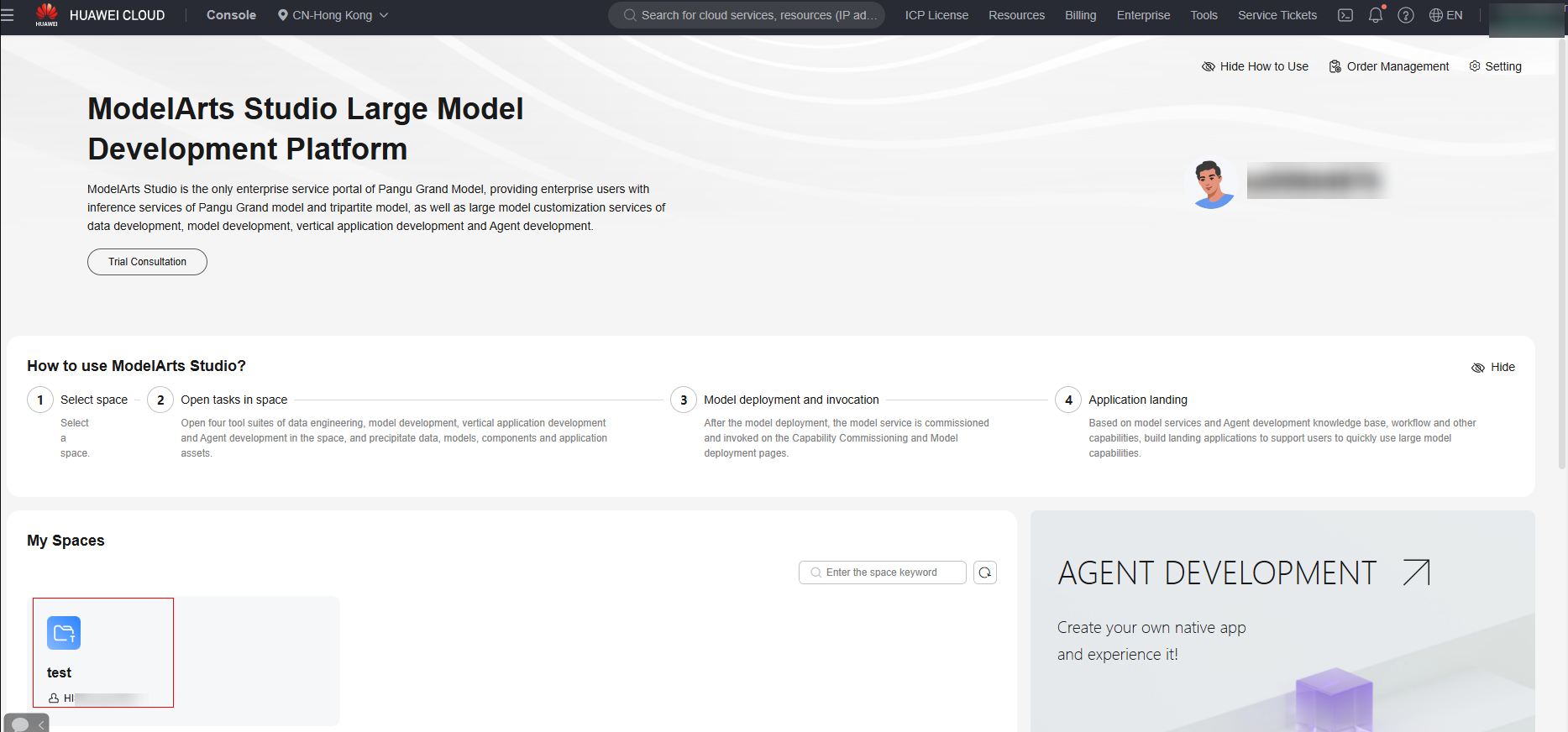

- Log in to ModelArts Studio Large Model Development Platform. In the My Spaces area, click the required workspace.

Figure 1 My Spaces

- In the navigation pane, choose Evaluation Center > Evaluation Task.

- Click Assessment report in the Operation column. On the displayed page, you can view the basic information and overview of the evaluation job.

For details about each evaluation metric, see Third-Party Model Evaluation Metrics.

- Export the evaluation report.

  - On the Evaluation Report > Service Result Analysis page, click Export, select the report to be exported, and click OK.

  - Click Export Record on the right to view the exported task ID. Click Download in the Operation column to download the evaluation report to the local PC.

Third-Party Model Evaluation Metrics

The third-party model supports automatic evaluation and manual evaluation. For details about the metrics, see Table 1, Table 2, and Table 3.

| Evaluation Metric (Automatic Evaluation - Custom Evaluation Dataset) | Description |
|---|---|
| Accuracy | Proportion of correctly predicted samples (the prediction exactly matches the label) to the total number of samples. A higher score indicates that the model predicts a larger share of samples correctly and is more effective. |
| F1 Score | Harmonic mean of precision and recall. A higher score indicates that the model achieves a better balance between precision and recall. |
| BLEU-1 | Matching degree between the sentence generated by the model and the actual sentence at the single-word level. A higher score indicates better model effectiveness. |
| BLEU-2 | Matching degree between the sentence generated by the model and the actual sentence at the phrase level. A higher score indicates better model effectiveness. |
| BLEU-4 | Weighted average of the n-gram precisions (n = 1 to 4) between the sentence generated by the model and the actual sentence. A higher score indicates better model effectiveness. |
| ROUGE-1 | Recall rate calculated after the model generation result and labeling result are split into 1-grams (an n-gram is a segment of n consecutive words in a sentence). A higher score indicates better model effectiveness. |
| ROUGE-2 | Recall rate calculated after the model generation result and labeling result are split into 2-grams (an n-gram is a segment of n consecutive words in a sentence). A higher score indicates better model effectiveness. |
| ROUGE-L | Recall rate calculated from the longest common subsequence of the model generation result and labeling result (the longest sequence of words that appears in both in the same order, not necessarily consecutively). A higher score indicates better model effectiveness. |
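
The metrics in this table can be illustrated with a minimal Python sketch. It is not the platform's implementation: it assumes whitespace tokenization, a single reference sentence, and no BLEU brevity penalty, and all function names are hypothetical. ROUGE-L is shown in its usual form, based on the longest common subsequence.

```python
from collections import Counter

def ngrams(tokens, n):
    """Count all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def exact_match_accuracy(predictions, labels):
    """Share of samples whose prediction equals the label exactly."""
    return sum(p == l for p, l in zip(predictions, labels)) / len(labels)

def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def ngram_precision(candidate, reference, n):
    """BLEU-style clipped precision: shared n-grams / candidate n-grams."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    total = sum(cand.values())
    return sum((cand & ref).values()) / total if total else 0.0

def ngram_recall(candidate, reference, n):
    """ROUGE-N-style recall: shared n-grams / reference n-grams."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    total = sum(ref.values())
    return sum((cand & ref).values()) / total if total else 0.0

def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]

def rouge_l_recall(candidate, reference):
    """ROUGE-L-style recall: LCS length / reference length."""
    return lcs_length(candidate, reference) / len(reference) if reference else 0.0

# Illustrative data only (assumption: not taken from any real evaluation job).
reference = "the cat sat on the mat".split()
candidate = "the cat is on the mat".split()

print(exact_match_accuracy(["A", "B", "C", "D"], ["A", "B", "D", "D"]))  # 0.75
print(round(ngram_precision(candidate, reference, 1), 3))  # 0.833
print(round(ngram_precision(candidate, reference, 2), 3))  # 0.6
print(round(ngram_recall(candidate, reference, 2), 3))     # 0.6
print(round(rouge_l_recall(candidate, reference), 3))      # 0.833
```

In this example, five of the six candidate words also occur in the reference, so the 1-gram precision is 5/6, while only three of the five 2-grams match, which is why the phrase-level score is lower.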

| Evaluation Metric (Automatic Evaluation - Evaluation Template Used) | Description |
|---|---|
| Score | The score of each dataset is the pass rate of the model in the current dataset. If there are multiple datasets in the evaluation capability items, the weighted average pass rate is calculated based on the data volume. |
| Comprehensive capability | The comprehensive capability is the weighted average of the pass rates of all datasets. |
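
As a rough illustration of a pass rate weighted by data volume, the following Python sketch may help. The function name and the sample figures are hypothetical, and it assumes that each dataset's weight is simply its number of samples, which the table does not state explicitly for the comprehensive capability.

```python
def weighted_pass_rate(datasets):
    """Average pass rate weighted by data volume.

    datasets: list of (pass_rate, sample_count) pairs.
    Assumption: the weight of a dataset is its sample count.
    """
    total_samples = sum(count for _, count in datasets)
    if total_samples == 0:
        return 0.0
    return sum(rate * count for rate, count in datasets) / total_samples

# Illustrative figures only: 100 samples at 80% and 300 samples at 50%.
datasets = [(0.80, 100), (0.50, 300)]
print(round(weighted_pass_rate(datasets), 3))  # 0.575
```

The larger dataset pulls the result toward its own pass rate, so the score (0.575) is lower than the plain average of the two rates (0.65).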

| Evaluation Metric (Manual Evaluation) | Description |
|---|---|
| Accuracy | The answer generated by the model is correct and there is no factual error. |
| average | The model calculates the average score of the generated sentence and the actual sentence based on the evaluation metric. |
| goodcase | The model calculates the proportion of test cases whose score is 5 after the generated sentence and the actual sentence are compared based on the evaluation metric. |
| badcase | The model calculates the proportion of test cases whose score is less than 1 after the generated sentence and the actual sentence are compared based on the evaluation metric. |
| Custom metric | Custom metrics, such as usability, logic, and security. |
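
The average, goodcase, and badcase values can be sketched in Python as follows. This is an illustration under assumptions: the function name is hypothetical, the scores are made up, and a scoring range of 0 to 5 is assumed because the table only states the thresholds (a score of 5 for goodcase, less than 1 for badcase).

```python
def summarize_manual_scores(scores):
    """Return (average, goodcase, badcase) for a list of per-case scores.

    Assumptions: goodcase is the share of cases scored exactly 5,
    and badcase is the share of cases scored below 1.
    """
    if not scores:
        return 0.0, 0.0, 0.0
    count = len(scores)
    average = sum(scores) / count
    goodcase = sum(1 for s in scores if s == 5) / count
    badcase = sum(1 for s in scores if s < 1) / count
    return average, goodcase, badcase

# Illustrative scores for eight test cases (not real evaluation data).
scores = [5, 4, 0, 5, 3, 0.5, 5, 2]
average, goodcase, badcase = summarize_manual_scores(scores)
print(average)   # 3.0625
print(goodcase)  # 0.375
print(badcase)   # 0.25
```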
