Deploying a Distilled DeepSeek Model with vLLM on a Single Server (Linux)

Scenarios

Distillation is a technique that transfers the knowledge of a large pre-trained model to a smaller model. It suits scenarios that need smaller, more efficient models without a significant loss of accuracy. This section describes how to use vLLM to quickly deploy a distilled DeepSeek model.

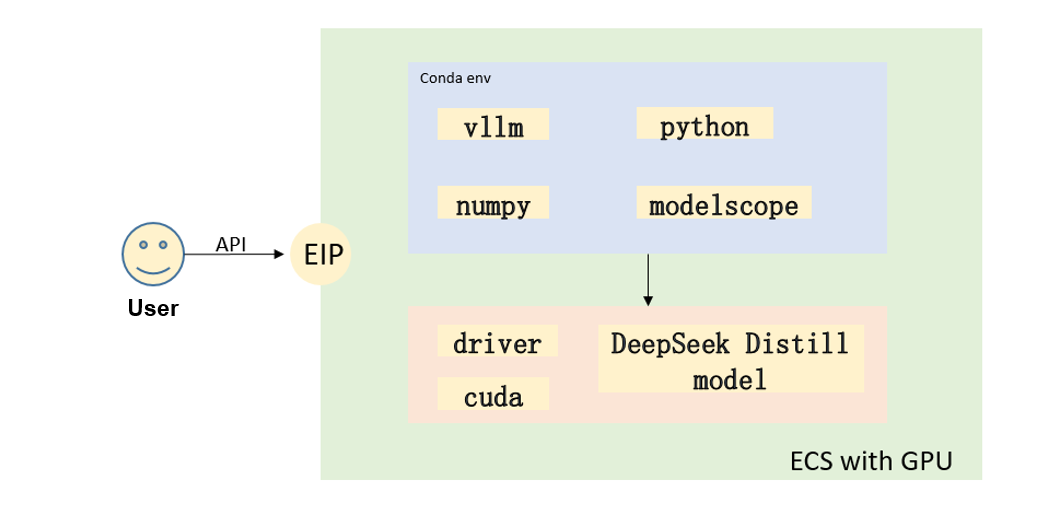

Solution Architecture

Advantages

This solution deploys distilled DeepSeek models from scratch with vLLM in a conda environment, which gives you a thorough understanding of the model's runtime dependencies. With a small amount of resources, you can quickly and efficiently connect the model to production services and gain finer control over performance and cost.

Resource Planning

| Resource | Description | Cost |
|---|---|---|
| VPC | VPC CIDR block: 192.168.0.0/16 | Free |
| VPC subnet | | Free |
| Security group | Inbound rule: | Free |
| ECS | | The following resources generate costs: For billing details, see Billing Mode Overview. |

| No. | Model Name | Minimum Flavor | GPU |
|---|---|---|---|
| 0 | DeepSeek-R1-Distill-Qwen-7B, DeepSeek-R1-Distill-Llama-8B | p2s.2xlarge.8 | V100 (32 GiB) × 1 |
| | | p2v.4xlarge.8 | V100 (16 GiB) × 2 |
| | | pi2.4xlarge.4 | T4 (16 GiB) × 2 |
| | | g6.18xlarge.7 | T4 (16 GiB) × 2 |
| 1 | DeepSeek-R1-Distill-Qwen-14B | p2s.4xlarge.8 | V100 (32 GiB) × 2 |
| | | p2v.8xlarge.8 | V100 (16 GiB) × 4 |
| | | pi2.8xlarge.4 | T4 (16 GiB) × 4 |
| 2 | DeepSeek-R1-Distill-Qwen-32B | p2s.8xlarge.8 | V100 (32 GiB) × 4 |
| | | p2v.16xlarge.8 | V100 (16 GiB) × 8 |
| 3 | DeepSeek-R1-Distill-Llama-70B | p2s.16xlarge.8 | V100 (32 GiB) × 8 |

Contact Huawei Cloud technical support to select GPU ECSs suitable for your deployment.
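
The GPU counts in the flavor table can be sanity-checked with simple arithmetic: in float16 each parameter takes 2 bytes, so the weights alone need roughly 2 bytes times the parameter count. The sketch below is illustrative only. It assumes the nominal parameter counts implied by the model names (7B, 14B, 32B, 70B), and the helper names are hypothetical. KV cache, activations, and CUDA graph overhead are not included, so real usage is higher.

```python
# Rough float16 weight-memory estimate for the models in the flavor table.
# Assumption: parameter counts are the nominal sizes from the model names.
# KV cache and activation memory are NOT included in this estimate.

BYTES_PER_FP16_PARAM = 2
GIB = 2**30


def fp16_weight_gib(params_billion: float) -> float:
    """Return the approximate size of float16 weights in GiB."""
    return params_billion * 1e9 * BYTES_PER_FP16_PARAM / GIB


def weights_fit(params_billion: float, gpu_count: int, gib_per_gpu: int) -> bool:
    """Check whether the weights alone fit in the combined GPU memory."""
    return fp16_weight_gib(params_billion) < gpu_count * gib_per_gpu


if __name__ == "__main__":
    # (model, nominal size in billions, GPU count, GiB per GPU) from the table.
    rows = [
        ("DeepSeek-R1-Distill-Qwen-7B", 7, 1, 32),
        ("DeepSeek-R1-Distill-Qwen-14B", 14, 2, 32),
        ("DeepSeek-R1-Distill-Qwen-32B", 32, 4, 32),
        ("DeepSeek-R1-Distill-Llama-70B", 70, 8, 32),
    ]
    for name, size, gpus, mem in rows:
        need = fp16_weight_gib(size)
        print(f"{name}: ~{need:.1f} GiB of weights, {gpus * mem} GiB available")
```

The gap between the weight size and the available memory is what vLLM can use for the KV cache, which is one reason the listed flavors leave generous headroom.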

Manually Deploying a Distilled DeepSeek Model with vLLM

To manually deploy a distilled DeepSeek model with vLLM on a Linux ECS, perform the following steps:

Procedure

- Create a GPU ECS.

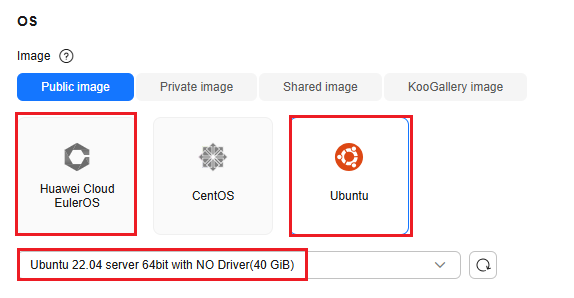

- Select the public image Huawei Cloud EulerOS 2.0 or Ubuntu 22.04 without a driver installed.

Figure 2 Selecting an image

- Select Auto assign for EIP. An EIP is assigned so that the ECS can download dependencies and so that the model APIs can be called.

- Check the GPU driver and CUDA versions.

Install driver version 535 and CUDA 12.2. For details, see Manually Installing a Tesla Driver on a GPU-accelerated ECS.
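
As a rough way to confirm the driver from a script, the sketch below queries `nvidia-smi` for the driver version, GPU name, and total memory. The function names are hypothetical, and the parsing assumes the comma-separated output of `--query-gpu=... --format=csv,noheader`. The CUDA version supported by the driver is shown in the header of the plain `nvidia-smi` output and is not parsed here.

```python
import shutil
import subprocess

# nvidia-smi query that prints one CSV line per GPU, without a header row.
QUERY = [
    "nvidia-smi",
    "--query-gpu=driver_version,name,memory.total",
    "--format=csv,noheader",
]


def parse_gpu_lines(text):
    """Parse 'driver, name, memory' CSV lines into a list of dicts."""
    gpus = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        driver, name, memory = [field.strip() for field in line.split(",")]
        gpus.append({"driver": driver, "name": name, "memory": memory})
    return gpus


def driver_major(gpu):
    """Return the major driver version, for example 535."""
    return int(gpu["driver"].split(".")[0])


def query_gpus():
    """Return GPU info, or an empty list if nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    except subprocess.CalledProcessError:
        return []
    return parse_gpu_lines(result.stdout)


if __name__ == "__main__":
    for gpu in query_gpus():
        print(gpu, "major driver version:", driver_major(gpu))
```

An empty result suggests that the driver is not installed or not loaded yet.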

- Create a conda virtual environment.

- Download the miniconda installation package.

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh

- Install miniconda.

bash Miniconda3-latest-Linux-x86_64.sh

- Add the conda environment variable to the startup file.

echo 'export PATH="$HOME/miniconda3/bin:$PATH"' >> ~/.bashrc

source ~/.bashrc

- Create a Python 3.10 virtual environment.

conda create -n vllm-ds python=3.10

conda activate vllm-ds

conda install numpy
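
Before installing anything, it can help to confirm that the shell is really inside the new environment. The following is a minimal sketch, assuming the environment name `vllm-ds` and Python 3.10 from the commands above. It relies on the `CONDA_DEFAULT_ENV` variable that `conda activate` sets, and `check_environment` is a hypothetical helper name.

```python
import os
import sys


def check_environment(expected_env="vllm-ds", expected_python=(3, 10),
                      environ=None, version_info=None):
    """Return a list of problems; an empty list means the environment looks right."""
    environ = os.environ if environ is None else environ
    version_info = sys.version_info if version_info is None else version_info

    problems = []
    active = environ.get("CONDA_DEFAULT_ENV")
    if active != expected_env:
        problems.append(f"active conda environment is {active!r}, expected {expected_env!r}")
    if tuple(version_info[:2]) != tuple(expected_python):
        found = ".".join(str(part) for part in version_info[:2])
        wanted = ".".join(str(part) for part in expected_python)
        problems.append(f"Python is {found}, expected {wanted}")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("environment OK" if not issues else "\n".join(issues))
```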

- Install dependencies, such as vLLM.

- Update pip.

python -m pip install --upgrade pip -i https://mirrors.tuna.tsinghua.edu.cn/pypi/web/simple

- Install vLLM.

pip install vllm -i https://mirrors.tuna.tsinghua.edu.cn/pypi/web/simple

You can run the vllm --version command to view the installed vLLM version.

- Install modelscope.

pip install modelscope -i https://mirrors.tuna.tsinghua.edu.cn/pypi/web/simple

ModelScope is an open-source model community in China. Downloads from ModelScope are fast if you are in China. If you are outside China, download models from Hugging Face instead.
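
To confirm what was installed, the sketch below reads package versions with the standard library's `importlib.metadata`. The package list is an assumption: `vllm` and `modelscope` come from the steps above, and `torch` is included because vLLM installs it as a dependency. `installed_versions` is a hypothetical helper name.

```python
from importlib import metadata


def installed_versions(packages=("vllm", "modelscope", "torch")):
    """Map each package name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for package, version in installed_versions().items():
        print(f"{package}: {version or 'not installed'}")
```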

- Download the large model file.

- Create a script for downloading a model.

vim download_models.py

Add the following content into the script:

from modelscope import snapshot_download

model_dir = snapshot_download('deepseek-ai/DeepSeek-R1-Distill-Qwen-7B', cache_dir='/root', revision='master')

The model name DeepSeek-R1-Distill-Qwen-7B is used as an example. You can replace it with the required model by referring to Table 2. The local path for storing the model is /root. You can change it as needed.

- Download the model.

python3 download_models.py

Wait until the model is downloaded.
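
For readers outside China, a Hugging Face download could look like the sketch below. It is an assumption-laden alternative, not part of the original procedure: it assumes the `huggingface_hub` package is available in the environment, uses its `snapshot_download` function, and writes to the same `/root/deepseek-ai/...` layout that the vLLM commands in this guide expect. The helper names are hypothetical.

```python
def local_model_path(repo_id, root="/root"):
    """Build the local directory used later as the --model argument."""
    return f"{root.rstrip('/')}/{repo_id}"


def download_from_hugging_face(repo_id, root="/root"):
    """Download a model snapshot from Hugging Face into local_model_path()."""
    # Imported lazily so local_model_path() works without the package installed.
    from huggingface_hub import snapshot_download

    target = local_model_path(repo_id, root)
    snapshot_download(repo_id=repo_id, local_dir=target)
    return target


if __name__ == "__main__":
    # Only prints the target path; call download_from_hugging_face() to fetch.
    print(local_model_path("deepseek-ai/DeepSeek-R1-Distill-Qwen-7B"))
```

Keeping the target directory identical to the ModelScope layout means the later server commands need no changes.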

- Start the vLLM API server to run the model.

Run the following command to serve the model:

python -m vllm.entrypoints.openai.api_server --model /root/deepseek-ai/DeepSeek-R1-Distill-Qwen-7B --served-model-name DeepSeek-R1-Distill-Qwen-7B --max-model-len=2048 &

- If the ECS uses multiple GPUs, add -tp ${number-of-GPUs}. For example, if there are two GPUs, add -tp 2.

- V100 and T4 GPUs do not support BF16 precision, so add --dtype float16 to run the model in float16.

python -m vllm.entrypoints.openai.api_server --model /root/deepseek-ai/DeepSeek-R1-Distill-Qwen-7B --served-model-name DeepSeek-R1-Distill-Qwen-7B --max-model-len=2048 --dtype float16 -tp 2 &

- After the model is loaded, if the GPU memory is insufficient, add --enforce-eager to use eager mode and disable the CUDA graph to reduce the GPU memory usage.

python -m vllm.entrypoints.openai.api_server --model /root/deepseek-ai/DeepSeek-R1-Distill-Qwen-7B --served-model-name DeepSeek-R1-Distill-Qwen-7B --max-model-len=2048 --dtype float16 --enforce-eager &

- If the GPU memory is still insufficient, replace the ECS with another one that has a larger flavor based on Table 2.

- After the model is started, the default listening port is 8000.
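
The flag rules above can be collected in one place. The sketch below assembles the launch command from the options described in this step: `-tp` for multiple GPUs, `--dtype float16` for V100 or T4 GPUs, and `--enforce-eager` when GPU memory is tight. `build_vllm_command` and its parameters are hypothetical names chosen for illustration. Only the flags themselves come from the commands shown above.

```python
import shlex


def build_vllm_command(model_path, served_name, gpu_count=1, fp16_only=True,
                       enforce_eager=False, max_model_len=2048):
    """Return the vLLM API server launch command as a list of arguments."""
    command = [
        "python", "-m", "vllm.entrypoints.openai.api_server",
        "--model", model_path,
        "--served-model-name", served_name,
        f"--max-model-len={max_model_len}",
    ]
    if fp16_only:
        # V100 and T4 GPUs do not support BF16.
        command += ["--dtype", "float16"]
    if gpu_count > 1:
        # Tensor parallelism across all GPUs of the ECS.
        command += ["-tp", str(gpu_count)]
    if enforce_eager:
        # Disables CUDA graphs to save GPU memory.
        command.append("--enforce-eager")
    return command


if __name__ == "__main__":
    args = build_vllm_command(
        "/root/deepseek-ai/DeepSeek-R1-Distill-Qwen-7B",
        "DeepSeek-R1-Distill-Qwen-7B",
        gpu_count=2,
    )
    print(shlex.join(args))
```

The returned list can be passed to `subprocess.Popen` directly, which avoids shell quoting issues.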

- Call a model API to test the model performance.

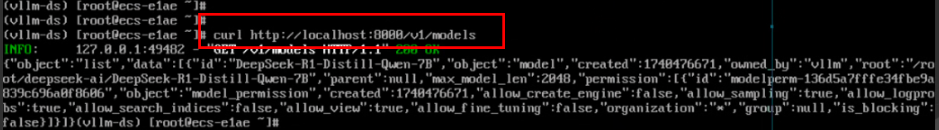

- Call an API to view the running model.

curl http://localhost:8000/v1/models

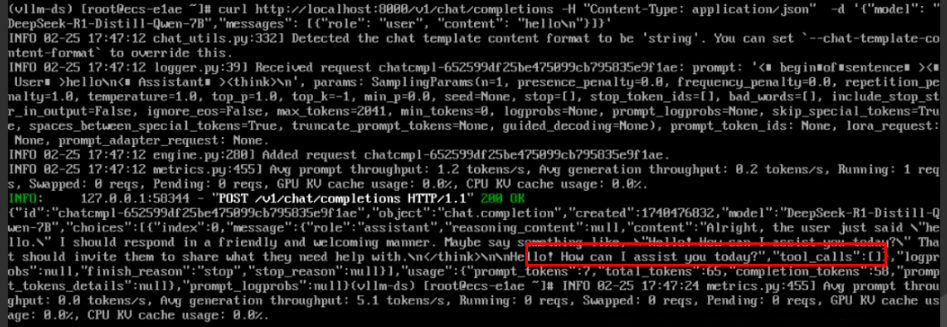
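
Loading the model can take a while, so a script may want to wait until `/v1/models` answers before sending requests. The sketch below polls that endpoint with the standard library only. The timeout values and function names are assumptions, and the response parsing assumes the OpenAI-style shape with a `data` list of objects that carry an `id`.

```python
import json
import time
import urllib.request


def model_ids(models_response):
    """Extract the model IDs from a /v1/models response body."""
    return [entry["id"] for entry in models_response.get("data", [])]


def wait_for_models(base_url="http://localhost:8000", timeout_s=300, interval_s=5):
    """Poll /v1/models until the server answers, then return the served model IDs."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(f"{base_url}/v1/models", timeout=5) as response:
                return model_ids(json.load(response))
        except OSError:
            # Covers connection refused and timeouts while the model is loading.
            time.sleep(interval_s)
    raise TimeoutError(f"no answer from {base_url} within {timeout_s} seconds")
```

Calling `wait_for_models()` on the ECS should return a list containing the name passed to --served-model-name once the server is ready.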

- Call an API to chat.

curl http://localhost:8000/v1/chat/completions -H "Content-Type: application/json" -d '{"model": "DeepSeek-R1-Distill-Qwen-7B", "messages": [{"role": "user", "content": "hello\n"}]}'

The model is deployed and verified. You can use an EIP to call a model API for chats from your local Postman or your own service.
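
The same chat call can be made from your own service. The sketch below mirrors the curl request using only the Python standard library. The function names are hypothetical, and the reply extraction assumes the OpenAI-style response shape (`choices[0].message.content`). To call the model from outside the ECS, replace `base_url` with the EIP address, assuming the security group allows inbound traffic on port 8000.

```python
import json
import urllib.request


def build_chat_request(prompt, model="DeepSeek-R1-Distill-Qwen-7B",
                       base_url="http://localhost:8000"):
    """Build a POST request for the /v1/chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(completion):
    """Return the assistant text from a chat completion response body."""
    return completion["choices"][0]["message"]["content"]


def chat(prompt, **kwargs):
    """Send one user message to the running server and return the reply text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as response:
        return extract_reply(json.load(response))
```

With the server running, `chat("hello")` sends the same request as the curl example and returns only the reply text.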
