Viewing the Training Status and Metrics of the DeepSeek Model

Viewing the Model Training Status

After model training starts, you can view the status of the training job on the Model Training page. Click a job name to open its details page, where you can view the training metrics, job details, and training logs.

| Status | Meaning |
|---|---|
| Initializing | The model training job is being initialized and prepared for training. |
| Queuing | The model training job is in the queue, waiting to start. |
| Running | The model is being trained; training is not yet complete. |
| Stopping | The model training job is being stopped. |
| Stopped | The model training job was stopped manually. |
| Failed | An error occurred during model training. View the logs to locate the cause of the failure. |
| Completed | Model training is complete. |
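If you track job status from a script instead of the console, it can help to encode the status table as data. The following is a minimal sketch, assuming you already have the status string shown in the console (how to query it programmatically is not covered here). The names `TrainingJobStatus`, `TERMINAL_STATES`, `is_terminal`, and `next_step` are hypothetical, and treating Stopped, Failed, and Completed as final states is an assumption based on the status descriptions.

```python
from enum import Enum


class TrainingJobStatus(Enum):
    """Status names as displayed on the Model Training page."""
    INITIALIZING = "Initializing"
    QUEUING = "Queuing"
    RUNNING = "Running"
    STOPPING = "Stopping"
    STOPPED = "Stopped"
    FAILED = "Failed"
    COMPLETED = "Completed"


# Assumption: a job in one of these states does not change status any further.
TERMINAL_STATES = {
    TrainingJobStatus.STOPPED,
    TrainingJobStatus.FAILED,
    TrainingJobStatus.COMPLETED,
}


def is_terminal(status: TrainingJobStatus) -> bool:
    """Return True if the job has finished, successfully or not."""
    return status in TERMINAL_STATES


def next_step(status: TrainingJobStatus) -> str:
    """Suggest a follow-up action for a status, based on the status table."""
    if status is TrainingJobStatus.COMPLETED:
        return "view the training metrics on the Train Result tab"
    if status is TrainingJobStatus.FAILED:
        return "check the Log tab to locate the cause of the failure"
    if status is TrainingJobStatus.STOPPED:
        return "the job was stopped manually"
    return "wait and check the status again later"


status = TrainingJobStatus("Failed")  # parse the status string shown in the console
print(is_terminal(status), "->", next_step(status))
```

Keeping the statuses in an `Enum` means a typo in a status string raises a `ValueError` instead of silently falling through to the "wait" branch.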

Viewing Training Metrics

For a training job in the Completed state, click the job name and view the training metrics on the Train Result tab.

| Model | Training Metric | Description |
|---|---|---|
| Third-party large model | Training loss | Training loss measures the difference between the model's predicted outputs and the actual (target) outputs. In general, a lower value indicates that the model fits the training data better. A healthy loss curve trends downward as training proceeds, possibly with some step-to-step fluctuation, and gradually converges to a low, stable value. |

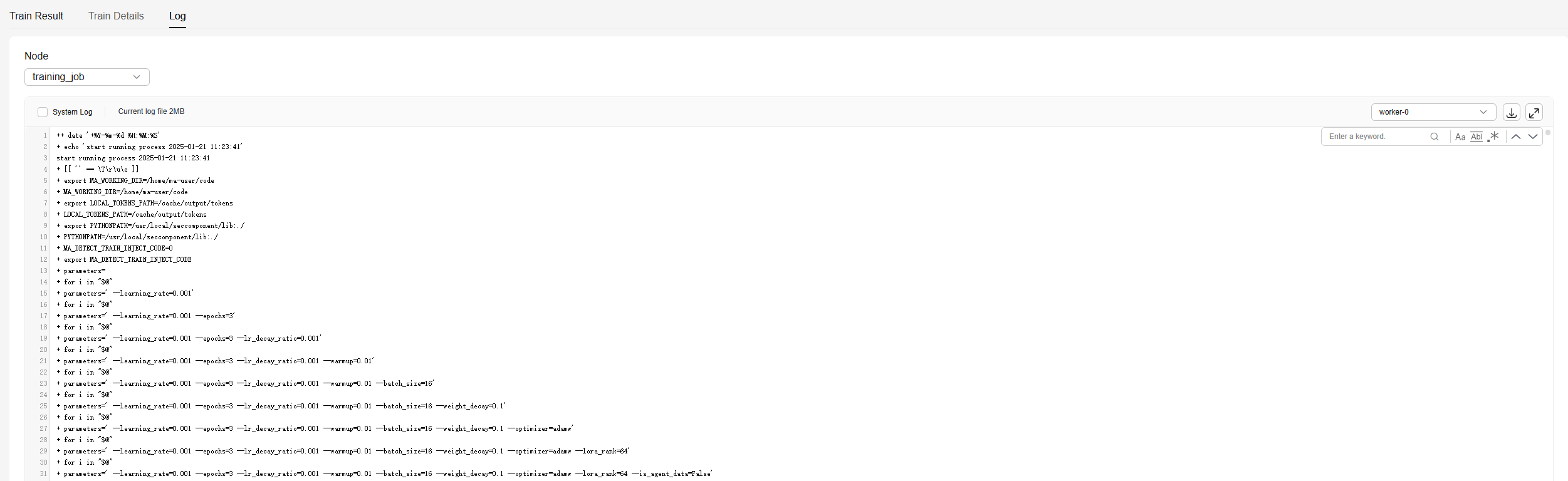
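Real loss curves usually fluctuate from step to step, so comparing averages over windows is a practical way to check the downward trend and convergence described for the training loss. The sketch below assumes you have the per-step training loss values as a Python list; how to export them from the Train Result tab is not described here, so a synthetic series stands in for real data. The function names, the window size of 5, and the 1% relative tolerance are illustrative assumptions, not platform settings.

```python
from statistics import mean


def is_trending_down(losses: list[float], window: int = 5) -> bool:
    """Return True if the mean of the last `window` values is below the mean
    of the first `window` values. Averaging tolerates step-to-step noise."""
    if len(losses) < 2 * window:
        raise ValueError("need at least 2 * window loss values")
    return mean(losses[-window:]) < mean(losses[:window])


def has_converged(losses: list[float], window: int = 5, rel_tol: float = 0.01) -> bool:
    """Return True if the windowed mean changed by at most rel_tol (relative)
    between the last two windows. rel_tol is an illustrative threshold."""
    if len(losses) < 2 * window:
        return False
    previous = mean(losses[-2 * window:-window])
    latest = mean(losses[-window:])
    return abs(previous - latest) <= rel_tol * abs(previous)


# Synthetic example: exponential decay toward 0.5 with small alternating noise.
losses = [0.5 + 1.5 * 0.8 ** step + (0.01 if step % 2 else -0.01) for step in range(40)]
print(is_trending_down(losses))    # True
print(has_converged(losses))       # True
print(has_converged(losses[:10]))  # False: still dropping quickly
```

A curve whose windowed mean rises, or that keeps dropping sharply at the end of training, would fail these checks and may be worth a closer look in the logs.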

Obtaining Training Logs

Click the name of a training job and view the logs generated during training on the Log tab.

If a training job behaves abnormally or fails, use the training logs to locate the cause.

You can filter training logs by training phase. You can also filter them by worker node: during distributed training, the job is split across multiple worker nodes that run in parallel, and each node processes a portion of the data or performs a specific computing task. For example, worker-0 is the first worker node.
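If you have a copy of the training logs as text, a short script can reproduce the per-worker filtering offline and help spot failure messages. This is a minimal sketch that assumes a hypothetical log format in which every line is prefixed with the worker name, such as `[worker-0]`; the actual log format may differ, so adjust `WORKER_PATTERN` accordingly. The keywords in `ERROR_KEYWORDS` are illustrative only, and all names here are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical format: each line starts with "[worker-N]". Adjust as needed.
WORKER_PATTERN = re.compile(r"^\[(worker-\d+)\]\s?(.*)$")

# Illustrative keywords that often appear in failure messages.
ERROR_KEYWORDS = ("error", "traceback", "exception", "out of memory")


def group_by_worker(lines: list[str]) -> dict[str, list[str]]:
    """Group log lines by worker node; lines without a worker tag go under 'unknown'."""
    groups: dict[str, list[str]] = defaultdict(list)
    for line in lines:
        match = WORKER_PATTERN.match(line)
        if match:
            groups[match.group(1)].append(match.group(2))
        else:
            groups["unknown"].append(line)
    return dict(groups)


def find_error_lines(lines: list[str]) -> list[str]:
    """Return the lines that contain any of ERROR_KEYWORDS (case-insensitive)."""
    return [line for line in lines if any(k in line.lower() for k in ERROR_KEYWORDS)]


sample = [
    "[worker-0] step 10 loss=1.23",
    "[worker-1] step 10 loss=1.25",
    "[worker-1] RuntimeError: CUDA out of memory",
]
by_worker = group_by_worker(sample)
print(find_error_lines(by_worker["worker-1"]))  # ['RuntimeError: CUDA out of memory']
```

Grouping first and searching second mirrors the console workflow: narrow the logs to one worker node, then look for the first error it reported.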
