Creating a DeepSeek Model Training Job

Pre-training

To create a DeepSeek model pre-training job, perform the following steps:

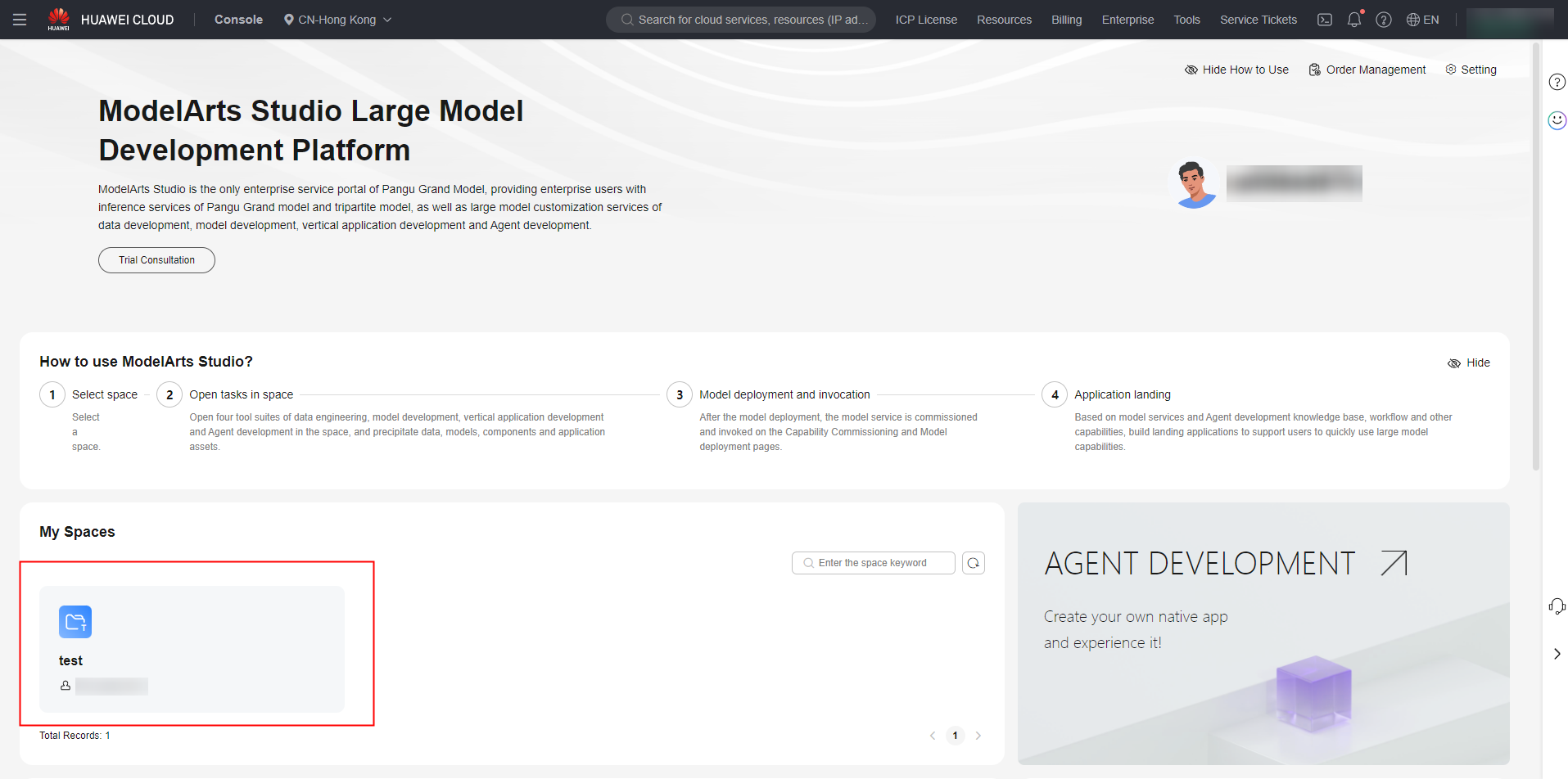

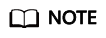

- Log in to ModelArts Studio Large Model Development Platform. In the My Spaces area, click the required workspace.

Figure 1 My Spaces

- In the navigation pane, choose Model Development > Model Training. Click Create Training Job in the upper right corner.

- On the Create Training Job page, set training parameters by referring to Table 1.

Table 1 Parameters for pre-training the DeepSeek model

Category

Training Parameter

Description

Training configuration

Select Model

You can modify the following information:

- Sources: Select Model Square.

- Type: Select NLP and select the base model and version (DeepSeek-V3-32K or DeepSeek-R1-32K) used for training.

Type

Select Pre-training.

Advanced Settings > checkpoints

checkpoints: During a model training job, checkpoints store the model weights and training state.

- Close: Checkpoints are not saved, so training cannot be resumed from a checkpoint.

- Automatic: All checkpoints generated during training are saved automatically.

- Custom: The number of checkpoints you specify is saved.
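The three modes can be pictured as a retention rule applied to the checkpoints written during training. The following Python sketch is illustrative only: checkpoints_to_keep is a hypothetical helper, and it assumes that Custom keeps the most recent N checkpoints, which may not match how the platform actually selects them.

```python
from typing import List, Optional

def checkpoints_to_keep(saved_steps: List[int], mode: str,
                        max_keep: Optional[int] = None) -> List[int]:
    """Return the training steps whose checkpoints are retained.
    ASSUMPTION: "Custom" keeps the newest `max_keep` checkpoints."""
    if mode == "Close":
        return []                         # nothing saved, so no resume point
    if mode == "Automatic":
        return list(saved_steps)          # every checkpoint is kept
    if mode == "Custom":
        if max_keep is None or max_keep < 1:
            raise ValueError("Custom mode needs max_keep >= 1")
        return sorted(saved_steps)[-max_keep:]   # newest max_keep checkpoints
    raise ValueError(f"unknown mode: {mode}")

steps = [100, 200, 300, 400]
print(checkpoints_to_keep(steps, "Close"))      # []
print(checkpoints_to_keep(steps, "Automatic"))  # [100, 200, 300, 400]
print(checkpoints_to_keep(steps, "Custom", 2))  # [300, 400]
```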

Training Parameters

epochs

Number of complete passes over the training dataset during model training.

learning_rate

It determines the step size of each parameter update. If the learning rate is too high, training may oscillate or fail to converge. If it is too low, the model can take a long time to converge.
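To see why the step size matters, the following toy Python example runs plain gradient descent on f(w) = w² (gradient 2w), where each update multiplies w by (1 - 2 × learning_rate). It is a simplified illustration, not how the platform trains DeepSeek models; run_gd is a hypothetical helper.

```python
def run_gd(learning_rate: float, w0: float = 1.0, steps: int = 20) -> float:
    """Gradient descent on f(w) = w**2, whose gradient is 2 * w."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * 2 * w   # w <- w - learning_rate * f'(w)
    return w

for lr in (1.1, 0.01, 0.4):
    print(f"learning_rate={lr}: |w| after 20 steps = {abs(run_gd(lr)):.3g}")
```

With learning_rate = 1.1, |w| grows at every step (divergence); with 0.01 it shrinks only slowly; with 0.4 it approaches the minimum at 0 within a few steps.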

batch_size

It controls the number of samples used in one iteration of model training. A larger batch size leads to more stable gradients but consumes more GPU memory. This may cause out-of-memory (OOM) errors due to hardware limitations and extend the training time.
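batch_size and epochs together determine how many optimizer steps a job runs, which is useful when choosing warmup or save_checkpoint_steps. A minimal Python sketch, assuming one step processes one batch, the last partial batch of each epoch is kept, and there is no gradient accumulation (the platform's exact step accounting is not documented here); total_training_steps is a hypothetical helper.

```python
import math

def total_training_steps(num_samples: int, batch_size: int, epochs: int) -> int:
    """Number of optimizer steps for a job (ASSUMED accounting, see above)."""
    if num_samples < 1 or batch_size < 1 or epochs < 1:
        raise ValueError("num_samples, batch_size and epochs must be >= 1")
    # ceil keeps the final, smaller batch of each epoch
    steps_per_epoch = math.ceil(num_samples / batch_size)
    return steps_per_epoch * epochs

# Hypothetical dataset of 10,000 records trained for 3 epochs
print(total_training_steps(10_000, 32, 3))   # 313 steps per epoch x 3 = 939
print(total_training_steps(10_000, 128, 3))  # larger batch, fewer steps: 79 x 3 = 237
```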

sequence_length

It specifies the maximum length of a single training data record. Data that exceeds the length will be truncated during training.

warmup

It specifies the length of the warm-up phase as a proportion of the entire training process. During this phase, the learning rate starts low and rises gradually, allowing the model to stabilize. After warm-up, training continues at the preset (higher) learning rate, which accelerates convergence and improves performance.

lr_decay_ratio

It controls how far the learning rate decays, helping the model converge more stably. The learning rate never drops below learning_rate × lr_decay_ratio. To keep the learning rate from decaying, set this parameter to 1.

weight_decay

It is a regularization method that reduces the size of model parameters to prevent model overfitting and improve the model generalization capability.
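The penalty form of weight decay fits in a few lines. This Python sketch uses a classic L2 penalty and the matching plain SGD update; it is an assumption for illustration, since many optimizers (for example AdamW) apply decoupled weight decay instead, and this page does not state which form the platform uses. Both function names are hypothetical.

```python
from typing import Sequence

def loss_with_weight_decay(data_loss: float, weights: Sequence[float],
                           weight_decay: float) -> float:
    """Data loss plus an L2 penalty 0.5 * weight_decay * sum(w^2)."""
    penalty = 0.5 * weight_decay * sum(w * w for w in weights)
    return data_loss + penalty

def sgd_step(w: float, grad: float, lr: float, weight_decay: float) -> float:
    """One SGD update. The gradient of the L2 penalty adds weight_decay * w,
    which shrinks every weight a little toward zero at each step."""
    return w - lr * (grad + weight_decay * w)

print(loss_with_weight_decay(1.0, [1.0, 2.0], 0.1))  # 1.0 + 0.5 * 0.1 * (1 + 4) = 1.25
print(sgd_step(1.0, 0.0, 0.1, 0.1))  # about 0.99: with zero data gradient, decay alone shrinks w
```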

checkpoint_save_strategy

It can be set to save_checkpoint_steps or save_checkpoint_epoch. It specifies whether to save checkpoint files based on the number of iteration steps or based on the number of epochs during training.

save_checkpoint_steps or save_checkpoint_epoch

save_checkpoint_steps specifies the interval, in training steps, at which a checkpoint file is saved during training.

save_checkpoint_epoch specifies the interval, in epochs, at which a checkpoint file is saved during training.
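The two strategies differ only in the unit of the save interval. A Python sketch, assuming the value entered for save_checkpoint_steps or save_checkpoint_epoch is an interval and that a checkpoint is written at the end of the matching step or epoch; checkpoint_steps is a hypothetical helper, and steps_per_epoch can be estimated from the dataset size and batch_size.

```python
from typing import List

def checkpoint_steps(strategy: str, interval: int,
                     steps_per_epoch: int, epochs: int) -> List[int]:
    """Global step numbers (1-based) after which a checkpoint is written.
    ASSUMPTION: `interval` is the value entered for the chosen strategy."""
    if interval < 1:
        raise ValueError("interval must be >= 1")
    total_steps = steps_per_epoch * epochs
    if strategy == "save_checkpoint_steps":
        # every `interval` steps, counted across epochs
        return list(range(interval, total_steps + 1, interval))
    if strategy == "save_checkpoint_epoch":
        # at the end of every `interval`-th epoch
        return [e * steps_per_epoch for e in range(interval, epochs + 1, interval)]
    raise ValueError(f"unknown strategy: {strategy}")

# Hypothetical job: 313 steps per epoch, 3 epochs
print(checkpoint_steps("save_checkpoint_steps", 100, 313, 3))  # [100, 200, ..., 900]
print(checkpoint_steps("save_checkpoint_epoch", 1, 313, 3))    # [313, 626, 939]
```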


Training data configuration

Training set

Select the training dataset.

Resource Disposition

Billing mode

Billing mode of the current training job.

Training Unit

Select the number of training units required for training the model.

The minimum number of training units required for the current training is displayed.

Single-instance training units

Select the number of training units for a single instance.

Number of instances

Select the number of instances.
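The three resource settings are related. The following Python sketch assumes, without confirmation from this page, that the total number of training units equals the single-instance training units multiplied by the number of instances, and that this total must reach the minimum displayed on the page; the function name and values are hypothetical.

```python
def total_training_units(units_per_instance: int, num_instances: int,
                         minimum_required: int) -> int:
    """Total units under the ASSUMED rule total = per-instance units x instances."""
    if units_per_instance < 1 or num_instances < 1:
        raise ValueError("both values must be >= 1")
    total = units_per_instance * num_instances
    if total < minimum_required:
        raise ValueError(
            f"{total} training units selected, but at least {minimum_required} are required")
    return total

print(total_training_units(8, 4, 32))  # 32
```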

Subscription reminder

Subscription reminder

After this function is enabled, the system sends SMS or email notifications to users when the job status changes.

Publish model

Enable automatic publishing

After this function is enabled, the final product generated after model training is automatically published as a workspace asset so that the model can be compressed, deployed, evaluated, and shared with other workspaces.

Basic Information

Name

Name of a training job.

Description

Description of the training job.

- Click Create Now.

- After the pre-training job is created, the Model Training page is displayed, where you can view the job status at any time.

Full Fine-Tuning

To create a DeepSeek model full fine-tuning job, perform the following steps:

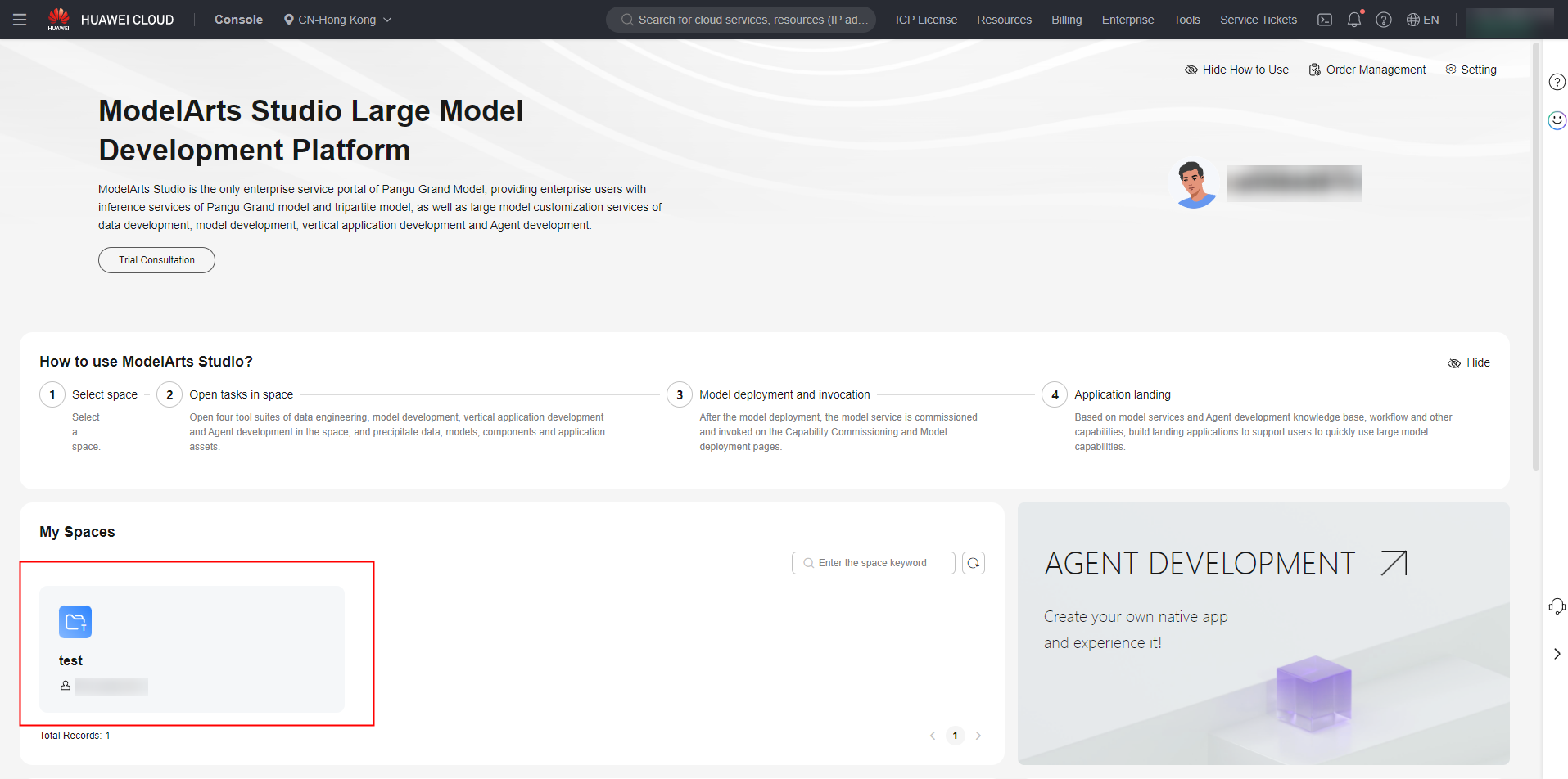

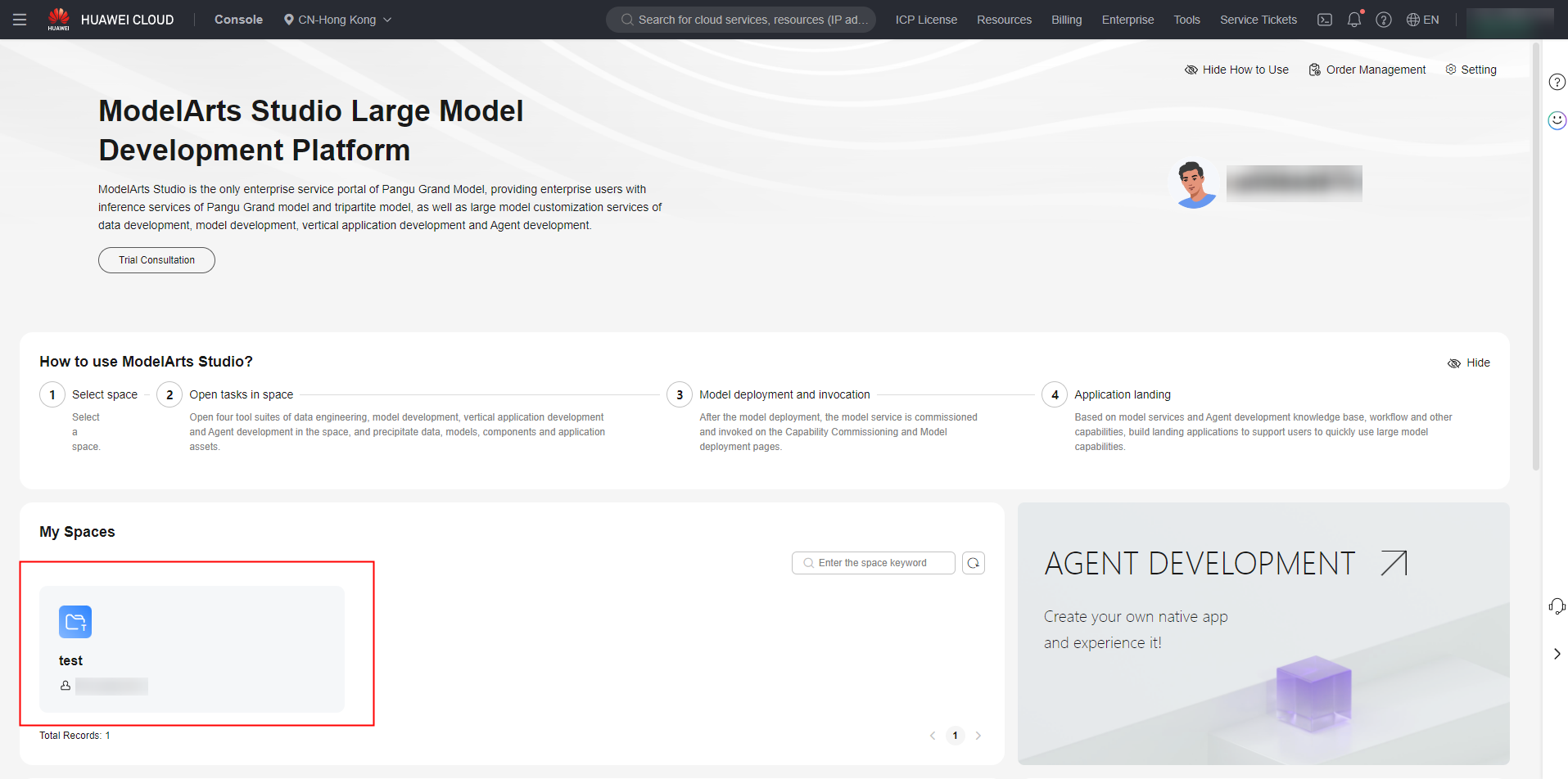

- Log in to ModelArts Studio Large Model Development Platform. In the My Spaces area, click the required workspace.

Figure 2 My Spaces

- In the navigation pane, choose Model Development > Model Training. Click Create Training Job in the upper right corner.

- On the Create Training Job page, set training parameters by referring to Table 2.

Table 2 Parameters for full fine-tuning the DeepSeek model

Category

Training Parameter

Description

Training configuration

Select Model

You can modify the following information:

- Sources: Select Model Square.

- Type: Select NLP and select the base model and version (DeepSeek-V3-32K or DeepSeek-R1-32K) used for training.

Type

Select Supervised fine-tuning.

Training Objective

Select Full fine-tuning.

- Full fine-tuning: When supervised fine-tuning is performed on a model, all parameters of the model are updated.

Advanced Settings > checkpoints

checkpoints: During a model training job, checkpoints store the model weights and training state.

- Close: Checkpoints are not saved, so training cannot be resumed from a checkpoint.

- Automatic: All checkpoints generated during training are saved automatically.

- Custom: The number of checkpoints you specify is saved.

Training Parameters

epochs

Number of complete passes over the training dataset during model training.

learning_rate

It determines the step size of each parameter update. If the learning rate is too high, training may oscillate or fail to converge. If it is too low, the model can take a long time to converge.

batch_size

It controls the number of samples used in one iteration of model training. A larger batch size leads to more stable gradients but consumes more GPU memory. This may cause out-of-memory (OOM) errors due to hardware limitations and extend the training time.

sequence_length

It specifies the maximum length of a single training data record. Data that exceeds the length will be truncated during training.

warmup

It specifies the length of the warm-up phase as a proportion of the entire training process. During this phase, the learning rate starts low and rises gradually, allowing the model to stabilize. After warm-up, training continues at the preset (higher) learning rate, which accelerates convergence and improves performance.

lr_decay_ratio

It controls how far the learning rate decays, helping the model converge more stably. The learning rate never drops below learning_rate × lr_decay_ratio. To keep the learning rate from decaying, set this parameter to 1.

weight_decay

It is a regularization method that reduces the size of model parameters to prevent model overfitting and improve the model generalization capability.

checkpoint_save_strategy

It can be set to save_checkpoint_steps or save_checkpoint_epoch. It specifies whether to save checkpoint files based on the number of iteration steps or based on the number of epochs during training.

save_checkpoint_steps or save_checkpoint_epoch

save_checkpoint_steps specifies the interval, in training steps, at which a checkpoint file is saved during training.

save_checkpoint_epoch specifies the interval, in epochs, at which a checkpoint file is saved during training.


Data configuration

Training set

Select the training dataset.

Resource Disposition

Billing mode

Billing mode of the current training job.

Training Unit

Select the number of training units required for training the model.

The minimum number of training units required for the current training is displayed.

Single-instance training units

Select the number of training units for a single instance.

Number of instances

Select the number of instances.

Subscription reminder

Subscription reminder

After this function is enabled, the system sends SMS or email notifications to users when the job status changes.

Publish model

Enable automatic publishing

If this function is disabled, you need to manually publish the model to the model asset library after training is complete.

If this function is enabled, configure the visibility, model name, and description.

Basic Information

Name

Name of a training job.

Description

Description of the training job.

The default values of training parameters vary depending on the model. The default values displayed on the console page take precedence.

- Click Create Now.

- After a fine-tuning job is created, the Model Training page is displayed. You can view the job status at any time.

LoRA Fine-Tuning

To create a DeepSeek model LoRA fine-tuning job, perform the following steps:

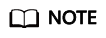

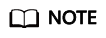

- Log in to ModelArts Studio Large Model Development Platform. In the My Spaces area, click the required workspace.

Figure 3 My Spaces

- In the navigation pane, choose Model Development > Model Training. Click Create Training Job in the upper right corner.

- On the Create Training Job page, set training parameters by referring to Table 3.

Table 3 Parameters for DeepSeek model LoRA fine-tuning

Category

Training Parameter

Description

Training configuration

Select Model

You can modify the following information:

- Sources: Select Model Square.

- Type: Select NLP and select the base model and version (DeepSeek-V3-32K or DeepSeek-R1-32K) used for training.

Type

Select Supervised fine-tuning.

Training Objective

Select LoRA fine-tuning.

- LoRA fine-tuning: During supervised fine-tuning, the pre-trained model's weights are frozen. Trainable low-rank decomposition matrices are added alongside the weight matrices in the self-attention module, and the original weights are retained unchanged. During training, only these low-rank parameters are updated.
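The LoRA update can be written as h = W x + B (A x), where the frozen d × k weight W is kept and only the low-rank factors A (r × k) and B (d × r) are trained. The following pure-Python sketch is illustrative: matvec and lora_forward are hypothetical helpers, the optional scale factor follows the common LoRA convention rather than any documented platform setting, and real implementations operate on tensors inside each attention projection.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]

def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector,
                 scale: float = 1.0) -> Vector:
    """h = W x + scale * B (A x).
    W (d x k) is frozen; only A (r x k) and B (d x r) receive gradient updates."""
    base = matvec(W, x)               # frozen pre-trained path
    delta = matvec(B, matvec(A, x))   # low-rank path: k -> r -> d
    return [h + scale * dh for h, dh in zip(base, delta)]

W = [[1.0, 0.0], [0.0, 1.0]]   # frozen 2 x 2 weight (d = k = 2)
A = [[1.0, 1.0]]               # rank r = 1
x = [2.0, 3.0]
print(lora_forward(W, A, [[0.0], [0.0]], x))  # B = 0: same as W x -> [2.0, 3.0]
print(lora_forward(W, A, [[0.5], [0.0]], x))  # trained B shifts the output -> [4.5, 3.0]
```

Initializing B to zeros, as in the original LoRA method, makes the adapted layer reproduce the frozen layer exactly at the start of fine-tuning.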

Training Parameters

epochs

Number of complete passes over the training dataset during model training.

learning_rate

It determines the step size of each parameter update. If the learning rate is too high, training may oscillate or fail to converge. If it is too low, the model can take a long time to converge.

batch_size

It controls the number of samples used in one iteration of model training. A larger batch size leads to more stable gradients but consumes more GPU memory. This may cause out-of-memory (OOM) errors due to hardware limitations and extend the training time.

sequence_length

It specifies the maximum length of a single training data record. Data that exceeds the length will be truncated during training.

warmup

It specifies the length of the warm-up phase as a proportion of the entire training process. During this phase, the learning rate starts low and rises gradually, allowing the model to stabilize. After warm-up, training continues at the preset (higher) learning rate, which accelerates convergence and improves performance.

lr_decay_ratio

It controls how far the learning rate decays, helping the model converge more stably. The learning rate never drops below learning_rate × lr_decay_ratio. To keep the learning rate from decaying, set this parameter to 1.

weight_decay

Adds a penalty term related to the model weight size to the loss function, encouraging the model to keep small weights to prevent overfitting or overly complex models.

Rank of the LoRA matrix

The rank of the LoRA matrices determines the capacity of the low-rank update. A larger rank strengthens the model's representation capability but increases training time. A smaller rank reduces the number of trainable parameters and the risk of overfitting.
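The effect of the rank on model size is easy to quantify: for a d × k weight matrix, LoRA trains A (r × k) and B (d × r), that is r × (d + k) parameters instead of the d × k updated by full fine-tuning. The 4096 × 4096 shape below is a hypothetical example, not the actual layer shape of DeepSeek-V3 or DeepSeek-R1, and lora_trainable_params is a hypothetical helper.

```python
def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Trainable parameters of one LoRA adapter: A is r x k, B is d x r."""
    return r * (d + k)

d = k = 4096          # hypothetical layer shape
full = d * k          # 16,777,216 weights updated by full fine-tuning
for r in (8, 64):
    lora = lora_trainable_params(d, k, r)
    print(f"rank {r}: {lora:,} trainable params ({lora / full:.2%} of full)")
```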

Data configuration

Training set

Select the training dataset.

Resource Disposition

Billing mode

Billing mode of the current training job.

Training Unit

Select the number of training units required for training the model.

The minimum number of training units required for the current training is displayed.

Single-instance training units

Select the number of training units for a single instance.

Number of instances

Select the number of instances.

Subscription reminder

Subscription reminder

After this function is enabled, the system sends SMS or email notifications to users when the job status changes.

Publish model

Enable automatic publishing

If this function is disabled, you need to manually publish the model to the model asset library after training is complete.

If this function is enabled, configure the visibility, model name, and description.

Basic Information

Name

Name of a training job.

Description

Description of the training job.

The default values of training parameters vary depending on the model. The default values displayed on the console page take precedence.

- Click Create Now.

- After a fine-tuning job is created, the Model Training page is displayed. You can view the job status at any time.

QLoRA Fine-Tuning

To create a DeepSeek model QLoRA fine-tuning job, perform the following steps:

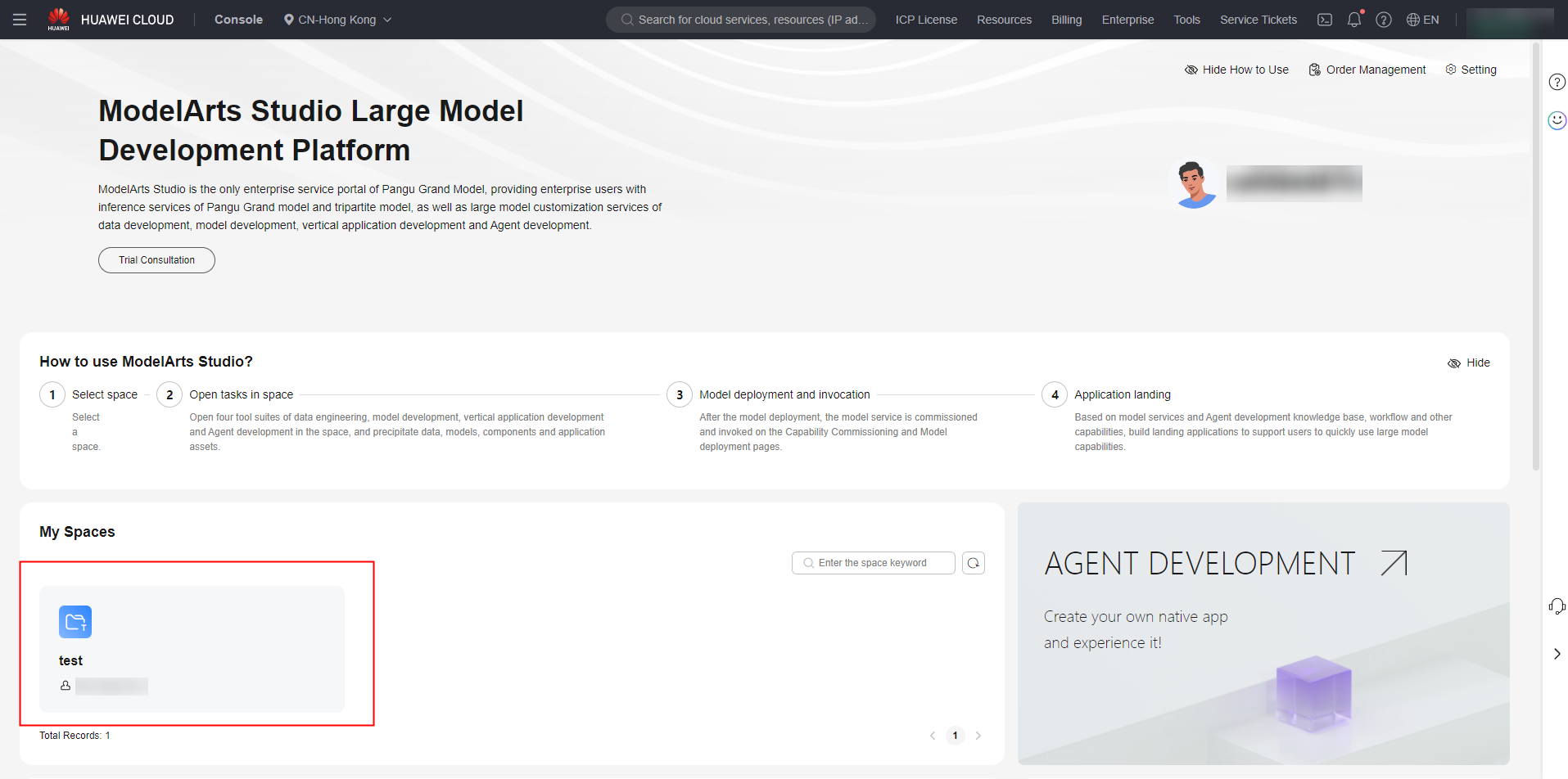

- Log in to ModelArts Studio Large Model Development Platform. In the My Spaces area, click the required workspace.

Figure 4 My Spaces

- In the navigation pane, choose Model Development > Model Training. Click Create Training Job in the upper right corner.

- On the Create Training Job page, set training parameters by referring to Table 4.

Table 4 Parameters for DeepSeek model QLoRA fine-tuning

Category

Training Parameter

Description

Training configuration

Select Model

You can modify the following information:

- Sources: Select Model Square.

- Type: Select NLP and select the base model and version (DeepSeek-V3-32K or DeepSeek-R1-32K) used for training.

Type

Select Supervised fine-tuning.

Training Objective

Select QLoRA fine-tuning.

- QLoRA fine-tuning: QLoRA is an efficient fine-tuning approach for LLMs. By combining quantization and low-rank adaptation, it significantly reduces GPU memory usage while maintaining model performance, making it suitable for resource-constrained environments.
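QLoRA stores the frozen base weights in 4-bit form and dequantizes them on the fly, while the LoRA matrices are trained in higher precision. The following Python sketch shows the memory trade-off with a simple symmetric absmax 4-bit scheme. This is a deliberate simplification (QLoRA itself uses the NF4 data type with block-wise scales), and all function names are hypothetical.

```python
from typing import List, Tuple

def quantize_4bit(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to signed 4-bit integer levels in [-7, 7] with one absmax scale."""
    absmax = max(abs(w) for w in weights) or 1.0   # avoid division by zero
    scale = absmax / 7
    levels = [max(-7, min(7, round(w / scale))) for w in weights]
    return levels, scale

def dequantize_4bit(levels: List[int], scale: float) -> List[float]:
    """Recover approximate floats; the rounding error per weight is at most scale / 2."""
    return [q * scale for q in levels]

def weight_memory_gb(num_params: int, bits: int) -> float:
    """Storage for the weights alone, ignoring scales and other overhead."""
    return num_params * bits / 8 / 1e9

levels, scale = quantize_4bit([0.7, -0.3, 0.12, 0.0])
print(levels)                          # [7, -3, 1, 0]
print(dequantize_4bit(levels, scale))  # approximately [0.7, -0.3, 0.1, 0.0]
# Hypothetical 1-billion-parameter model: 16-bit vs 4-bit weight storage
print(weight_memory_gb(1_000_000_000, 16), weight_memory_gb(1_000_000_000, 4))  # 2.0 0.5
```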

Training Parameters

epochs

Number of complete passes over the training dataset during model training.

learning_rate

It determines the step size of each parameter update. If the learning rate is too high, training may oscillate or fail to converge. If it is too low, the model can take a long time to converge.

batch_size

It controls the number of samples used in one iteration of model training. A larger batch size leads to more stable gradients but consumes more GPU memory. This may cause out-of-memory (OOM) errors due to hardware limitations and extend the training time.

sequence_length

It specifies the maximum length of a single training data record. Data that exceeds the length will be truncated during training.

warmup

It specifies the length of the warm-up phase as a proportion of the entire training process. During this phase, the learning rate starts low and rises gradually, allowing the model to stabilize. After warm-up, training continues at the preset (higher) learning rate, which accelerates convergence and improves performance.

lr_decay_ratio

It controls how far the learning rate decays, helping the model converge more stably. The learning rate never drops below learning_rate × lr_decay_ratio. To keep the learning rate from decaying, set this parameter to 1.

weight_decay

It is a regularization method that reduces the size of model parameters to prevent model overfitting and improve the model generalization capability.

Rank of the LoRA matrix

The rank of the LoRA matrices determines the capacity of the low-rank update. A larger rank strengthens the model's representation capability but increases training time. A smaller rank reduces the number of trainable parameters and the risk of overfitting.

Data configuration

Training set

Select the training dataset.

Resource Disposition

Billing mode

Billing mode of the current training job.

Training Unit

Select the number of training units required for training the model.

The minimum number of training units required for the current training is displayed.

Single-instance training units

Select the number of training units for a single instance.

Number of instances

Select the number of instances.

Subscription reminder

Subscription reminder

After this function is enabled, the system sends SMS or email notifications to users when the job status changes.

Publish model

Enable automatic publishing

If this function is disabled, you need to manually publish the model to the model asset library after training is complete.

If this function is enabled, configure the visibility, model name, and description.

Basic Information

Name

Name of a training job.

Description

Description of the training job.

The default values of training parameters vary depending on the model. The default values displayed on the console page take precedence.

- Click Create Now.

- After a fine-tuning job is created, the Model Training page is displayed. You can view the job status at any time.
