Managing Lite Cluster Node Pools

To help you better manage nodes in a Kubernetes cluster, ModelArts provides node pools. A node pool consists of one or more nodes, allowing you to set up a group of nodes with specific configurations.

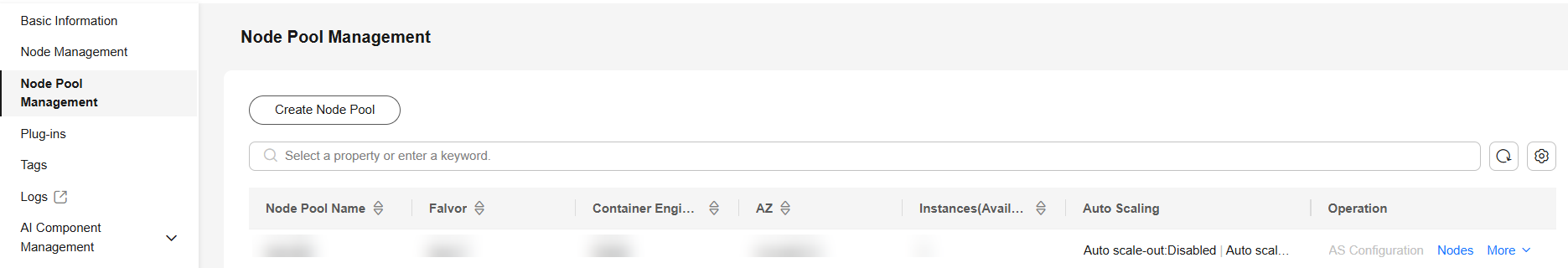

Accessing the Node Pool Management Page

- Log in to the ModelArts console. In the navigation pane on the left, choose Lite Cluster under Resource Management.

- On the displayed page, click the Lite Cluster name to access its details page.

- In the navigation pane on the left, choose Node Pool Management. You can create, update, and delete node pools.

Figure 1 Node pool management

Creating a Node Pool

- If you need more node pools, click Create Node Pool to create one. Configure the parameters by referring to Table 1.

In CN East 2, each Lite Cluster can contain a maximum of 15 node pools. In CN Southwest Guiyang1, each Lite Cluster can contain a maximum of 50 node pools. In other regions, each Lite Cluster can contain a maximum of 10 node pools.

Table 1 Node pool parameters

Parameter

Description

Node Pool Name

Enter a custom node pool name.

Only lowercase letters, digits, and hyphens (-) are allowed. The value must start with a lowercase letter and cannot end with a hyphen (-) or -default.

Instance Specifications

Choose CPU, GPU, or Ascend as needed.

- CPU: a general-purpose compute architecture with low computing performance, suitable for lightweight general tasks.

- GPU: a parallel compute architecture with high computing performance, suitable for parallel tasks such as deep learning training and image processing. Supports multi-GPU distributed training.

- Ascend: a dedicated AI architecture with extremely high computing performance, suitable for AI tasks such as model training and inference acceleration. Supports multi-node distributed deployment.

Driver Version

You can select a driver version when the instance flavor is Snt9b or D310P.

Operating System

Specify the OS of the instance.

AZ Allocation

Select Automatic or Manual as required. An AZ is a physical region where resources use independent power supplies and networks. AZs are physically isolated but interconnected over an intranet.

- Automatic: AZs are automatically allocated.

- Manual: Specify AZs for resource pool instances. To ensure system disaster recovery (DR), deploy all instances in the same AZ. You can set the number of instances in an AZ.

Target Instances

Set the number of nodes in the node pool. More nodes provide more computing capacity.

If AZ Allocation is set to Manual, you do not need to configure Target Instances.

Do not create more than 30 instances at a time. Otherwise, creation may fail due to request throttling.

The total number of instances cannot exceed the cluster scale of the node pool. If the cluster scale of the node pool is set to the default value, the total number of instances cannot exceed 50. For details, see the console.

You can purchase instances by rack for certain specifications. The total number of instances is the number of racks multiplied by the number of instances per rack. Purchasing a full rack allows you to isolate tasks physically, preventing communication conflicts and maintaining linear computing performance as task scale increases. All instances in a rack must be created or deleted together.

You can purchase Snt9b23 instances with a custom step. The total number of instances is the number of instances multiplied by the step. The step is the minimum unit of each adjustment of the fault reporting quota. In the node binding scenario, nodes in each step are considered as a whole and belong to the same batch.

Virtual Private Cloud

Defaults to the VPC of the cluster and cannot be changed.

Kubernetes Label

Key/value pairs attached to Kubernetes objects such as pods. You can add a maximum of 20 labels. Labels distinguish nodes. Combined with workload affinity settings, they let you schedule pods to specified nodes.

Taint

This parameter is left blank by default. Add taints to nodes to configure anti-affinity, so that pods are not scheduled to those nodes unless they tolerate the taints. A maximum of 20 taints can be added per node.

Container Engine

The container engine, one of the most important components of Kubernetes, manages the lifecycle of images and containers. The kubelet interacts with the container runtime through the Container Runtime Interface (CRI). Docker and Containerd are supported. For details about the differences between Containerd and Docker, see Container Engines.

The CCE cluster version determines the available container engines. If it is earlier than 1.23, only Docker is supported. If it is 1.27 or later, only Containerd is supported. For versions from 1.23 to earlier than 1.27, both Containerd and Docker are supported.

Node Subnet

Select a subnet in the same VPC. The node pool is created in this subnet.

Associate Security Group

Security groups used by the nodes created in the node pool. You can select a maximum of four. Certain ports must be open in the node security group to allow communication between nodes. If no security group is associated, the cluster's default security group rules apply.

Resource Tag

You can add resource tags to classify resources.

Post-installation Command

Enter the script commands. The script cannot contain Chinese characters and must be submitted Base64-encoded. The encoded script cannot exceed 2,048 characters. The script runs after the Kubernetes software is installed and does not affect the installation.

Do not use the reboot command in the post-installation script to restart the system immediately. If a restart is required, use shutdown -r 1, which restarts the system after a one-minute delay.

Node Billing Mode

When you add nodes, you can enable this function to specify the billing mode or validity period for them.

If this parameter is not specified, new nodes use the same billing mode as the resource pool. To use a different billing mode, specify it here. For example, you can create pay-per-use nodes in a yearly/monthly resource pool.

If yearly/monthly nodes are to be created, select whether to enable auto-renewal. If auto-renewal is enabled, the nodes to be created will be automatically renewed upon expiration.

For a yearly/monthly node pool, if the new nodes are also billed on a yearly/monthly basis, they cannot expire later than the original node pool. For example, if the original node pool expires in six months, the new nodes can be purchased for at most six months.

- Confirm the configurations. Hover the cursor over the fees to confirm the details. Then, click Confirm.

- In the displayed dialog box, confirm whether to enable auto-renewal for the new nodes, and click OK.

You can view the created node pool on the node pool management page.
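You can check several of the rules in Table 1 before you submit a creation request. The following minimal Python sketch assumes you gather the planned values yourself. All function names are hypothetical helpers, not part of a ModelArts SDK or API. The sketch covers only the rules stated in Table 1:

- The name length limit is not checked.
- The Chinese-character check covers only the CJK Unified Ideographs block.
- The reboot check only looks for reboot at the start of a line.
- The cluster scale of 50 applies only when the default scale is used.

```python
import base64
import re

# Hypothetical pre-check helpers based on Table 1; not a ModelArts API.
# Limits are taken from Table 1; check the console for the values that apply to you.
MAX_NEW_INSTANCES_PER_REQUEST = 30  # larger requests may fail due to throttling
DEFAULT_CLUSTER_SCALE = 50          # instance cap when the cluster scale is the default
MAX_ENCODED_SCRIPT_LENGTH = 2048    # Base64-encoded post-installation script

_NAME = re.compile(r"[a-z][a-z0-9-]*")
_CHINESE = re.compile(r"[\u4e00-\u9fff]")  # CJK Unified Ideographs only (approximation)


def is_valid_node_pool_name(name: str) -> bool:
    """Lowercase letters, digits, and hyphens; starts with a lowercase letter;
    does not end with '-' or '-default'. Length limits are not checked."""
    if not _NAME.fullmatch(name):
        return False
    return not (name.endswith("-") or name.endswith("-default"))


def rack_total(racks: int, instances_per_rack: int) -> int:
    """Total instances when purchasing by rack: racks x instances per rack."""
    return racks * instances_per_rack


def instance_warnings(new: int, existing: int = 0,
                      cluster_scale: int = DEFAULT_CLUSTER_SCALE) -> list[str]:
    """Return problems with adding `new` instances to a pool that has `existing`."""
    problems = []
    if new > MAX_NEW_INSTANCES_PER_REQUEST:
        problems.append(f"creating {new} instances at once may be throttled")
    if new + existing > cluster_scale:
        problems.append(f"{new + existing} instances exceed the cluster scale of {cluster_scale}")
    return problems


def container_engines(cce_version: str) -> list[str]:
    """Container engines available for a CCE version such as 'v1.25'.
    Only major.minor is compared."""
    major, minor = (int(p) for p in cce_version.lstrip("v").split(".")[:2])
    if (major, minor) < (1, 23):
        return ["Docker"]
    if (major, minor) >= (1, 27):
        return ["Containerd"]
    return ["Containerd", "Docker"]


def encode_post_install_script(script: str) -> str:
    """Base64-encode a post-installation script after applying the documented rules."""
    if _CHINESE.search(script):
        raise ValueError("the script cannot contain Chinese characters")
    # Heuristic: only catches 'reboot' at the start of a line.
    if re.search(r"^\s*reboot\b", script, re.MULTILINE):
        raise ValueError("use 'shutdown -r 1' instead of 'reboot'")
    encoded = base64.b64encode(script.encode("utf-8")).decode("ascii")
    if len(encoded) > MAX_ENCODED_SCRIPT_LENGTH:
        raise ValueError(f"encoded script has {len(encoded)} characters (limit 2048)")
    return encoded


if __name__ == "__main__":
    print(is_valid_node_pool_name("gpu-pool-1"))    # True
    print(is_valid_node_pool_name("pool-default"))  # False
    print(instance_warnings(rack_total(2, 8)))      # []
    print(container_engines("v1.25"))               # ['Containerd', 'Docker']
    print(encode_post_install_script("echo ready > /tmp/post-install.log\nshutdown -r 1\n"))
```

Each rule lives in its own function. If the console shows a different limit for your region or specification, you only need to update one constant or function. The rack size of 8 and the script contents in the usage lines are illustrative values only.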

Configuring Auto Scaling for a Node Pool

Nodes in a node pool can be automatically added or removed based on pod scheduling status and resource usage. Multiple scaling modes are supported for different scenarios, such as multi-AZ, multiple instance specifications, metric-based triggering, and periodic triggering.

To use auto scaling for a node pool, you need to install the cluster elastic engine plug-in first. For details, see Cluster Autoscaler.

Viewing the Node List

To view information about nodes in a node pool, click Nodes in the Operation column to view the node name, specifications, and AZ.

Updating a Node Pool

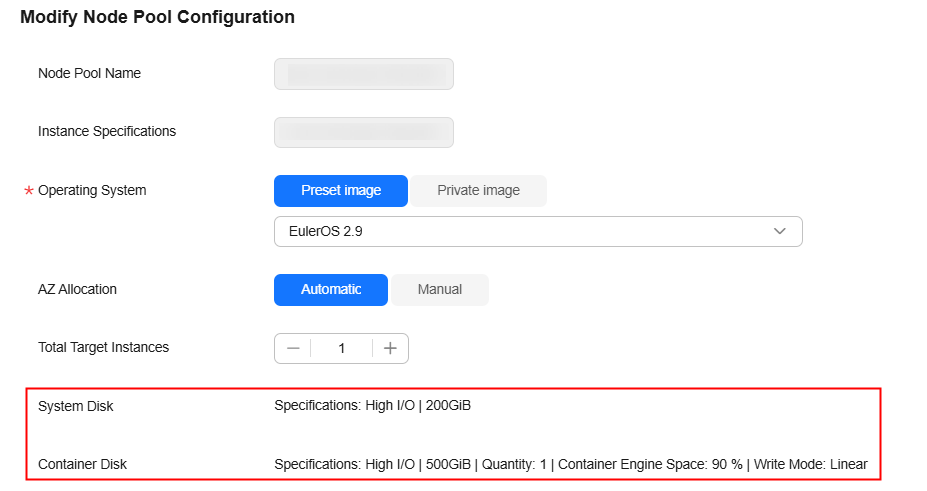

- Locate the target node pool and choose More > Modify Configuration in the Operation column. For details about the parameters, see Table 1.

Note the following:

- The total number of instances cannot exceed the cluster scale of the node pool. If the cluster scale of the node pool is set to the default value, the total number of instances cannot exceed 50. For details, see the console.

- When you update the node pool configuration, changes to the advanced configuration take effect only for new nodes. To also apply label and taint changes to existing nodes, select the Synchronization for Existing Nodes (labels and taints) or Synchronization for Existing Nodes (labels) check box.

The updated resource tag information in the node pool is synchronized to its nodes.

Figure 2 Updating a node pool

- Confirm the configurations. Hover the cursor over the fees to confirm the details. Then, click Confirm.

- In the displayed dialog box, confirm whether to enable auto-renewal for the new nodes, and click OK.

You can view the updated node pool on the node pool management page.
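Once a node pool carries Kubernetes labels and taints, workloads can be steered onto its nodes. The labels and taints can be set at creation or synchronized to existing nodes during an update. The following minimal Python sketch builds a standard Kubernetes Pod manifest that uses nodeSelector and tolerations. The pool=gpu-training label, the dedicated=training:NoSchedule taint, the busybox image, and the function name are all hypothetical. nodeSelector is the simplest form of node selection. Node affinity rules offer more flexible matching.

```python
import json


# Hypothetical helper; builds a plain Kubernetes Pod manifest as a dict.
def pod_for_node_pool(name: str, image: str,
                      labels: dict[str, str], taints: list[dict[str, str]]) -> dict:
    """Build a Pod that targets nodes carrying `labels` and tolerates `taints`.
    Each taint dict has 'key', 'value', and 'effect' entries."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # nodeSelector: only nodes that carry all of these labels are eligible.
            "nodeSelector": dict(labels),
            # tolerations: allow the pod onto nodes that carry these taints.
            "tolerations": [
                {"key": t["key"], "operator": "Equal",
                 "value": t["value"], "effect": t["effect"]}
                for t in taints
            ],
            "containers": [{"name": name, "image": image,
                            "command": ["sleep", "3600"]}],
        },
    }


if __name__ == "__main__":
    # Hypothetical label and taint configured on a node pool.
    manifest = pod_for_node_pool(
        "train-demo", "busybox",
        labels={"pool": "gpu-training"},
        taints=[{"key": "dedicated", "value": "training", "effect": "NoSchedule"}],
    )
    # kubectl apply -f accepts JSON as well as YAML.
    print(json.dumps(manifest, indent=2))
```

A toleration only permits scheduling onto tainted nodes; it does not attract the pod there. That is why the sketch pairs it with nodeSelector, so the pod lands only on nodes from the labeled node pool.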

Upgrading a Lite Cluster Resource Pool Driver

If nodes in a Lite Cluster resource pool have GPU or Ascend resources and their performance does not meet your requirements, you can upgrade the driver. An upgrade can fix known issues, improve performance, or add support for new functions, which keeps the resource pool performant and compatible.

To upgrade the GPU or Ascend driver of the Lite Cluster resource pool, choose More > Upgrade Driver in the Operation column. For details, see Upgrading the Lite Cluster Resource Pool Driver.

Deleting a Node Pool

If a resource pool has multiple node pools, you can delete one of them. Click Delete in the Operation column, confirm the associated resources and jobs that will be affected, click Delete, enter DELETE, and click OK.

If the node pool contains yearly/monthly nodes that have not been unsubscribed from or released, click Go Now to go to the resource pool details page. For details, see Deleting, Unsubscribing from, or Releasing a Node.

Each resource pool must have at least one node pool. If there is only one node pool in a resource pool, it cannot be deleted.

Viewing the Storage Configuration of a Node Pool

On the Modify Node Pool Configuration page, you can view storage details such as the disk type, size, quantity, write mode, and container engine space size of the system, container, and data disks.

Additionally, on the Lite resource pool scaling page, you can view the storage configuration of its node pools.

Searching for a Node Pool

In the search box on the node pool management page, you can search for node pools by keyword, such as the node pool name, specifications, container engine space size, or AZ.

Specifying Node Pool Information to Be Displayed

On the node pool management page, click the icon in the upper right corner to customize the information to be displayed in the node pool list.

