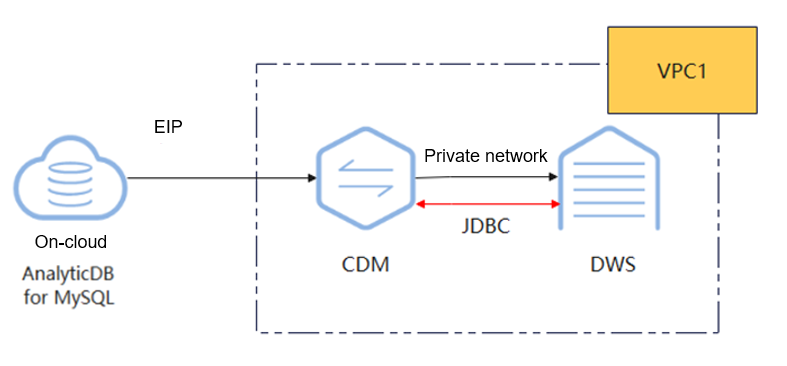

Using CDM to Migrate Data from AnalyticDB for MySQL to a DWS Cluster

This practice demonstrates how to use CDM to migrate data from AnalyticDB for MySQL (ADB) to DWS.

CDM is an efficient and easy-to-use service for batch data migration. For more information, see Cloud Data Migration.

This practice takes about 90 minutes and uses cloud services such as Virtual Private Cloud (VPC) and Subnet, Elastic IP (EIP), Cloud Data Migration (CDM), and DWS. The basic process is as follows:

Notes and Constraints

- If DELETE or UPDATE operations occur during CDM migration, data consistency cannot be guaranteed afterward. Re-migration will be required in such cases.

- A single database migration job can migrate only one database at a time. To migrate multiple databases, you need to configure multiple migration jobs.

- You need to create databases and schemas to be synchronized in the destination DWS.

- In ADB, the database level corresponds to the schema level in DWS.

- The ADB cluster and DWS cluster can communicate with CDM. In this example, DWS and CDM are created in the same region, private cloud, and subnet.

- You have the migration permission.

- The source and destination clients have been installed.

- The IP address of the CDM cluster has been whitelisted in the data security settings within the ADB source cluster.

- The migration tools listed in Table 1 have been prepared: DSC and DataCheck.

- The runtime environment of DataCheck meets the following requirements:

- The server is compatible with 64-bit operating systems and can run on either Linux or Windows.

- Either JDK or JRE 1.8 has been installed on the system.

- The server where DataCheck is installed and running can communicate with the database to be connected.

|

Tool |

Description |

How to Obtain |

|---|---|---|

|

DSC |

Syntax migration tool for DWS |

Obtain the download link. |

|

DataCheck |

Data check tool |

Contact technical support. |

Step 1: Migrating Metadata

- Export the source syntax. Exporting the source syntax, which represents the implementation logic of customer's services, from ADB and modifying it to be compatible with DWS can reduce the modeling workload and improve service migration efficiency.

To do so, select the target database in the ADB console, choose Export > Export Table Creation Statements, and save DDL_migration.sql.

Since the source syntax involves the identification of the service scope, operations require a DBA familiar with the service. It is recommended that the source syntax be provided by the customer's DBA.

- Use the DSC tool to convert the DDL syntax.

- Unzip the DSC tool package obtained in Prerequisites.

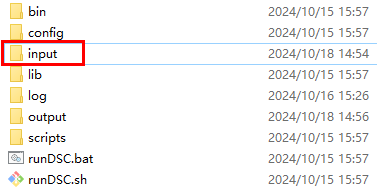

- Save the DDL syntax files to be converted into the input folder of DSC.

Figure 2 input directory

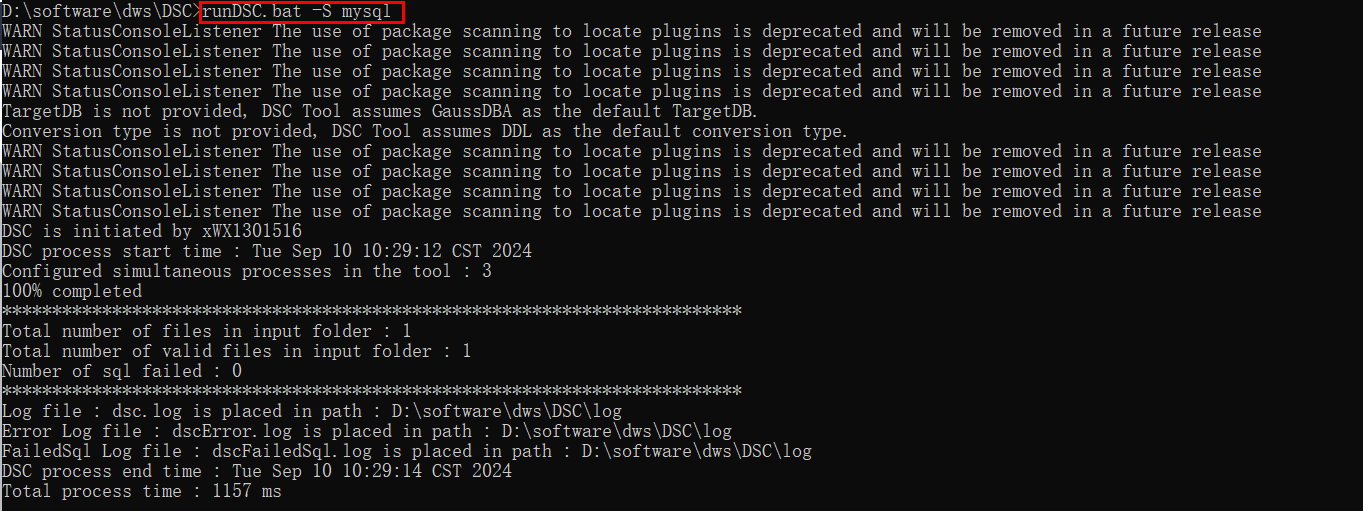

- Open the command line tool and double-click runDSC.bat in Windows. Run runDSC.sh in Linux.

- Convert the syntax:

1runDSC.bat -S mysql

Figure 3 DDL conversion

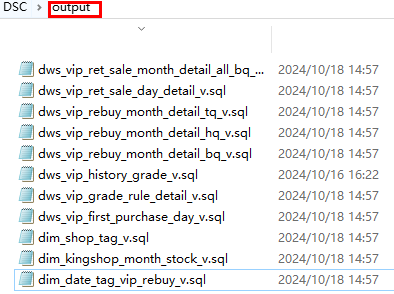

- View the conversion result in the output folder.

Figure 4 DDL conversion result

- Connect to DWS and run the SQL statement below to create a destination database, which in this practice is migration.

1CREATE DATABASE database_name WITH ENCODING 'UTF-8' DBCOMPATIBILITY 'mysql' TEMPLATE template0;

- The concept of a database in ADB is equivalent to the concept of a schema in DWS. A schema needs to be created in the destination DWS database.

First, switch to the newly created database, then execute the following SQL statement to create a schema.

1CREATE SCHEMA schema_name;

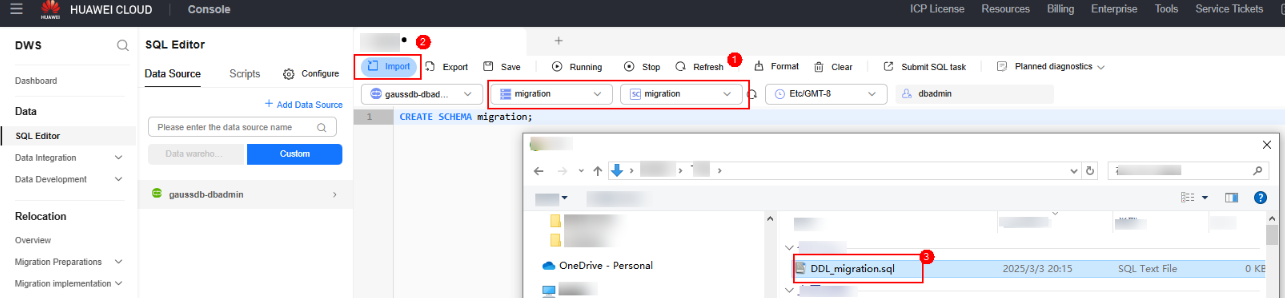

- In the SQL editor window of DWS, select the created database and schema, and click Import to import the table creation statement converted in 2.

Figure 5 Importing DDL statements

- After the import, click the run button to run the SQL statement to create a table.

- Check whether the table is created:

1SELECT table_name FROM information_schema.tables WHERE table_schema = 'migration';

Step 2: Migrating Table Data

- Configure the source link for CDM.

- Log in to the CDM console. In the navigation pane, click Cluster Management.

- If CDM is connected to ADB through the public network, bind an EIP. For details, see Binding or Unbinding an EIP.

- Click Job Management next to the cluster name.

- Before establishing a job link for the first time, install the driver. Choose Links > Driver Management and install the MySQL driver.

- After the driver installation, click Create Link on the link management page, select MySQL and then click Next.

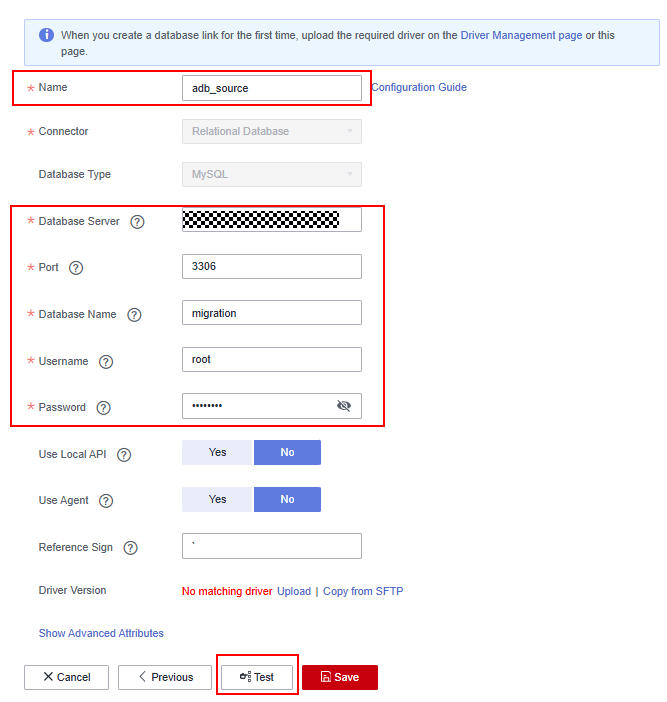

- Enter the ADB database information.

Figure 6 ADB information

- Click Test to check connectivity, and then click Save.

- Configure the destination link for CDM.

- Similarly, choose Job Management > Links and click Create Link.

- Select Data Warehouse Service and click Next.

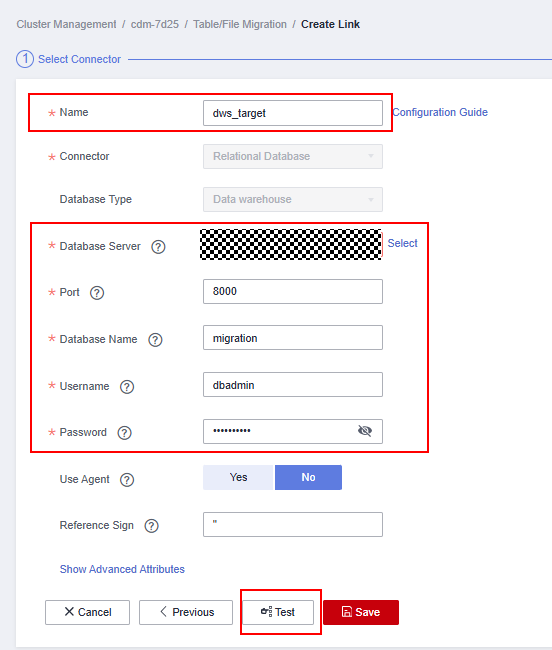

- Enter the DWS database information.

Figure 7 DWS information

- Click Test to check connectivity, and then click Save.

- Configure and start a table-level migration job.

- Click the Table/File Migration tab. This tab displays single-table migration jobs.

- Enter the source and destination information.

- Job Name: Enter a unique name.

- Source Job Configuration

- Source Link Name: Select the created MySQL source link.

- Use SQL Statement: Select No.

- Schema/Tablespace: Select the name of the schema or tablespace from which data is to be extracted.

- Table Name: Select the name of the table from which data is to be extracted.

- Retain default settings for other parameters.

- Destination Job Configuration

- Destination Link Name: Select the created DWS destination link.

- Schema/Tablespace: Select the DWS database to which data is to be written.

- Auto Table Creation: This parameter is displayed only when both the migration source and destination are relational databases.

- Table Name: Select the name of the table to which data is to be written. You can also enter a table name that does not exist. CDM automatically creates the table in DWS.

- Clear Data Before Import: Specify whether to clear data in the destination table before the migration task starts.

- Click Next to map fields.

- If the field mapping is incorrect, you can drag the fields to adjust the mapping.

- CDM expressions have built-in ability to convert fields of common strings, dates, and numbers. For details, see Configuring Field Converters.

- Confirm the information and click Next.

- On the task configuration page, configure the following parameters:

- Retry Upon Failure: If the job fails to be executed, you can determine whether to automatically retry. Retain the default value Never.

- Group: Select the group to which the job belongs. The default group is DEFAULT. On the Job Management page, jobs can be displayed, started, or exported by group.

- Schedule Execution: To configure scheduled jobs, enable this function. Retain the default value No.

- Concurrent Extractors: Indicates data extracted concurrently. The default value is 1, but you can increase it. However, it is recommended to keep it at or below 4.

- Write Dirty Data: Dirty data may be generated during data migration between tables. You are advised to select Yes.

- Confirm the information and click Save and Run.

After the migration job begins, you can view the status in the job list.

- Configure and start a database-level migration job.

- Click the Entire DB Migration tab and click Create Job.

- Enter the source information on the left and the destination information on the right.

- Job Name: Enter a unique name.

- Source Job Configuration

- Source Link Name: Select the created MySQL source link.

- Use SQL Statement: Select No.

- Schema/Tablespace: Select the name of the schema or tablespace from which data is to be extracted.

- Table Name: Select the name of the table from which data is to be extracted.

- Retain default settings for other parameters.

- Destination Job Configuration

- Destination Link Name: Select the created DWS destination link.

- Schema/Tablespace: Select the DWS database to which data is to be written.

- Auto Table Creation: This parameter is displayed only when both the migration source and destination are relational databases.

- Clear Data Before Import: Specify whether to clear data in the destination table before the migration task starts.

- Click Next.

- Select all tables or the tables to migrate, click the right arrow in the middle to move them to the right pane, and then click Next.

- Configure job parameters.

- Concurrent Subjobs: Indicates the number of tables to migrate simultaneously. The default value is 10; it is recommended to set it to a value less than 5.

- Concurrent Extractors: Indicates data extracted concurrently. The default value is 1, but you can increase it. However, it is recommended to keep it at or below 4.

Confirm the information and click Save and Run.

- Wait until the migration job is complete. Click the job name to view the migration status of each table.

- Connect to DWS and run the following SQL statements to check if the data has been migrated:

1 2 3 4

SELECT 'migration.users',count(1) FROM migration.users UNION ALL SELECT 'migration.products',count(1) FROM migration.products UNION ALL SELECT 'migration.orders',count(1) FROM migration.orders UNION ALL SELECT 'migration.employees',count(1) FROM migration.employees;

Step 3: Verifying Data Consistency

After the migration, check whether the data on the source and destination databases is consistent using DataCheck.

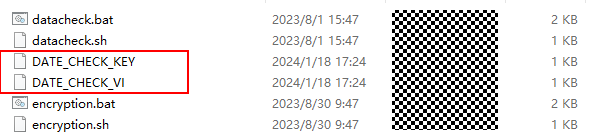

- Download and unzip DataCheck-*.zip, and then go to the DataCheck-* directory to use it. For details about how to use the files in the directory, see Table 2.

- Configure the tool package.

- In Windows:

Open the dbinfo.properties file in the conf folder and configure it based on your actual needs.

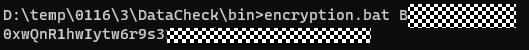

You can use the command below in the tool to generate the ciphertext of src.passwd and dws.passwd.

encryption.bat password

After the command is executed, an encrypted file is generated in the local bin directory.

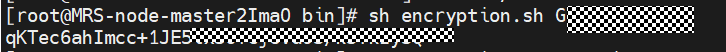

- In Linux:

The method of generating the ciphertext is similar to that for Windows. The command is sh encryption.sh Password. Other steps are the same.

- In Windows:

- Check data.

In Windows:

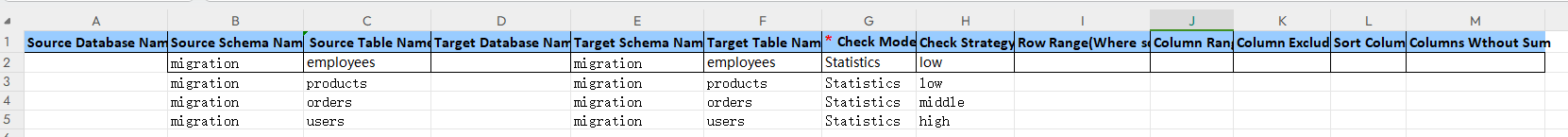

- Open the check.input file, enter the databases (if they are not entered, the content in the conf file is used), source table, and destination table to be checked, and fill in Row Range with a data query statement to specify the query scope.

- After configuring the source database name in the configuration file, the source database name in the check.input file defaults to this. However, if a different source database name is specified in the check.input file, it will take precedence.

- The Check Strategy offers three levels: high, medium, and low. If unspecified, the default is low.

The following figure shows the check_input file for metadata comparison.

Figure 8 check_input

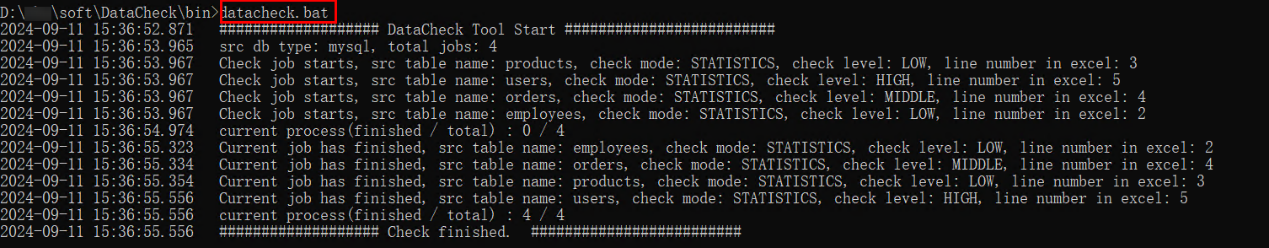

- Run the datacheck.bat command in the bin directory to execute the check tool.

- View the generated check result file check_input_result.xlsx.

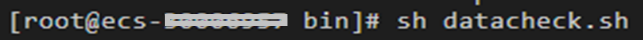

In Linux:

- Edit and upload the check_input.xlsx file. Refer to the step 1 for Windows.

- Run the sh datacheck.sh command to execute the check tool.

- View the check result in the check_input_result.xlsx file. (The check result analysis is the same as that for Windows.)

- Open the check.input file, enter the databases (if they are not entered, the content in the conf file is used), source table, and destination table to be checked, and fill in Row Range with a data query statement to specify the query scope.

Related Information

|

File or Folder |

Description |

|

|---|---|---|

|

DataCheck |

bin |

Saves the entry script of the check tool.

|

|

conf |

Configuration file, which is used to configure the connection between the source database and the destination database and set log printing. |

|

|

lib |

Stores JAR packages required for running the check tool. |

|

|

check_input.xlsx |

|

|

|

logs |

The package does not include this file. Once the check tool runs, it automatically generates this file to log the tool's execution process. |

|

|

check_input_result.xlsx |

The package does not include this file. Once the check tool runs, a check result file will be created in the same location as check_input.xlsx. |

|

|

DataCheck |

|---|

|

|

Check Level |

Description |

Syntax |

|---|---|---|

|

Low |

|

|

|

Middle |

|

|

|

High |

|

|

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot