Kuberay Add-on

Introduction

Kuberay is a native Kubernetes add-on designed to seamlessly manage and operate the Ray distributed computing framework within Kubernetes clusters, including CCE standard and Turbo clusters. Ray is a high-performance distributed computing library commonly used in machine learning, reinforcement learning, and data processing scenarios. The goal of Kuberay is to integrate Ray into Kubernetes for easy deployment, management, and scaling of RayClusters.

As a Kubernetes operator, Kuberay handles the lifecycle of RayClusters through CRDs. Its core functions include:

- RayCluster deployment: Kuberay handles the creation and management of both head and worker nodes in RayClusters automatically.

- Auto scaling: The number of worker node pods within a RayCluster adjusts dynamically based on workload demands.

- Resource management: Kuberay seamlessly integrates with Kubernetes' resource management system, including CPU, memory, and GPU resources.

- Fault recovery: Kuberay monitors the health of RayClusters and automatically recovers any faulty nodes.

- Logging and monitoring: Kuberay incorporates Kubernetes logging and monitoring tools to simplify debugging and optimization of Ray applications.

Open-source community: https://github.com/ray-project/kuberay

Notes and Constraints

- The CCE cluster (standard or Turbo) version must be v1.27 or later.

- This add-on is being rolled out gradually. To check the regions where it is available, see the console.
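The version constraint above can be checked in a script before installation. The following is a minimal sketch, assuming the cluster version is available as a string such as v1.27 or v1.29.3-r0 (the second format and the function names are illustrative assumptions, not part of the add-on):

```python
import re
from typing import Tuple

# Minimum cluster version stated in the constraints above.
MIN_VERSION = (1, 27)


def parse_cluster_version(text: str) -> Tuple[int, int]:
    """Extract (major, minor) from strings such as 'v1.27' or 'v1.29.3-r0'."""
    match = re.match(r"v?(\d+)\.(\d+)", text.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return int(match.group(1)), int(match.group(2))


def supports_kuberay(cluster_version: str) -> bool:
    """Return True if the cluster version is v1.27 or later."""
    return parse_cluster_version(cluster_version) >= MIN_VERSION


if __name__ == "__main__":
    for version in ("v1.25", "v1.27", "v1.33"):
        print(version, supports_kuberay(version))
```

Comparing (major, minor) tuples avoids the pitfall of comparing version strings lexically, where "v1.9" would sort after "v1.27".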

Billing

To access the Ray Dashboard, you need to bind an EIP to any node in the cluster. EIPs are billed. For details, see EIP Price Calculator.

Installing the Add-on

- Log in to the CCE console and click the cluster name to access the cluster console.

- In the navigation pane, choose Add-ons. Locate Kuberay on the right and click Install.

- On the Install Add-on page, configure the specifications. You can configure the CPU and memory quotas as required.

- CPU quotas are measured in cores. A value is an integer followed by the unit suffix m (millicores, where 1000m equals one core), such as 100m.

Table 1 CPU quotas

| Parameter | Example Value | Description |
|---|---|---|
| Request | 100m | The minimum number of CPUs required by a container |
| Limit | 500m | The maximum number of CPUs available for a container. If the CPU usage goes beyond the set limit, the instance might restart, impacting the add-on's regular operation. |

- Memory quotas are measured in bytes, with the value being an integer followed by the unit suffix (Mi), such as 100Mi. To manage 500 Ray pods, you need approximately 500 MiB of memory. You can modify the settings based on memory usage.

Table 2 Memory quotas

| Parameter | Example Value | Description |
|---|---|---|
| Request | 100Mi | The minimum amount of memory required by a container |
| Limit | 500Mi | The maximum amount of memory available for a container. If the memory usage goes beyond the set limit, the instance might restart, impacting the add-on's regular operation. |

- Configure the parameters. For details, see Table 3.

Table 3 Parameters

| Parameter | Example Value | Description |
|---|---|---|
| Add-on Namespace | default | Namespace where the Kuberay add-on is installed. The parameter defaults to default, and the value can be customized. |
| Batch Scheduler | Do not use | Whether to use an external scheduler to schedule multiple RayJobs simultaneously. The parameter defaults to Do not use. Do not use: no external scheduler is used to schedule multiple RayJobs simultaneously. Volcano: Volcano is used to schedule multiple RayJobs simultaneously. If you select Volcano but it is not installed in the cluster, you are advised to install the Volcano Scheduler add-on. For details, see Volcano Scheduler. |
| Service Port | 8080 | Port used by Kuberay to provide services for external systems. The parameter defaults to 8080. |

- Click Install. If the add-on is in the Running state, it means that the add-on has been installed.
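The CPU and memory quotas configured during installation use Kubernetes quantity notation (m for millicores, Mi for mebibytes). The following is a minimal sketch for converting such values to plain numbers and checking that a request does not exceed its limit. The function names are illustrative assumptions, and only the suffixes that appear in this document, plus Ki and Gi, are handled:

```python
from decimal import Decimal

# Binary suffixes for memory quantities (powers of 1024).
_BINARY_SUFFIXES = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3}


def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '100m', '1', or '0.5' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    # A value without a suffix is expressed in whole or fractional cores.
    return int(Decimal(quantity) * 1000)


def memory_to_bytes(quantity: str) -> int:
    """Convert a memory quantity such as '100Mi' to bytes."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * factor)
    # A value without a suffix is already in bytes.
    return int(quantity)


def request_within_limit(request: str, limit: str, convert) -> bool:
    """Return True if the request does not exceed the limit."""
    return convert(request) <= convert(limit)


if __name__ == "__main__":
    print(cpu_to_millicores("100m"), cpu_to_millicores("500m"))
    print(memory_to_bytes("100Mi"), memory_to_bytes("500Mi"))
    print(request_within_limit("100Mi", "500Mi", memory_to_bytes))
```

With the example values from Table 1 and Table 2, the requests (100m, 100Mi) are below the limits (500m, 500Mi), so both checks pass.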

Components

| Component | Description | Resource Type |
|---|---|---|
| kuberay-operator | Used to deploy Ray and manage its lifecycle. | Deployment |

How to Use the Add-on

The following provides different ways to submit a Ray job using the Kuberay add-on. For details about the workflow and application scenarios, see Table 5. For details about how to use Kuberay, see the official documentation.

| How to Submit a Job | Description |
|---|---|
| Using a RayCluster custom resource | To get started, create a RayCluster custom resource, log in to the head node pod, and submit a job. The head node will then automatically distribute the job to a worker node for execution. |
| Using a RayJob custom resource | You can mount a Python script directly to the head node of a RayCluster using the RayJob. The head node will then distribute the job to a worker node for execution. In this example, the YAML file for creating a RayJob custom resource is provided, which can be customized as needed. |

- Install kubectl on an existing ECS and access a cluster using kubectl. For details, see Accessing a Cluster Using kubectl.

- Create a YAML file for configuring a RayJob custom resource. In this example, the file name is my-rayjob.yaml. You can change it as needed.

vim my-rayjob.yaml

If you encounter network issues when using an official Ray image, it may fail to be pulled. To avoid this, it is recommended that you push the image to an SWR image repository in advance and update the image address in the file with the SWR image address. For details about how to push an image to SWR, see Migrating Images to SWR Using Docker Commands.

The file content is as follows:

```yaml
apiVersion: ray.io/v1
kind: RayJob       # The resource type is RayJob.
metadata:
  name: my-rayjob
spec:              # Define the specifications of the RayJob, including the job entry point, runtime environment, and RayCluster configuration.
  entrypoint: python /home/ray/samples/sample_code.py   # Specify the entry point command of the job. In this example, a Python script will be run.
  runtimeEnvYAML: |
    env_vars:      # Define the environment variables.
      counter_name: "test_counter"
  rayClusterSpec:        # Define the configuration of the RayCluster.
    headGroupSpec:       # Define the configuration of the head node in the RayCluster.
      rayStartParams: {}
      template:
        spec:
          containers:
            - name: ray-head
              image: rayproject/ray:2.41.0
              ports:
                - containerPort: 6379
                  name: gcs-server
                - containerPort: 8265   # Ray dashboard
                  name: dashboard
                - containerPort: 10001
                  name: client
              resources:
                limits:
                  cpu: "1"
                requests:
                  cpu: "200m"
              volumeMounts:
                - mountPath: /home/ray/samples
                  name: code-sample
          volumes:
            - name: code-sample
              configMap:
                name: ray-job-code-sample
                items:
                  - key: sample_code.py
                    path: sample_code.py
    workerGroupSpecs:    # Define the configuration of the worker nodes in the RayCluster.
      - replicas: 1
        minReplicas: 1
        maxReplicas: 5
        groupName: small-group
        rayStartParams: {}
        template:
          spec:
            containers:
              - name: ray-worker
                image: rayproject/ray:2.41.0
                resources:
                  limits:
                    cpu: "1"
                  requests:
                    cpu: "200m"
---
# Define a specific job. The Python script sample_code.py is included, which creates a basic distributed counter.
apiVersion: v1
kind: ConfigMap
metadata:
  name: ray-job-code-sample
data:
  sample_code.py: |
    import ray
    import os

    ray.init()

    @ray.remote
    class Counter:
        def __init__(self):
            # Used to verify runtimeEnv
            self.name = os.getenv("counter_name")
            assert self.name == "test_counter"
            self.counter = 0

        def inc(self):
            self.counter += 1

        def get_counter(self):
            return "{} got {}".format(self.name, self.counter)

    counter = Counter.remote()

    for _ in range(1000):
        ray.get(counter.inc.remote())
        print(ray.get(counter.get_counter.remote()))
```

- Create the RayJob and ConfigMap (used to store job code).

kubectl create -f my-rayjob.yaml

If information similar to the following is displayed, the resources have been created:

```
rayjob.ray.io/my-rayjob created
configmap/ray-job-code-sample created
```

- Check the deployment of the RayJob by performing the following operations in sequence:

- Check whether the RayCluster has been created.

kubectl get pod

If information similar to the following is displayed, the resources have been created. In the output, my-rayjob-raycluster-4464z-head-hb5qx is the head node pod, my-rayjob-raycluster-4464z-small-group-worker-csqb2 is the worker node pod, and my-rayjob-x2tv6 is the job pod, whose status reflects the job execution status. If STATUS of my-rayjob-x2tv6 is Completed, the job is complete.

```
NAME                                                  READY   STATUS    RESTARTS   AGE
my-rayjob-raycluster-4464z-head-hb5qx                 1/1     Running   0          24s
my-rayjob-raycluster-4464z-small-group-worker-csqb2   1/1     Running   0          24s
my-rayjob-x2tv6                                       1/1     Running   0          4s
```

- Check the status of the RayJob.

kubectl get rayjob

If information similar to the following is displayed, the job is still in progress: (If JOB STATUS is SUCCEEDED, the job is complete.)

```
NAME        JOB STATUS   DEPLOYMENT STATUS   RAY CLUSTER NAME             START TIME             END TIME   AGE
my-rayjob   RUNNING      Running             my-rayjob-raycluster-4464z   2025-02-10T07:16:26Z              28
```

- View the job execution status.

kubectl logs my-rayjob-x2tv6

Information similar to the following is displayed:

```
2025-02-21 03:32:23,631 INFO cli.py:36 -- Job submission server address: http://my-rayjob-raycluster-4464z-head-svc.default.svc.cluster.local:8265
2025-02-21 03:32:24,142 SUCC cli.py:60 -- --------------------------------------------
2025-02-21 03:32:24,143 SUCC cli.py:61 -- Job 'my-rayjob-x2tv6' submitted successfully
2025-02-21 03:32:24,143 SUCC cli.py:62 -- --------------------------------------------
2025-02-21 03:32:24,143 INFO cli.py:286 -- Next steps
2025-02-21 03:32:24,143 INFO cli.py:287 -- Query the logs of the job:
2025-02-21 03:32:24,143 INFO cli.py:289 -- ray job logs my-rayjob-x2tv6
2025-02-21 03:32:24,143 INFO cli.py:291 -- Query the status of the job:
2025-02-21 03:32:24,143 INFO cli.py:293 -- ray job status my-rayjob-x2tv6
2025-02-21 03:32:24,143 INFO cli.py:295 -- Request the job to be stopped:
2025-02-21 03:32:24,143 INFO cli.py:297 -- ray job stop my-rayjob-x2tv6
2025-02-21 03:32:24,147 INFO cli.py:304 -- Tailing logs until the job exits (disable with --no-wait):
2025-02-21 03:32:25,011 INFO worker.py:1429 -- Using address 172.20.0.10:6379 set in the environment variable RAY_ADDRESS
2025-02-21 03:32:25,012 INFO worker.py:1564 -- Connecting to existing Ray cluster at address: 172.20.0.10:6379...
2025-02-21 03:32:25,017 INFO worker.py:1740 -- Connected to Ray cluster. View the dashboard at 172.20.0.10:8265
test_counter got 1
test_counter got 2
test_counter got 3
test_counter got 4
...
```
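The status checks above can also be scripted. The following is a minimal sketch, assuming that the JSON returned by kubectl get rayjob -o json exposes the fields status.jobStatus, status.jobDeploymentStatus, and status.rayClusterName (these names are assumed to correspond to the JOB STATUS, DEPLOYMENT STATUS, and RAY CLUSTER NAME columns and should be verified against your KubeRay version). The set of terminal states and the function names are also assumptions made for illustration:

```python
import json
import subprocess
from typing import Any, Dict

# Job states treated as final in this sketch (assumption).
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED"}


def summarize_rayjob(rayjob: Dict[str, Any]) -> Dict[str, Any]:
    """Reduce a RayJob object (parsed JSON) to the fields checked in this section."""
    status = rayjob.get("status", {})
    job_status = status.get("jobStatus", "")
    return {
        "name": rayjob.get("metadata", {}).get("name", ""),
        "job_status": job_status,
        "deployment_status": status.get("jobDeploymentStatus", ""),
        "cluster": status.get("rayClusterName", ""),
        "finished": job_status in TERMINAL_STATES,
    }


def fetch_rayjob(name: str, namespace: str = "default") -> Dict[str, Any]:
    """Read a RayJob through kubectl; requires kubectl access to the cluster."""
    result = subprocess.run(
        ["kubectl", "get", "rayjob", name, "-n", namespace, "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return json.loads(result.stdout)


if __name__ == "__main__":
    # Offline example that mirrors the output shown above.
    sample = {
        "metadata": {"name": "my-rayjob"},
        "status": {
            "jobStatus": "RUNNING",
            "jobDeploymentStatus": "Running",
            "rayClusterName": "my-rayjob-raycluster-4464z",
        },
    }
    print(summarize_rayjob(sample))
```

On a machine with kubectl configured, summarize_rayjob(fetch_rayjob("my-rayjob")) could be called in a loop until finished is True.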

- Monitor and manage the RayCluster status, resource usage, and job execution in real time on the Ray Dashboard.

- Obtain the label of the head node pod in the RayCluster so that the pod can be identified and associated with the Service created in 5.b. You can change my-rayjob-raycluster-4464z-head-hb5qx as needed.

kubectl describe pod my-rayjob-raycluster-4464z-head-hb5qx

The following information is displayed. You are advised to use ray.io/identifier=my-rayjob-raycluster-4464z-head as the label.

```
...
Labels:       app.kubernetes.io/created-by=kuberay-operator
              app.kubernetes.io/name=kuberay
              ray.io/cluster=my-rayjob-raycluster-4464z
              ray.io/group=headgroup
              ray.io/identifier=my-rayjob-raycluster-4464z-head
              ray.io/is-ray-node=yes
              ray.io/node-type=head
...
```

- Create a YAML file for configuring a NodePort Service. In this example, the file name is ray-dashboard.yaml. You can change it as needed. This Service is used to expose services to external systems so that you can directly access the Ray Dashboard from a browser.

vim ray-dashboard.yaml

The file content is as follows:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ray-dashboard
  labels:
    ray.io/identifier: my-rayjob-raycluster-4464z-head
  namespace: default
spec:
  ports:
    - name: cce-service-0
      port: 8265         # Port for accessing the Service, which is set to 8265
      protocol: TCP      # Protocol used for accessing the Service. The value can be TCP or UDP.
      targetPort: 8265   # Port used by the Service to access the target container. This port is closely related to the application running in a container and must be 8265.
  selector:              # Label selector
    ray.io/identifier: my-rayjob-raycluster-4464z-head
  externalTrafficPolicy: Cluster
  type: NodePort         # Service type. NodePort indicates that services are accessed through a node port.
```

- Create the Service.

kubectl create -f ray-dashboard.yaml

If information similar to the following is displayed, the Service has been created:

service/ray-dashboard created

- Obtain the node port of the Service.

kubectl get services

Information similar to the following is displayed. In this example, 32638 is the node port.

```
NAME            TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
ray-dashboard   NodePort   10.247.147.102   <none>        8265:32638/TCP   8s
```

- Enter http://<EIP of a cluster node>:32638 in the address bar of a browser to access the Ray Dashboard. Ensure that an EIP has been bound to a node in the cluster. To bind an EIP to a node, log in to the CCE console, click the cluster name to access the cluster console, choose Nodes in the navigation pane, click the Nodes tab in the right pane, and click the node name to go to the ECS console. For details, see Binding an EIP.

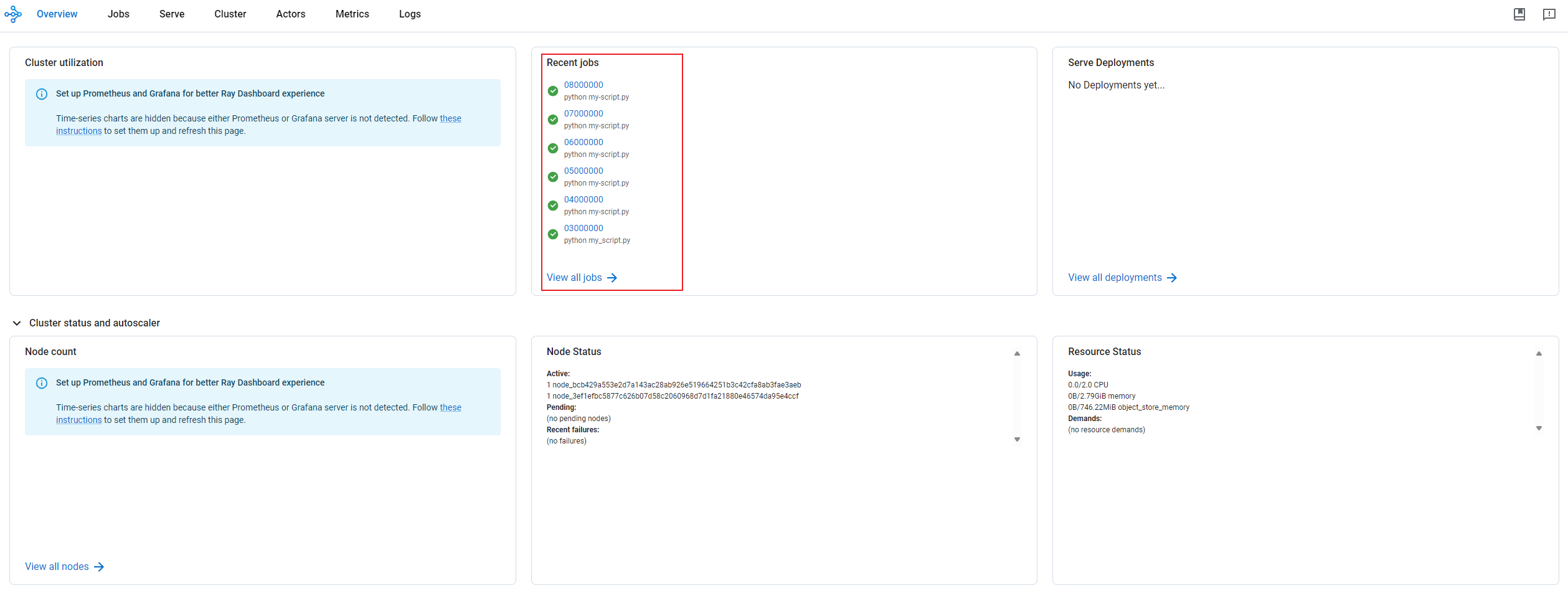

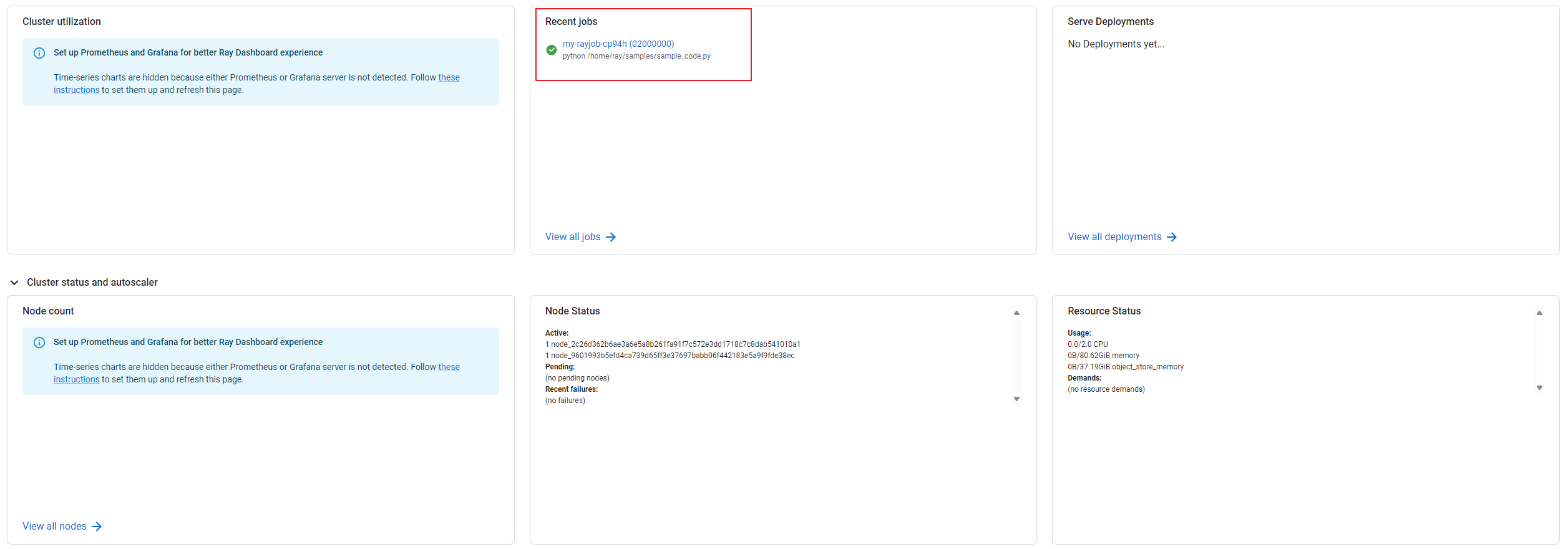

If the page displayed is similar to Figure 2, the access is successful. You can monitor and manage the status of the RayCluster, track resource usage, and oversee job execution in real time using the Ray Dashboard. For example, you can check the status of job execution in the Recent jobs area.
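The NodePort Service reaches the Ray Dashboard only if its label selector matches the labels of the head node pod. The following is a minimal sketch of that equality-based matching rule, using the labels shown in the kubectl describe output in this section. The function names are illustrative, and the worker pod labels in the example are hypothetical values used only for contrast:

```python
from typing import Dict, Iterable


def parse_labels(pairs: Iterable[str]) -> Dict[str, str]:
    """Turn 'key=value' strings, as printed by kubectl describe, into a dict."""
    labels = {}
    for pair in pairs:
        key, _, value = pair.strip().partition("=")
        if key:
            labels[key] = value
    return labels


def selector_matches(selector: Dict[str, str], pod_labels: Dict[str, str]) -> bool:
    """Return True if every selector key-value pair is present on the pod.

    An empty selector is treated as matching nothing, because a Service
    without a selector gets no automatically managed endpoints.
    """
    if not selector:
        return False
    return all(pod_labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    head_labels = parse_labels([
        "app.kubernetes.io/created-by=kuberay-operator",
        "app.kubernetes.io/name=kuberay",
        "ray.io/cluster=my-rayjob-raycluster-4464z",
        "ray.io/group=headgroup",
        "ray.io/identifier=my-rayjob-raycluster-4464z-head",
        "ray.io/is-ray-node=yes",
        "ray.io/node-type=head",
    ])
    # Hypothetical worker labels, for contrast only.
    worker_labels = parse_labels([
        "ray.io/cluster=my-rayjob-raycluster-4464z",
        "ray.io/node-type=worker",
    ])
    selector = {"ray.io/identifier": "my-rayjob-raycluster-4464z-head"}
    print(selector_matches(selector, head_labels))
    print(selector_matches(selector, worker_labels))
```

If the Dashboard cannot be reached, comparing the selector in ray-dashboard.yaml with the actual pod labels in this way is a quick first check, since the RayCluster name (and therefore the label value) changes each time the RayJob is recreated.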

- Delete related resources by taking the following steps:

- Delete resources related to the RayJob.

kubectl delete -f my-rayjob.yaml

Information similar to the following is displayed:

```
rayjob.ray.io "my-rayjob" deleted
configmap "ray-job-code-sample" deleted
```

- Delete the Service resources.

kubectl delete -f ray-dashboard.yaml

Information similar to the following is displayed:

service "ray-dashboard" deleted

Common Issues

After the Kuberay add-on is removed from a CCE cluster, the RayCluster, RayJob, and RayService CRDs remain in the cluster. You can delete these remaining resources by following these steps:

- On the ECS that has been connected to the cluster, search for the CRDs related to Ray.

kubectl get crd | grep ray

If the following information is displayed, there are three related CRDs:

```
rayclusters.ray.io    2025-02-01T12:00:00Z
rayjobs.ray.io        2025-02-01T12:00:00Z
rayservices.ray.io    2025-02-01T12:00:00Z
```

- Delete the related resources in sequence. You can replace rayclusters.ray.io, rayjobs.ray.io, and rayservices.ray.io in the command as needed.

```
kubectl delete crd rayclusters.ray.io
kubectl delete crd rayjobs.ray.io
kubectl delete crd rayservices.ray.io
```

If information similar to the following is displayed, the resources have been deleted.

```
customresourcedefinition.apiextensions.k8s.io "rayclusters.ray.io" deleted
customresourcedefinition.apiextensions.k8s.io "rayjobs.ray.io" deleted
customresourcedefinition.apiextensions.k8s.io "rayservices.ray.io" deleted
```
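Because grep ray matches any CRD whose name contains the string ray, it can be safer to select only names in the ray.io API group before deleting anything. The following is a minimal sketch that filters the text output of kubectl get crd and builds the matching delete commands. The function names and the unrelated CRD in the example are hypothetical:

```python
from typing import List


def ray_crds(kubectl_output: str, group: str = "ray.io") -> List[str]:
    """Return CRD names from 'kubectl get crd' output that belong to the given API group."""
    names = []
    for line in kubectl_output.splitlines():
        fields = line.split()
        # The first column holds the CRD name, for example rayjobs.ray.io.
        if fields and fields[0].endswith("." + group):
            names.append(fields[0])
    return names


def delete_commands(names: List[str]) -> List[str]:
    """Build one 'kubectl delete crd' command per CRD name."""
    return [f"kubectl delete crd {name}" for name in names]


if __name__ == "__main__":
    sample_output = """\
NAME                        CREATED AT
arrays.example.com          2025-01-15T08:00:00Z
rayclusters.ray.io          2025-02-01T12:00:00Z
rayjobs.ray.io              2025-02-01T12:00:00Z
rayservices.ray.io          2025-02-01T12:00:00Z
"""
    for command in delete_commands(ray_crds(sample_output)):
        print(command)
```

In this example, arrays.example.com contains the string ray but is skipped because it is not in the ray.io group. The sketch only prints the commands, so they can be reviewed before being run.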

Release History

| Add-on Version | Supported Cluster Version | New Feature | Community Version |
|---|---|---|---|
| 1.2.4 | | Clusters of v1.33 are supported. | |
| 1.2.3 | | Clusters of v1.32 are supported. | |
| 1.2.2 | | The Kuberay add-on is now available. | |
