Deploying the Algorithm Package Component

Algorithm package components need computing resources such as CPUs and NPUs to run. If an application uses an algorithm package component, you must deploy the component first. Once deployed, the algorithm package runs in an independent, isolated environment and can be accessed by other components.

- Log in to ModelArts Studio and access the required workspace.

- In the navigation pane, choose Application Development > Application Components > Component Management. Click the Customize tab, and then click the Algorithm package tab.

- Locate an algorithm package that has taken effect and choose More > Deploy in the Operation column. Alternatively, go to the details page of the algorithm package component and click Deploy in the upper right corner. If the component has not taken effect yet, first enable the Is Effective switch in the Operation column of its row. Then configure the deployment parameters described in Table 1.

Table 1 Deployment parameters

| Parameter | Description |
| --- | --- |
| Deployment Mode | Deployment mode of the service. Edge deployment is supported. |
| Service Name | Name of the service to be deployed. |
| Arch Type | Architecture type of the target resource pool. Select the type that matches the architecture of the algorithm. |
| Resource Pool | Edge resource pool in which the service is deployed. If Ascend cards are required, the supported inference card type is Snt3p. |
| Load Balancer | Load balancer used for edge deployment and for calling the deployed service. Both HTTP and HTTPS load balancing are supported. |
| CPU | Minimum number of physical CPU cores required for deployment. The default value is 1 core. Increase this value for more complex models, or if the service must process a large number of concurrent requests. The value cannot exceed the number of available CPUs in the resource pool. |
| Memory | Minimum memory required for deployment. The default value is 1024 MB. Larger models and larger volumes of data require more memory, so adjust the value based on the actual model and the amount of data to be processed. |
| Ascend | Number of NPUs used for deployment. Ascend devices are typically used to accelerate deep learning models. You can configure Ascend devices only if the model can run on Ascend and the resource pool contains Ascend resources. The number of allocated Ascend devices cannot exceed the number available in the resource pool. Currently, the supported inference card type is Snt3p, and the computing power of a single card is 70 TFLOPS@fp16. Different inference models consume different amounts of computing resources when loaded. Calculate the required number of cards as follows: Number of cards ≈ Total required computing power/Single-card computing power. Round the result up to obtain the parameter value. If the computing power of the resource pool has been allocated, this value is the number of logical cards; otherwise, it is the number of physical cards. For example, if a physical card is divided into seven parts, each logical card provides 10 TFLOPS@fp16. |
| Environment Variable | External environment variables defined in the component code. |
| Tags | Custom tags of the service to be deployed, in key-value format. |
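The card-count rule in the Ascend row can be sketched in Python as follows. This is a minimal illustration, not a ModelArts Studio API: the function names are hypothetical, and the 150 TFLOPS@fp16 requirement in the usage lines is an assumed figure. Only the 70 TFLOPS@fp16 per-card value and the seven-way split come from the table.

```python
import math

# Per-card computing power of the Snt3p inference card (TFLOPS@fp16), from Table 1.
SNT3P_TFLOPS_FP16 = 70.0


def required_cards(total_tflops: float, card_tflops: float = SNT3P_TFLOPS_FP16) -> int:
    """Number of cards ~= total required computing power / single-card power, rounded up."""
    if total_tflops <= 0 or card_tflops <= 0:
        raise ValueError("computing power values must be positive")
    return math.ceil(total_tflops / card_tflops)


def logical_card_tflops(parts: int, card_tflops: float = SNT3P_TFLOPS_FP16) -> float:
    """Computing power of one logical card when a physical card is split into `parts`."""
    if parts < 1:
        raise ValueError("parts must be at least 1")
    return card_tflops / parts


if __name__ == "__main__":
    # Hypothetical model needing 150 TFLOPS@fp16 on unallocated (physical) cards.
    print(required_cards(150))            # 150 / 70 = 2.14..., rounded up -> 3
    # A physical card split into seven logical cards (example from Table 1).
    per_logical = logical_card_tflops(7)  # 70 / 7 = 10.0
    print(per_logical)
    # The same model on logical cards: 150 / 10 = 15 logical cards.
    print(required_cards(150, per_logical))
```

Rounding up rather than to the nearest integer ensures the allocated cards never provide less computing power than the model needs.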

If the video decoding and video frame extraction algorithms are used, you are advised to set the number of CPUs to 4 and the memory to 8192 MB. If the algorithms need to run on Ascend devices, set the number of CPUs to 1. The appropriate CPU, memory, and Ascend specifications depend on the algorithm in use and the amount of data to be processed, so set them based on the resource requirements of the algorithm.
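Before deploying, it can help to check the requested CPU, memory, and Ascend values against what the resource pool still has free. The sketch below assumes you already know the pool's free capacity (how to obtain it is not covered on this page); `DeploySpec`, `PoolCapacity`, and `check_spec` are hypothetical names. The defaults mirror the documented 1 core and 1024 MB. The memory check is an assumption, since Table 1 states the limit explicitly only for CPUs and Ascend devices.

```python
from dataclasses import dataclass


@dataclass
class DeploySpec:
    """Hypothetical container for the CPU, Memory, and Ascend parameters in Table 1."""
    cpu_cores: int = 1      # documented default: 1 core
    memory_mb: int = 1024   # documented default: 1024 MB
    ascend_cards: int = 0   # assumption: 0 means no NPUs are requested


@dataclass
class PoolCapacity:
    """Hypothetical snapshot of the free resources in the target edge resource pool."""
    cpu_cores: int
    memory_mb: int
    ascend_cards: int


def check_spec(spec: DeploySpec, pool: PoolCapacity) -> list[str]:
    """Return a list of problems; an empty list means the request fits the pool."""
    problems = []
    if spec.cpu_cores < 1:
        problems.append("CPU must be at least 1 core")
    if spec.cpu_cores > pool.cpu_cores:
        problems.append(f"CPU {spec.cpu_cores} exceeds available {pool.cpu_cores}")
    # Assumption: memory is also bounded by what the pool has free.
    if spec.memory_mb > pool.memory_mb:
        problems.append(f"memory {spec.memory_mb} MB exceeds available {pool.memory_mb} MB")
    if spec.ascend_cards > pool.ascend_cards:
        problems.append(f"Ascend {spec.ascend_cards} exceeds available {pool.ascend_cards}")
    return problems


if __name__ == "__main__":
    # Hypothetical pool with 8 free cores, 16384 MB of free memory, and 1 free Ascend card.
    pool = PoolCapacity(cpu_cores=8, memory_mb=16384, ascend_cards=1)
    print(check_spec(DeploySpec(), pool))  # [] (the defaults fit)
    print(check_spec(DeploySpec(cpu_cores=12, ascend_cards=2), pool))
    # ['CPU 12 exceeds available 8', 'Ascend 2 exceeds available 1']
```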

- After the deployment is complete, click the algorithm package name. On the details page that is displayed, view the service list in the service management area at the bottom.

- After the service is deployed, you can call it using the access address shown in the service list. The request body must follow the structure defined when the algorithm package component was created.

The access URL of an edge deployment service has the following format: <Load balancing protocol>://<Load balancing IP address (usually the IP address of the active node in the resource pool)>:<Load balancing port>/<Service access address>

For example, if an HTTPS load balancer is used, its IP address is 127.0.0.1, its port number is 8080, and the service access address is 05806e52-6423-43bc-b1be-82d4fa1a158a, the final access address is https://127.0.0.1:8080/05806e52-6423-43bc-b1be-82d4fa1a158a.
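The URL format above can be assembled with a small helper. This is a hypothetical function, not part of any ModelArts Studio SDK; it assumes an IPv4 address (IPv6 literals would need square brackets) and a protocol of HTTP or HTTPS, matching what the load balancer supports.

```python
def edge_service_url(protocol: str, lb_ip: str, lb_port: int, access_address: str) -> str:
    """Build <protocol>://<LB IP>:<LB port>/<service access address>."""
    protocol = protocol.lower()
    if protocol not in ("http", "https"):
        raise ValueError("load balancer protocol must be HTTP or HTTPS")
    if not 0 < lb_port < 65536:
        raise ValueError("port out of range")
    # Strip any leading slash so the result never contains "//" before the address.
    return f"{protocol}://{lb_ip}:{lb_port}/{access_address.lstrip('/')}"


if __name__ == "__main__":
    # Values from the example above.
    print(edge_service_url("HTTPS", "127.0.0.1", 8080, "05806e52-6423-43bc-b1be-82d4fa1a158a"))
    # https://127.0.0.1:8080/05806e52-6423-43bc-b1be-82d4fa1a158a
```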

When calling the access address, set the following request header:

Content-Type: application/json

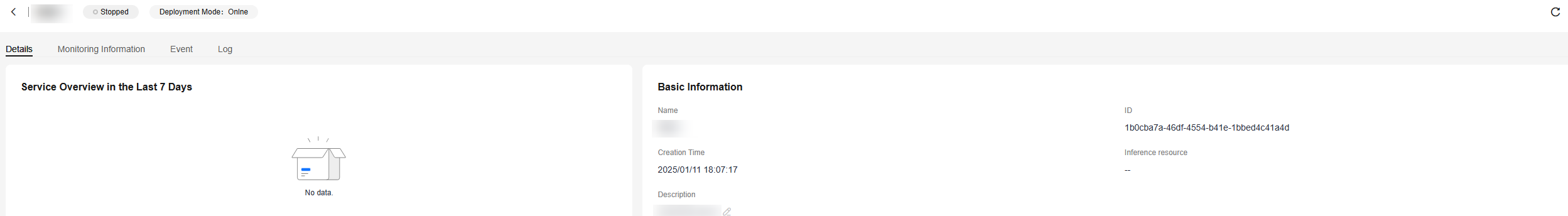
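A call to the deployed service can be sketched with the Python standard library. The sketch assumes the service accepts POST requests and uses a placeholder body `{"data": "..."}`; the real method and body fields depend on the structure defined when the algorithm package component was created. Only the `Content-Type: application/json` header comes from this page. The `verify_tls` switch is an assumption for edge nodes that present self-signed certificates and should stay enabled in production.

```python
import json
import ssl
import urllib.request


def build_request(url: str, body: dict) -> urllib.request.Request:
    """Build a POST request (assumed method) with the required JSON Content-Type header."""
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_service(url: str, body: dict, verify_tls: bool = True, timeout: float = 30.0) -> dict:
    """Send the request and decode a JSON response (assumes the service replies with JSON)."""
    context = None
    if url.lower().startswith("https://"):
        context = ssl.create_default_context()
        if not verify_tls:
            # Testing only: accept a self-signed certificate on the edge node.
            context.check_hostname = False
            context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(build_request(url, body), timeout=timeout, context=context) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Hypothetical usage (requires a reachable deployed service):
# result = call_service(
#     "https://127.0.0.1:8080/05806e52-6423-43bc-b1be-82d4fa1a158a",
#     {"data": "..."},
#     verify_tls=False,
# )
```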

- Click a service name to go to the service details page.

Figure 1 Service details

- If a service fails to be deployed or is no longer required, delete the service from the service list.
