Setting Cloud Structuring Parsing

LTS provides five log structuring modes: regular expressions, JSON, delimiters, Nginx, and structuring templates. Choose the mode that matches the format of your logs.

- Regular Expressions: This mode applies to scenarios where each line in the log text is a raw log event and each log event can be extracted into multiple key-value pairs based on regular expressions. To use this mode to extract fields, you need to enter a log sample and customize a regular expression. Then, LTS extracts the corresponding key-value pairs based on the capture group in the regular expression.

- JSON: This mode applies to scenarios where each line in the log text is a raw log event and each log event can be extracted into multiple key-value pairs based on the JSON parsing rule.

- Delimiter: This mode applies to scenarios where each line in the log text is a raw log event and each log event can be extracted into multiple key-value pairs based on specified delimiters (such as colons, spaces, or other characters).

- Nginx: This mode applies to scenarios where each line in the log text is a raw log event, each log event complies with the Nginx format, and the access log format can be defined by the log_format command.

- Structuring Template: This mode applies to scenarios where the log structure is complex or key-value extraction needs to be customized. You can use a built-in system template or a custom template to extract fields.

After log data is structured, you can use SQL statements to query and analyze it in the same way as you query and analyze data in two-dimensional database tables.

Constraints

- If indexing has not been configured, delimiters for structured fields are empty by default. The maximum size of a log event that can be structured is 20 KB, with any excess data truncated.

- If indexing has been configured, the default delimiters for structured fields are those listed in Configuring Log Content Delimiters. The maximum size of a log event that can be structured is 500 KB.

Precautions

- Log structuring is performed on a per-log-stream basis.

- Log structuring is recommended when most logs in a log stream share a similar pattern.

- After the structuring configuration is modified, the modification takes effect only for newly written log data, not for historical log data.

Cloud Structuring Parsing

- Log in to the LTS console. The Log Management page is displayed by default.

- Click the target log group or log stream to access the log stream details page.

- On the Log Search tab page, click Log Settings in the upper right corner. On the displayed page, click the Cloud Structuring Parsing tab to configure log structuring.

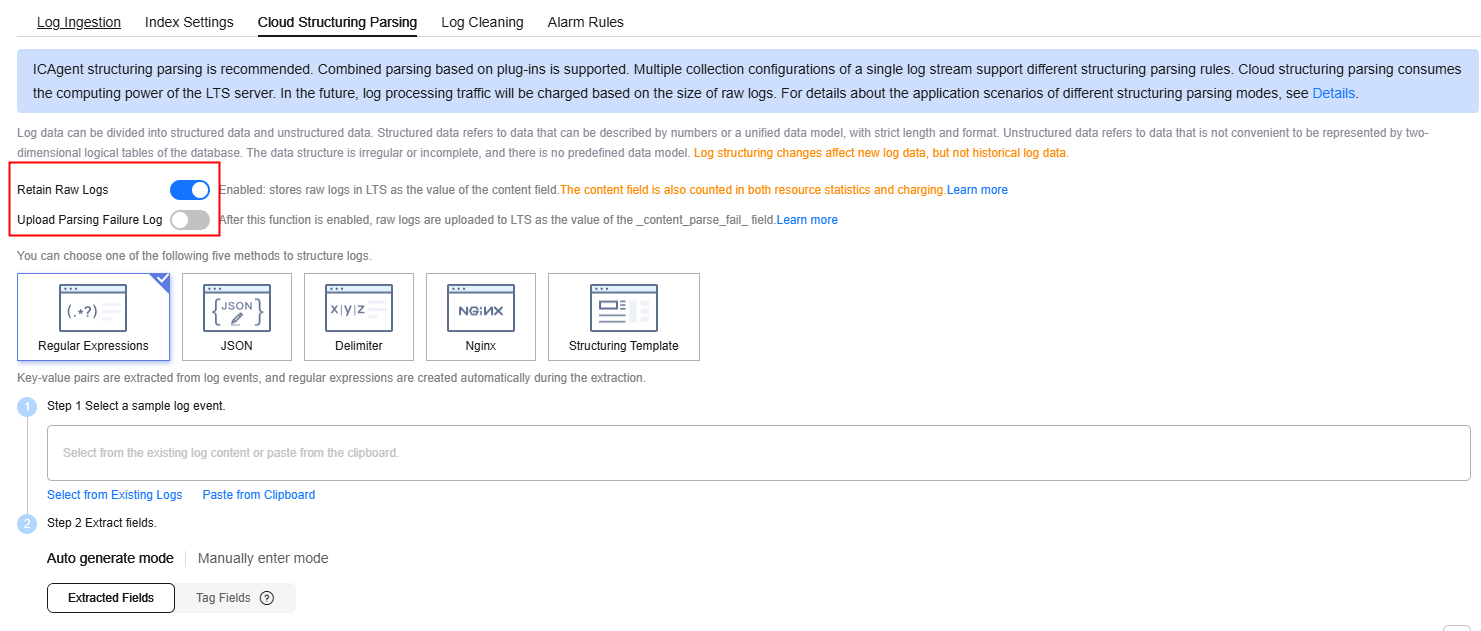

- If Retain Raw Logs is enabled, raw logs will be stored in LTS as the value of the content field. The content field is also counted in both resource statistics and charging.

- If Upload Parsing Failure Log is enabled, raw logs will be uploaded to LTS as the value of the _content_parse_fail_ field.

- The following describes how logs are reported when Retain Raw Logs and Upload Parsing Failure Log are enabled or disabled.

Figure 1 Cloud structuring parsing

Table 1 Log reporting description

| Parameter | Description |
| --- | --- |
| Retain Raw Logs enabled; Upload Parsing Failure Log enabled | Parsing succeeded: The parsed log and the raw log's content field are reported. Parsing failed: To avoid redundancy, only the raw log's content field is reported. The _content_parse_fail_ field is not reported. |
| Retain Raw Logs enabled; Upload Parsing Failure Log disabled | Parsing succeeded: The parsed log and the raw log's content field are reported. Parsing failed: The raw log's content field is reported. |
| Retain Raw Logs disabled; Upload Parsing Failure Log enabled | Parsing succeeded: The parsed log is reported. Parsing failed: The _content_parse_fail_ field is reported. |
| Retain Raw Logs disabled; Upload Parsing Failure Log disabled | Parsing succeeded: The parsed log is reported. Parsing failed: Only the system built-in and label fields are reported. |
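The reporting behavior described in Table 1 can be read as a small decision function. The following Python sketch is illustrative only: the function name `reported_parts` and the labels it returns are hypothetical and are not part of LTS. It assumes that system built-in and label fields accompany every case, which the table states explicitly only for the last one.

```python
from typing import List


def reported_parts(retain_raw: bool, upload_failure: bool, parsed_ok: bool) -> List[str]:
    """Return which log parts are reported, following Table 1.

    The labels are hypothetical names used for illustration only.
    """
    if parsed_ok:
        # Successful parsing always reports the parsed log; the raw log is
        # added as the content field only when Retain Raw Logs is enabled.
        return ["parsed log", "content"] if retain_raw else ["parsed log"]
    if retain_raw:
        # On failure, content already carries the raw log, so
        # _content_parse_fail_ is not reported even if the switch is on.
        return ["content"]
    if upload_failure:
        return ["_content_parse_fail_"]
    # Nothing beyond the system built-in and label fields is reported.
    return []


print(reported_parts(retain_raw=True, upload_failure=True, parsed_ok=False))
```

The ordering of the checks mirrors the table: Retain Raw Logs takes precedence over Upload Parsing Failure Log when parsing fails.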

- The following system fields cannot be extracted during log structuring: groupName, logStream, lineNum, content, logContent, logContentSize, collectTime, category, clusterId, clusterName, containerName, hostIP, hostId, hostName, nameSpace, pathFile, and podName.
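Before configuring a structuring rule, it can help to check planned field names against the system fields listed above. The sketch below is a local pre-check, not an LTS API: the helper name `conflicting_fields` is hypothetical, and it assumes names are compared case-sensitively.

```python
# System fields that cannot be extracted, copied from the list above.
SYSTEM_FIELDS = {
    "groupName", "logStream", "lineNum", "content", "logContent",
    "logContentSize", "collectTime", "category", "clusterId", "clusterName",
    "containerName", "hostIP", "hostId", "hostName", "nameSpace",
    "pathFile", "podName",
}


def conflicting_fields(planned_names):
    """Return the planned field names that collide with system fields."""
    # Assumption: exact, case-sensitive comparison.
    return sorted(name for name in planned_names if name in SYSTEM_FIELDS)


print(conflicting_fields(["time", "level", "hostIP", "content"]))
```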

- Regular Expressions: Extract fields using regular expressions.

- JSON: Extract key-value pairs from JSON log events.

- Delimiter: Extract fields using delimiters (such as commas and spaces).

- Nginx: Customize the format of access logs by using the log_format command.

- Structuring Template: Extract fields using a custom or system template.

- Modify or delete the configured structuring configuration.

- On the Cloud Structuring Parsing tab page, click the modify icon to modify the structuring configuration.

- On the Cloud Structuring Parsing tab page, click the delete icon to delete the structuring configuration.

Deleted structuring configurations cannot be restored. Exercise caution when performing this operation.

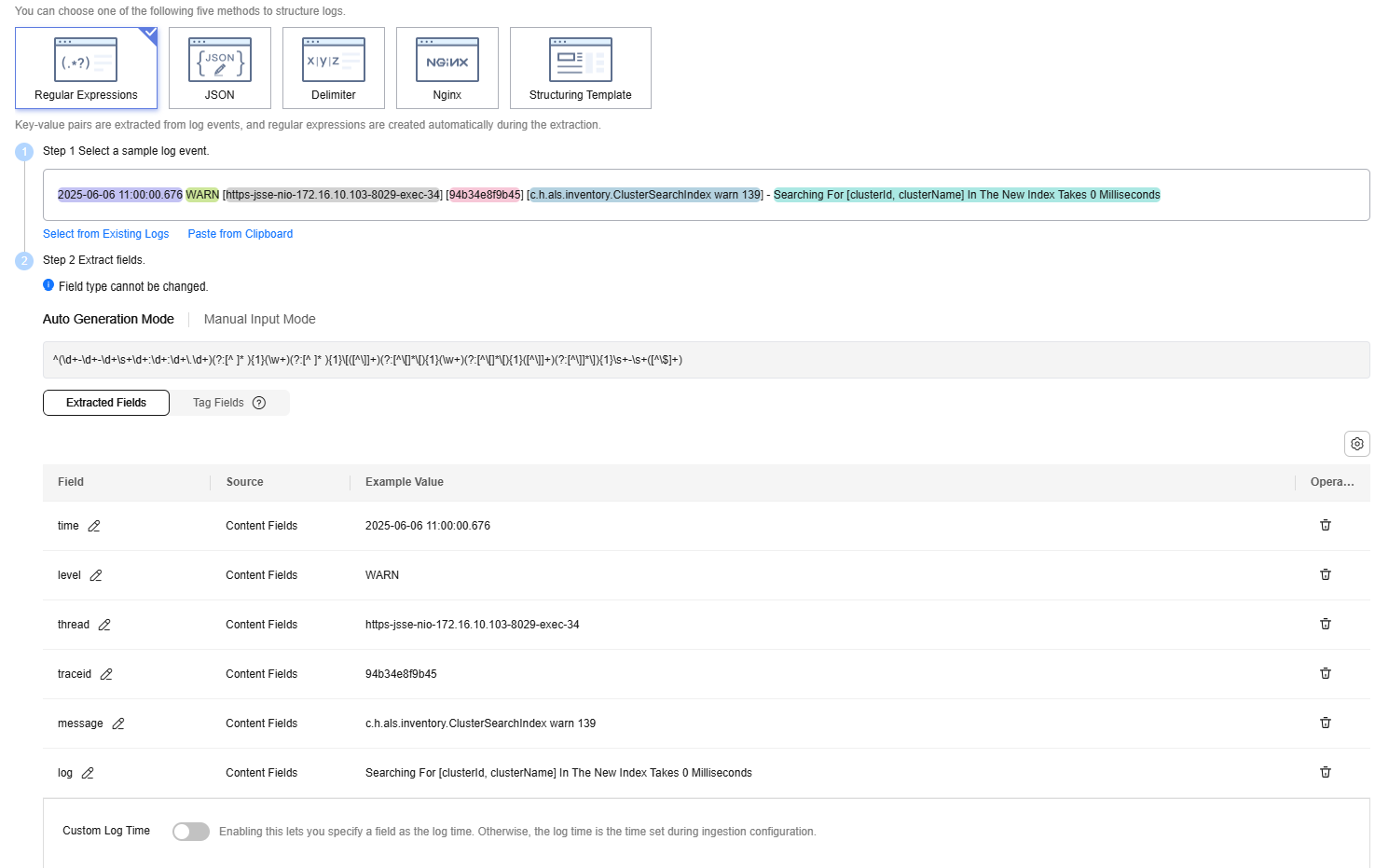

Regular Expressions

If you choose regular expressions, fields are extracted based on your defined regular expressions.

- Select a typical log event as the sample.

- Click Select from Existing Logs, select a log event, and click OK. You can select different time ranges to filter logs.

There are three types of time range: relative time from now, relative time from last, and specified time. Select a time range as required.

- From now: queries log data generated in a time range that ends with the current time, such as the previous 1, 5, or 15 minutes. For example, if the current time is 19:20:31 and 1 hour is selected as the relative time from now, the charts on the dashboard display the log data that is generated from 18:20:31 to 19:20:31.

- From last: queries log data generated in a time range that ends at the most recent whole time unit before the current time, such as the previous 1 or 15 minutes. For example, if the current time is 19:20:31 and 1 hour is selected as the relative time from last, the charts on the dashboard display the log data that is generated from 18:00:00 to 19:00:00.

- Specified: queries log data that is generated in a specified time range.
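The difference between the two relative time ranges can be sketched in Python. This is an illustration of the examples given above, not LTS code: the function names are hypothetical, and the alignment of "from last" to whole hours or minutes is inferred from the 18:00:00 to 19:00:00 example.

```python
from datetime import datetime, timedelta


def from_now(now, span):
    """Range that ends exactly at the current time."""
    return now - span, now


def from_last(now, count, unit):
    """Range that ends at the last whole unit boundary (assumed behavior)."""
    if unit == "hour":
        end = now.replace(minute=0, second=0, microsecond=0)
        step = timedelta(hours=1)
    elif unit == "minute":
        end = now.replace(second=0, microsecond=0)
        step = timedelta(minutes=1)
    else:
        raise ValueError("unit must be 'hour' or 'minute'")
    return end - count * step, end


now = datetime(2025, 6, 6, 19, 20, 31)
print(from_now(now, timedelta(hours=1)))   # 18:20:31 to 19:20:31
print(from_last(now, 1, "hour"))           # 18:00:00 to 19:00:00
```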

- Click Paste from Clipboard to paste the copied log content to the sample log box.

- Extract fields. Extracted fields are shown with their example values. You can extract fields in two ways:

- Auto Generation Mode: Select the log content you want to extract as a field in the sample log event. In the displayed dialog box, set a field name and click Add. A field name can contain only letters, digits, hyphens (-), underscores (_), and periods (.). It cannot start or end with a period, or end with double underscores (__).

For example, copy and paste the following raw log under Select a sample log event:

2025-06-06 11:00:00.676 WARN [https-jsse-nio-172.16.10.103-8029-exec-34] [94b34e8f9b45] [c.h.als.inventory.ClusterSearchIndex warn 139] - Searching For [clusterId, clusterName] In The New Index Takes 0 Milliseconds

Set the field names to time, level, thread, and message based on the raw log example. Figure 2 shows the automatically generated fields.

- Manual Input Mode: Enter a regular expression in the text box and click Extract Field. A regular expression may contain multiple capturing groups, which group strings with parentheses. There are three types of capturing groups:

- (exp): Capturing groups are numbered by counting their opening parentheses from left to right. The numbering starts with 1.

- (?<name>exp): named capturing group. It captures text that matches exp into the group name. The group name must start with a letter and contain only letters and digits. A group is recalled by group name or number.

- (?:exp): non-capturing group. It matches text that matches exp, but the group is not named or numbered and cannot be recalled.

- Delimiters split log content into multiple words. Select a portion between two adjacent delimiters as a field. Default delimiters: ,'";=()[]{}@&<>/:\\?\n\t\r and spaces.

- You can enter up to 5,000 characters for a regular expression. You do not have to name capturing groups when entering the regular expression. When you click Extract Field, those unnamed groups will be named as field1, field2, field3, and so on.

- Double underscores (__) are not allowed when you manually enter a regular expression. However, you can rename a field under Content Fields to include double underscores.
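To see how capturing groups turn the sample log event above into fields, the following Python sketch applies a hand-written regular expression to it. The expression is one possible pattern, not the one LTS generates. Note that Python's `re` module spells named groups as `(?P<name>exp)`, whereas the syntax described above is `(?<name>exp)`; to use the pattern in LTS, the `P` would need to be dropped.

```python
import re

SAMPLE = (
    "2025-06-06 11:00:00.676 WARN [https-jsse-nio-172.16.10.103-8029-exec-34] "
    "[94b34e8f9b45] [c.h.als.inventory.ClusterSearchIndex warn 139] - "
    "Searching For [clusterId, clusterName] In The New Index Takes 0 Milliseconds"
)

# Named groups become fields; (?:...) groups match text without creating a field.
PATTERN = re.compile(
    r"^(?P<time>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}) "
    r"(?P<level>\w+) "
    r"\[(?P<thread>[^\]]+)\] "
    r"(?:\[[^\]]*\] ){2}"      # skip the two bracketed segments that are not extracted
    r"- (?P<message>.*)$"
)

match = PATTERN.match(SAMPLE)
fields = match.groupdict() if match else {}
for name, value in fields.items():
    print(f"{name}: {value}")
```

The non-capturing group keeps the pattern aligned with the log layout without producing extra fields, which matches the four field names (time, level, thread, and message) used in the example.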

- Specify a field as the log time. For details, see Setting Custom Log Time.

- Click Save. The type of extracted fields cannot be changed after the structuring is complete.
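The field-name rules stated for Auto Generation Mode can be checked locally before you enter names on the console. The following Python sketch is an assumption-laden illustration: the helper `is_valid_field_name` is hypothetical, and it treats "letters" as ASCII letters, which the rules above do not specify.

```python
import re

# Allowed characters: letters, digits, hyphens, underscores, and periods.
# Assumption: "letters" means ASCII letters only.
_ALLOWED = re.compile(r"[A-Za-z0-9_.\-]+")


def is_valid_field_name(name):
    """Check a field name against the rules listed for Auto Generation Mode."""
    if not _ALLOWED.fullmatch(name):
        return False
    if name.startswith(".") or name.endswith("."):
        return False
    if name.endswith("__"):
        return False
    return True


for candidate in ["http.status_code", ".hidden", "trailing.", "count__", "has space"]:
    print(candidate, is_valid_field_name(candidate))
```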

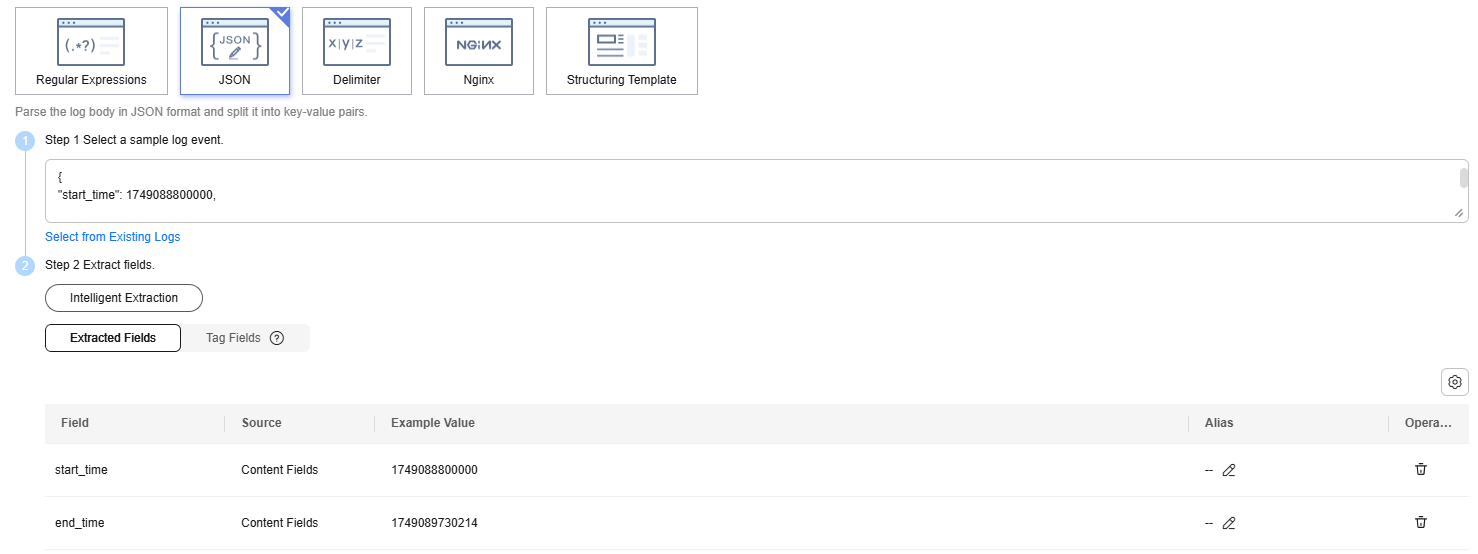

JSON

If you choose JSON, JSON logs are split into key-value pairs.

- Select a typical log event as the sample. Click Select from Existing Logs, select a log event, or enter a log event in the text box, and click OK. You can select different time ranges to filter logs.

- Extract fields. Extract fields from the log event. Extracted fields are shown with their example values.

Enter the following sample raw log in the text box and click Intelligent Extraction. Figure 3 shows the automatically generated fields.

{ "start_time": 1749088800000, "end_time": 1749089730214 }

After fields are extracted, check and edit the fields and save them as a template if needed. For details about rules for configuring structured fields, see Setting Structured Fields.

- The float data type has 16 digit precision. If a value contains more than 16 valid digits, the extracted content is incorrect, which affects quick analysis. In this case, you are advised to change the field type to string.

- If the data type of the extracted fields is set to long and the log content contains more than 16 valid digits, only the first 16 valid digits are displayed, and the subsequent digits are changed to 0.

- If the data type of the extracted fields is set to long and the log content contains more than 21 valid digits, the fields are identified as the float type. You are advised to change the field type to string.

- Specify a field as the log time. For details, see Setting Custom Log Time.

- Click Save. The type of extracted fields cannot be changed after the structuring is complete.
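The JSON mode and the precision notes above can be illustrated with a short Python sketch. It parses the sample log event into key-value pairs and then shows why a value with more than 16 significant digits is safer as a string. The sketch uses Python's own `json` module and double-precision `float`; how LTS stores long and float values internally is not described here, so the second half is an analogy rather than a reproduction of LTS behavior.

```python
import json

SAMPLE = '{ "start_time": 1749088800000, "end_time": 1749089730214 }'

# Each top-level key becomes a field; the value keeps its JSON type.
fields = json.loads(SAMPLE)
for key, value in fields.items():
    print(key, type(value).__name__, value)

# A 19-digit integer cannot be represented exactly as a double-precision
# float, so converting it changes the low-order digits.
big = 1234567890123456789
round_trip = int(float(big))
print(round_trip == big)

# Keeping the value as a string preserves every digit.
as_text = str(big)
print(as_text)
```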

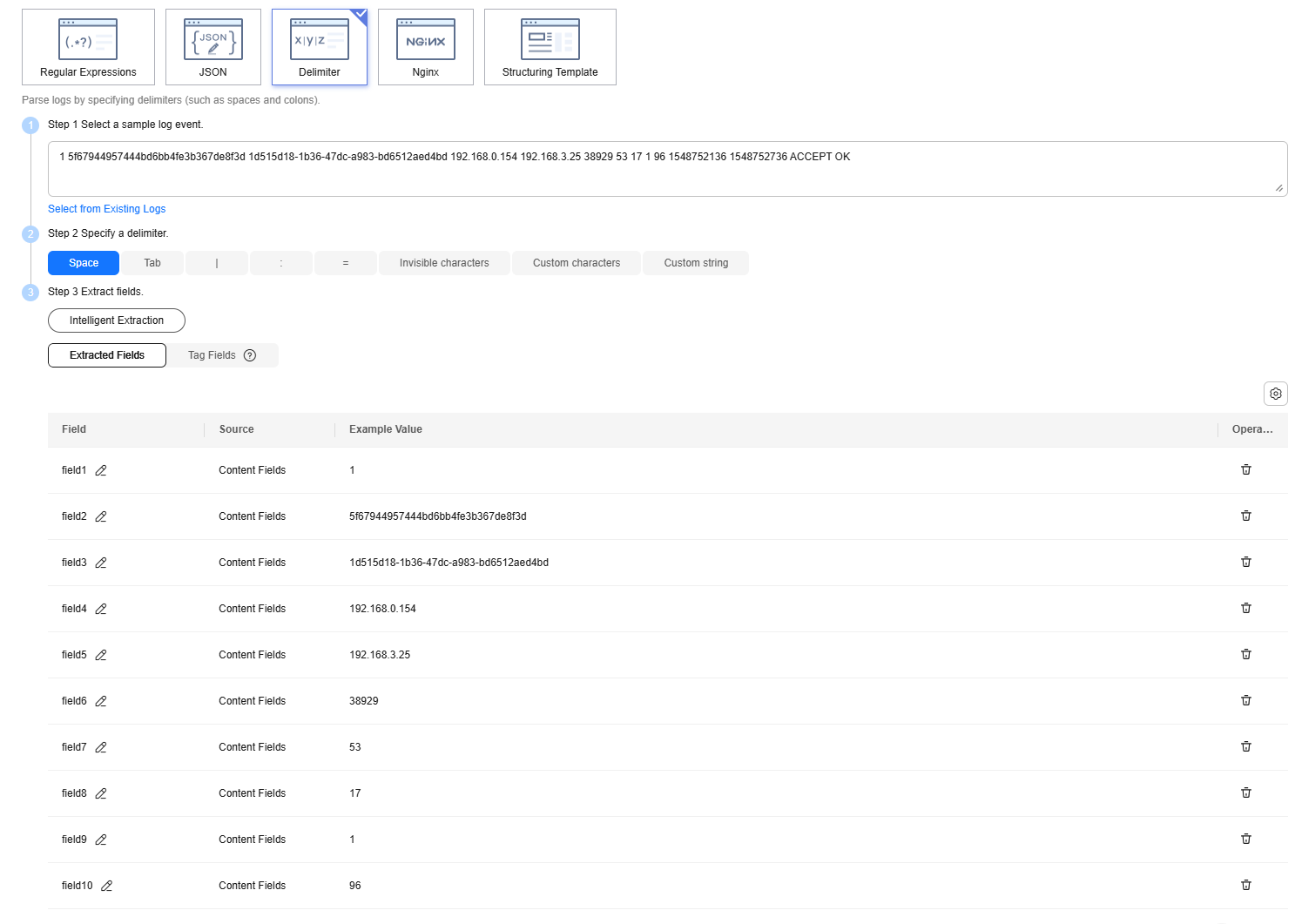

Delimiter

Logs can be parsed by delimiters, such as commas (,), spaces, or other special characters.

- Select a typical log event as the sample. Click Select from Existing Logs, select a log event, or enter a log event in the text box, and click OK. You can select different time ranges to filter logs.

There are three types of time range: relative time from now, relative time from last, and specified time. Select a time range as required.

- From now: queries log data generated in a time range that ends with the current time, such as the previous 1, 5, or 15 minutes. For example, if the current time is 19:20:31 and 1 hour is selected as the relative time from now, the charts on the dashboard display the log data that is generated from 18:20:31 to 19:20:31.

- From last: queries log data generated in a time range that ends at the most recent whole time unit before the current time, such as the previous 1 or 15 minutes. For example, if the current time is 19:20:31 and 1 hour is selected as the relative time from last, the charts on the dashboard display the log data that is generated from 18:00:00 to 19:00:00.

- Specified: queries log data that is generated in a specified time range.

- Select or customize a delimiter.

- For invisible characters, enter up to 4 hexadecimal characters starting with 0x for each delimiter, for example, 0x01. A maximum of 32 invisible characters can be entered.

- For custom characters, enter 1 to 10 characters, each as an independent delimiter.

- For a custom string, enter 1 to 30 characters as one whole delimiter.

- Extract fields. Extract fields from the log event. Extracted fields are shown with their example values.

Enter the following sample raw log in the text box and click Intelligent Extraction. Figure 4 shows the automatically generated fields.

1 5f67944957444bd6bb4fe3b367de8f3d 1d515d18-1b36-47dc-a983-bd6512aed4bd 192.168.0.154 192.168.3.25 38929 53 17 1 96 1548752136 1548752736 ACCEPT OK

After fields are extracted, check and edit the fields and save them as a template if needed. For details about rules for configuring structured fields, see Setting Structured Fields.

The float data type has seven digit precision.

If a value contains more than seven valid digits, the extracted content is incorrect, which affects quick analysis. In this case, you are advised to change the field type to string.

- Specify a field as the log time. For details, see Setting Custom Log Time.

- Click Save. The type of extracted fields cannot be changed after the structuring is complete.
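The delimiter mode can be pictured as a plain split of the log event. The following Python sketch splits the sample log event above on spaces and also shows how an invisible delimiter written as 0x01 maps to a character. The names field1, field2, and so on are placeholders chosen for this sketch; the names LTS assigns in delimiter mode are not stated in this section.

```python
SAMPLE = (
    "1 5f67944957444bd6bb4fe3b367de8f3d 1d515d18-1b36-47dc-a983-bd6512aed4bd "
    "192.168.0.154 192.168.3.25 38929 53 17 1 96 1548752136 1548752736 ACCEPT OK"
)


def split_fields(line, delimiter=" "):
    """Split a log event on one delimiter and number the resulting values."""
    values = line.split(delimiter)
    # Placeholder names for illustration only.
    return {f"field{i}": value for i, value in enumerate(values, start=1)}


def invisible_delimiter(hex_text):
    """Convert a hexadecimal notation such as '0x01' to the character it denotes."""
    return chr(int(hex_text, 16))


fields = split_fields(SAMPLE)
print(len(fields), fields["field4"], fields["field13"])

# The same split works with an invisible delimiter.
print(split_fields("a\x01b", invisible_delimiter("0x01")))
```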

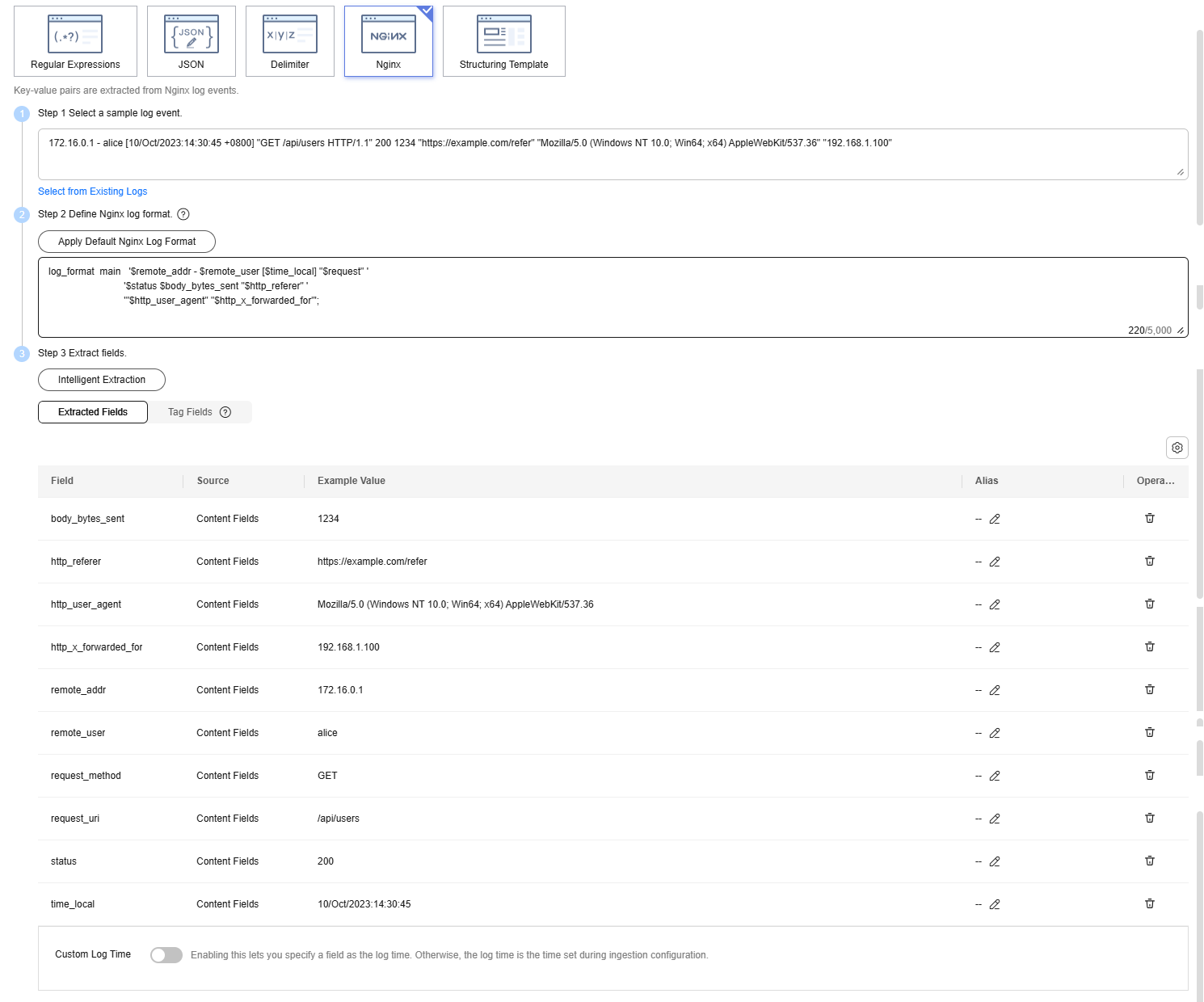

Nginx

You can customize the format of access logs by the log_format command.

- Select a typical log event as the sample. Click Select from Existing Logs, select a log event, or enter a log event in the text box, and click OK. You can select different time ranges to filter logs.

There are three types of time range: relative time from now, relative time from last, and specified time. Select a time range as required.

- From now: queries log data generated in a time range that ends with the current time, such as the previous 1, 5, or 15 minutes. For example, if the current time is 19:20:31 and 1 hour is selected as the relative time from now, the charts on the dashboard display the log data that is generated from 18:20:31 to 19:20:31.

- From last: queries log data generated in a time range that ends at the most recent whole time unit before the current time, such as the previous 1 or 15 minutes. For example, if the current time is 19:20:31 and 1 hour is selected as the relative time from last, the charts on the dashboard display the log data that is generated from 18:00:00 to 19:00:00.

- Specified: queries log data that is generated in a specified time range.

- Define the Nginx log format. You can click Apply Default Nginx Log Format to apply the default format.

In standard Nginx configuration files, the portion starting with log_format indicates the log configuration.

- The default configuration is as follows. For details about the default fields, see Table 2. For details about the common extended fields, see Table 3.

log_format main '$remote_addr - $remote_user [$time_local] "$request" ' '$status $body_bytes_sent "$http_referer" ' '"$http_user_agent" "$http_x_forwarded_for"';

Table 2 Default fields

| Field | Description |
| --- | --- |
| remote_addr | Client IP address. |
| remote_user | Client username. |
| time_local | Server time, which must be enclosed in brackets ([]). |
| request | Request URL and HTTP protocol. |
| status | Request status. |
| body_bytes_sent | Number of bytes sent to the client, excluding the size of the response header. |
| http_referer | Source page URL. |
| http_user_agent | Client browser information. |
| http_x_forwarded_for | Real IP address of the client forwarded by the proxy server. |

Table 3 Extended fields

| Field | Description |
| --- | --- |
| request_time | Total time of the entire request, in seconds (accurate to milliseconds). |
| upstream_response_time | Backend server response time. |
| http_cookie | Cookie data sent by the client. |
| http_x_real_ip | Real IP address of the client. |
| uri | Request URI, for example, /index.html. |
| args | Parameters in the request line, for example, ?param1=value1&param2=value2. |
| content_length | The Content-Length field in the request header. |
| content_type | The Content-Type field in the request header. |
| host | The Host field in the request header, or the name of the server that processes the request. |
| server_addr | Server IP address. |
| server_name | Server name. |
| server_port | Server port. |
| server_protocol | Protocol version used by the server to send responses to the client, for example, HTTP/1.1. |
| scheme | Request protocol, for example, http or https. |

- You can also customize a format. The format must meet the following requirements:

- Must be configured using Nginx and cannot be empty.

- Must start with log_format and contain apostrophes (') and field names.

- Can contain up to 5,000 characters.

- Must match the sample log event.

- Any character except letters, digits, underscores (_), and hyphens (-) can be used to separate the log_format fields.

- Must end with an apostrophe (') or an apostrophe plus a semicolon (';).
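Some of the requirements above can be checked locally before a custom format is pasted into the console. The following Python sketch covers only the checks that can be expressed on the text itself (it does not verify that the format matches the sample log event). The helper `check_log_format` is hypothetical and is not an LTS or Nginx API.

```python
import re


def check_log_format(text):
    """Return a list of problems found in a custom log_format string."""
    problems = []
    stripped = text.strip()
    if not stripped:
        problems.append("format is empty")
        return problems
    if not stripped.startswith("log_format"):
        problems.append("format must start with log_format")
    if "'" not in stripped:
        problems.append("format must contain apostrophes")
    if not re.search(r"\$\w+", stripped):
        problems.append("format must contain field names such as $status")
    if len(stripped) > 5000:
        problems.append("format exceeds 5,000 characters")
    if not (stripped.endswith("'") or stripped.endswith("';")):
        problems.append("format must end with ' or ';")
    return problems


default_format = (
    "log_format main '$remote_addr - $remote_user [$time_local] \"$request\" ' "
    "'$status $body_bytes_sent \"$http_referer\" ' "
    "'\"$http_user_agent\" \"$http_x_forwarded_for\"';"
)
print(check_log_format(default_format))
print(check_log_format("log_format main $remote_addr"))
```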

- Extract fields. Extract fields from the log event. Extracted fields are shown with their example values.

Enter the following sample raw log in the text box and click Intelligent Extraction. Figure 5 shows the automatically generated fields.

172.16.0.1 - alice [10/Oct/2023:14:30:45 +0800] "GET /api/users HTTP/1.1" 200 1234 "https://example.com/refer" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36" "192.168.1.100"

Configure the following Nginx log format in Step 2.

log_format main '$remote_addr - $remote_user [$time_local] "$request" ' '$status $body_bytes_sent "$http_referer" ' '"$http_user_agent" "$http_x_forwarded_for"';

After fields are extracted, check and edit the fields and save them as a template if needed. For details about rules for configuring structured fields, see Setting Structured Fields.

- The float data type has seven digit precision.

- If a value contains more than seven valid digits, the extracted content is incorrect, which affects quick analysis. In this case, you are advised to change the field type to string.

- Specify a field as the log time. For details, see Setting Custom Log Time.

- Click Save. The type of extracted fields cannot be changed after the structuring is complete.
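To illustrate how a log_format definition relates to the extracted fields, the following Python sketch converts the default format into a regular expression and applies it to the sample access log above. This is one simple way to do the conversion and is not how LTS is documented to implement it. `FORMAT_BODY` is the concatenation of the quoted pieces of the default log_format, and the function name `format_to_regex` is hypothetical.

```python
import re

# Concatenation of the quoted pieces of the default log_format.
FORMAT_BODY = (
    '$remote_addr - $remote_user [$time_local] "$request" '
    '$status $body_bytes_sent "$http_referer" '
    '"$http_user_agent" "$http_x_forwarded_for"'
)

SAMPLE = (
    '172.16.0.1 - alice [10/Oct/2023:14:30:45 +0800] "GET /api/users HTTP/1.1" '
    '200 1234 "https://example.com/refer" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36" "192.168.1.100"'
)


def format_to_regex(fmt):
    """Turn each $variable into a named group and escape the literal text."""
    # re.split with a capturing group alternates literal text and variable names.
    parts = re.split(r"\$(\w+)", fmt)
    pattern = ""
    for index, part in enumerate(parts):
        if index % 2 == 0:
            pattern += re.escape(part)          # literal separator text
        else:
            pattern += f"(?P<{part}>.*?)"       # lazily matched field value
    return re.compile("^" + pattern + "$")


match = format_to_regex(FORMAT_BODY).match(SAMPLE)
fields = match.groupdict() if match else {}
for name, value in fields.items():
    print(f"{name}: {value}")
```

The literal separators between variables are what make the lazy groups stop at the right place, which is why the format must match the sample log event.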

Structuring Template

This mode extracts fields using a custom template or a system template.

For details, see Setting a Structuring Template.

Helpful Links

LTS allows you to create, query, and delete structuring configurations, as well as query structuring templates by calling APIs. For details, see Cloud Log Structuring.
