Pod Eviction Upon a GPU Fault

A GPU fault occurs when a GPU cannot reliably deliver compute services because of hardware damage, driver anomalies, communication failures, or environmental factors. Such faults can cause pod startup failures, interrupted tasks, and wasted resources, particularly for AI and high-performance computing workloads. To mitigate these risks, CCE can evict pods when a GPU fault occurs. When CCE AI Suite (NVIDIA GPU) detects a qualifying GPU fault, it evicts the pods that carry a specific label. You can then decide whether to recreate or reschedule the evicted pods, which helps maintain service continuity and system stability.

Prerequisites

- A CCE standard or Turbo cluster of v1.27 or later is available.

- An NVIDIA GPU node is running properly in the cluster.

- The CCE AI Suite (NVIDIA GPU) add-on has been installed in the cluster. The add-on version must meet the following requirements:

  - If the cluster version is v1.27, the add-on version must be 2.2.1 or later.

  - If the cluster version is v1.28 or later, the add-on version must be 2.8.1 or later.
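The version requirements above can be expressed as a small check. The following is a minimal sketch, not part of CCE: the function names are hypothetical, the cluster version is assumed to look like `v1.27` or `v1.28.3-r0`, the add-on version is assumed to be a dotted string such as `2.8.1`, and `sort -V` (GNU coreutils) is assumed to be available.

```shell
# min_addon_version CLUSTER_VERSION
# Prints the minimum add-on version listed in the prerequisites.
# Fails for clusters earlier than v1.27, which are not supported.
min_addon_version() {
  v=${1#v}            # strip a leading "v": v1.27.3 -> 1.27.3
  rest=${v#*.}        # drop the major version: 1.27.3 -> 27.3
  minor=${rest%%.*}   # keep the minor version: 27.3 -> 27
  case "$minor" in
    ''|*[!0-9]*) echo "unrecognized cluster version: $1" >&2; return 1 ;;
  esac
  if [ "$minor" -lt 27 ]; then
    echo "cluster $1 is earlier than v1.27" >&2
    return 1
  elif [ "$minor" -eq 27 ]; then
    echo "2.2.1"
  else
    echo "2.8.1"
  fi
}

# version_ge A B: succeeds when version A is equal to or later than version B.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# addon_meets_prereq CLUSTER_VERSION ADDON_VERSION
addon_meets_prereq() {
  required=$(min_addon_version "$1") || return 1
  version_ge "$2" "$required"
}
```

For example, `addon_meets_prereq v1.28 2.8.1` succeeds, while `addon_meets_prereq v1.28 2.2.1` fails.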

Notes and Constraints

- After pod eviction upon a GPU fault is enabled, eviction is triggered only when the CCE AI Suite (NVIDIA GPU) add-on detects a specific type of GPU fault. How CCE responds to such a fault depends on the isolation policy associated with the fault type, which directly influences how pods are rescheduled. For details about the GPU fault types that support automatic pod eviction and the isolation policy for each fault type, see Table 1.

  - If the detected fault leads to GPU isolation, the affected GPU is marked as unavailable, whether the isolation applies to an individual GPU or to the entire faulty GPU node. The evicted pods are not rescheduled to that GPU until it recovers, which helps maintain service continuity.

  - If the detected fault does not lead to GPU isolation, the evicted pods may be assigned to the same GPU again in subsequent scheduling cycles.

- Pod eviction upon a GPU fault applies only to pods that use an entire GPU card or a shared GPU. It does not apply to pods that use GPU virtualization.

Process

| Procedure | Description |
|---|---|
| Step 1: Enable Pod Eviction Upon a GPU Fault | Enable pod eviction upon a GPU fault in the device-plugin component of the CCE AI Suite (NVIDIA GPU) add-on. |
| Step 2: Deploy a Workload with the Specified Label | After pod eviction upon a GPU fault is enabled, CCE automatically evicts the affected pods with the designated label upon the detection of specific GPU faults. The required label must be added to the pod specification during the workload deployment. This label allows CCE to identify eligible pods and trigger eviction when there is a fault. |
| Step 3: Check Whether Pod Can Be Evicted | If there is a GPU fault, check the events of the node hosting the faulty GPU to confirm whether the affected pod was evicted. |
| Other Operations | (Optional) Configure which Xid errors are treated as critical and which are application-induced based on your service requirements. The values specified for these two types of Xid errors must be different. If an error is identified as critical, CCE will isolate the affected GPU. If the error is determined to be application-induced, the GPU will not be isolated. By default, critical Xid errors are 74 and 79, and application-induced Xid errors are 13, 31, 43, 45, 68, and 137. |

Step 1: Enable Pod Eviction Upon a GPU Fault

Enable pod eviction upon a GPU fault in the device-plugin component of the CCE AI Suite (NVIDIA GPU) add-on.

- Log in to the CCE console and click the cluster name to access the cluster console. The Overview page is displayed.

- In the navigation pane, choose Add-ons. In the right pane, find the CCE AI Suite (NVIDIA GPU) add-on and click Edit.

- In the window that slides out from the right, click Edit YAML. Set enable_pod_eviction_on_gpu_error to true to enable pod eviction upon a GPU fault.

```yaml
...
custom:
  annotations: {}
  compatible_with_legacy_api: false
  component_schedulername: kube-scheduler
  disable_mount_path_v1: false
  disable_nvidia_gsp: true
  driver_mount_paths: bin,lib64
  enable_fault_isolation: true
  enable_health_monitoring: true
  enable_metrics_monitoring: true
  enable_simple_lib64_mount: true
  enable_xgpu: false
  enable_xgpu_burst: false
  gpu_driver_config: {}
  health_check_xids_v2: 74,79   # The values must be different from those designated as application-induced Xid errors.
  install_nvidia_peermem: false
  is_driver_from_nvidia: true
  enable_pod_eviction_on_gpu_error: true
...
```

- enable_pod_eviction_on_gpu_error controls whether to enable pod eviction upon a GPU fault. The options include:

  - true: Pod eviction upon a GPU fault is enabled.

  - false: (Default) Pod eviction upon a GPU fault is disabled.

- After completing the settings, click OK in the lower right corner of the page. CCE AI Suite (NVIDIA GPU) is then upgraded automatically. Once the add-on status changes to Running, pod eviction upon a GPU fault takes effect.
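If you keep a local copy of the edited add-on YAML (for review or version control), a quick text check can confirm that the switch is set before you click OK. This is a minimal sketch under that assumption; the function name `eviction_enabled` and the file path are hypothetical, and the check only matches the key as plain text rather than parsing the YAML.

```shell
# eviction_enabled FILE
# Succeeds when FILE contains a line that sets
# enable_pod_eviction_on_gpu_error to true (a trailing comment is allowed).
eviction_enabled() {
  grep -Eq '^[[:space:]]*enable_pod_eviction_on_gpu_error:[[:space:]]*true[[:space:]]*(#.*)?$' "$1"
}

# Example usage (addon-values.yaml is a hypothetical local copy):
#   eviction_enabled addon-values.yaml && echo "pod eviction switch is on"
```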

Step 2: Deploy a Workload with the Specified Label

After pod eviction upon a GPU fault is enabled, CCE automatically evicts the affected pods with the designated label upon the detection of specific GPU faults. The required label must be added to the pod specification during the workload deployment. This label allows CCE to identify eligible pods and trigger eviction when there is a fault.

- Log in to the CCE console and click the cluster name to access the cluster console. In the navigation pane, choose Workloads. In the upper right corner of the displayed page, click Create Workload.

- In the Container Settings area, click Basic Info, set GPU Quota to GPU card or Shared, and configure the related parameters.

- GPU card: An entire physical GPU will be exclusively allocated to the target pod.

- Shared: Multiple pods can share a physical GPU, including its compute and memory resources.

Figure 1 Using a GPU card

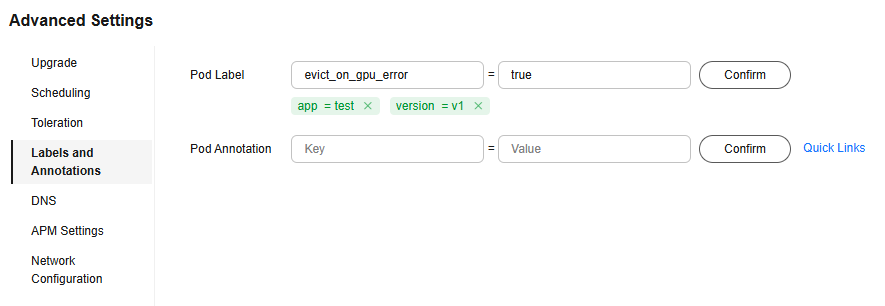

- In the Advanced Settings area, click Labels and Annotations and add the evict_on_gpu_error=true label. When the add-on detects a GPU fault, it evicts the pods with this label from the faulty GPU.

Figure 2 Adding the required label

- Configure other parameters by referring to Creating a Workload. Then, click Create Workload in the lower right corner. The workload is created when its status changes to Running.
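For a workload that already exists, the label can also be added to the pod template from the command line. The sketch below wraps `kubectl patch` in a hypothetical helper, `add_eviction_label`; the workload name in the usage line is a placeholder. It is intended for controllers whose pod template can be updated, such as Deployments and StatefulSets. A Job's pod template cannot be changed after creation, so a Job needs the label at creation time. Note that `kubectl label` on a Deployment labels only the Deployment object, not its pods, which is why the pod template is patched instead.

```shell
# add_eviction_label KIND NAME [NAMESPACE]
# Adds evict_on_gpu_error: "true" to spec.template.metadata.labels.
# Changing the pod template triggers a rollout, so the pods are recreated.
add_eviction_label() {
  kubectl patch "$1" "$2" -n "${3:-default}" --type merge \
    -p '{"spec":{"template":{"metadata":{"labels":{"evict_on_gpu_error":"true"}}}}}'
}

# Example usage (my-gpu-app is a placeholder name):
#   add_eviction_label deployment my-gpu-app
```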

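To create the workload using kubectl instead, follow the steps below. A job is used as an example. For other workload types, add the same label in the same field (the labels of the pod template).

- Use kubectl to access the cluster. For details, see Accessing a Cluster Using kubectl.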

- Create a YAML file for creating a job with the specified label.

```shell
vim k8-job.yaml
```

Add the evict_on_gpu_error: "true" label to the workload. The file content is as follows:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: k8-job
spec:
  completions: 2
  parallelism: 2
  template:
    metadata:
      # Manually add the specific label to the workload.
      labels:
        evict_on_gpu_error: "true"   # When the add-on detects a GPU fault, it evicts the pods with this label from the faulty GPU.
    spec:
      restartPolicy: Never
      schedulerName: volcano
      containers:
        - name: k8-job
          image: pytorch:latest   # Replace it with the needed image.
          imagePullPolicy: IfNotPresent
          command: ["/bin/bash", "-c", "python /etc/scripts/run.py"]   # Replace it with the needed application command.
          resources:
            limits:
              nvidia.com/gpu: 1
            requests:
              nvidia.com/gpu: 1
```

- Create the workload.

```shell
kubectl apply -f k8-job.yaml
```

If information similar to the following is displayed, the workload has been created:

```
job.batch/k8-job created
```

- Check whether the pods have been created.

```shell
kubectl get pod -n default
```

If information similar to the following is displayed and the status of every pod is Running, the pods have been created.

```
NAME           READY   STATUS    RESTARTS   AGE
k8-job-jdjbb   1/1     Running   0          28s
k8-job-pr5m4   1/1     Running   0          28s
```
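To confirm that the label actually reached the pods, you can filter by it with a label selector. The following is a small sketch; `list_evictable_pods` is a hypothetical helper name, and the namespace defaults to `default` as in the command above.

```shell
# list_evictable_pods [NAMESPACE]
# Lists the pods that carry the evict_on_gpu_error=true label,
# together with the nodes they are running on.
list_evictable_pods() {
  kubectl get pods -n "${1:-default}" -l evict_on_gpu_error=true -o wide
}

# Example usage:
#   list_evictable_pods
```

Pods that do not appear in this list are not evicted by this feature when a GPU fault is detected.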

Step 3: Check Whether Pod Can Be Evicted

If there is a GPU fault, check the events of the node hosting the faulty GPU to confirm whether the affected pod was evicted.

- Log in to the CCE console and click the cluster name to access the cluster console. The Overview page is displayed.

- In the navigation pane, choose Nodes. In the right pane, click the Nodes tab.

- Locate the row containing the node and click View Events in the Operation column. If an event similar to the one shown in the figure below is displayed, the pod has been evicted.

Figure 3 Pod eviction event
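The node events can also be read with kubectl. The sketch below wraps the query in a hypothetical helper, `node_events`; the node name in the usage line is a placeholder, and the exact wording of the eviction event is not reproduced here because it depends on the add-on.

```shell
# node_events NODE_NAME
# Lists the events recorded for the given node, oldest first.
node_events() {
  kubectl get events -A \
    --field-selector "involvedObject.kind=Node,involvedObject.name=$1" \
    --sort-by=.lastTimestamp
}

# Example usage (replace the placeholder with the name of the node hosting the faulty GPU):
#   node_events my-gpu-node
```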

Other Operations

By default, critical Xid errors are 74 and 79, and application-induced Xid errors are 13, 31, 43, 45, 68, and 137. You can configure which Xid errors are treated as critical and which are application-induced based on your service requirements. The values specified for these two types of Xid errors must be different. If an error is identified as critical, CCE will isolate the affected GPU. If the error is determined to be application-induced, the GPU will not be isolated.

These operations are optional.
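Because the two Xid lists must not share any value, it can help to check them before editing the add-on configuration. The following is a minimal sketch; `xid_overlap` is a hypothetical helper that takes two comma-separated lists and prints every value that appears in both.

```shell
# xid_overlap CRITICAL_XIDS APP_INDUCED_XIDS
# Prints each Xid found in both comma-separated lists, one per line.
# No output means the lists are disjoint, as required.
xid_overlap() {
  app=",$(printf '%s' "$2" | tr -d ' '),"
  for xid in $(printf '%s' "$1" | tr ',' ' '); do
    case "$app" in
      *",$xid,"*) echo "$xid" ;;
    esac
  done
}

# Example usage with the default values:
#   xid_overlap "74,79" "13,31,43,45,68,137"
```

With the default values the function prints nothing. If, for example, 13 were added to the critical list while it remained in the application-induced list, the function would print 13.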
