Using WAF to Block Crawler Attacks

Application Scenarios

Web crawlers make network information collection and query easy, but they also introduce the following negative impacts:

- Web crawlers can consume excessive server bandwidth and increase server load because they use specific policies to browse as much high-value information on a website as possible.

- Bad actors may use web crawlers to launch DoS attacks against websites. As a result, websites may fail to provide normal services due to resource exhaustion.

- Bad actors may use web crawlers to steal mission-critical data on your websites, which will damage your economic interests.

To comprehensively mitigate crawler attacks against websites, WAF provides three anti-crawler policies: general check and web shell detection, which identify crawlers by User-Agent; website anti-crawler, which checks browser validity; and CC attack protection, which limits the access rate.

Overview

Figure 1 shows how WAF detects crawlers, where step 1 and step 2 are called JS challenges and step 3 is called JS authentication.

- If the client is a normal browser, the JavaScript code it receives triggers it to automatically send the request to WAF again. WAF then forwards the request to the origin server. This process is called JavaScript verification.

- If the client is a crawler, the received JavaScript code does not trigger it, so it does not send the request to WAF again. The client fails JavaScript authentication.

- If a crawler fabricates a WAF authentication request and sends it to WAF, WAF blocks the request. The client fails JavaScript authentication.

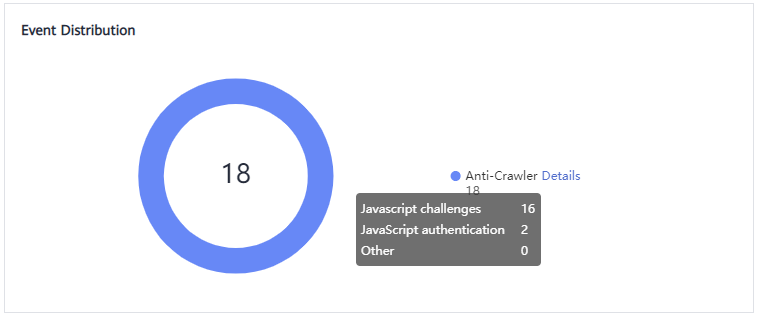
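The three cases above can be sketched as a small simulation. This is only an illustration, not WAF's actual implementation: the token scheme (an HMAC over the client IP address), the `SECRET` key, and the function names are assumptions made for this example.

```python
import hashlib
import hmac
from typing import Optional

SECRET = b"demo-secret"  # hypothetical key, used only in this sketch


def issue_token(client_ip: str) -> str:
    """Token that the injected JavaScript is assumed to compute and send back."""
    return hmac.new(SECRET, client_ip.encode(), hashlib.sha256).hexdigest()


def handle_request(client_ip: str, token: Optional[str]) -> str:
    """Classify a request according to the three cases described above."""
    if token is None:
        # First request: answer with JavaScript code instead of the page.
        return "challenge"
    if hmac.compare_digest(token, issue_token(client_ip)):
        # The browser ran the script and resent the request with a valid token.
        return "forward"
    # A fabricated authentication request is blocked.
    return "block"
```

In this sketch, a browser first receives `"challenge"`, then resends the request with the token and gets `"forward"`. A crawler that cannot run JavaScript never sends the second request, and one that sends a made-up token gets `"block"`.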

By collecting statistics on the number of JavaScript challenge and authentication responses, the system calculates how many requests the JavaScript anti-crawler has defended against. In Figure 2, the JavaScript anti-crawler has logged 18 events, 16 of which are JavaScript challenge responses and 2 of which are JavaScript authentication responses. Other indicates the number of WAF authentication requests fabricated by the crawler.
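The arithmetic behind these counters can be reproduced with a short tally. The event type names (`js_challenge`, `js_authentication`, `other`) are hypothetical labels chosen for this sketch and are not WAF log fields.

```python
from collections import Counter


def summarize(events):
    """Count anti-crawler events by type and return the totals."""
    counts = Counter(events)
    return {
        "challenge": counts["js_challenge"],
        "authentication": counts["js_authentication"],
        "other": counts["other"],
        "total": sum(counts.values()),
    }


# Sample data matching the example in the text: 16 challenges and 2 authentications.
events = ["js_challenge"] * 16 + ["js_authentication"] * 2
summary = summarize(events)
```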

Limitations and Constraints

- The cloud WAF standard edition does not support the website anti-crawler function.

- Cookies must be enabled and JavaScript supported by any browser used to access a website protected by anti-crawler protection rules.

- If your service is connected to CDN, exercise caution when using the JS anti-crawler function.

CDN caching may impact JS anti-crawler performance and page accessibility.

Resource and Cost Planning

| Resource | Description | Monthly Fee |
|---|---|---|
| Web Application Firewall | Cloud - professional edition: | For details about pricing rules, see Billing Description. |

Step 1: Buy the Professional Edition Cloud WAF

The following describes how to buy the professional edition cloud WAF.

- Log in to the Huawei Cloud management console.

- On the management console page, choose .

- In the upper right corner of the page, click Buy WAF. On the purchase page displayed, select Cloud Mode for WAF Mode.

- Region: Select the region nearest to the services that WAF will protect.

- Edition: Select Professional.

- Expansion Package and Required Duration: Set them based on site requirements.

- Confirm the product details and click Buy Now in the lower right corner of the page.

- Check the order details and read the WAF Disclaimer. Then, select the box and click Pay Now.

- On the payment page, select a payment method and pay for your order.

Step 2: Add Website Information to WAF

The following example shows how to add website information to WAF in cloud CNAME access mode.

- For details about the cloud load balancer access mode, see Connecting a Website to WAF (Cloud Mode - ELB Access).

- For details about the dedicated mode, see Connecting a Website to WAF (Dedicated Mode).

- In the navigation pane on the left, choose Website Settings.

- In the upper left corner of the website list, click Add Website.

- Select Cloud - CNAME and click Configure Now.

- Configure website information as prompted.

Figure 3 Configuring basic information

Table 2 Key parameters

| Parameter | Description | Example Value |
|---|---|---|
| Domain Name | Domain name you want to add to WAF for protection.<br>- The domain name has an ICP license.<br>- You can enter a single domain name (for example, top-level domain name example.com or level-2 domain name www.example.com) or a wildcard domain name (*.example.com). | www.example.com |
| Protected Port | The port over which the website traffic goes. | Standard ports |
| Server Configuration | Web server address settings. You need to configure the client protocol, server protocol, server weights, server address, and server port.<br>- Client Protocol: protocol used by a client to access a server. The options are HTTP and HTTPS.<br>- Server Protocol: protocol used by WAF to forward client requests. The options are HTTP and HTTPS.<br>- Server Address: public IP address (generally corresponding to the A record of the domain name configured on the DNS) or domain name (generally corresponding to the CNAME record of the domain name configured on the DNS) of the web server that a client accesses.<br>- Server Port: service port over which the WAF instance forwards client requests to the origin server.<br>- Weight: Requests are distributed across backend origin servers based on the load balancing algorithm you select and the weight you assign to each server. | Client Protocol: HTTP<br>Server Protocol: HTTP<br>Server Address: IPv4 XXX.XXX.1.1<br>Server Port: 80 |
| Use Layer-7 Proxy | You need to configure whether you deploy layer-7 proxies in front of WAF. Set this parameter based on your website deployment. | Yes |

- Click Next. Then, whitelist the WAF back-to-source IP addresses, test WAF, and modify DNS records as prompted.

Figure 4 Domain name added to WAF
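To illustrate how the Weight parameter in Server Configuration can influence request distribution, the following sketch implements a simple weighted round robin. It is an assumption-based example only: the algorithm WAF actually applies depends on the load balancing algorithm you select, and the function name and server addresses are hypothetical.

```python
from itertools import cycle
from typing import Dict, Iterator


def weighted_round_robin(servers: Dict[str, int]) -> Iterator[str]:
    """Cycle through origin servers in proportion to their integer weights."""
    # Repeat each address as many times as its weight, then loop forever.
    expanded = [addr for addr, weight in servers.items() for _ in range(weight)]
    return cycle(expanded)


# Hypothetical origin servers: the first receives three times as many requests.
picker = weighted_round_robin({"192.0.2.10:80": 3, "192.0.2.11:80": 1})
first_eight = [next(picker) for _ in range(8)]
```

A server with weight 0 never appears in the rotation in this sketch.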

Step 3: Enable General Check and Web Shell Detection (Identifying User-Agent)

General check and web shell detection in WAF can help detect and block threats such as malicious crawlers and web shells.

- In the navigation pane on the left, choose Website Settings.

- In the Policy column of the row containing the domain name, click the number to go to the Policies page.

- Ensure that Basic Web Protection is enabled.

Figure 5 Basic Web Protection configuration area

- On the Protection Status page, enable General Check and Webshell Detection.

Figure 6 Protection configuration

If WAF detects that a malicious crawler is crawling your website, WAF immediately blocks it and logs the event. You can view the crawler protection logs on the Events page.
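The idea of identifying crawlers by User-Agent can be sketched as follows. The signature list is a hypothetical sample for illustration and is not the feature library WAF uses.

```python
import re

# Hypothetical signatures; an empty User-Agent is also treated as suspicious.
CRAWLER_UA_PATTERNS = [r"python-requests", r"scrapy", r"curl/", r"^$"]
_CRAWLER_RE = re.compile("|".join(CRAWLER_UA_PATTERNS), re.IGNORECASE)


def classify_user_agent(user_agent: str) -> str:
    """Return "block" if the User-Agent matches a known crawler signature."""
    return "block" if _CRAWLER_RE.search(user_agent or "") else "allow"
```

Because a client can set any User-Agent value it likes, this check alone is easy to evade, which is why it is combined with browser validity checks and rate limiting in the following steps.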

Step 4: Enable Anti-Crawler Protection to Verify Browser Validity

If you enable anti-crawler protection, WAF dynamically analyzes website service models and accurately identifies crawler behavior based on data risk control and bot identification approaches.

- Click the Anti-Crawler configuration area and toggle it on.

- On the Feature Library page, enable Scanner and set other parameters based on your service requirements.

Figure 7 Feature Library

- Select the JavaScript tab and change Status if needed.

JavaScript anti-crawler is disabled by default. To enable it, click the Status toggle and then click OK in the displayed dialog box.

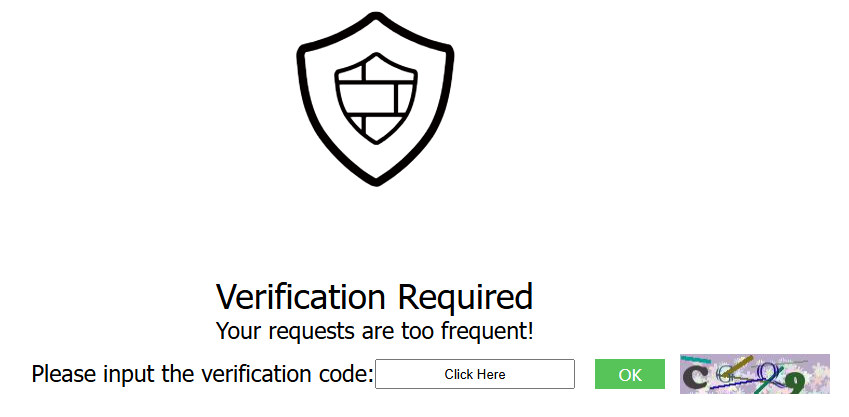

Protective Action: Block or Log only. You can also select Verification code. In this case, if the JavaScript challenge fails, the visitor is required to enter a verification code. As long as the visitor provides a valid verification code, their requests are not restricted.

- Cookies must be enabled and JavaScript supported by any browser used to access a website protected by anti-crawler protection rules.

- If your service is connected to CDN, exercise caution when using the JS anti-crawler function.

CDN caching may impact JS anti-crawler performance and page accessibility.

- Configure a JavaScript-based anti-crawler rule by referring to Table 3.

Two protection modes are provided: Protect all requests and Protect specified requests.

- To protect all requests except requests that hit a specified rule

- To protect a specified request only

Set Protection Mode to Protect specified requests, click Add Rule, configure the request rule, and click OK.

Figure 9 Add Rule

Table 3 Parameters of a JavaScript-based anti-crawler protection rule

| Parameter | Description | Example Value |
|---|---|---|
| Rule Name | Name of the rule | waf |
| Rule Description | A brief description of the rule. This parameter is optional. | - |
| Effective Date | Time the rule takes effect. | Immediate |
| Condition List | Parameters for configuring a condition are as follows:<br>- Field: Select the field you want to protect from the drop-down list. Currently, only Path and User Agent are included.<br>- Subfield<br>- Logic: Select a logical relationship from the drop-down list.<br>NOTE: If you set Logic to Include any value, Exclude any value, Equal to any value, Not equal to any value, Prefix is any value, Prefix is not any of them, Suffix is any value, or Suffix is not any of them, you need to select a reference table.<br>- Content: Enter or select the content that matches the condition.<br>- Case-Sensitive: This parameter can be configured if Path is selected for Field. If you enable this, the system matches the case-sensitive path. It helps the system accurately identify and handle various crawler requests, improving the accuracy and effectiveness of anti-crawler policies. | Path Include /admin |
| Priority | Rule priority. If you have added multiple rules, rules are matched by priority. The smaller the value you set, the higher the priority. | 5 |

If you enable anti-crawler, web visitors can only access web pages through a browser.
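The rule parameters in Table 3 can be pictured with a minimal matcher. This is a sketch under stated assumptions, not WAF's rule engine: the `Rule` class and the request dictionary are hypothetical, only the Include logic is modeled, and case sensitivity is applied to every field for simplicity, although the console offers it only for Path.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Rule:
    name: str
    field: str                 # "path" or "user_agent"
    content: str               # substring to look for (Include logic)
    priority: int              # smaller value means higher priority
    case_sensitive: bool = False


def rule_matches(rule: Rule, request: Dict[str, str]) -> bool:
    """Check whether the request field includes the rule content."""
    value = request.get(rule.field, "")
    content = rule.content
    if not rule.case_sensitive:
        value, content = value.lower(), content.lower()
    return content in value


def first_match(rules: List[Rule], request: Dict[str, str]) -> Optional[Rule]:
    """Return the highest-priority rule that matches, or None."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule_matches(rule, request):
            return rule
    return None
```

With the example values from Table 3 (Path Include /admin, priority 5), a request for `/admin/login` matches the rule. If a second matching rule has priority 1, that rule is returned first.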

Step 5: Configure CC Attack Protection to Limit Access Frequency

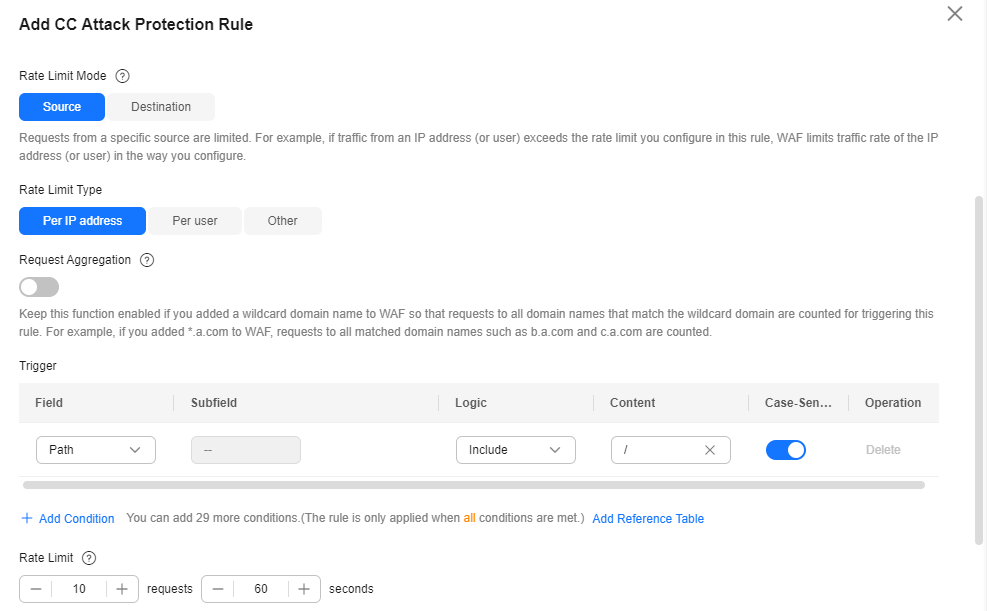

A CC attack protection rule uses a specific IP address, cookie, or referer to limit the access to a specific path (URL), mitigating the impact of CC attacks on web services.

- Ensure that the Status of CC Attack Protection is enabled.

Figure 10 CC Attack Protection configuration area

- In the upper left corner above the CC Attack Protection rule list, click Add Rule. The following example adds an IP address-based rate limiting rule that uses human-machine verification, as shown in Figure 11.

If the number of access requests exceeds the configured rate limit, the visitors are required to enter a verification code to continue the access.
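The behavior described above (rate limiting per IP address, with a verification code required once the limit is exceeded) can be sketched with a sliding-window counter. This is an illustrative model only. The class name, the window algorithm, and the return values are assumptions, and WAF's internal counting method may differ.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class IpRateLimiter:
    """Allow at most `limit` requests per IP address within `window_seconds`."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window = window_seconds
        self._hits = defaultdict(deque)  # IP address -> timestamps of allowed requests

    def check(self, ip: str, now: Optional[float] = None) -> str:
        now = time.monotonic() if now is None else now
        hits = self._hits[ip]
        # Drop timestamps that have left the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            # Over the limit: require a verification code instead of serving the page.
            return "verify"
        hits.append(now)
        return "allow"
```

For example, with `IpRateLimiter(limit=2, window_seconds=60)`, the third request from the same IP address within 60 seconds returns `"verify"`, while requests from other IP addresses are unaffected.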
