Auto Scaling Based on ELB Monitoring Metrics

Background

By default, Kubernetes scales a workload based on resource usage metrics such as CPU and memory. However, this mechanism cannot reflect real-time demand when traffic bursts arrive, because collected CPU and memory usage lags behind the actual traffic arriving at the load balancer. For services that require fast auto scaling (such as flash sales and social media), scaling based on CPU and memory may not happen in time to meet actual demand. In such cases, auto scaling based on ELB QPS data responds to service requirements more promptly.

Solution

This section describes an auto scaling solution based on ELB monitoring metrics. Compared with CPU/memory usage-based auto scaling, auto scaling based on ELB QPS data is more targeted and timely.

The key to this solution is to obtain ELB metric data and make it available to Prometheus, convert the data in Prometheus into metrics that the HPA can recognize, and then perform auto scaling based on the converted metrics.

The implementation scheme is as follows:

- Develop a Prometheus exporter to obtain ELB metric data, convert the data into the format required by Prometheus, and expose it for Prometheus to collect. This section uses cloudeye-exporter as an example.

- Use the Prometheus adapter to convert the Prometheus data into the Kubernetes custom metrics API for the HPA controller to use.

- Set an HPA rule to use ELB monitoring data as auto scaling metrics.

Other metrics can be collected in a similar way.

Prerequisites

- You are familiar with Prometheus.

- You have installed the Cloud Native Cluster Monitoring add-on (kube-prometheus-stack) of version 3.10.1 or later in the cluster.

Local Data Storage must be enabled in the add-on's Data Storage Configuration.

Building an Exporter Image

This section uses cloudeye-exporter to monitor load balancer metrics. To develop an exporter, see Appendix: Developing an Exporter.

- Log in to a VM that can access the Internet and has Docker installed and write a Dockerfile.

vi Dockerfile

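The content is as follows:

FROM ubuntu:22.04

RUN apt-get update \
 && apt-get install -y wget ca-certificates \
 && update-ca-certificates \
 && wget https://github.com/huaweicloud/cloudeye-exporter/releases/download/v2.0.16/cloudeye-exporter-v2.0.16.tar.gz \
 && tar -xzvf cloudeye-exporter-v2.0.16.tar.gz
CMD ["./cloudeye-exporter", "-config=/tmp/clouds.yml"]

The base image in the FROM line is an example. Any Debian- or Ubuntu-based image that provides apt-get works. In the exec form of CMD, the executable and each argument must be separate array elements. cloudeye-exporter v2.0.16 is used as an example. For more versions, see Releases.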

- Build an image. The image name is cloudeye-exporter and the image tag is 2.0.16.

docker build --network host . -t cloudeye-exporter:2.0.16

- Push the image to SWR.

- (Optional) Log in to the SWR console, choose Organizations in the navigation pane, and click Create Organization in the upper right corner.

Skip this step if you already have an organization.

- In the navigation pane, choose My Images and then click Upload Through Client. On the page displayed, click Generate a temporary login command and click the copy icon to copy the command.

- Run the login command copied in the previous step on the cluster node. If the login is successful, the message "Login Succeeded" is displayed.

- Tag the cloudeye-exporter image.

docker tag {Image name 1:Tag 1} {Image repository address}/{Organization name}/{Image name 2:Tag 2}

- {Image name 1:Tag 1}: name and tag of the local image to be uploaded.

- {Image repository address}: the domain name at the end of the temporary login command generated on the My Images page. It can also be obtained on the SWR console.

- {Organization name}: name of the organization you created (or already have) on the SWR console.

- {Image name 2:Tag 2}: desired image name and tag to be displayed on the SWR console.

The following is an example:

docker tag cloudeye-exporter:2.0.16 swr.ap-southeast-3.myhuaweicloud.com/container/cloudeye-exporter:2.0.16

- Push the image to the image repository.

docker push {Image repository address}/{Organization name}/{Image name 2:Tag 2}

The following is an example:

docker push swr.ap-southeast-3.myhuaweicloud.com/container/cloudeye-exporter:2.0.16

The following information will be returned upon a successful push:

...
030***: Pushed
2.0.16: digest: sha256:eb7e3bbd*** size: **

To view the pushed image, go to the SWR console and refresh the My Images page.


Deploying the Exporter

Prometheus can dynamically monitor pods if you add Prometheus annotations to the pods (the default path is /metrics). This section uses cloudeye-exporter as an example.

Common annotations in Prometheus are as follows:

- prometheus.io/scrape: If the value is true, the pod will be monitored.

- prometheus.io/path: URL from which the data is collected. The default value is /metrics.

- prometheus.io/port: port number of the endpoint to collect data from.

- prometheus.io/scheme: Defaults to http. If HTTPS is configured for security purposes, change the value to https.
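The following Python sketch shows how a scrape job that honors these annotations combines them into the URL it scrapes. The prometheus.io/* keys are a convention. They take effect through relabeling rules in the scrape configuration and are not built-in Prometheus behavior. The function name and the sample address are hypothetical, and the sketch handles only IPv4 addresses.

```python
from __future__ import annotations


def scrape_url(address: str, annotations: dict[str, str]) -> str | None:
    """Return the URL a scrape job following the prometheus.io/* convention
    would use for this target, or None if the target has not opted in.

    Sketch only: assumes an IPv4 "host" or "host:port" address.
    """
    # Only targets annotated with prometheus.io/scrape: "true" are monitored.
    if annotations.get("prometheus.io/scrape") != "true":
        return None
    scheme = annotations.get("prometheus.io/scheme", "http")  # default: http
    path = annotations.get("prometheus.io/path", "/metrics")  # default: /metrics
    port = annotations.get("prometheus.io/port")
    if port:
        # Replace whatever port the address carries with the annotated port.
        host = address.split(":", 1)[0]
        address = f"{host}:{port}"
    return f"{scheme}://{address}{path}"


annotations = {
    "prometheus.io/scrape": "true",
    "prometheus.io/port": "8087",
    "prometheus.io/path": "/metrics",
    "prometheus.io/scheme": "http",
}
print(scrape_url("10.0.0.12:80", annotations))  # http://10.0.0.12:8087/metrics
```

If a target has no port annotation, the sketch keeps the address unchanged. If a target does not set prometheus.io/scrape to "true", it is skipped.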

- Use kubectl to connect to the cluster.

- Create a secret, which will be used by cloudeye-exporter for authentication.

- Create the clouds.yml file with the following content:

global:
  prefix: "huaweicloud"
  scrape_batch_size: 300
auth:
  auth_url: "https://iam.ap-southeast-3.myhuaweicloud.com/v3"
  project_name: "ap-southeast-3"
  access_key: "********"
  secret_key: "***********"
  region: "ap-southeast-3"

Parameters in the preceding content are described as follows:

- auth_url: indicates the IAM endpoint, which can be obtained from Regions and Endpoints.

- project_name: indicates the project name. On the My Credential page, view the project name and project ID in the Projects area.

- access_key and secret_key: You can obtain them from Access Keys.

- region: indicates the region name, which must correspond to the project in project_name.

- Obtain the Base64-encoded string of the preceding file.

cat clouds.yml | base64 -w0 ;echo

Information similar to the following is displayed:

ICAga*****

- Create the clouds-secret.yaml file with the following content:

apiVersion: v1
kind: Secret
data:
  clouds.yml: ICAga*****  # Replace it with the Base64-encoded string.
metadata:
  annotations:
    description: ''
  name: 'clouds.yml'
  namespace: default  # Namespace of the secret, which must be the same as the Deployment's namespace.
  labels: {}
type: Opaque

- Create a secret.

kubectl apply -f clouds-secret.yaml


- Create the cloudeye-exporter-deployment.yaml file with the following content:

kind: Deployment
apiVersion: apps/v1
metadata:
  name: cloudeye-exporter
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cloudeye-exporter
      version: v1
  template:
    metadata:
      labels:
        app: cloudeye-exporter
        version: v1
    spec:
      volumes:
        - name: vol-166055064743016314
          secret:
            secretName: clouds.yml
            defaultMode: 420
      containers:
        - name: container-1
          image: swr.ap-southeast-3.myhuaweicloud.com/container/cloudeye-exporter:2.0.16  # exporter image address built above
          command:
            - ./cloudeye-exporter  # Startup command for building the cloudeye-exporter image
            - '-config=/tmp/clouds.yml'
          resources: {}
          volumeMounts:
            - name: vol-166055064743016314
              readOnly: true
              mountPath: /tmp
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: IfNotPresent
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      dnsPolicy: ClusterFirst
      securityContext: {}
      imagePullSecrets:
        - name: default-secret
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 25%
      maxSurge: 25%
  revisionHistoryLimit: 10
  progressDeadlineSeconds: 600

Create the preceding workload.

kubectl apply -f cloudeye-exporter-deployment.yaml

- Create the cloudeye-exporter-service.yaml file.

apiVersion: v1
kind: Service
metadata:
  name: cloudeye-exporter
  namespace: default
  labels:
    app: cloudeye-exporter
    version: v1
  annotations:
    prometheus.io/port: '8087'  # Port number of the endpoint to collect data from.
    prometheus.io/scrape: 'true'  # If it is set to true, the resource is the monitoring target.
    prometheus.io/path: "/metrics"  # URL from which the data is collected. The default value is /metrics.
    prometheus.io/scheme: "http"  # The default value is http. If https is set for security purposes, you need to change it to https.
spec:
  ports:
    - name: cce-service-0
      protocol: TCP
      port: 8087
      targetPort: 8087
  selector:
    app: cloudeye-exporter
    version: v1
  type: ClusterIP

Create the preceding Service.

kubectl apply -f cloudeye-exporter-service.yaml
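You can also generate the secret manifest instead of writing it by hand. The following Python sketch applies the same Base64 encoding as `cat clouds.yml | base64 -w0` (standard alphabet, no line wrapping). It embeds the result under the clouds.yml key, matching clouds-secret.yaml above. The helper name and the sample content are hypothetical placeholders.

```python
import base64


def clouds_secret_manifest(clouds_yml: str, namespace: str = "default") -> str:
    """Return a Secret manifest (YAML text) carrying clouds.yml as data.

    Hypothetical helper that mirrors clouds-secret.yaml in this section.
    """
    # Standard Base64 on a single line, like `base64 -w0`.
    encoded = base64.b64encode(clouds_yml.encode("utf-8")).decode("ascii")
    return "\n".join([
        "apiVersion: v1",
        "kind: Secret",
        "metadata:",
        "  name: clouds.yml",
        f"  namespace: {namespace}  # must match the Deployment's namespace",
        "type: Opaque",
        "data:",
        f"  clouds.yml: {encoded}",
    ])


# Placeholder content only; a real clouds.yml also holds the auth section.
sample = 'global:\n  prefix: "huaweicloud"\n  scrape_batch_size: 300\n'
print(clouds_secret_manifest(sample))
```

As an alternative, `kubectl create secret generic clouds.yml --from-file=clouds.yml -n default` creates an equivalent secret without manual encoding, because `--from-file` uses the file name as the data key.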

Interconnecting with Prometheus

After Prometheus collects the monitoring data, the data must be converted into the Kubernetes metrics API so that the HPA controller can use it for auto scaling.

In this example, the ELB metrics associated with the workload are monitored. Therefore, the target workload must be exposed through a Service or ingress of the LoadBalancer type.

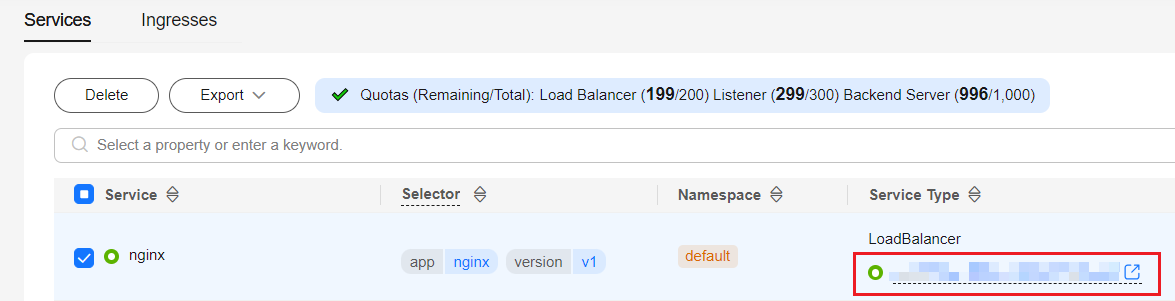

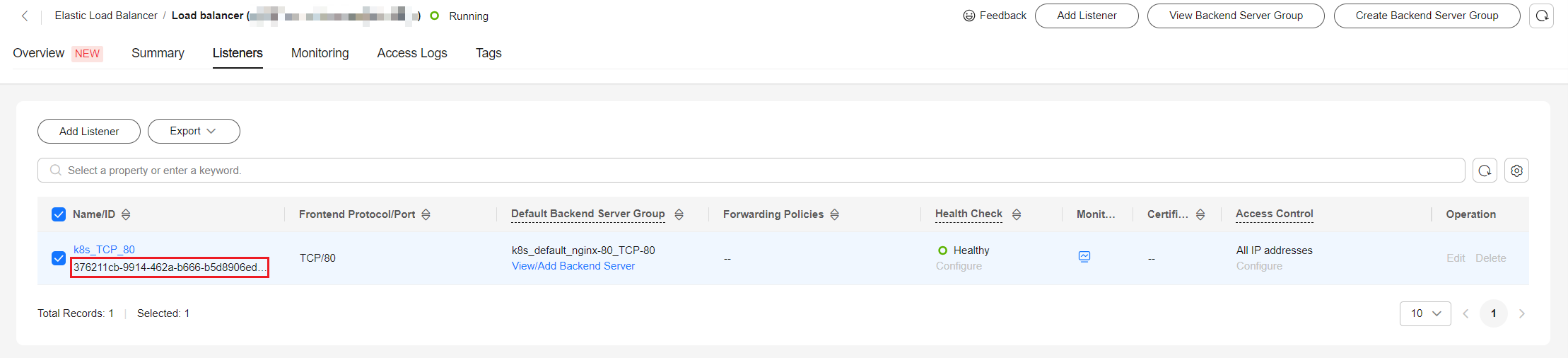

- View the access mode of the workload to be monitored and obtain the ELB listener ID.

- On the CCE cluster console, choose Networking. On the Services or Ingresses tab page, view the Service or ingress of the LoadBalancer type and click the load balancer to access the load balancer page.

- On the Listeners tab, view the listener corresponding to the workload and copy the listener ID.


- Use kubectl to connect to the cluster and add the Prometheus scrape configuration. This example collects load balancer metrics. For details about advanced usage, see Configuration.

- Create the prometheus-additional.yaml file, add the following content to the file, and save the file:

- job_name: elb_metric
  params:
    services: ['SYS.ELB']
  kubernetes_sd_configs:
    - role: endpoints
  relabel_configs:
    - action: keep
      regex: '8087'
      source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_port
    - action: replace
      regex: ([^:]+)(?::\d+)?;(\d+)
      replacement: $1:$2
      source_labels:
        - __address__
        - __meta_kubernetes_service_annotation_prometheus_io_port
      target_label: __address__
    - action: labelmap
      regex: __meta_kubernetes_service_label_(.+)
    - action: replace
      source_labels:
        - __meta_kubernetes_namespace
      target_label: kubernetes_namespace
    - action: replace
      source_labels:
        - __meta_kubernetes_service_name
      target_label: kubernetes_service

- Use the preceding configuration file to create a secret named additional-scrape-configs.

kubectl create secret generic additional-scrape-configs --from-file prometheus-additional.yaml -n monitoring --dry-run=client -o yaml | kubectl apply -f -

- Edit the persistent-user-config ConfigMap to enable AdditionalScrapeConfigs.

kubectl edit configmap persistent-user-config -n monitoring

Add --common.prom.default-additional-scrape-configs-key=prometheus-additional.yaml under operatorConfigOverride to enable AdditionalScrapeConfigs as follows:

...
data:
  lightweight-user-config.yaml: |
    customSettings:
      additionalScrapeConfigs: []
      agentExtraArgs: []
      metricsDeprecated:
        globalDeprecateMetrics: []
      nodeExporterConfigOverride: []
      operatorConfigOverride:
      - --common.prom.default-additional-scrape-configs-key=prometheus-additional.yaml
...

- Go to Prometheus to check whether the custom metrics are successfully collected.


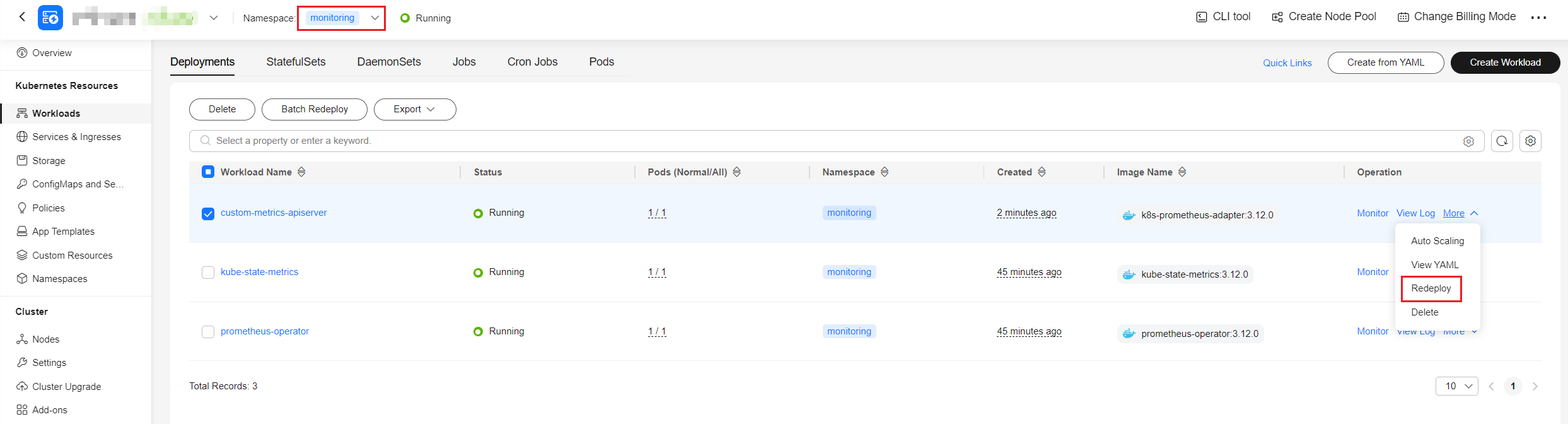

- Modify the user-adapter-config ConfigMap.

kubectl edit configmap user-adapter-config -n monitoring

Add the following content to the rules field, replace the value of lbaas_listener_id with the listener ID obtained in the first step, and save the file.

apiVersion: v1
data:
  config.yaml: |-
    rules:
    - metricsQuery: sum(<<.Series>>{<<.LabelMatchers>>,lbaas_listener_id="*****"}) by (<<.GroupBy>>)
      resources:
        overrides:
          kubernetes_namespace:
            resource: namespace
          kubernetes_service:
            resource: service
      name:
        matches: huaweicloud_sys_elb_(.*)
        as: "elb01_${1}"
      seriesQuery: '{lbaas_listener_id="*****"}'
...

- Redeploy the custom-metrics-apiserver workload in the monitoring namespace.
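The following Python sketch reproduces the `replace` rule on `__address__` in prometheus-additional.yaml, together with the `keep` rule before it. Prometheus joins the values of `source_labels` with `;` (the default separator) and matches the regex anchored at both ends. It then writes the expanded replacement into the target label. The `keep` rule drops any target whose annotated port is not exactly 8087. This illustrates the relabeling semantics and is not Prometheus code. The function names and sample addresses are hypothetical.

```python
import re

# Regexes copied from prometheus-additional.yaml. Prometheus anchors relabel
# regexes at both ends, so re.fullmatch is used here.
KEEP_PORT = re.compile(r"8087")
ADDRESS_RE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def keep_target(port_annotation: str) -> bool:
    """action: keep -> the target survives only if the annotated port matches."""
    return KEEP_PORT.fullmatch(port_annotation) is not None


def rewrite_address(address: str, port_annotation: str) -> str:
    """action: replace on __address__ with replacement $1:$2."""
    joined = f"{address};{port_annotation}"  # source_labels joined with ';'
    m = ADDRESS_RE.fullmatch(joined)
    if m is None:
        return address  # no match: the target label is left unchanged
    return f"{m.group(1)}:{m.group(2)}"


print(keep_target("8087"), rewrite_address("172.16.0.25:8080", "8087"))
# True 172.16.0.25:8087
```

The net effect is that each endpoint discovered for the cloudeye-exporter Service is scraped on the annotated port 8087, whatever port the endpoint address originally carried.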

Creating an HPA Policy

After the data reported by the exporter to Prometheus is converted into the Kubernetes metric API by using the Prometheus adapter, you can create an HPA policy for auto scaling.
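The rule added to user-adapter-config performs two transformations. First, `name.matches`/`name.as` renames each matching series. For example, `huaweicloud_sys_elb_m7_in_Bps` becomes `elb01_m7_in_Bps`, the name used in the HPA policy. Second, `metricsQuery` is a template that the adapter fills in when a metric value is requested. The following Python sketch imitates both steps with plain string substitution. It is not the Prometheus adapter's implementation (the adapter uses Go regex expansion and templates). The label matchers and group-by values passed in are hypothetical examples.

```python
from __future__ import annotations

import re

# Values copied from the user-adapter-config rule in this section.
NAME_MATCHES = re.compile(r"huaweicloud_sys_elb_(.*)")
NAME_AS = "elb01_${1}"
METRICS_QUERY = (
    'sum(<<.Series>>{<<.LabelMatchers>>,lbaas_listener_id="*****"}) by (<<.GroupBy>>)'
)


def exposed_name(series_name: str) -> str | None:
    """Apply name.matches / name.as to a series name (only ${1} is handled)."""
    m = NAME_MATCHES.search(series_name)
    if m is None:
        return None  # series not covered by this rule
    return NAME_AS.replace("${1}", m.group(1))


def render_query(series: str, label_matchers: str, group_by: str) -> str:
    """Fill in the metricsQuery placeholders by plain string substitution."""
    return (
        METRICS_QUERY.replace("<<.Series>>", series)
        .replace("<<.LabelMatchers>>", label_matchers)
        .replace("<<.GroupBy>>", group_by)
    )


print(exposed_name("huaweicloud_sys_elb_m7_in_Bps"))  # elb01_m7_in_Bps
print(render_query(
    "huaweicloud_sys_elb_m7_in_Bps",
    'kubernetes_namespace="default",kubernetes_service="cloudeye-exporter"',
    "kubernetes_service",
))
```

The `resources.overrides` section is what lets the adapter translate the `kubernetes_namespace` and `kubernetes_service` labels into the namespace and Service that the HPA's `describedObject` refers to.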

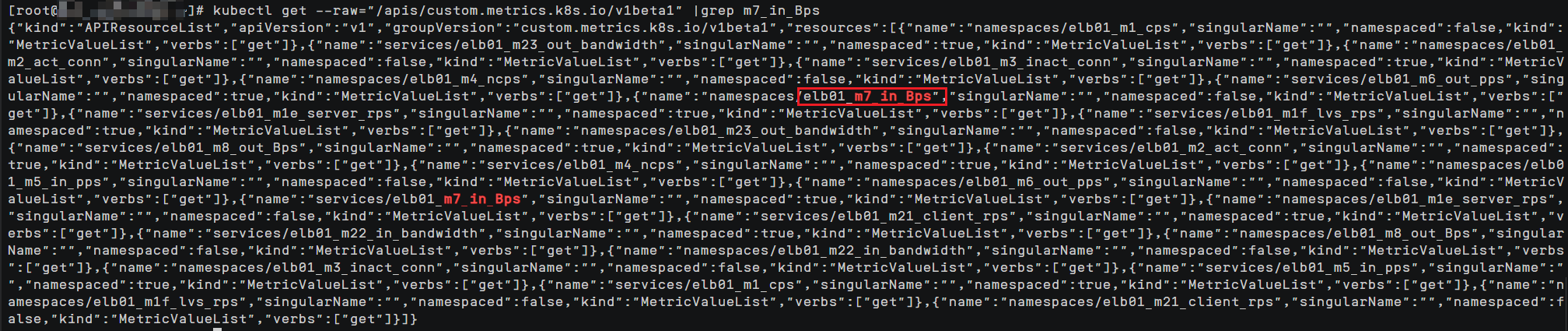

- Obtain the name of a custom metric in the cluster. m7_in_Bps (inbound traffic rate) is used as an example. For details about other ELB metrics, see ELB Listener Metrics.

kubectl get --raw="/apis/custom.metrics.k8s.io/v1beta1" |grep m7_in_Bps

Information similar to that in the figure below is displayed.

- Create an HPA policy that uses the inbound traffic of the load balancer to trigger scale-out. When the value of m7_in_Bps (inbound rate) exceeds 1000 byte/s, the nginx Deployment is scaled out.

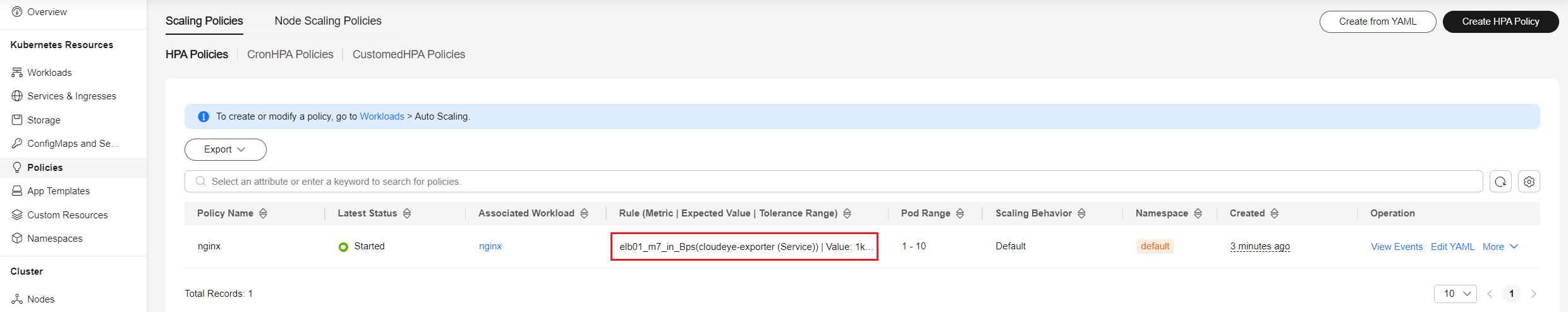

apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  minReplicas: 1
  maxReplicas: 10
  metrics:
    - type: Object
      object:
        metric:
          name: elb01_m7_in_Bps  # Name of the custom monitoring metric obtained in the previous step
        describedObject:
          apiVersion: v1
          kind: Service
          name: cloudeye-exporter
        target:
          type: Value
          value: 1000

Figure 2 Created HPA Policy

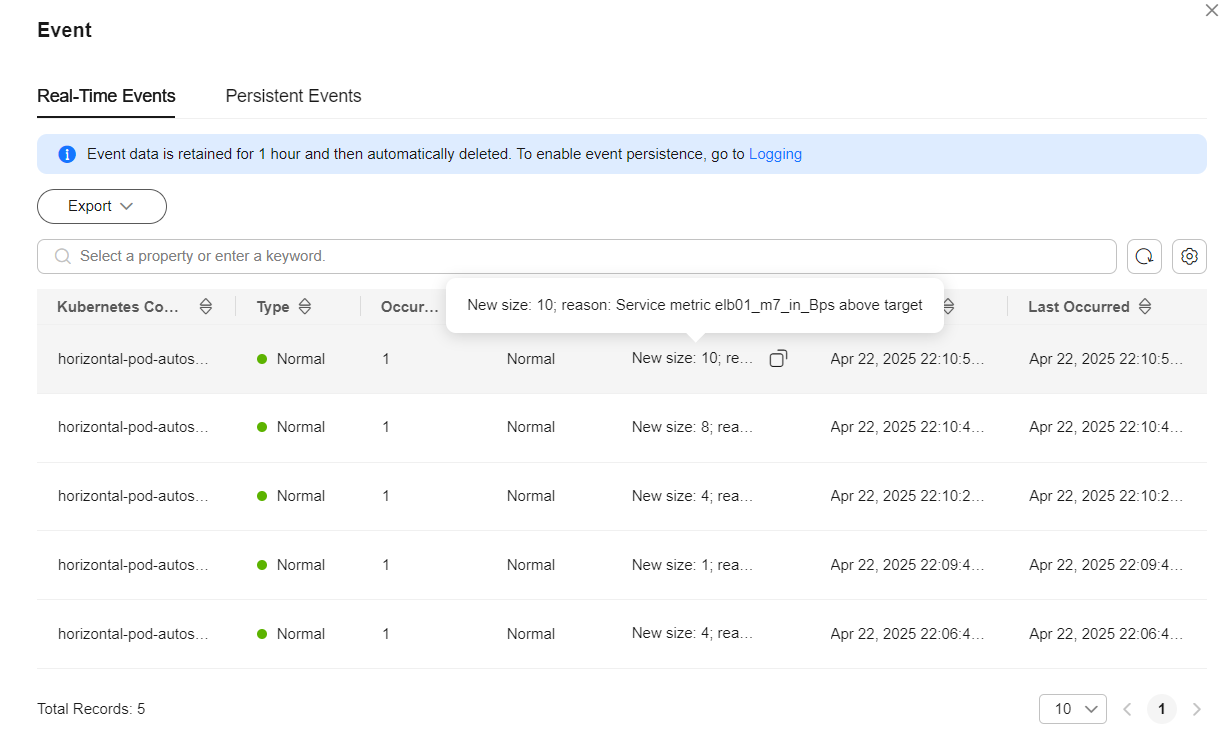

- After the HPA policy is created, perform a stress test on the workload (accessing the pods through the load balancer). The HPA controller then determines whether scaling is required based on the configured target value.

In the Events dialog box, view the scaling records in the Kubernetes Event column.

Figure 3 Scaling events
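To estimate how the policy above reacts to load, the following Python sketch applies the replica formula documented for the Kubernetes HPA: desiredReplicas = ceil(currentReplicas × currentValue / targetValue). The sketch keeps the current size when the ratio is within the default 10% tolerance. It then clamps the result to minReplicas/maxReplicas. This is a simplification, because the real controller also accounts for pod readiness, stabilization windows, and scaling policies. The input values are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_value: float,
                     target_value: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA calculation for an Object metric with target type Value.

    Ignores pod readiness, stabilization windows, and scaling policies.
    """
    ratio = current_value / target_value
    if abs(1.0 - ratio) <= tolerance:
        desired = current_replicas  # close enough to the target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    # Keep the result within minReplicas/maxReplicas from the HPA policy.
    return max(min_replicas, min(max_replicas, desired))


# Target value 1000 (byte/s) as in the HPA policy above; inputs are hypothetical.
print(desired_replicas(2, 3000, 1000))   # 6
print(desired_replicas(1, 1050, 1000))   # 1 (within the 10% tolerance)
print(desired_replicas(4, 30000, 1000))  # 10 (capped by maxReplicas)
```

Because the target type is Value, the whole metric value is compared with the target. It is not divided per pod, so the scale-out factor follows directly from how far the inbound rate exceeds 1000 byte/s.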

ELB Listener Metrics

The following table lists the ELB listener metrics that can be collected using the method described in this section.

| Metric | Name | Unit | Description |
|---|---|---|---|
| m1_cps | Concurrent Connections | Count | Number of concurrent connections processed by a load balancer. |
| m1e_server_rps | Reset Packets from Backend Servers | Count/Second | Number of reset packets sent from backend servers to clients. These reset packets are generated by the backend servers and then forwarded by load balancers. |
| m1f_lvs_rps | Reset Packets from Load Balancers | Count/Second | Number of reset packets sent from load balancers. |
| m21_client_rps | Reset Packets from Clients | Count/Second | Number of reset packets sent from clients to backend servers. These reset packets are generated by the clients and then forwarded by load balancers. |
| m22_in_bandwidth | Inbound Bandwidth | bit/s | Inbound bandwidth of a load balancer. |
| m23_out_bandwidth | Outbound Bandwidth | bit/s | Outbound bandwidth of a load balancer. |
| m2_act_conn | Active Connections | Count | Number of current active connections. |
| m3_inact_conn | Inactive Connections | Count | Number of current inactive connections. |
| m4_ncps | New Connections | Count | Number of current new connections. |
| m5_in_pps | Incoming Packets | Count | Number of packets sent to a load balancer. |
| m6_out_pps | Outgoing Packets | Count | Number of packets sent from a load balancer. |
| m7_in_Bps | Inbound Rate | byte/s | Number of incoming bytes per second on a load balancer. |
| m8_out_Bps | Outbound Rate | byte/s | Number of outgoing bytes per second on a load balancer. |

Appendix: Developing an Exporter

Prometheus periodically calls the /metrics API of the exporter to obtain metric data. Applications only need to report monitoring data through /metrics. You can select a Prometheus client in a desired language and integrate it into applications to implement the /metrics API. For details about the client, see Client libraries. For details about how to write an exporter, see Writing exporters.
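The following standard-library Python sketch shows what a /metrics response body contains. It renders ELB samples in the Prometheus text exposition format: HELP and TYPE lines, followed by `name{labels} value` lines. The metric name follows the `huaweicloud_sys_elb_<metric>` pattern that the adapter rule in this section matches. `lbaas_listener_id` is the label that rule filters on. The other label names and all values are assumptions for illustration, not cloudeye-exporter's actual label set. A production exporter would normally use one of the client libraries instead of formatting text by hand.

```python
from __future__ import annotations


def escape_label_value(value: str) -> str:
    """Escape backslashes, double quotes, and newlines in a label value."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_gauge(name: str, help_text: str,
                 samples: list[tuple[dict[str, str], float]]) -> str:
    """Render one gauge family in the Prometheus text exposition format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        # Sorting keeps the output stable; Prometheus does not require an order.
        label_str = ",".join(
            f'{key}="{escape_label_value(val)}"' for key, val in sorted(labels.items())
        )
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical sample: label names other than lbaas_listener_id are illustrative.
body = render_gauge(
    "huaweicloud_sys_elb_m7_in_Bps",
    "Inbound rate of the load balancer (byte/s)",
    [({"lbaas_listener_id": "listener-0001", "namespace": "default",
       "service_name": "nginx", "service_uid": "uid-0001"}, 1200.0)],
)
print(body, end="")
```

Serving this text with HTTP status 200 at /metrics is enough for Prometheus to scrape it. A client library additionally handles registration, content negotiation, and metric types other than gauges.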

The monitoring data must be in the format that Prometheus supports. Each data record provides the ELB ID, listener ID, namespace where the Service is located, Service name, and Service UID as labels, as shown in the following figure.

To obtain the preceding data, perform the following operations:

- Obtain all Services.

The annotations field in the returned information contains the ELB associated with the Service.

- kubernetes.io/elb.id

- kubernetes.io/elb.class

- Use APIs in Querying Listeners to get the listener ID based on the load balancer ID obtained in the previous step.

- Obtain the ELB monitoring data.

The ELB monitoring data is obtained using the CES APIs described in Querying Monitoring Data of Multiple Metrics. For details about ELB monitoring metrics, see Monitoring Metrics. Examples:

- m1_cps: number of concurrent connections

- m5_in_pps: number of incoming data packets

- m6_out_pps: number of outgoing data packets

- m7_in_Bps: incoming rate

- m8_out_Bps: outgoing rate

- Aggregate data in the format that Prometheus supports and expose the data through the /metrics API.

Prometheus client libraries make it straightforward to implement the /metrics API. For details, see Client libraries. For details about how to write an exporter, see Writing exporters.
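The following Python function sketches the first operation above (obtaining all Services and reading their ELB annotations). It parses the JSON returned by `kubectl get services -A -o json` and keeps the Services annotated with `kubernetes.io/elb.id`. For each one, it collects the fields this section lists as labels: namespace, Service name, and Service UID. The function name and output field names are hypothetical. The listener and CES queries are not included, because they go through the cloud APIs referenced above, whose request formats are not reproduced here.

```python
from __future__ import annotations

import json


def elb_services(service_list_json: str) -> list[dict[str, str]]:
    """Return the Services that carry a kubernetes.io/elb.id annotation,
    with the fields later used as metric labels."""
    found = []
    for item in json.loads(service_list_json).get("items", []):
        meta = item.get("metadata", {})
        annotations = meta.get("annotations") or {}
        elb_id = annotations.get("kubernetes.io/elb.id")
        if not elb_id:
            continue  # no associated load balancer annotation
        found.append({
            "elb_id": elb_id,
            "elb_class": annotations.get("kubernetes.io/elb.class", ""),
            "namespace": meta.get("namespace", ""),
            "service_name": meta.get("name", ""),
            "service_uid": meta.get("uid", ""),
        })
    return found


# Hypothetical input shaped like `kubectl get services -A -o json` output.
sample = json.dumps({"items": [
    {"metadata": {"name": "nginx", "namespace": "default", "uid": "uid-0001",
                  "annotations": {"kubernetes.io/elb.id": "elb-0001",
                                  "kubernetes.io/elb.class": "performance"}}},
    {"metadata": {"name": "internal", "namespace": "default", "uid": "uid-0002"}},
]})
print(elb_services(sample))
```

Each returned ELB ID is the input for the listener query in the next step, and the collected fields become the labels attached to the exported metrics.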
