Copied.

Getting Started with HTAP Standard Edition

You can let an application directly connect to an HTAP instance to enable complex OLAP queries.

Operation Process

Step 1: Buy a Standard HTAP Instance

Step 2: Synchronize TaurusDB Instance Data to the Standard HTAP Instance

Prerequisites

- Parameters have been configured for a TaurusDB instance according to the following table.

Table 1 Parameter description Parameter

Value

How to Modify

Description

binlog_expire_logs_seconds

86400

It is recommended that the binlog retention period be greater than one day. 86,400s = 60 (seconds) x 60 (minutes) x 24 (hours). This prevents incremental replication failures caused by a short binlog retention period.

rds_global_sql_log_bin

ON

To use this parameter, ensure that the kernel version of your TaurusDB instance is 2.0.45.230900 or later.

For details about how to check the kernel version, see How Can I Check the Version of a TaurusDB Instance?

- Databases and tables have been created for the TaurusDB instance.

Procedure

Step 1: Buy a Standard HTAP Instance

- Log in to the management console.

- Click

in the upper left corner and select a region and project.

in the upper left corner and select a region and project. - Click

in the upper left corner of the page and choose Databases > TaurusDB.

in the upper left corner of the page and choose Databases > TaurusDB. - On the Instances page, locate a TaurusDB instance and click its name to access the Basic Information page.

- In the navigation pane, choose HTAP Analysis. On the displayed page, click Create HTAP Instance.

- Set parameters for the standard HTAP instance.

- Basic configuration

Figure 1 Basic configuration

Table 2 Basic configuration Parameter

Description

Billing Mode

Select Pay-per-use or Yearly/Monthly.

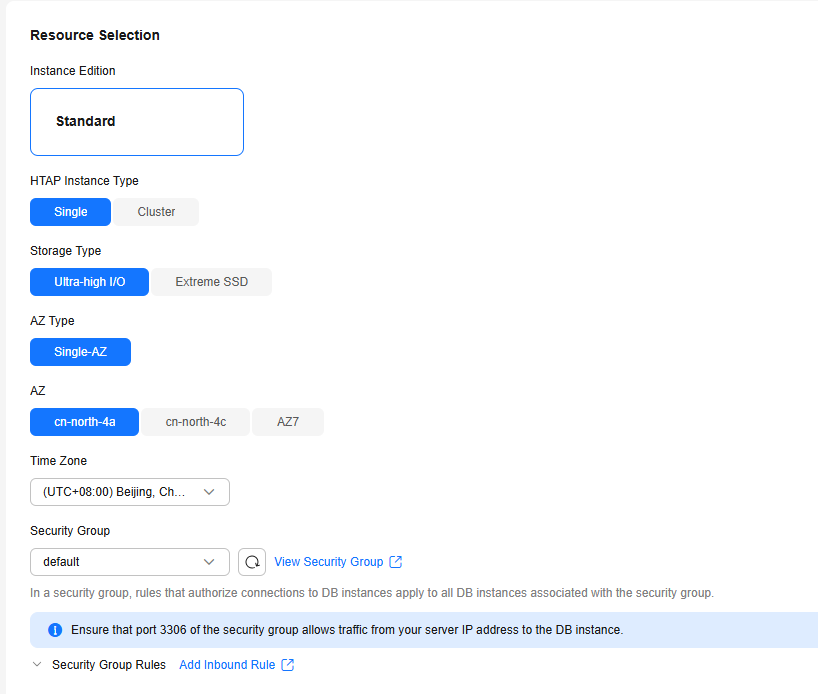

- Resource selection

Figure 2 Resource selection

Table 3 Resource selection Parameter

Description

Instance Edition

Only Standard is available. This edition is developed based on open-source StarRocks.

HTAP Instance Type

Select Single or Cluster.

- Single: There is only one FE node and one BE node. It is used only for function experience and testing and does not ensure SLA.

- Cluster: There are at least three FE or BE nodes and at most 10 FE or BE nodes.

Storage Type

Select Extreme SSD or Ultra-high I/O.

- Extreme SSD: uses 25GE network and RDMA technologies to provide you with up to 1,000 MB/s throughput per disk and sub-millisecond latency.

- Ultra-high I/O: uses disk striping to balance I/O loads among multiple disks to improve read/write bandwidth. The maximum throughput is 1.7 GB/s.

AZ Type

Only Single-AZ is available.

AZ

Select an AZ as needed.

Time Zone

Select a time zone for your instance based on the region hosting your instance. The time zone is selected during instance creation and cannot be changed after the instance is created.

Security Group

The default value is the security group of the TaurusDB instance. You are advised to keep the security group consistent with that of the TaurusDB instance.

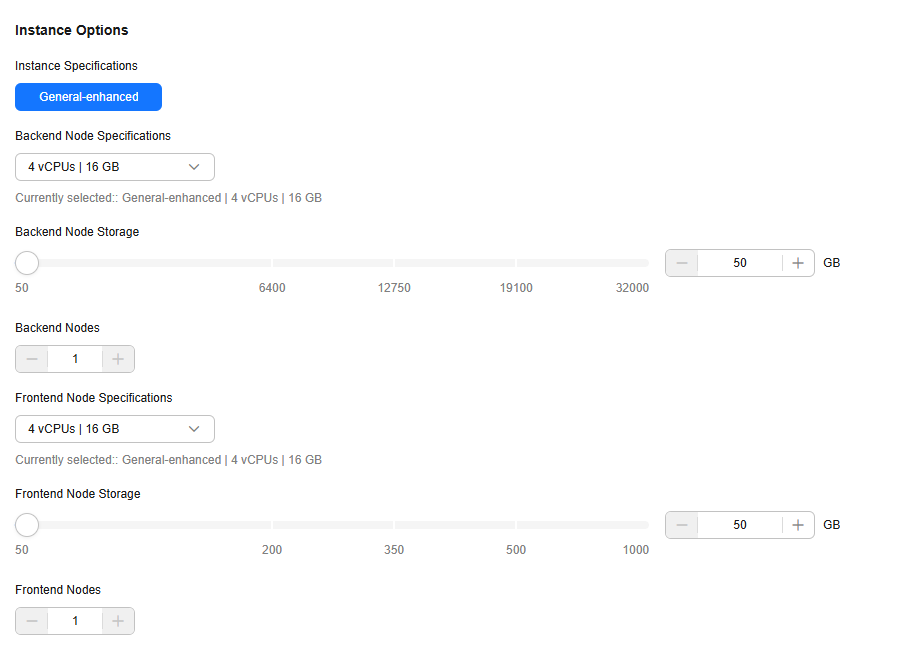

- Instance options

Figure 3 Instance options

Table 4 Instance options Parameter

Description

Instance Specifications

Only general-enhanced is available.

Backend Node Specifications

Select the BE node specifications.

The BE nodes are for data storage and SQL computing.

Backend Node Storage (GB)

Select the storage for BE nodes. The default storage is 50 GB and can be expanded to up to 32,000 GB.

Backend Nodes

- A single-node instance has only one BE node.

- A cluster instance has 3 to 10 BE nodes. You can apply for a maximum of 10 nodes at once.

Frontend Node Specifications

Select the FE node specifications.

The FE nodes manage metadata, manage client connections, and plan and schedule queries.

Frontend Node Storage (GB)

Select the storage for FE nodes. The default storage is 50 GB and can be expanded to up to 1,000 GB.

Frontend Nodes

- A single-node instance has only one FE node.

- A cluster instance has 3 to 10 FE nodes. You can apply for a maximum of 10 nodes at once.

- Instance configuration

Figure 4 Instance configuration

Table 5 Instance configuration Parameter

Description

DB Instance Name

The instance name must start with a letter and consist of 4 to 64 characters. Only letters (case-sensitive), digits, hyphens (-), and underscores (_) are allowed.

Administrator Password

The password must consist of 8 to 32 characters and contain at least three types of the following characters: uppercase letters, lowercase letters, digits, and special characters (~!@#%^*-_=+?,()&$|.). Enter a strong password and periodically change it to improve security and defend against threats such as brute force cracking attempts.

Confirm Password

Enter the administrator password again.

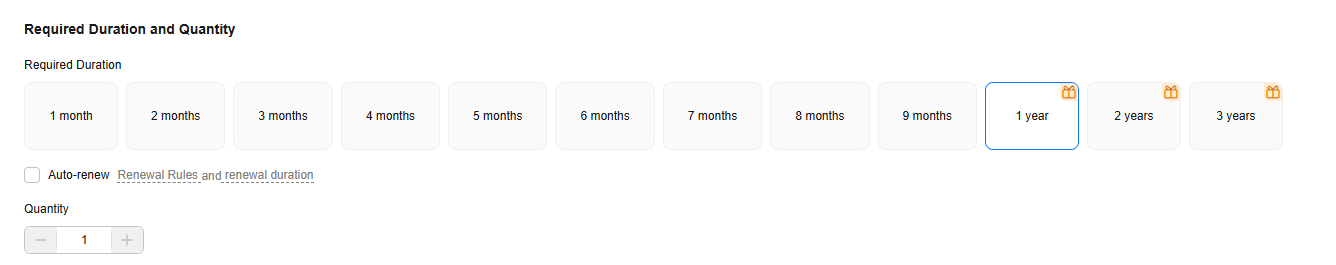

- Required duration and quantity

Figure 5 Required duration and quantity

Table 6 Required duration and quantity Parameter

Description

Required Duration

This parameter is only available for yearly/monthly instances. The system will automatically calculate the fee based on the selected required duration. The longer the required duration is, the larger discount you will enjoy.

Auto-renew

- By default, this option is not selected.

- If you select this option, the auto-renewal cycle is determined by the selected required duration.

Quantity

You can only buy one instance at a time.

- Basic configuration

- Click Next in the lower right corner.

- Confirm the information and click Submit.

To modify the instance information, click Previous.

- Confirm the order.

- To modify the instance information, click Previous.

- If you do not need to modify your settings, click Pay Now. On the order page, complete the payment.

- On the HTAP instance list page, view and manage the HTAP instance.

Step 2: Synchronize TaurusDB Instance Data to the Standard HTAP Instance

- On the Instances page, locate a TaurusDB instance and click its name to access the Basic Information page.

- In the navigation pane, choose HTAP Analysis.

- Click the name of an HTAP instance to access the Basic Information page.

- In the navigation pane, choose Data Synchronization. On the displayed page, click Create Synchronization Task.

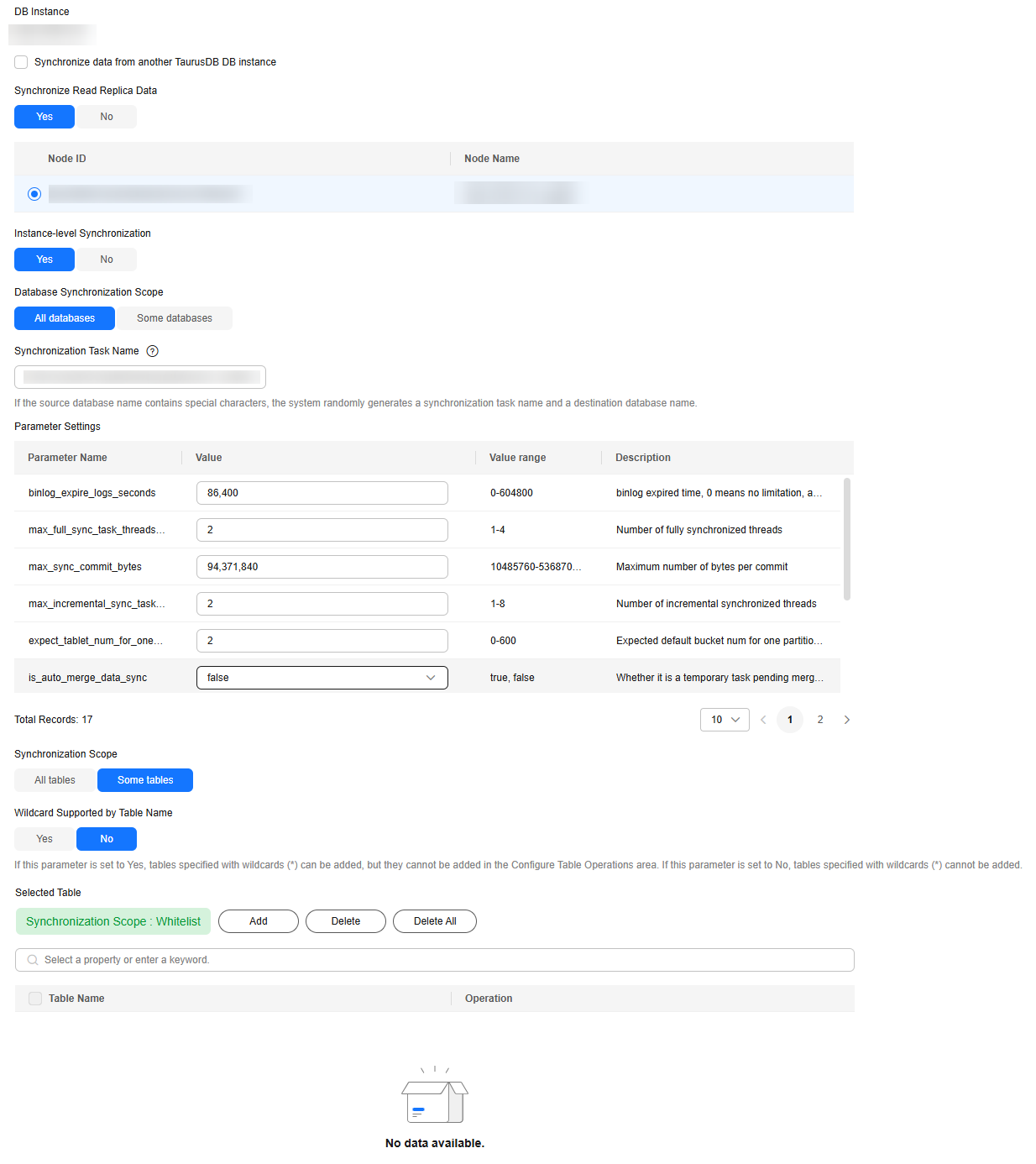

- Configure required parameters.

Figure 6 Creating a synchronization task

Table 7 Parameter description Parameter

Description

Synchronize Read Replica Data

Controls whether to synchronize full data from a read replica. During a full synchronization, ensure that the read replica is available, or the synchronization will fail and you will need to perform the synchronization again.

- Yes: Full data is synchronized from the selected read replica, preventing query load on the primary node during a full synchronization. If there is only one read replica, this node is selected by default.

- No: No synchronization is performed.

Instance-level Synchronization

Controls whether to synchronize multiple databases.

- Yes: A synchronization task can synchronize multiple or all databases.

- No: A synchronization task can synchronize only one database.

Database Synchronization Scope

This parameter is only available when Instance-level Synchronization is set to Yes.

- All databases: All databases are synchronized by default. You do not need to specify any database name.

- Some databases: You need to specify two or more database names.

Parameters Settings

Retain the default values, unless otherwise specified.

Synchronization Task Name

The name can contain 3 to 128 characters. Only letters, digits, underscores (_) are allowed.

Destination Database

The name can contain 3 to 128 characters. Only letters, digits, underscores (_) are allowed.

This parameter is not displayed when Database Synchronization Scope is set to All databases.

When Assign Requests to Row and Column Store Nodes is enabled, the source database name must be the same as the destination database name.

Database to be Synchronized

Select a database that the data will be synchronized to from the drop-down list. You can modify the database parameters of the HTAP instance as required. The drop-down list is hidden when Database Synchronization Scope is set to All databases.

Synchronization Scope

Select All Tables or Some Tables.

If you select Some tables for instance-level synchronization, you need to enter the range of tables to be synchronized. You can use table name wildcards to simplify the operation.

Wildcard Supported by Table Name

This parameter is only displayed when Instance-level Synchronization is set to Yes and Synchronization Scope is set to Some tables.

In an instance-level synchronization scenario, you can determine whether table names in the blacklist or whitelist support wildcards * and ?.

The wildcard * matches zero or more characters, and the wildcard ? matches exactly one character.

Synchronization Scope: Whitelist

If Synchronization Scope is set to Some Tables, you need to configure tables for the blacklist or whitelist.

- You can set either a blacklist or a whitelist. If you select the whitelist, only the tables in the whitelist are synchronized. If you select the blacklist, the tables in the blacklist are not synchronized.

- The tables to be synchronized must contain primary keys or a non-empty unique key, or they cannot be synchronized to the HTAP instance.

- Extra disk space may be used during backend data combination and query. You are advised to reserve 50% of the disk space for the system.

- When setting the table blacklist or whitelist, you can enter multiple tables in the search box at once. The tables can be separated by commas (,), spaces, or line breaks (\n). After entering multiple tables, you need to click

. These tables will be selected by default and displayed in the Selected Table area.

. These tables will be selected by default and displayed in the Selected Table area.

Configure Table Operations

Enable or disable it as required.

- Enabled: Select a synchronized table on the left and perform operations on its columns. The operations include order by, key columns, distributed by, partition by, data_model, buckets, replication_num, and enable_persistent_index. Separate multiple operations by semicolons (;).

For details about the syntax, see Table 8.

- Disabled: No operations are required.

NOTICE:By default, when data is synchronized from an OLTP table to an OLAP table, the primary key of the OLTP table is used as the primary key and sorting key, and for hash bucketing. Partitions are not synchronized.

If the default settings do not meet the performance requirements of OLAP workloads, you can adjust the sorting key, bucketing key, and partitions of an OLAP table by referring to Performance Tuning. You can adjust specific tables using table synchronization settings.

Table 8 Operation syntax Operation Type

Syntax

order by

order by (column1, column2) or order by column1,column2

key columns

key columns (column1, column2) or key columns column1,column2

distributed by

distributed by (column1, column2) buckets 3

NOTE:buckets is optional. If it is not set, the default value is used.

partition by

There are expression partitioning and list partitioning. For details, see Partitioning Syntax Supported by Table Synchronization Settings.

data_model

Specifies the table type. The value can be primary key, duplicate key, or unique key.

Syntax:

data_model=primary key, data_model=duplicate key, or data_model=unique key

replication_num

replication_num=3

NOTE:The value cannot exceed the number of BE nodes, or the verification fails.

enable_persistent_index

Specifies whether to make the index persistent. Syntax:

enable_persistent_index=true or enable_persistent_index=false

Combined scenario

data_model=duplicate key;key columns column1, column2;

- After the settings are complete, click Create Synchronization Task.

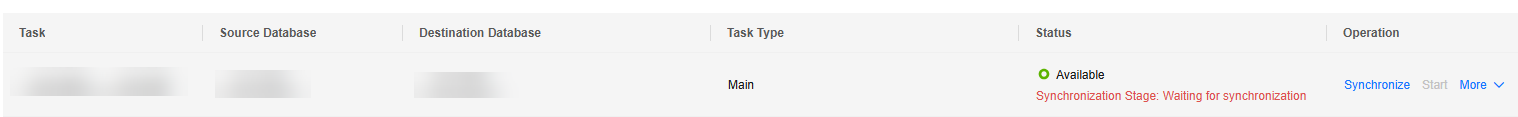

- Click Back to Synchronization List to return to the data synchronization page. A synchronization task to be synchronized is generated. The task status is Synchronization Stage: Waiting for synchronization.

Figure 7 Viewing the task status

- To start the task, click Synchronize in the Operation column.

If the task status changes to Synchronization Stage: Incremental synchronization in progress, the data synchronization is complete.

- During the full synchronization, if some tables fail to be synchronized, an alarm will be generated and those tables will be skipped. The remaining tables will continue to be synchronized. After the full synchronization is complete, the incremental synchronization starts. You can repair tables that failed to be synchronized during the incremental synchronization.

- When you start a synchronization task, it begins with a full synchronization, during which tables are locked using the FLUSH TABLES WITH READ LOCK command. To minimize the impact on workloads, start synchronization tasks during off-peak hours.

- During service tests, if you want to suspend a synchronization task, click Stop. The suspension duration cannot exceed the binlog retention period set for the source primary TaurusDB instance. If the suspension duration exceeds the binlog retention period, the synchronization task cannot continue. You need to delete the task and create a new one. Do not suspend synchronization tasks in the production environment to prevent data inconsistency between OLTP and OLAP.

Step 3: Connect to the HTAP Instance for OLAP Queries

Connect to the HTAP instance to query the data synchronization results. For details, see Connecting to a Standard HTAP Instance Through DAS.

Expression Partitioning

You only need to specify a partition expression (time function expression or column expression) when creating a table. During data import, an HTAP instance automatically creates partitions based on the data and the rule defined in the partition expression.

Partitioning Based on a Time Function Expression

If data is often queried and managed based on a continuous date range, you only need to specify a partition column of the date type (DATE or DATETIME) and a partition granularity (year, month, day, or hour) in the time function expression. An HTAP instance automatically creates partitions and sets the start time and end time of the partitions based on the imported data and partition expression.

- Syntax

PARTITION BY expression ... [ PROPERTIES( 'partition_live_number' = 'xxx' ) ] expression ::= { date_trunc ( <time_unit> , <partition_column> ) | time_slice ( <partition_column> , INTERVAL <N> <time_unit> [ , boundary ] ) }Table 9 Parameter description Parameter

Mandatory

Description

expression

Yes

Currently, only the date_trunc and time_slice functions are supported. If you use time_slice, you do not need to pass the boundary parameter because this parameter defaults to and can only be floor in this scenario. It cannot be set to ceil.

time_unit

Yes

Partition granularity. Currently, the value can only be hour, day, month, or year. It cannot be week. If the partition granularity is hour, the partition column can only be of the DATETIME type. It cannot be of the DATE type.

partition_column

Yes

Partition column.

- It can only be of the DATE or DATETIME type. If date_trunc is used, the partition column can be of the DATE or DATETIME type. If time_slice is used, the partition column can only be of the DATETIME type. The partition column allows NULL values.

- If the partition column is of the DATE type, the value range is from 0000-01-01 to 9999-12-31. If the partition column is of the DATETIME type, the value range is from 0000-01-01 01:01:01 to 9999-12-31 23:59:59.

- Currently, only one partition column can be specified.

- Example

If you often query data by day, you can use the partition expression date_trunc (), set the partition column to event_day, and set the partition granularity to day during table creation. In this way, data is automatically partitioned based on dates when being imported. Data of the same day is stored in the same partition. Partition pruning can be used to significantly accelerate queries.

CREATE TABLE site_access1 ( event_day DATETIME NOT NULL, site_id INT DEFAULT '10', city_code VARCHAR(100), user_name VARCHAR(32) DEFAULT '', pv BIGINT DEFAULT '0' ) DUPLICATE KEY(event_day, site_id, city_code, user_name) PARTITION BY date_trunc('day', event_day) DISTRIBUTED BY HASH(event_day, site_id);

Partitioning Based on the Column Expression

If you often query and manage data based on enumerated values, you only need to specify the column representing the type as the partition column. An HTAP instance automatically divides and creates partitions based on the partition column value of the imported data.

- Syntax:

PARTITION BY expression ... [ PROPERTIES( 'partition_live_number' = 'xxx' ) ] expression ::= ( <partition_columns> ) partition_columns ::= <column>, [ <column> [,...] ]Table 10 Parameter description Parameter

Mandatory

Description

partition_columns

Yes

Partition columns.

- The partition column values can be string (except BINARY), date, integer, or boolean values. They cannot be NULL.

- After the import, a partition automatically created can contain only one value of each partition column. If multiple values of each partition column need to be contained, use list partitioning.

- Example

If you often query the equipment room billing details by date range and city, you can use a partition expression to specify the dt and city columns as the partition columns when creating a table. In this way, data of the same date and city is grouped into the same partition, and partition pruning can be used to significantly accelerate queries.

CREATE TABLE t_recharge_detail1 ( id bigint, user_id bigint, recharge_money decimal(32,2), city varchar(20) not null, dt varchar(20) not null ) DUPLICATE KEY(id) PARTITION BY (dt,city) DISTRIBUTED BY HASH(`id`);

List Partitioning

Data is partitioned based on a list of enumerated values that you explicitly define. You need to explicitly list the enumerated values contained in each list partition, and the values do not need to be consecutive.

List partitioning is suitable for data whose columns contain a small number of enumerated values, and you often query and manage data based on these enumerated values. For example, columns represent geographical locations, states, or categories. Each value in a column represents an independent category. Partitioning data based on enumerated values of columns improves query performance and facilitates data management. List partitioning is especially suitable for scenarios where a partition needs to contain multiple values of each partition column.

For example, a table includes the city column that represents the city where an individual is from, and you often query and manage data by state and city. You can use the city column as the partition column for list partitioning when creating a table, and specify that data of multiple cities in the same state is stored in the same partition: PARTITION pCalifornia VALUES IN ("Los Angeles","San Francisco","San Diego"). This method can accelerate queries and facilitate data management.

Partitions must be created during table creation. Partitions cannot be automatically created during data import. If the table does not contain the partitions corresponding to the data, an error will be reported.

- Syntax

PARTITION BY LIST (partition_columns)( PARTITION <partition_name> VALUES IN (value_list) [, ...] ) partition_columns::= <column> [,<column> [, ...] ] value_list ::= value_item [, value_item [, ...] ] value_item ::= { <value> | ( <value> [, <value>, [, ...] ] ) }Table 11 Parameter description Parameter

Mandatory

Description

partition_columns

Yes

Partition columns.

The partition column values can be string (except BINARY), date (DATE and DATETIME), integer, or boolean values. They cannot be NULL.

partition_name

Yes

Partition name.

You are advised to set appropriate partition names to distinguish data categories in different partitions.

value_list

Yes

List of enumerated values of partition columns in a partition.

- Example 1

If you often query the equipment room billing details by state or city, you can specify the city column as the partition column and specify that the cities in each partition belong to the same state. In this way, you can quickly query data of a specific state or city and manage data by state or city.

CREATE TABLE t_recharge_detail2 ( id bigint, user_id bigint, recharge_money decimal(32,2), city varchar(20) not null, dt varchar(20) not null ) DUPLICATE KEY(id) PARTITION BY LIST (city) ( PARTITION pCalifornia VALUES IN ("Los Angeles","San Francisco","San Diego"), -- These cities belong to the same state. PARTITION pTexas VALUES IN ("Houston","Dallas","Austin") ) DISTRIBUTED BY HASH(`id`); - Example 2

If you often query the equipment room billing details by date range and state or city, you can specify the dt and city columns as the partition columns when creating a table. In this way, data of a specific date and a specific state or city is grouped into the same partition, which accelerates queries and facilitates data management.

CREATE TABLE t_recharge_detail4 ( id bigint, user_id bigint, recharge_money decimal(32,2), city varchar(20) not null, dt varchar(20) not null ) ENGINE=OLAP DUPLICATE KEY(id) PARTITION BY LIST (dt,city) ( PARTITION p202204_California VALUES IN ( ("2022-04-01", "Los Angeles"), ("2022-04-01", "San Francisco"), ("2022-04-02", "Los Angeles"), ("2022-04-02", "San Francisco") ), PARTITION p202204_Texas VALUES IN ( ("2022-04-01", "Houston"), ("2022-04-01", "Dallas"), ("2022-04-02", "Houston"), ("2022-04-02", "Dallas") ) ) DISTRIBUTED BY HASH(`id`);

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot