Managing Instances

Overview

The data migration feature provides independent clusters for secure and reliable data migration. Clusters are isolated from each other and cannot access each other. With instance management, you can create and manage clusters by purchasing GDS-Kafka instances. GDS-Kafka consumes and caches data from Kafka. When the cache time or cache size reaches a preconfigured threshold, GDS-Kafka copies the cached data to a DWS temporary table and then inserts or merges the data from the temporary table into the target table.
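The cache-then-copy behavior described above can be pictured with a minimal sketch. It is not GDS-Kafka code: the class name, the `max_rows` and `max_age_seconds` thresholds, and the `flush` callback are all hypothetical and only illustrate flushing when either a size or a time threshold is reached.

```python
import time


class ThresholdBuffer:
    """Caches rows and flushes them when a size or age threshold is reached."""

    def __init__(self, flush, max_rows=1000, max_age_seconds=5.0, clock=time.monotonic):
        self._flush = flush              # callback that receives the cached rows
        self._max_rows = max_rows        # hypothetical size threshold
        self._max_age = max_age_seconds  # hypothetical time threshold
        self._clock = clock              # injectable clock, useful for testing
        self._rows = []
        self._first_row_at = None

    def add(self, row):
        # Remember when the oldest cached row arrived.
        if not self._rows:
            self._first_row_at = self._clock()
        self._rows.append(row)
        self.flush_if_due()

    def flush_if_due(self):
        """Flush the cache if it is large enough or old enough."""
        if not self._rows:
            return False
        too_big = len(self._rows) >= self._max_rows
        too_old = self._clock() - self._first_row_at >= self._max_age
        if too_big or too_old:
            rows, self._rows = self._rows, []
            self._first_row_at = None
            self._flush(rows)  # stands in for the copy to a temporary table
            return True
        return False
```

In this sketch the callback stands in for the copy to the temporary table. A real consumer would also call `flush_if_due()` periodically so that a quiet topic still triggers the time threshold.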

- The format of data generated by the Kafka message producer is specified by the kafka.source.event.type parameter. For details, see Message Formats Supported by GDS-Kafka.

- In GDS-Kafka, you can directly insert data for tables without primary keys, or update data by merging. Direct insert can achieve better performance, because it does not involve update operations. Determine your update mode based on the target table type in DWS. The data import mode is determined by the app.insert.directly parameter and whether a primary key exists. For details, see GDS-Kafka Import Modes.

- GDS-Kafka allows only lowercase target table and column names.

- GDS-Kafka deletes historical data based on pos in the extended field. If imported data involves the delete operation, the extended field must be used.
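Because only lowercase target table and column names are allowed, an upstream producer might check names before sending messages. The helper below is a hypothetical illustration and not part of GDS-Kafka.

```python
def non_lowercase_names(table, columns):
    """Return the table or column names that contain uppercase characters."""
    return [name for name in [table, *columns] if name != name.lower()]
```

An empty result means that every name is already lowercase.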

Purchasing a GDS-Kafka Instance

To use the data migration feature, you need to purchase a GDS-Kafka instance (cluster). Cluster instances provide secure and reliable data migration services. Clusters are isolated from each other.

- Log in to the DWS console.

- In the navigation pane, choose Data > Data Integration > Instances.

- In the upper right corner of the page, click Buy GDS-Kafka Instance. Configure cluster parameters. Only the pay-per-use billing mode is supported.

Table 1 Parameter description

| Parameter | Description | Example Value |
|---|---|---|
| CPU Architecture | The following CPU architectures can be selected: x86 and Kunpeng. NOTE: The x86 and Kunpeng architectures differ only in their underlying structure, which is transparent to the application layer. Both architectures use the same SQL syntax. If x86 servers are insufficient when you create a cluster, you can select the Kunpeng architecture. | x86 |
| Flavor | Select a node flavor. | - |
| Capacity | Storage capacity of a node. | - |
| Current Flavor | Current flavor of the cluster. | - |
| Name | Set the name of the data warehouse cluster. Enter 4 to 64 characters. Only letters, digits, hyphens (-), and underscores (_) are allowed. The value must start with a letter. Letters are not case-sensitive. | - |
| Version | Version of the database instance installed in the cluster. | - |
| VPC | Specify a VPC to isolate the cluster's network. If you create a data warehouse cluster for the first time and have not configured the VPC, click View VPC. On the VPC management console that is displayed, create a VPC as needed. | - |
| Subnet | Specify a VPC subnet. A subnet provides dedicated network resources that are isolated from other networks, improving network security. | - |
| Security Group | Specify a VPC security group. A security group restricts access rules to enhance security when DWS and other services access each other. | - |
| EIP | Specify whether users can use a client to connect to a cluster's database over the Internet. The following methods are supported. Do not use: Do not specify any EIPs here. If DWS is used in the production environment, first bind it to ELB, and then bind it to an EIP on the ELB page. Buy now: Specify bandwidth for EIPs, and the system will automatically assign EIPs with dedicated bandwidth to clusters. You can use the EIPs to access the clusters over the Internet. The bandwidth name of an automatically assigned EIP starts with the cluster name. Specify: Specify an EIP to be bound to the cluster. If no available EIPs are displayed in the drop-down list, click Create EIP to go to the Elastic IP page and create an EIP as needed. The bandwidth can be customized. | - |
| Enterprise Project | Select the enterprise project of the cluster. You can configure this parameter only when the Enterprise Project Management service is enabled. The default value is default. | default |

- If the configuration is correct, click Buy Now.
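The naming rule for the Name parameter (4 to 64 characters, starting with a letter, containing only letters, digits, hyphens, and underscores) can be checked before the form is submitted. The following is a minimal sketch. The function name is hypothetical and the console performs its own validation.

```python
import re

# 4 to 64 characters in total: one leading letter followed by 3 to 63
# letters, digits, hyphens, or underscores.
_NAME_PATTERN = re.compile(r"[A-Za-z][A-Za-z0-9_-]{3,63}")


def is_valid_cluster_name(name):
    """Return True if the name satisfies the rule stated for the Name parameter."""
    return _NAME_PATTERN.fullmatch(name) is not None
```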

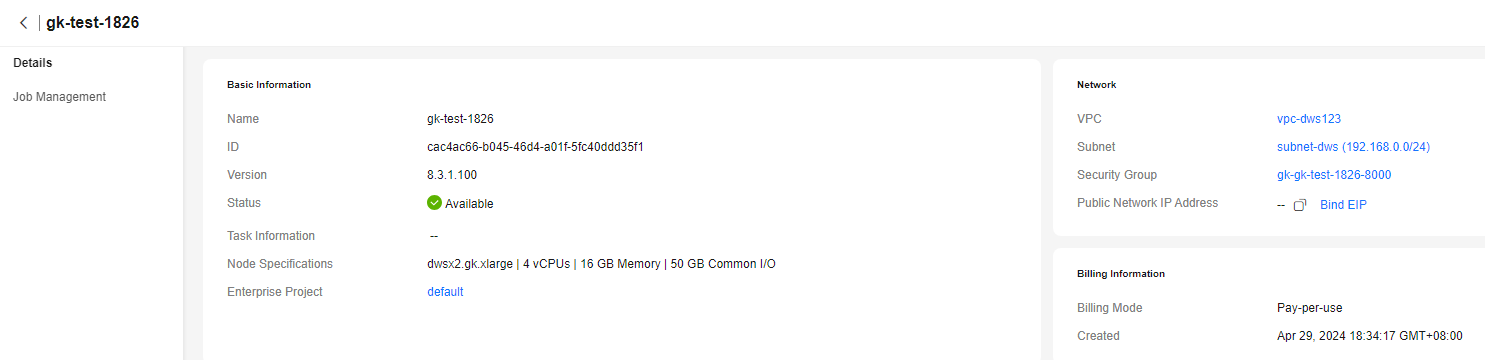

Viewing Instance Details

On the instance details page, you can view the basic information and network information about the cluster.

- Log in to the DWS console.

- In the navigation pane, choose Data > Data Integration > Instances.

- Click the name of an instance to go to the instance details page.

Figure 1 Viewing Instance Details

Message Formats Supported by GDS-Kafka

| Kafka Source Event Type | Format | Description |
|---|---|---|
| cdc.drs.avro | Internal format of Huawei Cloud DRS. DRS generates data in the avro format used by Kafka. GDS-Kafka can directly interconnect with DRS to parse and import the data. | None |
| drs.cdc | To use the avro format for drs.cdc, specify the Maven dependency of GDS-Kafka-common and GDS-Kafka-source in the upstream programs of Kafka, and then create and fill in the Record object. A Record object represents a table record. It will be serialized into a byte[] array, produced and sent to Kafka, and used by the downstream GDS-Kafka. In the following example, the target table is the person table in the public schema. The person table consists of the id, name, and age fields. The op_type is U, which indicates an update operation. This example changes the name field from a to b in the record with the ID 0, and changes the value of the age field from 18 to 20. | Standard avro format |
| cdc.json | In the following example, the target table is the person table in the public schema. The person table consists of the id, name, and age fields. The op_type is U, which indicates an update operation. This example changes the name field from a to b in the record with the ID 1, and changes the value of the age field from 18 to 20. | Standard JSON format |
| industrial.iot.json | | IoT data format |
| industrial.iot.recursion.json | | IoT data format |
| industrial.iot.event.json.independent.table | | IoT event stream data format |
| industrial.iot.json.multi.events | | IoT event stream data format |

GDS-Kafka Import Modes

GDS-Kafka imports data into the database by first copying it to a temporary table and then merging or inserting it into the target table. The following table describes the import mode used in each scenario.

| Operation | Direct Insertion | Primary Key Table | Import Mode |
|---|---|---|---|
| insert | true (only for tables without primary keys) | No | Use INSERT SELECT to write data from the temporary table to the target table. |
| insert | false | Yes | Merge data from the temporary table to the target table based on the primary key. |
| insert | false | No | Use INSERT SELECT to write data from the temporary table to the target table. |
| delete | true (only for tables without primary keys) | No | Use INSERT SELECT to write data from the temporary table to the target table. |
| delete | false | Yes | |
| delete | false | No | |
| update | true (only for tables without primary keys) | No | Use INSERT SELECT to write data from the temporary table to the target table. |
| update | false | Yes | Equivalent to the insert+delete operation on a table with a primary key. |
| update | false | No | Equivalent to the insert+delete operation on a table without a primary key. |

NOTE:

- delete with direct insertion set to false: You can mark deletion by configuring the app.del.flag parameter. The flag of a deleted record will be set to 1.

- update with direct insertion set to false: The update operation is split. The message in before or beforeImage is processed as a delete operation, and the message in after or afterImage is processed as an insert operation. Then, the message is saved to the database based on the insert and delete operations.
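The insert rows of the table above and the NOTE on update splitting can be sketched as follows. This is an illustration under assumptions rather than GDS-Kafka's implementation. The function names, the returned mode strings, the dict-shaped message, and the delete-before-insert ordering are hypothetical. Only the field names before, beforeImage, after, and afterImage come from the text.

```python
def import_mode_for_insert(insert_directly, has_primary_key):
    """Pick the import mode for an insert operation, following the table above."""
    if has_primary_key and insert_directly:
        # The table lists direct insertion only for tables without primary keys.
        raise ValueError("direct insertion applies only to tables without primary keys")
    if has_primary_key:
        return "merge"          # merge into the target table on the primary key
    return "insert_select"      # INSERT SELECT from the temporary table


def split_update(message):
    """Split an update message into a delete part and an insert part."""
    # Accept either naming variant mentioned in the NOTE.
    before = message.get("before", message.get("beforeImage"))
    after = message.get("after", message.get("afterImage"))
    # Assumed ordering: remove the old image first, then write the new one.
    return [("delete", before), ("insert", after)]
```

For example, an update that changes name from a to b would yield a delete carrying the old row followed by an insert carrying the new row.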
