Creating a Deployment

A Deployment is a Kubernetes workload for applications that do not retain data or state while running. All pods of a Deployment are identical, so they can be created, deleted, and replaced without affecting application functionality. Deployments are ideal for stateless applications that do not need to store data, such as web front-end servers and microservices. They simplify application lifecycle management, including updates, rollbacks, and scaling.

Prerequisites

- A cluster is available. For details about how to create a cluster, see Buying a CCE Standard/Turbo Cluster.

- At least one node is available in the cluster. If no node is available, create one by referring to Creating a Node.

Creating a Deployment

You can create a Deployment on the CCE console or using kubectl. To create a Deployment on the console, perform the following steps:

- Log in to the CCE console.

- Click the cluster name to go to the cluster console, choose Workloads in the navigation pane, and click Create Workload in the upper right corner.

- Configure basic information about the workload.

- Workload Type: Select Deployment. For details about different workload types, see Workload Overview.

- Workload Name: Enter a name for the workload. Enter 1 to 63 characters starting with a lowercase letter and ending with a lowercase letter or digit. Only lowercase letters, digits, and hyphens (-) are allowed.

- Namespace: Select a namespace for the workload. The default value is default. You can also click Create Namespace to create one. For details, see Creating a Namespace.

- Pods: Enter the number of workload pods.

- Container Runtime: A CCE standard cluster uses a common runtime by default, whereas a CCE Turbo cluster supports both common and secure runtimes. For details about their differences, see Secure Runtime and Common Runtime.

- Time Zone Synchronization: Configure whether to enable time zone synchronization. After this function is enabled, the container and node will share the same time zone. Time zone synchronization relies on the local disk mounted to the container. Do not modify or delete the local disk. For details, see Configuring Time Zone Synchronization.
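The basic settings above correspond to standard fields in a Deployment manifest. The following is a minimal sketch that assumes this mapping; the names my-app and container-1 and the replica count are hypothetical, and Container Runtime and Time Zone Synchronization are console settings that are not shown here.

```yaml
apiVersion: apps/v1
kind: Deployment            # Workload Type
metadata:
  name: my-app              # Workload Name (hypothetical)
  namespace: default        # Namespace
spec:
  replicas: 2               # Pods
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app         # must match spec.selector.matchLabels
    spec:
      containers:
      - name: container-1   # hypothetical container name
        image: nginx:latest
```

In apps/v1, the selector must match the pod template labels; otherwise the API server rejects the Deployment.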

- Configure container settings for the workload.

- Container Information: Click Add Container on the right to configure multiple containers for the pod.

If a pod contains multiple containers, ensure that the ports used by the containers do not conflict with each other. Otherwise, the workload cannot be deployed.
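Containers in the same pod share the pod's network namespace, so processes in two different containers cannot listen on the same port. The following pod template fragment sketches a non-conflicting layout; the container names, the sidecar image my-sidecar:1.0, and port 9113 are hypothetical.

```yaml
# Fragment of spec.template.spec: two containers, each on its own port
containers:
- name: web                  # hypothetical
  image: nginx:latest
  ports:
  - containerPort: 80
- name: metrics-sidecar      # hypothetical sidecar container
  image: my-sidecar:1.0      # hypothetical image
  ports:
  - containerPort: 9113      # differs from 80, so no conflict inside the pod
```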

- Basic Info: Configure basic information about the container.

- Container Name: Enter a name for the container.

- Pull Policy: Image pull policy. If you select Always, the image is pulled from the image repository every time the container starts. If you do not select Always, the image already on the node is used preferentially, and the image is pulled from the image repository only if it does not exist on the node.

- Image Name: Click Select Image and select the image used by the container.

  - SWR Shared Edition: an easy-to-use, secure, and reliable image management service

  - My Images: You can select a private image that you have uploaded. For details about how to upload images, see Pushing an Image Through a Container Engine Client.

  - Open Source Images: Select a public image provided by SWR. For details, see Image Center.

  - Shared Images: You can select an image shared by another account. For details, see Sharing Private Images.

  To use a third-party image, enter the image path directly and ensure that the image access credential can be used to access the image repository. For details, see Using Third-Party Images.

- Image Tag: Select the image tag to be deployed.

- CPU Quota:

  - Request: minimum number of CPU cores required by a container. The default value is 0.25 cores.

  - Limit: maximum number of CPU cores that can be used by a container. This prevents containers from using excessive resources.

  If Request and Limit are not specified, the quota is not limited. For more information and suggestions about Request and Limit, see Configuring Container Specifications.

- Memory Quota:

  - Request: minimum amount of memory required by a container. The default value is 512 MiB.

  - Limit: maximum amount of memory available for a container. If memory usage exceeds the limit, the container will be terminated.

  If Request and Limit are not specified, the quota is not limited. For more information and suggestions about Request and Limit, see Configuring Container Specifications.

- (Optional) GPU Quota: Configurable only when the cluster contains GPU nodes and the CCE AI Suite (NVIDIA GPU) add-on has been installed.

  - Do not use: No GPU will be used.

  - GPU card: The GPU is dedicated to the container.

  - GPU Virtualization: percentage of GPU resources used by the container. For example, if this parameter is set to 10%, the container will use 10% of GPU resources.

  For details about how to use GPUs in a cluster, see Default GPU Scheduling in Kubernetes.

- (Optional) NPU Quota: Number of required NPU chips. The value must be an integer, and the CCE AI Suite (Ascend NPU) add-on must be installed. For details about how to use NPUs in a cluster, see Complete NPU Allocation.

- (Optional) Privileged Container: Processes in a privileged container have elevated privileges on the host. If this option is enabled, the container is granted these privileges. For example, a privileged container can manipulate network devices on the host, modify kernel parameters, and access all devices on the node. For more information, see Pod Security Standards.

- (Optional) Init Container: Specify whether to use the container as an init container. Init containers do not support health checks.

  An init container is a special container that runs before the application containers in a pod are started. A pod can contain multiple application containers and one or more init containers. The application containers are started only after all init containers have run to completion. For details, see Init Containers.
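The following fragment (placed under spec.template.spec) sketches how the pull policy, CPU and memory quotas, privileged option, and an init container can be expressed in a manifest. It assumes the console settings map to these standard Kubernetes fields; the init container name and command are hypothetical, and busybox:1.36 is used only as a small example image.

```yaml
# Fragment of spec.template.spec
initContainers:
- name: init-wait                 # hypothetical init container
  image: busybox:1.36
  # Runs to completion before the application container is started.
  command: ["sh", "-c", "echo initializing && sleep 5"]
containers:
- name: nginx                     # Container Name
  image: nginx:latest             # Image Name and Image Tag
  imagePullPolicy: Always         # Always pulls on every start; IfNotPresent prefers an image already on the node
  resources:
    requests:                     # CPU Quota and Memory Quota: Request
      cpu: 250m                   # 0.25 cores
      memory: 512Mi
    limits:                       # CPU Quota and Memory Quota: Limit
      cpu: 500m
      memory: 1Gi                 # exceeding this limit terminates the container
  securityContext:
    privileged: false             # Privileged Container; enable only if host-level access is required
```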

- (Optional) Lifecycle: Configure operations to be performed in a specific phase of the container lifecycle, such as Startup Command, Post-Start, and Pre-Stop. For details, see Configuring the Container Lifecycle.
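A sketch of equivalent lifecycle settings in a container spec, assuming Startup Command maps to the command field and Post-Start and Pre-Stop map to the postStart and preStop hooks. The commands are illustrative only.

```yaml
# Fragment of spec.template.spec
containers:
- name: nginx
  image: nginx:latest
  command: ["nginx", "-g", "daemon off;"]      # Startup Command: run nginx in the foreground
  lifecycle:
    postStart:                                 # Post-Start: runs right after the container is created
      exec:
        command: ["sh", "-c", "echo started > /usr/share/nginx/html/started.txt"]
    preStop:                                   # Pre-Stop: runs before the container is terminated
      exec:
        command: ["nginx", "-s", "quit"]       # ask nginx to shut down gracefully
```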

- (Optional) Health Check: Set the liveness probe, readiness probe, and startup probe as required. For details, see Configuring Container Health Check.
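The following container fragment sketches all three probe types for an nginx container listening on port 80. The paths, ports, and timing values are illustrative assumptions, not recommended settings.

```yaml
# Fragment of spec.template.spec
containers:
- name: nginx
  image: nginx:latest
  ports:
  - containerPort: 80
  startupProbe:             # liveness and readiness checks wait until this succeeds
    httpGet:
      path: /
      port: 80
    failureThreshold: 30    # allow up to 30 x 2 s = 60 s for startup
    periodSeconds: 2
  livenessProbe:            # the container is restarted if this keeps failing
    httpGet:
      path: /
      port: 80
    periodSeconds: 10
  readinessProbe:           # the pod stops receiving Service traffic while this fails
    tcpSocket:
      port: 80
    periodSeconds: 5
```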

- (Optional) Environment Variables: Configure variables for the container running environment using key-value pairs. These variables pass external information to the containers in a pod and can be modified after the application is deployed. For details, see Configuring Environment Variables.
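A minimal sketch of environment variables in a container spec. APP_MODE is a hypothetical variable with a literal value, and POD_NAME uses the Kubernetes downward API to expose the pod's own name.

```yaml
# Fragment of spec.template.spec
containers:
- name: nginx
  image: nginx:latest
  env:
  - name: APP_MODE            # hypothetical variable with a literal value
    value: "production"
  - name: POD_NAME            # filled in from the pod's own metadata
    valueFrom:
      fieldRef:
        fieldPath: metadata.name
```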

- (Optional) Data Storage: Mount local storage or cloud storage to the container. The application scenarios and mounting modes vary with the StorageClass. For details, see Storage.

If the workload contains more than one pod, EVS volumes cannot be mounted.
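The following pod template fragment sketches one local volume (emptyDir) and one volume backed by a PersistentVolumeClaim. The claim name my-pvc is hypothetical; the PVC and its StorageClass must be created separately.

```yaml
# Fragment of spec.template.spec
containers:
- name: nginx
  image: nginx:latest
  volumeMounts:
  - name: cache                  # local storage
    mountPath: /var/cache/nginx
  - name: data                   # storage provided through a PVC
    mountPath: /data
volumes:
- name: cache
  emptyDir: {}                   # created with the pod and deleted when the pod is removed
- name: data
  persistentVolumeClaim:
    claimName: my-pvc            # hypothetical PVC; create it before the workload
```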

- (Optional) Security Context: Configure container permissions to protect the system and other containers from being affected. Enter the ID of the user that the container runs as.
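A sketch of a container security context that runs the container process as a specific user ID. The image my-app:1.0 and user ID 1000 are hypothetical; the image must be able to run as that user.

```yaml
# Fragment of spec.template.spec
containers:
- name: app
  image: my-app:1.0                  # hypothetical image
  securityContext:
    runAsUser: 1000                  # user ID that the container process runs as
    runAsNonRoot: true               # refuse to start the container as root (UID 0)
    allowPrivilegeEscalation: false  # block gaining more privileges than the parent process
```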

- (Optional) Logging: By default, the standard output logs of containers are reported to AOM without manual configuration. You can also manually configure a log collection path. For details, see Collecting Container Logs Using ICAgent (Not Recommended).

To disable the collection of the standard output logs of the current workload, add the annotation kubernetes.AOM.log.stdout: [] in Labels and Annotations in the Advanced Settings area. For details about how to use this annotation, see Table 1.
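In a Deployment manifest, pod annotations are placed in the pod template metadata. The following fragment is a sketch assuming that placement for the annotation above. The value is quoted because annotation values must be strings.

```yaml
# Fragment of a Deployment: only the pod template metadata is shown
spec:
  template:
    metadata:
      annotations:
        # Quoted so that YAML parses the value as the string "[]" rather than an empty list.
        kubernetes.AOM.log.stdout: '[]'
```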

- Image Access Credential: Select the credential used for accessing the image repository. The default value is default-secret. You can use default-secret to access images in SWR Shared Edition. For details about default-secret, see default-secret.

- (Optional) GPU: All is selected by default. Workload pods will be scheduled to nodes with the specified GPU type.

- (Optional) Configure the settings of the Service associated with the workload.

A Service provides external access for pods. Using a fixed IP address, a Service forwards access traffic to pods and automatically balances the load across them.

You can also create a Service after creating a workload. For details about Services of different types, see Service Overview.
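A minimal ClusterIP Service for the workload might look like the following sketch. The Service name, the app: nginx label, and the port numbers are assumptions; the selector must match the pod labels of your workload.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx              # hypothetical Service name
  namespace: default
spec:
  type: ClusterIP          # reachable only from inside the cluster
  selector:
    app: nginx             # must match the workload's pod labels
  ports:
  - name: http
    protocol: TCP
    port: 8080             # port exposed by the Service
    targetPort: 80         # port the container listens on
```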

- (Optional) Configure advanced settings for the workload.

- Upgrade: Specify the upgrade mode and parameters of the workload. Rolling upgrade and Replace upgrade are available. For details, see Upgrading and Rolling Back a Workload.

- Scheduling: Configure affinity and anti-affinity policies for flexible workload scheduling. Load affinity and node affinity are provided.

  - Load Affinity: Common load affinity policies are offered for quick load affinity deployment.

    - Not configured: No load affinity policy is configured.

    - Multi-AZ deployment preferred: Workload pods are preferentially scheduled to nodes in different AZs through pod anti-affinity.

    - Forcible multi-AZ deployment: Workload pods are forcibly scheduled to nodes in different AZs through pod anti-affinity (podAntiAffinity). If there are fewer AZs than pods, the extra pods cannot be scheduled and remain in the Pending state.

    - Customize affinity: Affinity and anti-affinity policies can be customized. For details, see Configuring Workload Affinity or Anti-affinity Scheduling (podAffinity or podAntiAffinity).

  - Node Affinity: Common node affinity policies are offered for quick node affinity deployment.

    - Not configured: No node affinity policy is configured.

    - Specify node: Workload pods can be deployed on specified nodes through node affinity (nodeAffinity). If no node is specified, the pods will be scheduled based on the default scheduling policy of the cluster.

    - Specify node pool: Workload pods can be deployed in a specified node pool through node affinity (nodeAffinity). If no node pool is specified, the pods will be scheduled based on the default scheduling policy of the cluster.

    - Customize affinity: Affinity and anti-affinity policies can be customized. For details, see Configuring Node Affinity Scheduling (nodeAffinity).

- Toleration: Taints and tolerations work together. A toleration allows (but does not force) a pod to be scheduled to a node with matching taints, and controls how the pod is evicted after the node where it runs is tainted. For details, see Configuring Tolerance Policies.

- Labels and Annotations: Add labels or annotations for pods using key-value pairs, and then click Confirm. For details about labels and annotations, see Configuring Labels and Annotations.

- DNS: Configure a separate DNS policy for the workload. For details, see DNS Configuration.

- Network Configuration:

  - Pod ingress/egress bandwidth limit: You can set ingress and egress bandwidth limits for pods. For details, see Configuring QoS for a Pod.

  - Whether to enable a specified container network configuration: available only for clusters that support this function. After you enable a specified container network configuration, the workload will be created using the container subnet and security group in the configuration. For details, see Binding a Subnet and Security Group to a Namespace or Workload Using a Container Network Configuration.

  - Specify the container network configuration name: Only a custom container network configuration whose associated resource type is workload can be selected.
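The following fragment sketches how some of these advanced settings can appear in a Deployment manifest: a rolling update strategy, a preferred pod anti-affinity rule that spreads pods across AZs (similar to Multi-AZ deployment preferred), and a toleration. It assumes the pod label app: nginx and uses a hypothetical taint key example-taint; the values the console generates may differ.

```yaml
# Fragment of a Deployment spec; containers and other required fields are omitted.
spec:
  strategy:
    # Rolling upgrade. Assumption: Replace upgrade corresponds to type: Recreate.
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0        # keep the desired number of pods available during the upgrade
      maxSurge: 1              # create at most one extra pod at a time
  template:
    spec:
      affinity:
        podAntiAffinity:
          # Preferred rule: the scheduler tries to place pods in different AZs but
          # still schedules them if it cannot. A forcible rule would instead use
          # requiredDuringSchedulingIgnoredDuringExecution (a list of terms, no weight).
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchLabels:
                  app: nginx   # assumed pod label of this workload
              topologyKey: topology.kubernetes.io/zone
      tolerations:
      - key: example-taint     # hypothetical taint key
        operator: Equal
        value: "true"
        effect: NoExecute
        tolerationSeconds: 300 # pod is evicted 300 s after a matching taint is added to its node
```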

- Load Affinity: Common load affinity policies are offered for quick load affinity deployment.

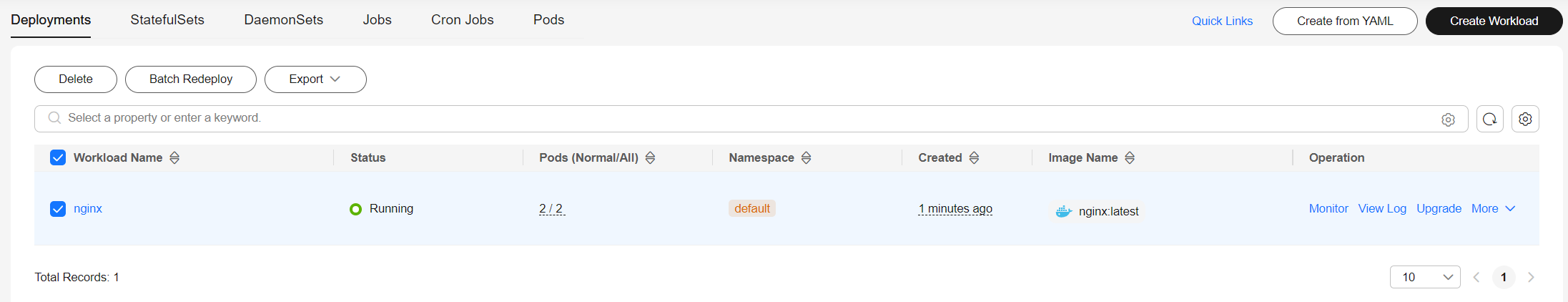

- Click Create Workload in the lower right corner. After a period of time, the workload enters the Running state.

To create a Deployment using kubectl, perform the following steps. Nginx is used as an example.

- Use kubectl to access the cluster. For details, see Accessing a Cluster Using kubectl.

- Create a file named nginx-deployment.yaml. nginx-deployment.yaml is an example file name, and you can rename it as needed.

vi nginx-deployment.yaml

The following is an example of the file. For details about Deployment configuration, see the Kubernetes official documentation.

apiVersion: apps/v1
kind: Deployment                 # Workload type
metadata:
  name: nginx                    # Workload name
  namespace: default             # Namespace where the workload is located
spec:
  replicas: 1                    # Number of pods in the specified workload
  selector:
    matchLabels:                 # The workload manages pods based on the pod labels in the label selector.
      app: nginx
  template:                      # Pod configuration
    metadata:
      labels:                    # Pod labels
        app: nginx
    spec:
      containers:
      - image: nginx:latest      # Specify a container image. If you use an image in My Images, obtain the image path from SWR.
        imagePullPolicy: Always  # Image pull policy
        name: nginx              # Container name
        resources:               # Node resources allocated to the container
          requests:              # Requested resources
            cpu: 250m
            memory: 512Mi
          limits:                # Resource limit
            cpu: 250m
            memory: 512Mi
      imagePullSecrets:          # Secret for image pull
      - name: default-secret

- Create the Deployment.

kubectl create -f nginx-deployment.yaml

If information similar to the following is displayed, the Deployment is being created:

deployment.apps/nginx created

- Check the Deployment status.

kubectl get deployment

If the READY column shows that all desired pods are ready (1/1 in this example), the Deployment has been created.

NAME    READY   UP-TO-DATE   AVAILABLE   AGE
nginx   1/1     1            1           4m5s

Parameters

- NAME: name of the Deployment.

- READY: number of ready pods compared with the desired number of pods, displayed as "ready pods/desired pods".

- UP-TO-DATE: number of pods that have been updated to the latest pod template.

- AVAILABLE: number of available pods.

- AGE: time elapsed since the Deployment was created.

- If the Deployment will be accessed through a ClusterIP or NodePort Service, create such a Service. For details, see Networking.
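As an example, a NodePort Service for the nginx Deployment created above might look like the following sketch. The Service name nginx-nodeport and node port 30080 are hypothetical; if nodePort is omitted, Kubernetes allocates one from the cluster's NodePort range (30000-32767 by default). The file can be created with kubectl create -f in the same way as the Deployment.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-nodeport     # hypothetical Service name
  namespace: default
spec:
  type: NodePort           # exposes the Service on a port of every node
  selector:
    app: nginx             # matches the pod labels in nginx-deployment.yaml
  ports:
  - protocol: TCP
    port: 80               # cluster-internal Service port
    targetPort: 80         # container port of nginx
    nodePort: 30080        # optional; must be within the cluster's NodePort range
```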
