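Collecting and Uploading NPU Logs

Scenario

When an NPU on a Lite Server node becomes faulty, you can use this solution to quickly dispatch an NPU log collection task. The collected logs are saved in a specified directory on the Lite Server node. Once you grant authorization, they are automatically uploaded to an OBS bucket provided by Huawei Cloud technical support, where the backend team can use them to locate and analyze the problem.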

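The collected logs cover the following scopes:

- Device-side logs: system logs on the device-side control CPU, EVENT-level system logs, system logs on non-control CPUs, and black box logs. When a device encounters an exception, a crash, or a performance problem, these logs help pinpoint the root cause, which shortens troubleshooting time and improves O&M efficiency.

- Host-side logs: host kernel message logs, host system monitoring files, host OS log files, and host kernel message log files saved when the system crashes. With the host monitoring data at hand, O&M personnel can quickly determine whether a problem lies in the application itself or in the underlying host resources (for example, CPU saturation, memory exhaustion, or a full disk). This avoids blind troubleshooting and speeds up fault locating.

- NPU environment logs: logs collected with tools such as npu-smi and hccn_tool. They record NPU information such as health status, ECC errors, and network link status, which supports root cause analysis.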

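Constraints

- One-click log collection supports only Snt9b nodes and Snt9b23 supernodes. Manual log collection supports 300IDuo, Snt9b, and Snt9b23.

- A single task can include a maximum of 50 common nodes or supernode subnodes.

- A node can run only one task at a time, and a task cannot be interrupted after it starts. Plan task priorities accordingly.

- Ensure that no services are running on the target nodes. Commands executed by the log collection task may interrupt services or cause them to behave abnormally.

- Ensure that the directory used to store the collected logs has more than 1 GB of free space.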
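You can run a quick check on the node to confirm the free-space requirement before you start a task. The following is a minimal Python sketch, not a ModelArts tool. It assumes that "1 GB" means 1 GiB, and the helper names nearest_existing_dir and has_enough_space are hypothetical. The log directory may not exist yet, so the sketch checks the nearest existing parent directory instead.

```python
import os
import shutil

# Assumption: "1 GB" is interpreted as 1 GiB.
MIN_FREE_BYTES = 1024 ** 3


def nearest_existing_dir(path):
    """Walk up from `path` until an existing directory is found."""
    path = os.path.abspath(path)
    while not os.path.isdir(path):
        parent = os.path.dirname(path)
        if parent == path:  # reached the filesystem root
            break
        path = parent
    return path


def has_enough_space(path, min_free_bytes=MIN_FREE_BYTES):
    """Return True if the filesystem holding `path` has more than `min_free_bytes` free."""
    usage = shutil.disk_usage(nearest_existing_dir(path))
    return usage.free > min_free_bytes


if __name__ == "__main__":
    # Default directory of one-click collection, and the staging directory of the manual script
    for log_dir in ("/root/log_collection", "/var/log/npu_log_collect"):
        print(log_dir, has_enough_space(log_dir))
```

The manual script stages its files in /var/log/npu_log_collect and then writes the final package to the current working directory. For that method, check both locations.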

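Prerequisites

Quick log collection relies on the AI plug-in pre-installed on the Lite Server node. If the plug-in is not installed, install it by following the instructions in "Installing the Lite Server AI Plug-in".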

One-Click Log Collection

- Log in to the ModelArts management console.

- In the navigation pane on the left, go to "Task Center".

图1 任务中心

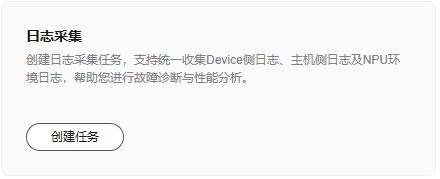

- 单击任务中心页面左上角的“创建任务”,进入“任务模板”页面,在该页面选择“日志采集”,单击“创建任务”。

图2 任务模板

- 在日志采集任务创建页面,填写“任务名称”、“任务描述”,选择“机型”和“节点类型”,选择“采集项”,勾选使用须知并单击“立即创建”。

表1 创建任务参数 参数分类

参数说明

任务名称

系统自动填入任务名称,用户可以自定义。

任务描述

对该任务的描述信息,方便快速查找任务。

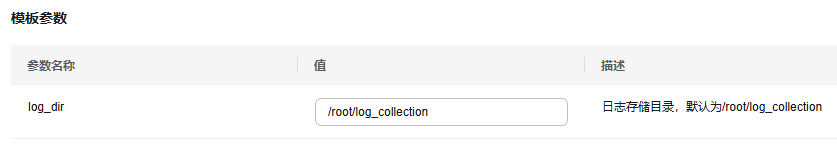

模板参数

请填写Lite Server上的节点目录,用于指定日志存放位置。默认为/root/log_collection。

机型

支持Snt9b或超节点Snt9b23。

采集项

支持选择Device侧日志、主机侧日志和NPU环境日志,也可以全部选择同时采集。

- Device侧日志:Device侧Control CPU上的系统类日志、EVENT级别系统日志、非Control CPU上的系统类日志及黑匣子日志。

- 主机侧日志:主机侧内核消息日志、主机侧系统监测类文件、主机侧操作系统日志文件、系统崩溃时保存的Host侧内核消息日志文件。

- NPU环境日志:通过npu-smi、hccn等工具采集的日志。

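- Select "Log Upload". After you grant authorization, the collected logs are uploaded to OBS for analysis by Huawei Cloud technical support. Click "Create Now".

- Return to the "Task Center" page, which displays the execution status of the task.

- Click a task name to go to the task details page and view the task details.

- On the task details page, click "View Logs". The detailed task execution logs are displayed in the pane on the right. All log collection results appear in the task logs, which also state whether log collection and upload succeeded.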

手动收集日志

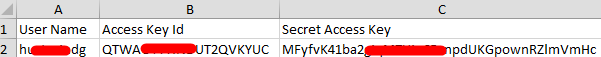

- 获取AK/SK。该AK/SK用于后续脚本配置,做认证授权。

如果已生成过AK/SK,则可跳过此步骤,找到原来已下载的AK/SK文件,文件名一般为:credentials.csv。

如下图所示,文件包含了租户名(User Name),AK(Access Key Id),SK(Secret Access Key)。
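A small parser lets you read the AK and SK from the downloaded file instead of copying them by hand. This is a sketch that assumes the header row uses exactly the column names shown in the figure (User Name, Access Key Id, Secret Access Key). The names parse_credentials and load_credentials are hypothetical helpers.

```python
import csv

# Column names as shown in credentials.csv; assumed to be in the header row.
USER_COL, AK_COL, SK_COL = "User Name", "Access Key Id", "Secret Access Key"


def parse_credentials(lines):
    """Return (user_name, ak, sk) from the first data row of CSV text lines."""
    reader = csv.DictReader(lines)
    row = next(reader, None)
    if row is None:
        raise ValueError("credentials file has no data row")
    return row[USER_COL], row[AK_COL], row[SK_COL]


def load_credentials(csv_path):
    """Open credentials.csv and parse it."""
    # utf-8-sig also accepts a file that starts with a UTF-8 byte order mark
    with open(csv_path, newline="", encoding="utf-8-sig") as f:
        return parse_credentials(f)
```

For example, `user_name, ak, sk = load_credentials("credentials.csv")` returns the values to pass as ak and sk. Avoid printing the SK or writing it to logs.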

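Figure 3 Content of credentials.csv

To generate an AK/SK:

- Log in to the Huawei Cloud management console.

- Click the username in the upper right corner and choose "My Credentials" from the drop-down list.

- Click "Access Keys".

- Click "Create Access Key".

- Download the key and keep it secure.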

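- Provide your tenant ID and IAM user ID to technical support. Technical support will use this information to configure an OBS bucket policy, so that the logs you collect can be uploaded to the corresponding OBS bucket.

After the configuration is complete, technical support will provide the OBS bucket directory "obs_dir". You will use this directory when you configure the script.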
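obs_dir is only a directory inside the bucket. The bucket itself is derived from the node's region. The following sketch mirrors how the script later in this section combines the two into the upload URL. It uses the bucket prefix npu-log- and the supported region list from that script. build_upload_url is a hypothetical helper for checking the target before an upload, and the argument values in the last line are placeholders.

```python
# Constants copied from the NpuLogCollection script
OBS_BUCKET_PREFIX = "npu-log-"
SUPPORT_REGIONS = ['cn-southwest-2', 'cn-north-9', 'cn-east-4', 'cn-east-3', 'cn-north-4', 'cn-south-1']


def build_upload_url(region_id, obs_dir, log_tar):
    """Return the OBS object URL that the script uploads `log_tar` to."""
    if region_id not in SUPPORT_REGIONS:
        raise ValueError("region {} is not supported".format(region_id))
    if not obs_dir:
        raise ValueError("obs_dir is empty; use the directory provided by technical support")
    bucket = OBS_BUCKET_PREFIX + region_id
    return "https://{}.obs.{}.myhuaweicloud.com/{}/{}".format(bucket, region_id, obs_dir, log_tar)


# Placeholder values for illustration only
print(build_upload_url("cn-north-4", "your-obs-dir", "192.168.0.10-npu-log-20240101120000.tar.gz"))
```

The node's region is the one the script reads from the instance metadata, so nodes in different regions upload to different buckets, even when obs_dir is the same.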

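Figure 4 Tenant ID and IAM user ID

- Prepare the log collection and upload script.

In the following script, modify the parameters passed to NpuLogCollection. Replace ak, sk, and obs_dir with the values obtained in the preceding steps. If the node is a 300IDuo, set is_300_iduo to True. Then upload the script to the node whose NPU logs you want to collect.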
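The ak and sk values are not sent as-is. As the script's gen_obs_sign() method shows, each upload is signed as follows. The script computes an HMAC-SHA1 of a canonical string, keyed with the SK. It then sends the Base64 result in an "OBS <AK>:<signature>" Authorization header. The sketch below isolates that step so that you can check it on its own. obs_signature and authorization_header are hypothetical names, and the string layout is copied from the script rather than from the OBS API reference.

```python
import base64
import hashlib
import hmac


def obs_signature(sk, http_method, date, canonicalized_headers, canonicalized_resource,
                  content_md5="", content_type=""):
    """Base64(HMAC-SHA1(sk, StringToSign)), with StringToSign laid out as in gen_obs_sign()."""
    # Lines: method, Content-MD5, Content-Type, Date, x-obs-* headers, resource.
    # The script leaves Content-MD5 and Content-Type empty.
    string_to_sign = "\n".join([http_method, content_md5, content_type, date,
                                canonicalized_headers, canonicalized_resource])
    digest = hmac.new(sk.encode("utf-8"), string_to_sign.encode("utf-8"), hashlib.sha1).digest()
    return base64.b64encode(digest).decode("ascii")


def authorization_header(ak, signature):
    """Value of the Authorization header sent with the PUT request."""
    return "OBS {}:{}".format(ak, signature)


sig = obs_signature("example-sk", "PUT", "Mon, 01 Jan 2024 00:00:00 GMT",
                    "x-obs-acl:public-read", "/npu-log-cn-north-4/your-obs-dir/example.tar.gz")
print(authorization_header("example-ak", sig))
```

OBS rebuilds the same string from the received request to verify the signature. A wrong SK, or a Date header that differs from the signed date, typically causes OBS to reject the request with a non-200 status.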

```python
# Requires Python 3.7+ (subprocess.run with capture_output and text).
import binascii
import hashlib
import hmac
import json
import re
import subprocess
from datetime import datetime
from email.utils import formatdate


class NpuLogCollection(object):
    NPU_LOG_PATH = "/var/log/npu_log_collect"
    SUPPORT_REGIONS = ['cn-southwest-2', 'cn-north-9', 'cn-east-4', 'cn-east-3', 'cn-north-4', 'cn-south-1']
    OPENSTACK_METADATA = "http://169.254.169.254/openstack/latest/meta_data.json"
    LOCAL_IPV4_METADATA = "http://169.254.169.254/latest/meta-data/local-ipv4"
    OBS_BUCKET_PREFIX = "npu-log-"

    def __init__(self, ak, sk, obs_dir, is_300_iduo=False):
        self.ak = ak
        self.sk = sk
        self.obs_dir = obs_dir
        self.is_300_iduo = is_300_iduo
        self.region_id = self.get_region_id()
        self.card_ids, self.chip_count = self.get_card_ids()

    @staticmethod
    def query_metadata(url):
        # Read a value from the instance metadata service
        result = subprocess.run(["curl", "-sS", url], capture_output=True, text=True)
        if result.returncode != 0:
            raise Exception("failed to query {}: {}".format(url, result.stderr))
        return result.stdout.strip()

    def get_region_id(self):
        region_id = json.loads(self.query_metadata(self.OPENSTACK_METADATA))["region_id"]
        if region_id not in self.SUPPORT_REGIONS:
            print("current region {} is not supported.".format(region_id))
            raise Exception('region exception')
        return region_id

    def gen_collect_npu_log_shell(self):
        # hccn_tool network diagnostics; not supported on 300IDuo
        hccn_tool_log_shell = (
            "echo {npu_network_info}\n"
            "for i in {npu_card_ids}; do hccn_tool -i $i -net_health -g >> {npu_log_path}/npu-smi_net-health.log; done\n"
            "for i in {npu_card_ids}; do hccn_tool -i $i -link -g >> {npu_log_path}/npu-smi_link.log; done\n"
            "for i in {npu_card_ids}; do hccn_tool -i $i -tls -g | grep switch >> {npu_log_path}/npu-smi_switch.log; done\n"
            "for i in {npu_card_ids}; do hccn_tool -i $i -optical -g | grep prese >> {npu_log_path}/npu-smi_present.log; done\n"
            "for i in {npu_card_ids}; do hccn_tool -i $i -link_stat -g >> {npu_log_path}/npu_link_history.log; done\n"
            "for i in {npu_card_ids}; do hccn_tool -i $i -ip -g >> {npu_log_path}/npu_roce_ip_info.log; done\n"
            "for i in {npu_card_ids}; do hccn_tool -i $i -lldp -g >> {npu_log_path}/npu_nic_switch_info.log; done\n"
        ).format(npu_log_path=self.NPU_LOG_PATH, npu_card_ids=self.card_ids,
                 npu_network_info="collect npu network info")
        # Driver/firmware versions, health codes, board and ECC info, device logs and system logs
        collect_npu_log_shell = (
            "rm -rf {npu_log_path}\n"
            "mkdir -p {npu_log_path}\n"
            "echo {echo_npu_driver_info}\n"
            "npu-smi info > {npu_log_path}/npu-smi_info.log\n"
            "cat /usr/local/Ascend/driver/version.info > {npu_log_path}/npu-smi_driver-version.log\n"
            "/usr/local/Ascend/driver/tools/upgrade-tool --device_index -1 --component -1 --version"
            " > {npu_log_path}/npu-smi_firmware-version.log\n"
            "for i in {npu_card_ids}; do for ((j=0;j<{chip_count};j++)); do npu-smi info -t health -i $i -c $j; done"
            " >> {npu_log_path}/npu-smi_health-code.log; done\n"
            "for i in {npu_card_ids}; do npu-smi info -t board -i $i >> {npu_log_path}/npu-smi_board.log; done\n"
            "echo {echo_npu_ecc_info}\n"
            "for i in {npu_card_ids}; do npu-smi info -t ecc -i $i >> {npu_log_path}/npu-smi_ecc.log; done\n"
            "lspci | grep acce > {npu_log_path}/Device-info.log\n"
            "echo {echo_npu_device_log}\n"
            "cd {npu_log_path} && msnpureport -f > /dev/null\n"
            "tar -czvPf {npu_log_path}/log_messages.tar.gz /var/log/message* > /dev/null\n"
            "tar -czvPf {npu_log_path}/ascend_install.tar.gz /var/log/ascend_seclog/* > /dev/null\n"
            "echo {echo_npu_tools_log}\n"
            "tar -czvPf {npu_log_path}/ascend_toollog.tar.gz /var/log/nputools_LOG_* > /dev/null\n"
        ).format(npu_log_path=self.NPU_LOG_PATH, npu_card_ids=self.card_ids, chip_count=self.chip_count,
                 echo_npu_driver_info="collect npu driver info.",
                 echo_npu_ecc_info="collect npu ecc info.",
                 echo_npu_device_log="collect npu device log.",
                 echo_npu_tools_log="collect npu tools log.")
        if self.is_300_iduo:
            return collect_npu_log_shell
        return collect_npu_log_shell + hccn_tool_log_shell

    def collect_npu_log(self):
        print("begin to collect npu log")
        # The generated script uses bash syntax (for ((...))), so run it with bash;
        # os.system() would use /bin/sh, which is not bash on every distribution.
        subprocess.run(["bash", "-c", self.gen_collect_npu_log_shell()])
        date_collect = datetime.now().strftime('%Y%m%d%H%M%S')
        instance_ip = self.query_metadata(self.LOCAL_IPV4_METADATA)
        # The package is written to the current working directory
        log_tar = "%s-npu-log-%s.tar.gz" % (instance_ip, date_collect)
        subprocess.run(["tar", "-czPf", log_tar, self.NPU_LOG_PATH])
        print("success to collect npu log with {}".format(log_tar))
        return log_tar

    def upload_log_to_obs(self, log_tar):
        obs_bucket = "{}{}".format(self.OBS_BUCKET_PREFIX, self.region_id)
        print("begin to upload {} to obs bucket {}".format(log_tar, obs_bucket))
        obs_url = "https://%s.obs.%s.myhuaweicloud.com/%s/%s" % (obs_bucket, self.region_id, self.obs_dir, log_tar)
        # RFC 1123 date in GMT, independent of the system locale
        date = formatdate(usegmt=True)
        canonicalized_headers = "x-obs-acl:public-read"
        obs_sign = self.gen_obs_sign(date, canonicalized_headers, obs_bucket, log_tar)
        auth = "OBS " + self.ak + ":" + obs_sign
        # Arguments are passed as a list, so no shell quoting is needed
        cmd = ["curl", "-sS", "-X", "PUT", "-T", log_tar, "-w", "%{http_code}", obs_url,
               "-H", "Date: " + date,
               "-H", "Authorization: " + auth,
               "-H", canonicalized_headers]
        result = subprocess.run(cmd, capture_output=True, text=True)
        http_code = result.stdout.strip()
        if result.returncode == 0 and http_code == "200":
            print("success to upload {} to obs bucket {}".format(log_tar, obs_bucket))
        else:
            print("failed to upload {} to obs bucket {}".format(log_tar, obs_bucket))
            print(result)

    def gen_obs_sign(self, date, canonicalized_headers, obs_bucket, log_tar):
        # Calculate the OBS signature: Base64(HMAC-SHA1(sk, StringToSign))
        http_method = "PUT"
        canonicalized_resource = "/%s/%s/%s" % (obs_bucket, self.obs_dir, log_tar)
        # The Content-MD5 and Content-Type lines are left empty
        canonical_string = (http_method + "\n" + "\n" + "\n" + date + "\n"
                            + canonicalized_headers + "\n" + canonicalized_resource)
        hashed = hmac.new(self.sk.encode('UTF-8'), canonical_string.encode('UTF-8'), hashlib.sha1)
        return binascii.b2a_base64(hashed.digest())[:-1].decode('UTF-8')

    def get_card_ids(self):
        # Get the NPU IDs and the chip count per NPU
        cmd = "npu-smi info -l"
        result = subprocess.run(cmd, shell=True, capture_output=True, text=True)
        if result.returncode != 0:
            print("failed to execute command[{}]".format(cmd))
            raise Exception('npu-smi exception')
        # Default chip count is 1; 300IDuo or 910C reports 2
        chip_count = 1
        match = re.search(r'Chip Count\s*:\s*(\d+)', result.stdout)
        if match and int(match.group(1)) > 0:
            chip_count = int(match.group(1))
        card_ids = []
        for _, value in re.findall(r'NPU ID(.*?): (.*?)\n', result.stdout, flags=re.DOTALL):
            npu_id = int(value)
            # A negative ID means the card may have dropped
            if npu_id < 0:
                print("Card may not be found, NPU ID: {}".format(npu_id))
                continue
            card_ids.append(npu_id)
        print("success to get card id {}, Chip Count {}".format(card_ids, chip_count))
        return " ".join(str(x) for x in card_ids), chip_count

    def execute(self):
        if self.obs_dir == "":
            print("the obs_dir is null, please enter a correct dir")
        else:
            log_tar = self.collect_npu_log()
            self.upload_log_to_obs(log_tar)


if __name__ == '__main__':
    # Replace ak, sk and obs_dir with your own values; set is_300_iduo=True on 300IDuo nodes
    npu_log_collection = NpuLogCollection(ak='ak', sk='sk', obs_dir='obs_dir', is_300_iduo=False)
    npu_log_collection.execute()
```

- Run the script to collect logs.

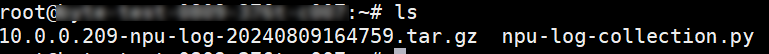

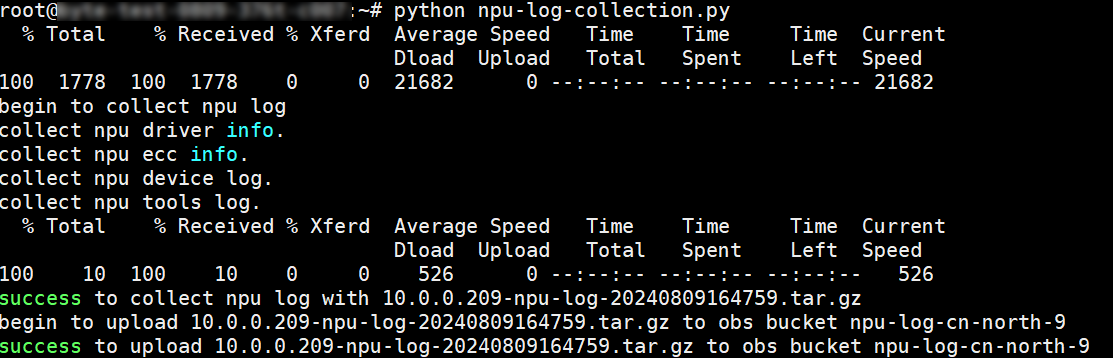

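Run the script on the node. Output similar to the following indicates that the logs have been collected and uploaded to OBS.

Figure 5 Log collection completed

- Check the directory from which you ran the script (this is the script's own directory if you ran it from there). The collected log package, named <node IP>-npu-log-<timestamp>.tar.gz, is stored in that directory.

Figure 6 Result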
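You can list the package contents on the node to confirm what was captured. This is a minimal sketch that uses Python's glob and tarfile modules. latest_log_package and list_package are hypothetical helpers, and the file-name pattern follows the <node IP>-npu-log-<timestamp>.tar.gz naming used by the script. The script archives with tar -P, so member names are expected to keep the absolute /var/log/npu_log_collect prefix.

```python
import glob
import os
import tarfile


def latest_log_package(directory="."):
    """Return the most recently modified *-npu-log-*.tar.gz in `directory`, or None."""
    candidates = glob.glob(os.path.join(directory, "*-npu-log-*.tar.gz"))
    if not candidates:
        return None
    return max(candidates, key=os.path.getmtime)


def list_package(path):
    """Return the member names of a .tar.gz package without extracting it."""
    with tarfile.open(path, "r:gz") as tar:
        return tar.getnames()


if __name__ == "__main__":
    package = latest_log_package()
    if package is None:
        print("no NPU log package found in the current directory")
    else:
        print(package)
        for name in list_package(package):
            print("  " + name)
```

Listing with tarfile only reads the archive. Extracting it with tar -P would instead write the files back to their absolute paths.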