Cross-AZ Scheduling with Delayed-Binding Cloud Disks (csi-disk-topology)

Background

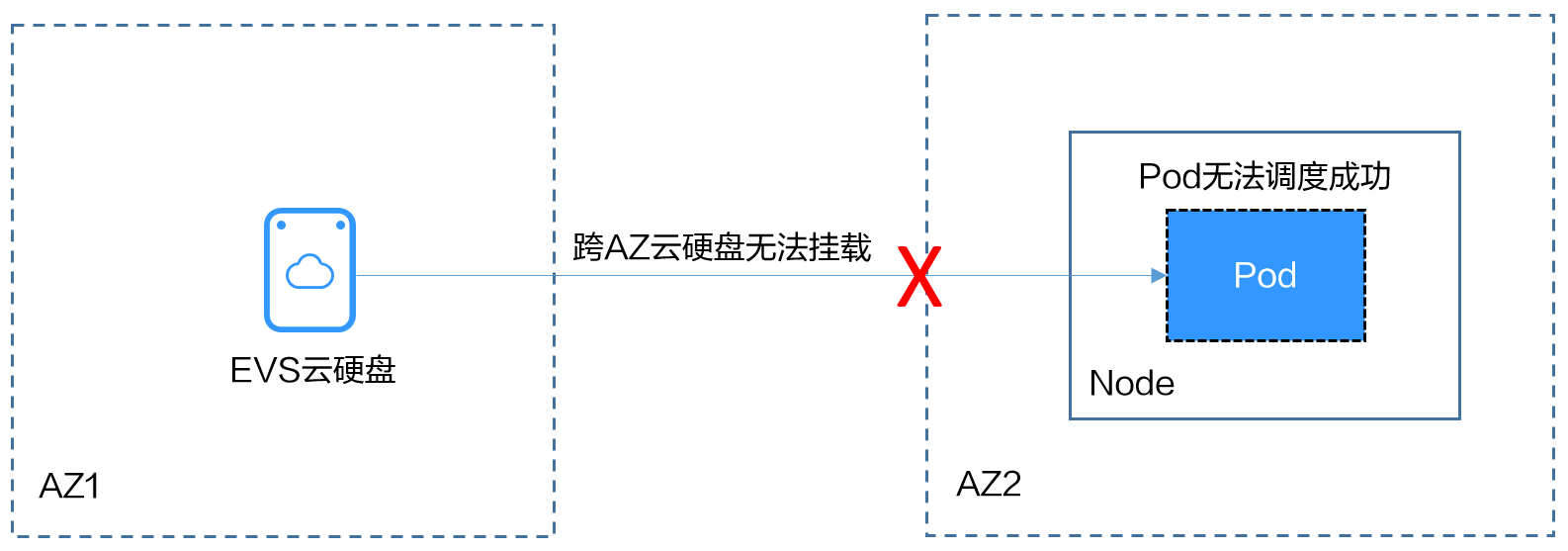

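A cloud disk cannot be attached across AZs: a disk in AZ1 cannot be attached to a node in AZ2. When a stateful workload is scheduled with the csi-disk StorageClass, the PVC and PV are created immediately (creating the PV also creates the cloud disk), and the PVC is then bound to the PV. In a cluster whose nodes span multiple AZs, however, the cloud disk created for the PVC may end up in a different AZ from the node the Pod is scheduled to, and the Pod then cannot be scheduled.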

Solution

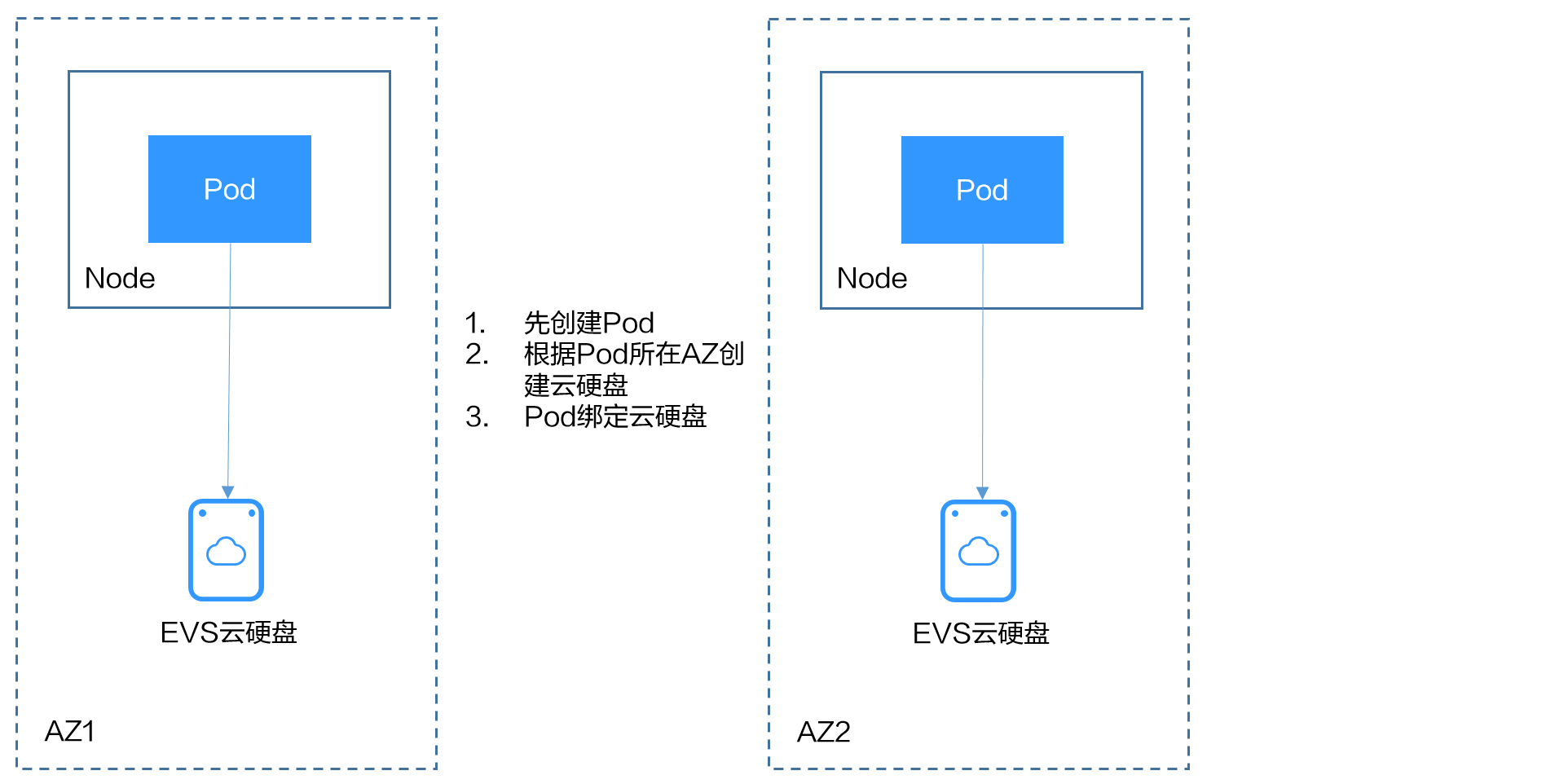

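CCE provides a StorageClass named csi-disk-topology, also called the delayed-binding cloud disk storage class. When a PVC is created with csi-disk-topology, the PV is not created immediately. Instead, the Pod is scheduled first, and the PV is then created based on the AZ of the node the Pod lands on, with the cloud disk created in that same AZ. This ensures that the disk can be attached, so the Pod can be scheduled successfully.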
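The difference between the two binding modes can be illustrated with a small simulation. The following Python sketch is not CCE or Kubernetes code; the `Node` class, the `schedule` function, the node names, and the free-CPU figures are all hypothetical and only mirror the constraint described above (a disk is usable only from nodes in its own AZ).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    az: str
    free_cpu_m: int  # free CPU in millicores (hypothetical figure)


def schedule(nodes: List[Node], cpu_m: int, disk_az: Optional[str]) -> Optional[Node]:
    """Return the first node that has enough CPU and is in disk_az.

    disk_az=None models delayed binding: no disk exists yet, so the AZ
    imposes no constraint and the disk is created wherever the Pod lands.
    """
    for node in nodes:
        if node.free_cpu_m < cpu_m:
            continue  # corresponds to "Insufficient cpu"
        if disk_az is not None and node.az != disk_az:
            continue  # corresponds to "volume node affinity conflict"
        return node
    return None


# Hypothetical cluster: only the az3 node still has room for a 600m Pod.
nodes = [
    Node("node-az1", "az1", 200),
    Node("node-az2", "az2", 200),
    Node("node-az3", "az3", 1000),
]

# Immediate binding: the disk was already created in az1 before scheduling.
immediate = schedule(nodes, 600, disk_az="az1")

# Delayed binding: schedule first, then create the disk in the chosen node's AZ.
delayed = schedule(nodes, 600, disk_az=None)
delayed_disk_az = delayed.az if delayed else None

print(immediate)        # None: no node satisfies both the CPU and the AZ constraint
print(delayed_disk_az)  # az3
```

With immediate binding the disk location is fixed before the scheduler has a say, so a node with free CPU in another AZ is of no use. With delayed binding the scheduler picks the node first and the disk follows it.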

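Pod Scheduling Failure with csi-disk When Nodes Span Multiple AZs

Create a cluster with three nodes, each in a different AZ.

Use csi-disk to create a stateful application and observe how it is created.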

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: nginx
    spec:
      serviceName: nginx                             # Name of the headless Service
      replicas: 4
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: container-0
            image: nginx:alpine
            resources:
              limits:
                cpu: 600m
                memory: 200Mi
              requests:
                cpu: 600m
                memory: 200Mi
            volumeMounts:                            # Storage mounted to the Pod
            - name: data
              mountPath: /usr/share/nginx/html       # Mount the storage at /usr/share/nginx/html
          imagePullSecrets:
          - name: default-secret
      volumeClaimTemplates:
      - metadata:
          name: data
          annotations:
            everest.io/disk-volume-type: SAS
        spec:
          accessModes:
          - ReadWriteOnce
          resources:
            requests:
              storage: 1Gi
          storageClassName: csi-disk

The stateful application uses the following Headless Service.

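    apiVersion: v1
    kind: Service          # Object type: Service
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      ports:
      - name: nginx        # Name of the port used for communication between Pods
        port: 80           # Port number used for communication between Pods
      selector:
        app: nginx         # Select Pods labeled app: nginx
      clusterIP: None      # Must be None, which indicates a Headless Service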

After creation, check the PVC and Pod status. As shown below, all PVCs have been created and bound, but one Pod is in the Pending state.

    # kubectl get pvc -owide
    NAME           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE   VOLUMEMODE
    data-nginx-0   Bound    pvc-04e25985-fc93-4254-92a1-1085ce19d31e   1Gi        RWO            csi-disk       64s   Filesystem
    data-nginx-1   Bound    pvc-0ae6336b-a2ea-4ddc-8f63-cfc5f9efe189   1Gi        RWO            csi-disk       47s   Filesystem
    data-nginx-2   Bound    pvc-aa46f452-cc5b-4dbd-825a-da68c858720d   1Gi        RWO            csi-disk       30s   Filesystem
    data-nginx-3   Bound    pvc-3d60e532-ff31-42df-9e78-015cacb18a0b   1Gi        RWO            csi-disk       14s   Filesystem

    # kubectl get pod -owide
    NAME      READY   STATUS    RESTARTS   AGE     IP             NODE            NOMINATED NODE   READINESS GATES
    nginx-0   1/1     Running   0          2m25s   172.16.0.12    192.168.0.121   <none>           <none>
    nginx-1   1/1     Running   0          2m8s    172.16.0.136   192.168.0.211   <none>           <none>
    nginx-2   1/1     Running   0          111s    172.16.1.7     192.168.0.240   <none>           <none>
    nginx-3   0/1     Pending   0          95s     <none>         <none>          <none>           <none>

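Check the events of this Pod. Scheduling failed because no node is available: two nodes do not have enough CPU, and on the third node the cloud disk that was created is not in the node's AZ, so the Pod cannot use that disk.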

    # kubectl describe pod nginx-3
    Name:         nginx-3
    ...
    Events:
      Type     Reason            Age   From               Message
      ----     ------            ----  ----               -------
      Warning  FailedScheduling  111s  default-scheduler  0/3 nodes are available: 3 pod has unbound immediate PersistentVolumeClaims.
      Warning  FailedScheduling  111s  default-scheduler  0/3 nodes are available: 3 pod has unbound immediate PersistentVolumeClaims.
      Warning  FailedScheduling  28s   default-scheduler  0/3 nodes are available: 1 node(s) had volume node affinity conflict, 2 Insufficient cpu.

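Check the AZs of the cloud disks created for the PVCs. The disk for data-nginx-3 is in AZ 1, but the node in AZ 1 has no resources left. Only the node in AZ 3 has free CPU, so the Pod cannot be scheduled. This shows that binding the PVC to a PV and creating the cloud disk before the Pod is scheduled causes the problem.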
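One way to check for such a mismatch is to compare the zone recorded in a PV's node affinity with the zone label of a node. The sketch below assumes the PV was exported with `kubectl get pv <name> -o json` and that the zone is recorded under one of the two well-known Kubernetes zone label keys. Which key the everest CSI driver actually writes is an assumption, and the helper names, the sample PV, and the AZ names `az1` and `az3` are made up for illustration.

```python
import json
from typing import Iterable, Optional, Set

# Well-known Kubernetes zone label keys. Which key a given CSI driver
# actually records on its PVs is an assumption in this sketch.
ZONE_KEYS = (
    "topology.kubernetes.io/zone",
    "failure-domain.beta.kubernetes.io/zone",
)


def pv_zones(pv: dict, zone_keys: Iterable[str] = ZONE_KEYS) -> Set[str]:
    """Collect the zones listed under spec.nodeAffinity.required of a PV.

    Simplification: zone values from all selector terms are merged into one set.
    """
    keys = set(zone_keys)
    spec = pv.get("spec") or {}
    required = (spec.get("nodeAffinity") or {}).get("required") or {}
    zones: Set[str] = set()
    for term in required.get("nodeSelectorTerms") or []:
        for expr in term.get("matchExpressions") or []:
            if expr.get("key") in keys and expr.get("operator") == "In":
                zones.update(expr.get("values") or [])
    return zones


def node_zone(labels: dict, zone_keys: Iterable[str] = ZONE_KEYS) -> Optional[str]:
    """Return the node's zone from its labels, or None if no zone label is set."""
    for key in zone_keys:
        if key in labels:
            return labels[key]
    return None


def can_attach(pv: dict, labels: dict) -> bool:
    """True if the PV carries no zone constraint or the node's zone is allowed."""
    allowed = pv_zones(pv)
    return not allowed or node_zone(labels) in allowed


# Hypothetical PV pinned to "az1", trimmed to the fields used here.
pv = json.loads("""
{"spec": {"nodeAffinity": {"required": {"nodeSelectorTerms": [
  {"matchExpressions": [
    {"key": "topology.kubernetes.io/zone", "operator": "In", "values": ["az1"]}
  ]}
]}}}}
""")

print(can_attach(pv, {"topology.kubernetes.io/zone": "az1"}))  # True
print(can_attach(pv, {"topology.kubernetes.io/zone": "az3"}))  # False
```

A node for which `can_attach` returns False is one the scheduler would reject with a volume node affinity conflict, regardless of how much CPU it has free.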

Delayed-Binding Cloud Disk StorageClass

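List the StorageClasses in the cluster. The binding mode of csi-disk-topology is WaitForFirstConsumer, which means the PV is created and bound only when a Pod uses the PVC. In other words, the PV and the underlying storage resource are created based on the Pod's information.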

    # kubectl get storageclass
    NAME                PROVISIONER               RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
    csi-disk            everest-csi-provisioner   Delete          Immediate              true                   156m
    csi-disk-topology   everest-csi-provisioner   Delete          WaitForFirstConsumer   true                   156m
    csi-nas             everest-csi-provisioner   Delete          Immediate              true                   156m
    csi-obs             everest-csi-provisioner   Delete          Immediate              false                  156m

The VOLUMEBINDINGMODE column above was captured on a v1.19 cluster. Clusters of v1.17 and v1.15 do not display this column.

The binding mode also appears in the details of csi-disk-topology.

    # kubectl describe sc csi-disk-topology
    Name:                  csi-disk-topology
    IsDefaultClass:        No
    Annotations:           <none>
    Provisioner:           everest-csi-provisioner
    Parameters:            csi.storage.k8s.io/csi-driver-name=disk.csi.everest.io,csi.storage.k8s.io/fstype=ext4,everest.io/disk-volume-type=SAS,everest.io/passthrough=true
    AllowVolumeExpansion:  True
    MountOptions:          <none>
    ReclaimPolicy:         Delete
    VolumeBindingMode:     WaitForFirstConsumer
    Events:                <none>
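The binding mode can also be read programmatically from the `volumeBindingMode` field of each StorageClass object, for example from the output of `kubectl get storageclass -o json`. The following Python sketch assumes that output has already been captured. The `binding_modes` helper is hypothetical, and the sample is trimmed to the two fields used, with names and modes taken from the listing above.

```python
import json
from typing import Dict


def binding_modes(sc_list: dict) -> Dict[str, str]:
    """Map each StorageClass name to its volumeBindingMode.

    Kubernetes treats an unset volumeBindingMode as Immediate,
    so that is used as the fallback here.
    """
    return {
        item["metadata"]["name"]: item.get("volumeBindingMode", "Immediate")
        for item in sc_list.get("items", [])
    }


# Sample trimmed to the fields used here.
sample = json.loads("""
{"items": [
  {"metadata": {"name": "csi-disk"}, "volumeBindingMode": "Immediate"},
  {"metadata": {"name": "csi-disk-topology"}, "volumeBindingMode": "WaitForFirstConsumer"}
]}
""")

modes = binding_modes(sample)
delayed_classes = [name for name, mode in modes.items() if mode == "WaitForFirstConsumer"]
print(delayed_classes)  # ['csi-disk-topology']
```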

Next, create one PVC of each type, csi-disk and csi-disk-topology, and observe the difference between them.

- csi-disk

      apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: disk
        annotations:
          everest.io/disk-volume-type: SAS
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: csi-disk        # StorageClass

- csi-disk-topology

      apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: topology
        annotations:
          everest.io/disk-volume-type: SAS
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: csi-disk-topology        # StorageClass

Create the PVCs and check them. As shown below, the csi-disk PVC is already Bound, while the csi-disk-topology PVC is Pending.

    # kubectl create -f pvc1.yaml
    persistentvolumeclaim/disk created
    # kubectl create -f pvc2.yaml
    persistentvolumeclaim/topology created
    # kubectl get pvc
    NAME       STATUS    VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS        AGE
    disk       Bound     pvc-88d96508-d246-422e-91f0-8caf414001fc   10Gi       RWO            csi-disk            18s
    topology   Pending                                                                        csi-disk-topology   2s

Check the details of the topology PVC. The events show "waiting for first consumer to be created before binding", meaning the PVC is bound only after its consumer, that is, a Pod, has been created.

    # kubectl describe pvc topology
    Name:          topology
    Namespace:     default
    StorageClass:  csi-disk-topology
    Status:        Pending
    Volume:
    Labels:        <none>
    Annotations:   everest.io/disk-volume-type: SAS
    Finalizers:    [kubernetes.io/pvc-protection]
    Capacity:
    Access Modes:
    VolumeMode:    Filesystem
    Used By:       <none>
    Events:
      Type    Reason                Age               From                         Message
      ----    ------                ----              ----                         -------
      Normal  WaitForFirstConsumer  5s (x3 over 30s)  persistentvolume-controller  waiting for first consumer to be created before binding

Create a workload that uses this PVC, setting the PVC name to topology where the claim is declared, as shown below.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 1
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - image: nginx:alpine
            name: container-0
            volumeMounts:
            - mountPath: /tmp               # Mount path
              name: topology-example
          restartPolicy: Always
          volumes:
          - name: topology-example
            persistentVolumeClaim:
              claimName: topology           # Name of the PVC

After the workload is created, check the PVC details again. The PVC is now bound.

    # kubectl describe pvc topology
    Name:          topology
    Namespace:     default
    StorageClass:  csi-disk-topology
    Status:        Bound
    ....
    Used By:       nginx-deployment-fcd9fd98b-x6tbs
    Events:
      Type    Reason                 Age                   From                                                                                                  Message
      ----    ------                 ----                  ----                                                                                                  -------
      Normal  WaitForFirstConsumer   84s (x26 over 7m34s)  persistentvolume-controller                                                                           waiting for first consumer to be created before binding
      Normal  Provisioning           54s                   everest-csi-provisioner_everest-csi-controller-7965dc48c4-5k799_2a6b513e-f01f-4e77-af21-6d7f8d4dbc98  External provisioner is provisioning volume for claim "default/topology"
      Normal  ProvisioningSucceeded  52s                   everest-csi-provisioner_everest-csi-controller-7965dc48c4-5k799_2a6b513e-f01f-4e77-af21-6d7f8d4dbc98  Successfully provisioned volume pvc-9a89ea12-4708-4c71-8ec5-97981da032c9

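Using csi-disk-topology When Nodes Span Multiple AZs

Next, create the stateful application with csi-disk-topology by changing the storage class of the application above to csi-disk-topology.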

      volumeClaimTemplates:
      - metadata:
          name: data
          annotations:
            everest.io/disk-volume-type: SAS
        spec:
          accessModes:
          - ReadWriteOnce
          resources:
            requests:
              storage: 1Gi
          storageClassName: csi-disk-topology

After creation, check the PVC and Pod status. As shown below, all PVCs and Pods are created successfully, and the nginx-3 Pod runs on the node in AZ 3.

    # kubectl get pvc -owide
    NAME           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS        AGE   VOLUMEMODE
    data-nginx-0   Bound    pvc-43802cec-cf78-4876-bcca-e041618f2470   1Gi        RWO            csi-disk-topology   55s   Filesystem
    data-nginx-1   Bound    pvc-fc942a73-45d3-476b-95d4-1eb94bf19f1f   1Gi        RWO            csi-disk-topology   39s   Filesystem
    data-nginx-2   Bound    pvc-d219f4b7-e7cb-4832-a3ae-01ad689e364e   1Gi        RWO            csi-disk-topology   22s   Filesystem
    data-nginx-3   Bound    pvc-b54a61e1-1c0f-42b1-9951-410ebd326a4d   1Gi        RWO            csi-disk-topology   9s    Filesystem

    # kubectl get pod -owide
    NAME      READY   STATUS    RESTARTS   AGE   IP             NODE            NOMINATED NODE   READINESS GATES
    nginx-0   1/1     Running   0          65s   172.16.1.8     192.168.0.240   <none>           <none>
    nginx-1   1/1     Running   0          49s   172.16.0.13    192.168.0.121   <none>           <none>
    nginx-2   1/1     Running   0          32s   172.16.0.137   192.168.0.211   <none>           <none>
    nginx-3   1/1     Running   0          19s   172.16.1.9     192.168.0.240   <none>           <none>